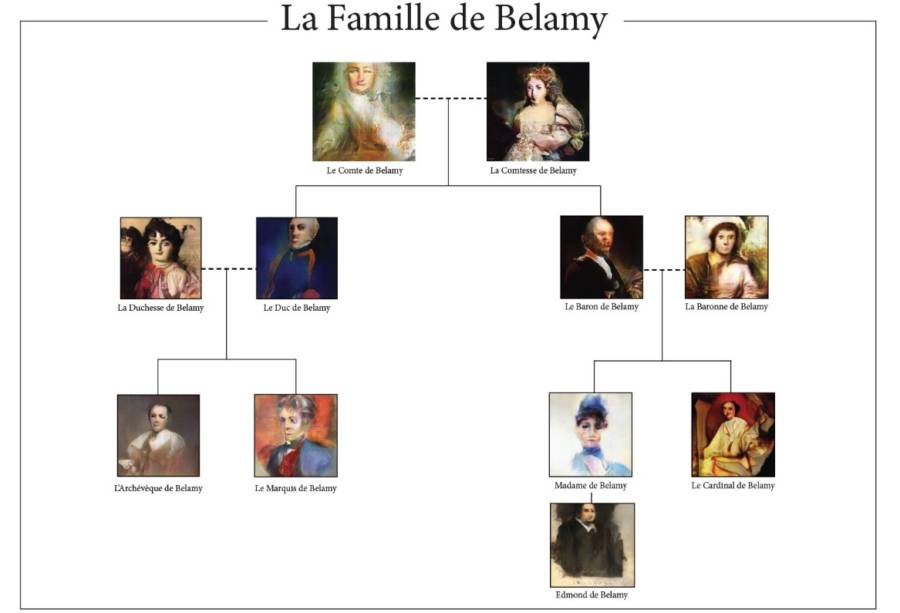

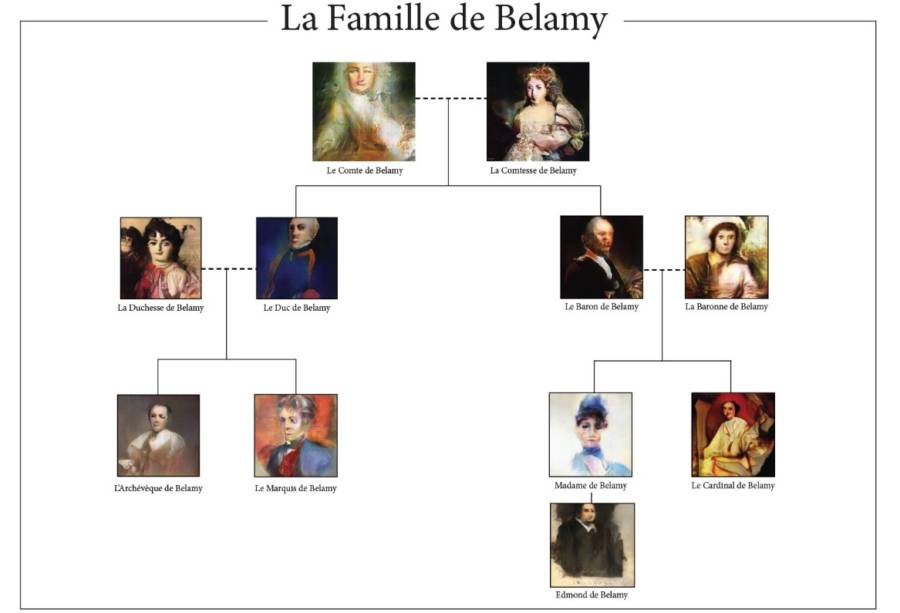

Case Study: Edmond de Belamy, the First AI-created Artwork to be Sold at Auction

Written by Jonghyun Jee (John)

PowerPoint Slide: https://docs.google.com/presentation/d/1_eeYZaG6oL4r_ZzJkrk7BY5UhmNUHYYz8heThtWTZBU

Ten or more years ago, I read a book about futurology. I don’t remember most of its contents, but one thing is still fresh in my memory: a short list in its appendix. The list named the best jobs for the future, most of them occupations I had never heard of. A single job caught my attention: artist. It seemed random and unconvincing until I read the description. The author argued that because AI and robots would replace most human jobs, artists, whose abilities have long been considered uniquely human, would not only survive but thrive. Ten years have passed since then, and a lot has changed, far more than the author might have imagined.

In October 2018, a portrait titled “Edmond de Belamy” sold for $432,500 at a Christie’s auction. As you can see in the images above, the portrait looks somewhat unfinished and uncanny. We’ve seen a myriad of abstract and puzzling artworks in recent years, so you may wonder what makes this portrait so special that it was worth hundreds of thousands of dollars.

If you look closely at the signature in the bottom right corner, you will notice a mathematical formula rather than a name, something you would almost never find on a portrait done in the style of a classical oil painting. The formula is not random: it is the objective function of the algorithm that produced the image. In that sense, the signature states quite accurately who painted it: an algorithm.
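The formula in the signature is widely reported to be the minimax objective from the original GAN formulation. Written out in standard notation (typeset here in LaTeX, not copied character for character from the canvas), it reads:

$$
\min_G \max_D \; \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
$$

Here $D(x)$ is the discriminator’s estimate of the probability that $x$ is a real portrait, $G(z)$ is an image the generator produces from random noise $z$, $p_{\text{data}}$ is the distribution of the training portraits, and $p_z$ is the noise distribution. The discriminator tries to make this quantity large by scoring real and generated images correctly; the generator tries to make it small by producing images the discriminator mistakes for real ones.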

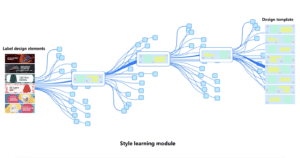

In other words, this is the first artwork created not by a human mind but by an artificial intelligence to be sold at auction. “Edmond de Belamy” is one of a series of portraits called “the Belamy family,” created by the Paris-based collective “Obvious.” They used a GAN, which stands for Generative Adversarial Network (as far as I remember, Aven briefly introduced GANs and gave us a couple of examples, such as CycleGAN and DiscoGAN).

The mechanism behind this portrait is fairly straightforward. Hugo Caselles-Dupré, a member of Obvious, says that “[They] fed the system with a data set of 15,000 portraits painted between the 14th century to the 20th. The Generator makes a new image based on the set, then the Discriminator tries to spot the difference between a human-made image and one created by the Generator. The aim is to fool the Discriminator into thinking that the new images are real-life portraits.”
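To make the Generator/Discriminator loop a little more concrete, here is a minimal sketch in Python. It is a toy, not Obvious’s actual setup: instead of 15,000 images, the hypothetical “real data” are numbers drawn from a normal distribution centered at 4, the generator is a straight line G(z) = a·z + b applied to random noise, and the discriminator is a single logistic unit. All names (`ToyGAN`, `train`, and the parameters `a`, `b`, `w`, `c`) are made up for illustration. In each round the discriminator takes a gradient-ascent step on the standard GAN value function, then the generator takes a gradient-descent step on the term it controls.

```python
import math
import random


def sigmoid(t):
    # Numerically stable logistic function (avoids overflow in math.exp).
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


class ToyGAN:
    """Hypothetical 1-D GAN: each 'portrait' is just a number.

    Real data ~ N(4, 0.5); generator G(z) = a*z + b with z ~ N(0, 1);
    discriminator D(x) = sigmoid(w*x + c).
    """

    def __init__(self, seed=0):
        self.rng = random.Random(seed)
        self.a, self.b = 1.0, 0.0  # generator parameters
        self.w, self.c = 0.1, 0.0  # discriminator parameters

    def real_batch(self, n):
        # Stand-in for drawing real portraits from the training set.
        return [self.rng.gauss(4.0, 0.5) for _ in range(n)]

    def noise(self, n):
        # Random input z that the generator turns into a "new image".
        return [self.rng.gauss(0.0, 1.0) for _ in range(n)]

    def generate(self, zs):
        return [self.a * z + self.b for z in zs]

    def discriminate(self, x):
        # Estimated probability that x is a real sample.
        return sigmoid(self.w * x + self.c)

    def value(self, reals, zs):
        # Sample estimate of E[log D(x)] + E[log(1 - D(G(z)))].
        real_term = sum(math.log(self.discriminate(x)) for x in reals) / len(reals)
        fake_term = sum(math.log(1.0 - self.discriminate(x))
                        for x in self.generate(zs)) / len(zs)
        return real_term + fake_term

    def discriminator_step(self, reals, zs, lr=0.05):
        # Gradient ASCENT on the value: D learns to tell real from generated.
        fakes = self.generate(zs)
        gw = gc = 0.0
        for x in reals:
            d = self.discriminate(x)
            gw += (1.0 - d) * x / len(reals)
            gc += (1.0 - d) / len(reals)
        for x in fakes:
            d = self.discriminate(x)
            gw -= d * x / len(fakes)
            gc -= d / len(fakes)
        self.w += lr * gw
        self.c += lr * gc

    def generator_step(self, zs, lr=0.05):
        # Gradient DESCENT on E[log(1 - D(G(z)))]: G tries to fool D.
        ga = gb = 0.0
        for z in zs:
            d = self.discriminate(self.a * z + self.b)
            # d/da log(1 - sigmoid(w*(a*z + b) + c)) = -d * w * z
            ga += -d * self.w * z / len(zs)
            gb += -d * self.w / len(zs)
        self.a -= lr * ga
        self.b -= lr * gb


def train(steps=200, batch=64, seed=0):
    # Alternate one discriminator step and one generator step per round.
    gan = ToyGAN(seed)
    for _ in range(steps):
        reals, zs = gan.real_batch(batch), gan.noise(batch)
        gan.discriminator_step(reals, zs)
        gan.generator_step(zs)
    return gan
```

Calling `train()` runs the alternating game for a fixed number of rounds and returns the model. Even in this tiny setting, adversarial training can oscillate instead of settling down neatly, which is one reason practical GANs rely on much larger networks and additional training tricks.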

For now, it is still humans who choose the data set the AI is fed. In the future, we may see AI systems that decide for themselves what kind of data to learn from. Whether or not a robot Picasso is coming, I’m excited to use this entirely new medium to explore beyond and beneath the realm of art as we currently know it.

Sources:

Images 1 & 2 © Obvious (https://obvious-art.com/edmond-de-belamy.html)

Image 3 © All That’s Interesting (https://allthatsinteresting.com/ai-portrait-edmond-belamy)