Project Description

This series of paintings was created from images generated by an artificial muse. Neural style transfer was used to merge past artworks, producing newly styled images. The process offers artists a way to draw fresh inspiration from their own past work and to use AI as a tool for enhancing creativity.

Background/Inspiration

Roman Lipski’s Unfinished is a never-ending collection of paintings in which he uses neural networks to generate new artworks based on his own paintings. He began by feeding a neural network images of his paintings, which it used to generate new images. Lipski then used these generated images as inspiration for new paintings and repeated the process again and again. In effect, he created his own artificial muse and, with it, an infinite source of inspiration.

Motivation

As someone who likes to draw and paint, I know creative block all too well. I’ll have the urge to create something but struggle to settle on a style or subject matter. Inspired by Roman Lipski’s work, I wanted my final project to be an artificial muse of my own that could suggest ideas for new pieces of art. The project was also an exploration of the creative outcomes that are possible when AI and artists work together as equal partners to produce artwork.

Methodology

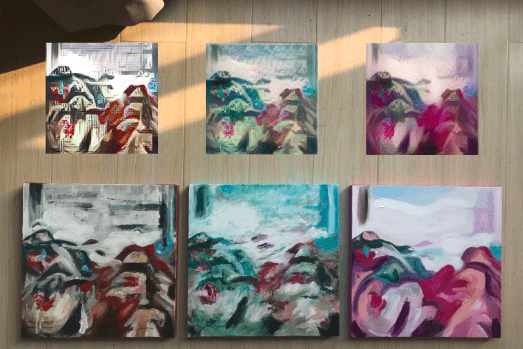

I used the style transfer technique we learned in class to train multiple models on my previous artworks. The trained styles were then transferred onto images of other past artworks, and this process was repeated over several layers (rounds of stylization) until a series of three images was produced. These three images served as inspiration for three new paintings, which are the final works.
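For readers curious what "training a model on a style" optimizes: the exact training setup used in class isn’t described here, but style transfer models are commonly trained with the Gram-matrix style loss introduced by Gatys et al. (fast feed-forward style transfer networks reuse it). The sketch below assumes that loss and computes it in pure Python on made-up toy feature maps; a real model would take its features from a pretrained network such as VGG, and normalization constants vary between implementations.

```python
from typing import List

# features[c] holds channel c of a feature map, flattened over all positions.
Matrix = List[List[float]]


def gram_matrix(features: Matrix) -> Matrix:
    """C x C matrix of channel correlations, averaged over positions.

    Because positions are summed away, the Gram matrix records which
    features co-occur (texture, color, brushwork) but not where they occur.
    """
    channels = len(features)
    positions = len(features[0])
    return [
        [sum(features[i][k] * features[j][k] for k in range(positions)) / positions
         for j in range(channels)]
        for i in range(channels)
    ]


def style_loss(generated: Matrix, style: Matrix) -> float:
    """Mean squared difference between the Gram matrices of two feature maps."""
    g_gen = gram_matrix(generated)
    g_sty = gram_matrix(style)
    n = len(g_gen)
    return sum((g_gen[i][j] - g_sty[i][j]) ** 2
               for i in range(n) for j in range(n)) / (n * n)


# Toy 2-channel feature maps with 4 positions each (hypothetical values).
style = [[1, 0, 1, 0], [0, 1, 0, 1]]
shifted = [[0, 1, 0, 1], [1, 0, 1, 0]]   # same features, different layout
flat = [[1, 1, 1, 1], [0, 0, 0, 0]]      # different feature statistics

print(gram_matrix(style))          # [[0.5, 0.0], [0.0, 0.5]]
print(style_loss(shifted, style))  # 0.0
print(style_loss(flat, style))     # 0.125
```

The zero loss for `shifted` illustrates why style transfer tends to carry over texture and color rather than composition: rearranging where features appear does not change the Gram matrix.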

Experiments

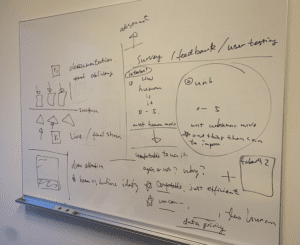

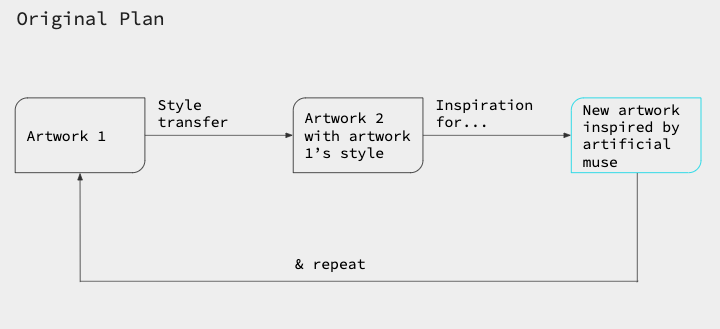

The process I ended up following was different from my original plan. I originally wanted to follow a process like this:

However, while experimenting in the first phase, I realized this plan would not work well, for two reasons. First, each model takes 20 hours to train. Training one model, making a painting, waiting another 20 hours for that painting’s style to train, and repeating the whole cycle until there were three paintings would have taken longer than the time we had. Second, a model trained on a given style doesn’t necessarily produce visually interesting results, so it didn’t make sense to rely on each new painting’s style yielding a good model.

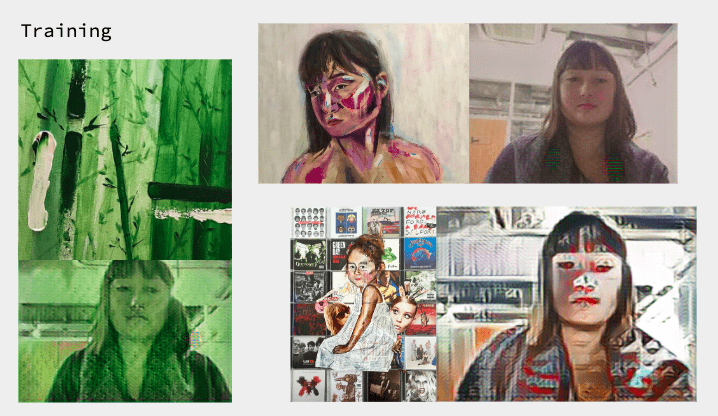

Instead, I first trained eleven models on eleven different styles from my old art pieces. Here are a few of them applied to input from a webcam:

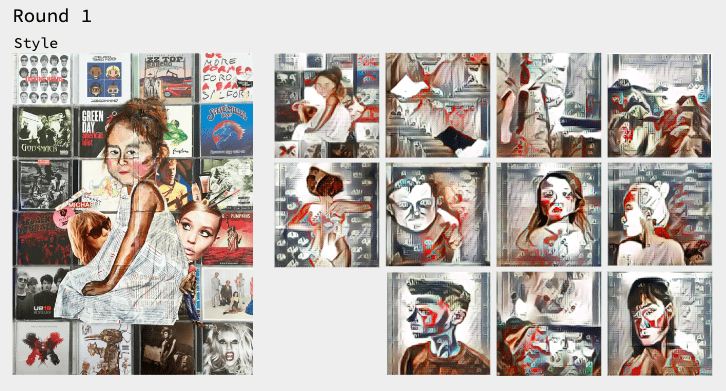

Some of them definitely turned out better than others. I didn’t much like the bamboo model or the one at the top, for example: the bamboo one turned everything green, while the other didn’t seem to change anything at all. However, I really liked the model at the bottom because it actually altered the shapes a bit, so I decided to transfer that style onto other previous artworks:

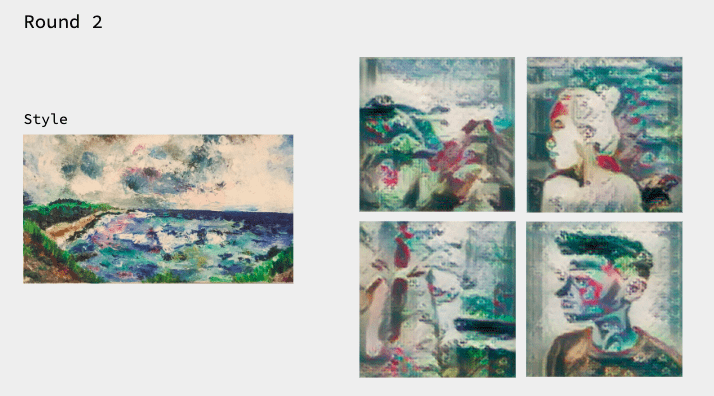

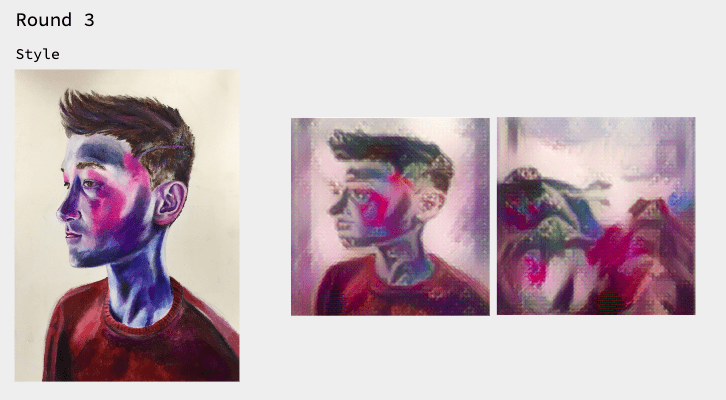

From these, I selected the images I liked most and ran them through another style:

I then took two of these images that I liked the most and ran them through yet another style:

It was then down to picking between these two series:

I quite liked how the portrait turned out, because it looks like a robot to me, which fits the AI theme. In the end, though, I chose the mountains because I thought they would be quicker to paint given the time constraints. The mountain landscape was also a bit more abstract, which I liked.
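The layered process in this section boils down to a loop: apply one style to every candidate image, keep the favorites, and repeat with the next style. The sketch below models that loop while tracking each image’s lineage (which styles it has been through). Everything concrete in it is hypothetical: `darken`, `invert`, and `brighten` are toy stand-ins for trained style transfer networks, `style_a` to `style_c` are placeholder style names, `still_life` and `cityscape` are made-up artworks, and the selection rules stand in for what was actually the artist’s own judgment.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Stand-in type for a trained style transfer network: pixels in, pixels out.
StyleModel = Callable[[List[float]], List[float]]


@dataclass
class Image:
    source: str                  # original artwork this image descends from
    pixels: List[float]          # toy grayscale values in [0, 1]
    lineage: List[str] = field(default_factory=list)  # styles applied so far


def apply_style(image: Image, style: str, model: StyleModel) -> Image:
    """Stylize one image and record which style was applied."""
    return Image(image.source, model(image.pixels), image.lineage + [style])


def run_round(images: List[Image], style: str, model: StyleModel,
              keep: Callable[[Image], bool]) -> List[Image]:
    """Apply one style to every image, then keep only the selected results."""
    stylized = [apply_style(img, style, model) for img in images]
    return [img for img in stylized if keep(img)]


# Hypothetical toy "models" standing in for trained networks.
def darken(p: List[float]) -> List[float]:
    return [x * 0.5 for x in p]


def invert(p: List[float]) -> List[float]:
    return [1.0 - x for x in p]


def brighten(p: List[float]) -> List[float]:
    return [min(1.0, x + 0.25) for x in p]


artworks = [
    Image("portrait", [0.2, 0.4, 0.6]),
    Image("mountains", [0.8, 0.9, 1.0]),
    Image("still_life", [0.3, 0.3, 0.5]),  # hypothetical
    Image("cityscape", [0.6, 0.1, 0.4]),   # hypothetical
]

# Each keep rule is a stand-in for the artist picking favorites.
round1 = run_round(artworks, "style_a", darken,
                   keep=lambda img: img.source != "cityscape")
round2 = run_round(round1, "style_b", invert,
                   keep=lambda img: img.source in {"portrait", "mountains"})
round3 = run_round(round2, "style_c", brighten, keep=lambda img: True)

for img in round3:
    print(img.source, img.lineage)
```

Keeping the lineage on each image makes every final candidate a traceable "series": the chain of styles from the original artwork to the image that inspires a painting.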

Social Impact

I think the process I followed for this project shows how AI can serve as a tool during the creative process rather than as the tool that produces the final work. Just as I was inspired by Lipski, I hope other artists take inspiration from this project and experiment with AI to enhance their own creative process.

Further Development

This project could honestly continue until I run out of old artworks to combine, which would be nearly impossible given the number of possible combinations. I am also curious how it would have turned out if I had followed my original plan, continuously training models on the new paintings I created rather than drawing the styles entirely from old artworks.
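The number of combinations can be made concrete. Assuming that the order of styles matters (applying one style and then another generally gives a different image than the reverse) and that no style is reused within a chain, eleven trained models and three style layers give 11 × 10 × 9 = 990 possible chains for each base artwork; allowing repeats gives 11³ = 1331. The snippet below does this arithmetic; the figure of 20 base artworks is a made-up number for illustration only.

```python
import math


def style_chains(num_styles: int, depth: int, distinct: bool = True) -> int:
    """Number of ordered sequences of `depth` styles chosen from `num_styles`."""
    if distinct:
        return math.perm(num_styles, depth)  # no style reused within a chain
    return num_styles ** depth               # styles may repeat


NUM_STYLES = 11  # models trained in this project
DEPTH = 3        # style layers, assumed to match the three-step series

per_artwork = style_chains(NUM_STYLES, DEPTH)
print(per_artwork)                                       # 990
print(style_chains(NUM_STYLES, DEPTH, distinct=False))   # 1331

# Hypothetical: number of old artworks available as base images.
num_artworks = 20
print(per_artwork * num_artworks)                        # 19800
```

Even before counting the selection choices between rounds, the space grows multiplicatively with every added style or artwork, which is why exhausting it is impractical.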

I could also develop the project further with a different AI technique, such as a DCGAN, instead of style transfer. I am curious to see how a GAN would recreate my art; however, I am not sure how large a dataset it would need, and the process would be somewhat different.
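One way the process would differ: style transfer transforms an existing image, while a DCGAN generator builds an image up from a random noise vector through a stack of transposed convolutions. The sketch below only traces spatial sizes through the commonly used 64×64 DCGAN generator configuration (a first layer with kernel 4, stride 1, padding 0, followed by four layers with kernel 4, stride 2, padding 1). These layer settings are an assumption based on that common setup, not a design for this project, and no training is involved.

```python
def conv_transpose_out(size: int, kernel: int, stride: int, padding: int) -> int:
    """Output size of a transposed convolution (no dilation, no output padding)."""
    return (size - 1) * stride - 2 * padding + kernel


# (kernel, stride, padding) per layer in the common 64x64 DCGAN generator.
layers = [(4, 1, 0), (4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 2, 1)]

size = 1  # the latent noise vector is treated as a 1x1 "image"
sizes = []
for kernel, stride, padding in layers:
    size = conv_transpose_out(size, kernel, stride, padding)
    sizes.append(size)

print(sizes)  # [4, 8, 16, 32, 64]
```

Each stride-2 layer doubles the resolution, so in this configuration the training images (photos of paintings) would need to be resized to 64×64, a much coarser representation than the full-resolution images style transfer works with.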