PROJECT TITLE

Strike! - Zhao Yang (Joseph) - Inmi

CONCEPTION AND DESIGN

For the final project, my partner Barry and I decided to make an entertaining game modeled on an existing aircraft game. Because that game is classic and well known, we did not need to spend much effort introducing its mechanics to users, so we could focus on making a better game. We kept the original mechanics and added changes of our own. In the original game, the user controls the aircraft to attack the enemies and tries to avoid crashing into them, so that the aircraft survives longer and earns a higher score. As long as the aircraft does not crash into an enemy, it does not lose health points. We changed this rule: in our game, your health points also decrease if you let an enemy flee. This encourages users to try their best to attack the enemies. We made this change to ensure that the game ends at some point and to increase its difficulty. It is one of the creative parts of our final project.

The ways of interacting with the original aircraft game are also too limited. In the past, the only way to play was to press buttons and use a joystick, so users could interact only with their fingers and hands, and their sense of engagement was not strong. To improve the game, we therefore changed the way of interaction. During our preparatory research, we found a project that uses Arduino and Processing. That project “tried to mimic the Virtual reality stuffs that happen in the movie, like we can simply wave our hand in front of the computer and move the pointer to the desired location and perform some tasks”. Here is the link to that project.

https://circuitdigest.com/microcontroller-projects/virtual-reality-using-arduino

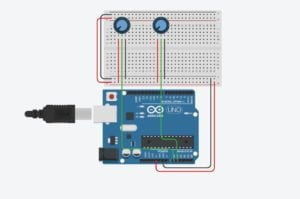

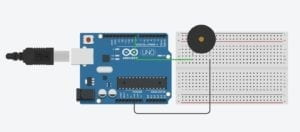

In that project, the user wears a device on their hand so that the computer can detect the hand's motion. The user can then execute commands on the computer by moving their hand. In my opinion, this kind of interaction gives people a stronger sense of engagement. Hence, I thought we could design a way of interacting with our project that engages the user's whole body. We came up with the idea that people can open their arms, imagine that they are the aircraft itself, and tilt their bodies to control its movement. This is how we expect people to interact with our project.

Therefore, we chose an accelerometer sensor as the input of our project. This sensor detects acceleration on three axes, and we use the acceleration on the Y-axis in particular. When the user wears the device on their wrist and moves their arm up and down, the acceleration on the Y-axis changes. We then map this acceleration in Processing to control the movement of the aircraft. This gives users the feeling that they are really flying and reinforces their sense of engagement. Moreover, the accelerometer sensor is quite small, so we could easily build a wearable device around it. It is convenient to put on and take off, so users can start enjoying the game quickly. These are the reasons why we chose this sensor and why it best suited the purpose of our project.

Honestly speaking, the Kinect might have been another option. It fits our idea that people should engage their whole body to interact with our project, and it was actually our first choice of input medium. However, we were not allowed to use the Kinect for the final project, so we had to reject this option.
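The mapping from Y-axis acceleration to the aircraft's position can be sketched roughly as follows. This is only an illustration in Python, not the project's actual Processing code: the acceleration range (`ACCEL_MIN_G`, `ACCEL_MAX_G`), the screen width (`SCREEN_WIDTH`), and the function names are all assumptions made for the example. The `map_range` helper is written to behave like Processing's `map()` function.

```python
def map_range(value, in_min, in_max, out_min, out_max):
    """Linearly rescale value from one range to another (like Processing's map())."""
    return out_min + (value - in_min) * (out_max - out_min) / (in_max - in_min)


def clamp(value, low, high):
    """Keep value inside [low, high] so a sudden jerk cannot push the aircraft off screen."""
    return max(low, min(high, value))


# Hypothetical values chosen for illustration, not taken from the project.
ACCEL_MIN_G = -1.0   # arm fully lowered
ACCEL_MAX_G = 1.0    # arm fully raised
SCREEN_WIDTH = 800   # game window width in pixels


def aircraft_x(accel_y_g):
    """Convert a Y-axis acceleration reading (in g) into a horizontal screen position."""
    reading = clamp(accel_y_g, ACCEL_MIN_G, ACCEL_MAX_G)
    return map_range(reading, ACCEL_MIN_G, ACCEL_MAX_G, 0, SCREEN_WIDTH)


if __name__ == "__main__":
    for sample in (-1.0, 0.0, 0.5, 2.5):
        print(sample, "->", aircraft_x(sample))
```

Clamping the reading before mapping is a design choice in this sketch: real sensor readings can briefly exceed the expected range, and without the clamp the aircraft would leave the screen.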

FABRICATION AND PRODUCTION

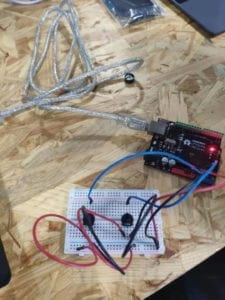

Since we modeled our project on an arcade game, we decided to laser cut an arcade game case as part of our production process. Covering my computer with the case gives the user the sense of playing an arcade game rather than a computer game, and it also gives our project a better appearance. Another significant step in our production process was turning the accelerometer sensor into a wearable device. The sensor is more sensitive when the user wears it than when they simply hold it in their hand. Here are the images of our design of the arcade game case.

During the user test, most of the feedback was positive. A few users suggested that we add a function to let the aircraft move forward so that the game would be more interesting. At that time, we had only one sensor, and we found a problem: wearing only one sensor, the user could move only their right arm to control the aircraft. It was therefore a little confusing for users to open both arms and tilt their bodies to control the aircraft. Even after reading the instructions, some of them were still confused about the way of interaction. If we did not explain it to them, most of them could not interact with our project properly. Here are several videos from the user testing process.

As a result, after the user test, we decided to add a second sensor that moves the aircraft forward. We also changed the one-player and two-player modes into an easy mode and an expert mode. This still fits our original thinking about two players. With the second sensor, the game becomes more difficult: it is really hard to use your right arm to move the aircraft left and right while flipping your left hand to move it forward and backward at the same time. If you do not want to take on the expert mode alone, you can ask a friend to collaborate with you in controlling the aircraft.

From my perspective, adding the second sensor was an effective and successful adaptation. During the IMA End of Semester Show, users wore two sensors even when they chose the easy mode, in which the aircraft can only move left and right. Wearing both made it more natural for them to open their arms and tilt their bodies to control the aircraft, and they could easily follow our instructions without being confused. I think our production choices were quite successful. They fit our original idea that users interact with our project using their whole body. Moreover, the way we use the sensors makes the game more interesting and gives the user a stronger sense of engagement.
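The difference between the two modes can be illustrated with a short sketch. It is written in Python for readability and is not the project's actual code. The `Aircraft` class, the `update_aircraft` function, the `speed` value, and the convention that tilt readings lie between -1 and 1 are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class Aircraft:
    x: float  # horizontal position in pixels
    y: float  # vertical position in pixels (screen y grows downward)


def update_aircraft(aircraft, right_tilt, left_tilt, mode, speed=5.0):
    """Move the aircraft one step based on the two sensor readings.

    right_tilt: reading from the right-arm sensor, assumed to be in [-1, 1]
    left_tilt:  reading from the left-hand sensor, assumed to be in [-1, 1]
    mode:       "easy" (left/right only) or "expert" (adds forward/backward)
    """
    # Both modes: the right-arm sensor steers the aircraft left and right.
    aircraft.x += right_tilt * speed

    # Expert mode only: the left-hand sensor moves the aircraft forward
    # (up the screen) for positive readings and backward for negative ones.
    if mode == "expert":
        aircraft.y -= left_tilt * speed

    return aircraft


if __name__ == "__main__":
    print(update_aircraft(Aircraft(100.0, 100.0), 1.0, 1.0, "easy"))
    print(update_aircraft(Aircraft(100.0, 100.0), 1.0, 1.0, "expert"))
```

In this sketch the easy mode simply ignores the second sensor, which matches the idea that users can wear both sensors in either mode while only the expert mode uses both readings.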

CONCLUSIONS

In conclusion, the goal of our project is to make an entertaining and interesting game that users can have fun with and play in their spare time to relax. My definition of interaction is a cyclic process that requires at least two objects that affect each other. In my opinion, our project fits this definition well. The motion of the user's body controls the movement of the aircraft in the game, and the score and the game image are immediately shown back to the user. This matches the part of the definition in which the objects affect each other. Moreover, the user has to focus on the game and keep interacting with it to get a higher score, which matches the cyclic part of the definition. In this sense, our project fits my definition of interaction.

Almost everyone who played our game interacted with it the way we expected. Only occasionally did some users skip the instructions we provided, and they were then confused about how to interact. If we had more time, we could come up with more innovative ideas for the mechanics of the game. Since our mechanics are quite similar to those of the original game, users might not find much novelty in our game. If it were allowed, we would like to try the Kinect as the means of interaction, because detecting the user's motion directly would make the connection between the interaction and the game itself clearer. After all, our idea of engaging the whole body fits the Kinect better than the accelerometer sensor.

I have learned a lot from our final project. For instance, we had to test the game ourselves, again and again, to ensure that users could experience the best version of the game, and we had to spend a lot of time debugging. In order to laser cut the arcade game case, I also learned how to use Illustrator. Most importantly, I learned that when creating an interactive project, the user is always the first thing we need to consider.

CODE

https://github.com/JooooosephY/Interaction-Leb-Final-Project