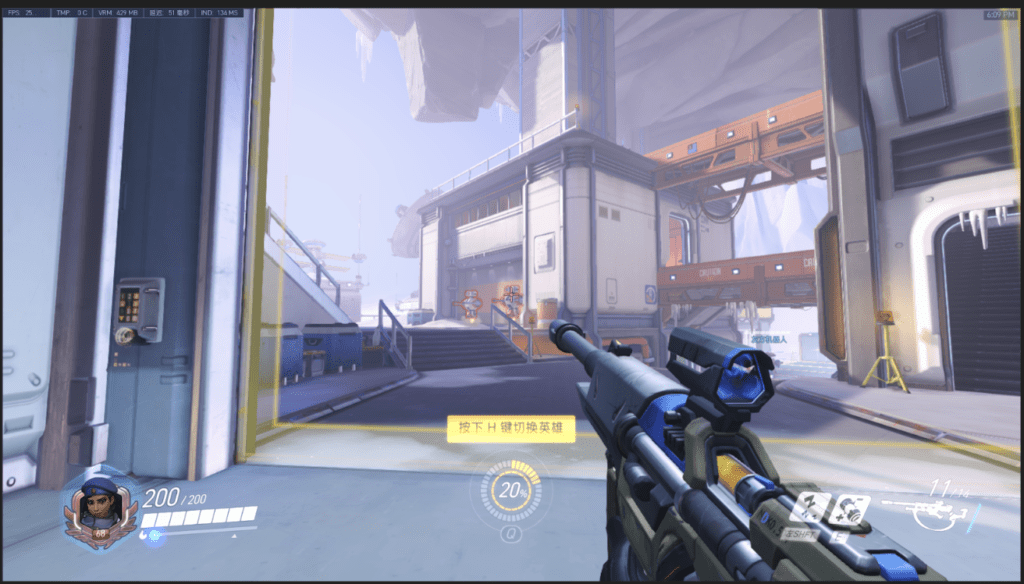

The following clip is a demonstration of my project, Nose & Notes.

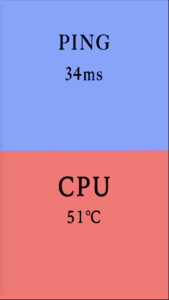

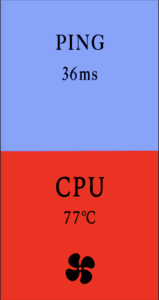

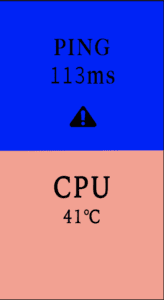

The user strikes notes by moving their nose. Colored rectangles appear on the screen to show which area is being struck.

Nose & Notes is based on PoseNet, a machine learning model that locates the user’s body parts and generates live coordinates for them. My project takes the coordinates of the nose and divides the canvas into nine sections: eight sections each represent one note of the scale, and the ninth plays note A in chorus. The user can then play pieces of music by moving their head so that the nose enters different sections. Multiple players can also strike notes together.
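To illustrate the idea of dividing the canvas into nine sections, here is a minimal sketch of how a nose position could be mapped to a section number. It assumes a 3x3 grid and a 640x480 canvas, neither of which is specified above, and `sectionIndex` is a hypothetical helper name rather than a function from my actual code.

```javascript
// Hypothetical helper: maps a nose position to one of nine sections (0 to 8).
// Assumption: the nine sections form a 3x3 grid, numbered row by row.
function sectionIndex(x, y, width, height, cols = 3, rows = 3) {
  // Positions outside the canvas belong to no section.
  if (x < 0 || y < 0 || x >= width || y >= height) return -1;
  // Scale the coordinate to a column/row number, clamped to the last cell.
  const col = Math.min(cols - 1, Math.floor((x * cols) / width));
  const row = Math.min(rows - 1, Math.floor((y * rows) / height));
  return row * cols + col;
}

// Example with an assumed 640x480 canvas: the center falls in the middle section.
console.log(sectionIndex(320, 240, 640, 480));
```

With this numbering, each section number could index into an array of nine sound files, one per section.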

The initial plan was to let several body parts play notes on the screen, so that a single player could play chords. However, when I tested “rightWrist” and “leftWrist”, PoseNet often misrecognized these two body parts, and I would constantly strike notes that I didn’t want to strike. Even though the recognition threshold for those body parts was set to more than 0.8, it would still misrecognize them and eventually get stuck.
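The threshold check described above can be sketched roughly as follows. This assumes each pose carries a `keypoints` array whose entries have `part`, `score` and `position` fields, which is how I understand the PoseNet output to be shaped; `confidentKeypoint` is a hypothetical helper name.

```javascript
// Assumed pose shape: { keypoints: [{ part, score, position: { x, y } }, ...] }.
// Hypothetical helper: returns the position of a body part only when its
// score is above the threshold (0.8 in my tests), otherwise null.
function confidentKeypoint(pose, part, minScore = 0.8) {
  const kp = pose.keypoints.find((k) => k.part === part);
  return kp && kp.score > minScore ? kp.position : null;
}
```

A call such as `confidentKeypoint(pose, "rightWrist")` would then return `null` for low-score detections, although, as noted, a high score did not stop the wrists from being misrecognized in practice.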

I ran into a few problems while programming. One major problem was that I had been using Atom to write and run my code, and the sounds stored in my folder wouldn’t preload in the page. I was then advised to run my code on a local server so that the sounds could preload. I downloaded atom-live-server and successfully launched a trial live server to run the program. However, when I used Terminal to run my code, it wouldn’t run properly and the console read as follows:

Another issue that affected my original plan was that the sounds are programmed to play every time a section detects the nose, so the code keeps calling the mySound.play() function for as long as the user’s nose stays within the area, which is not very pleasant to listen to. Originally, I wanted the nine sections to say phrases or sentences when the nose entered them. However, if the code continuously replays the beginning of each audio file, it doesn’t sound like a complete word, just a syllable. Therefore, I used notes to compensate for this drawback. It still sounds a bit ragged because the sound files themselves contain background noise, but it is definitely smoother than using other audio clips.
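One possible way to avoid the constant retriggering, which I did not use in this project, is to play a sound only when the nose enters a new section. The sketch below is an assumption-laden illustration: `createNoteTrigger` is a hypothetical name, and `play` stands in for whatever starts a sound, for example a function that calls `play()` on the sound file for that section.

```javascript
// Hypothetical factory: returns an update function to call once per frame
// with the current section number (-1 meaning "nose not in any section").
// play(section) runs only on the frame where the nose enters a section.
function createNoteTrigger(play) {
  let lastSection = -1;
  return function update(section) {
    if (section !== lastSection && section >= 0) play(section);
    lastSection = section;
  };
}

// Example: staying in section 2 for several frames triggers it only once.
const played = [];
const update = createNoteTrigger((s) => played.push(s));
[2, 2, 2, 5, -1, 5].forEach(update);
console.log(played);
```

Leaving a section and coming back counts as a new entry, so the same note can still be struck repeatedly by moving the nose out and in again.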

If I had more time, I would turn it into a game that requires the user to participate with the nose, right wrist and left wrist. Three color blocks would randomly appear on the screen, and the user would need to use the three body parts to fill in those three blocks. A countdown would also appear on the screen, and the user would get one point for completing the goal within those few seconds.

The following is the link to the code:

https://drive.google.com/open?id=1pfMnVntYXmOIVGkaGhn_F9QUpq7Xrds_