Title: The DARK Space

Designer/Performer: Phyllis Fei

Description

The DARK Space is a 10-minute real-time audiovisual performance that explores fluidity, flexibility, interaction, and transformation in abstract shapes (dots and lines), as well as their relationship with minimal music. Through the dynamic motion of simple shapes, it emphasizes the ideas of “freedom within limits” and “order within chaos.” It is a minimal piece that offers an immersive experience and aims to open a space for the audience’s imagination and interpretation.

Perspective and Context

The DARK Space belongs to the genre of live audiovisual performance, and its use of technology (Max) allows for real-time production. As described in The Audiovisual Breakthrough (Fluctuating Images, 2015), it is a time-based, media-based, and performative “contemporary artistic expression of live manipulated sound and [visual].”1 It relies heavily on improvisation: content is presented and modified at the same moment the knobs on the MIDI controller are being turned.

Unlike VJs, who follow popular trends in pursuit of simple but effective audiovisual experiences in more commercial settings (such as clubs), The DARK Space pursues a more personal and artistic practice and invites more conceptual feedback from the audience.2 The piece leans toward “free-flowing abstractions” and is thus more conceptual than VJing. Similar to the genre of live cinema, The DARK Space calls for appreciative audiences, from whom “in-the-moment awareness, responsiveness, and expression” can arise.3

- Carvalho, Ana, “Live Audiovisual Performance,” in The Audiovisual Breakthrough (Fluctuating Images, 2015), 131.

- Menotti, Gabriel, “Live Cinema,” in The Audiovisual Breakthrough (Fluctuating Images, 2015), 93.

- Menotti, 91.

Development & Technical Implementation

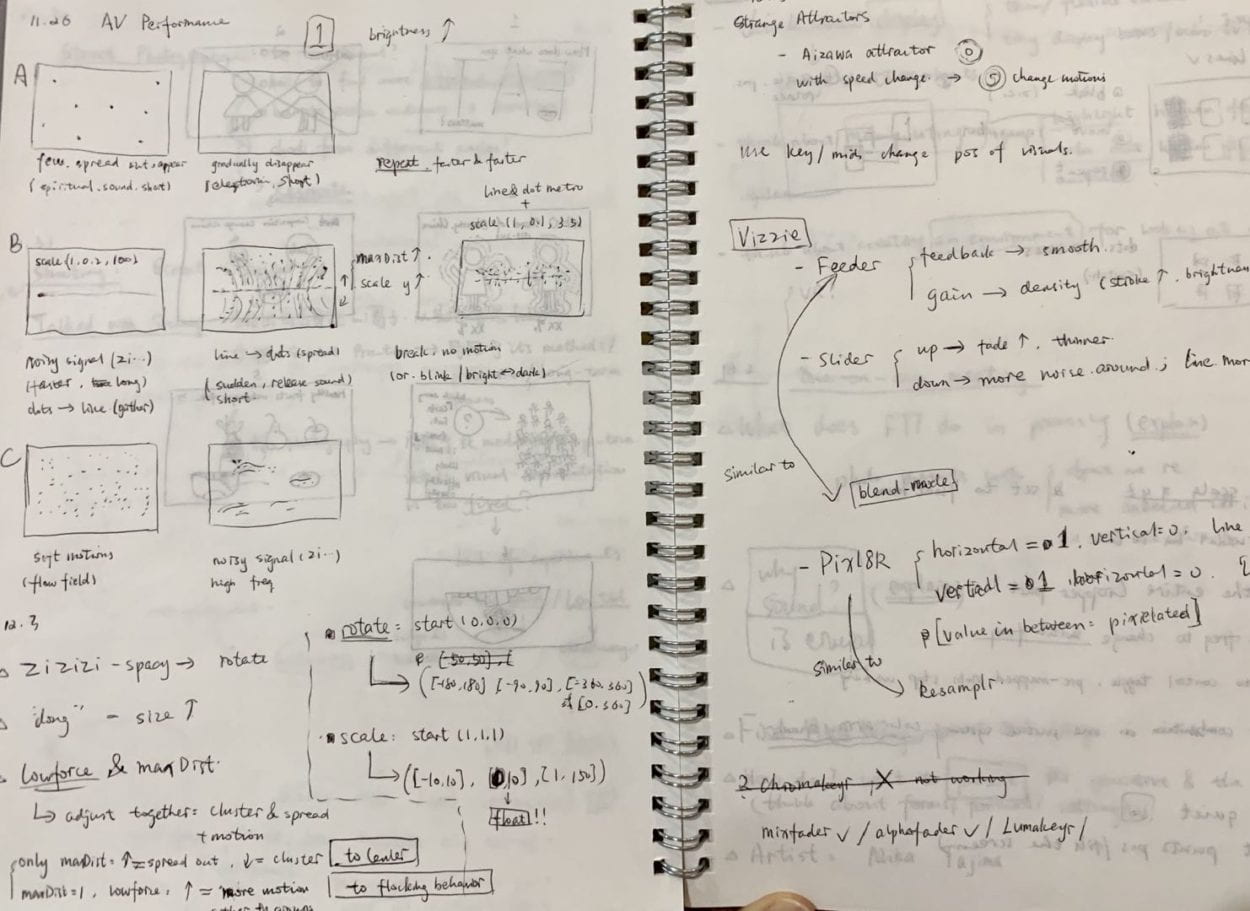

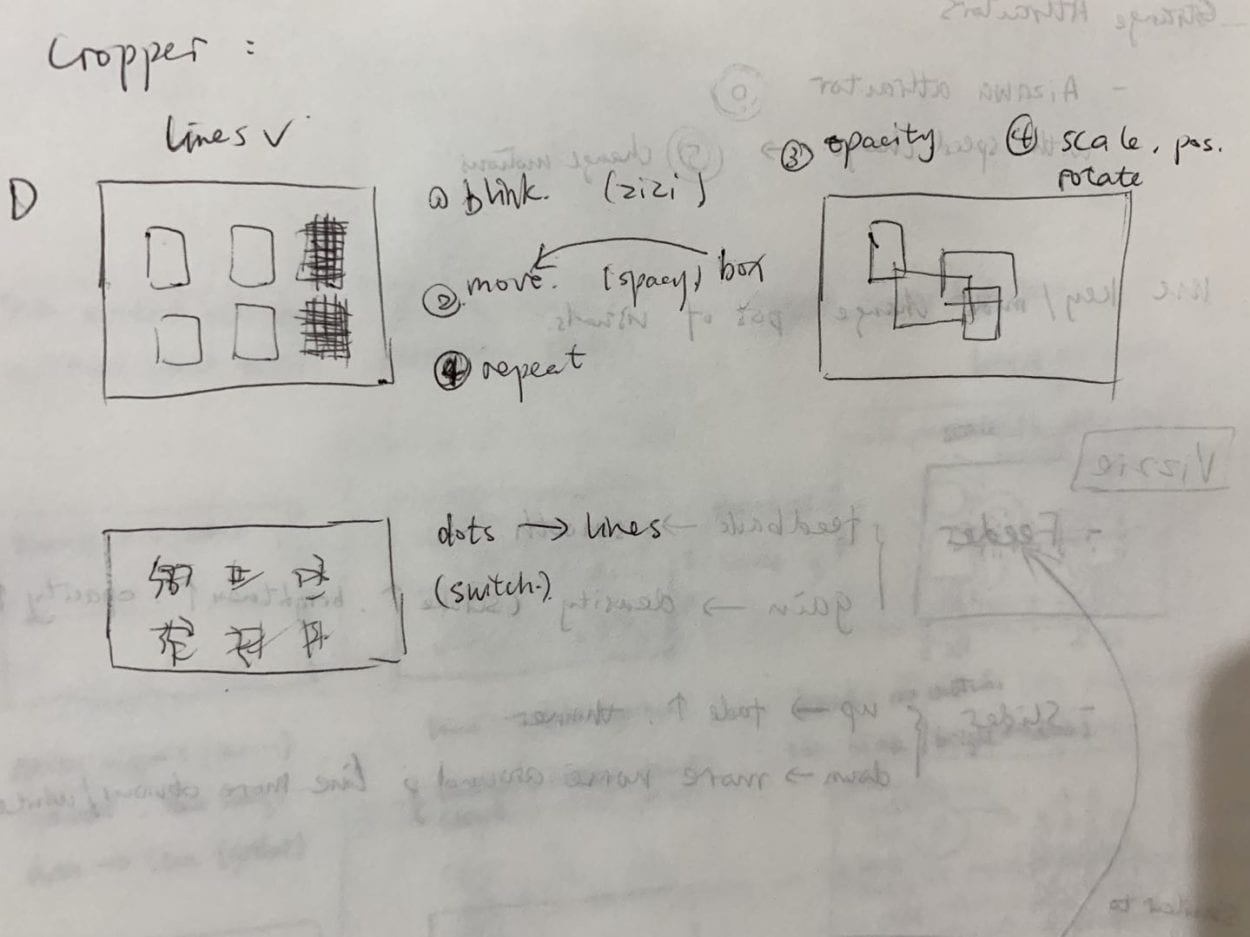

I began the production by creating a storyboard and scene design for the performance (see Figures 0.1 and 0.2).

This is the link to my GitHub gist.

Visual Production

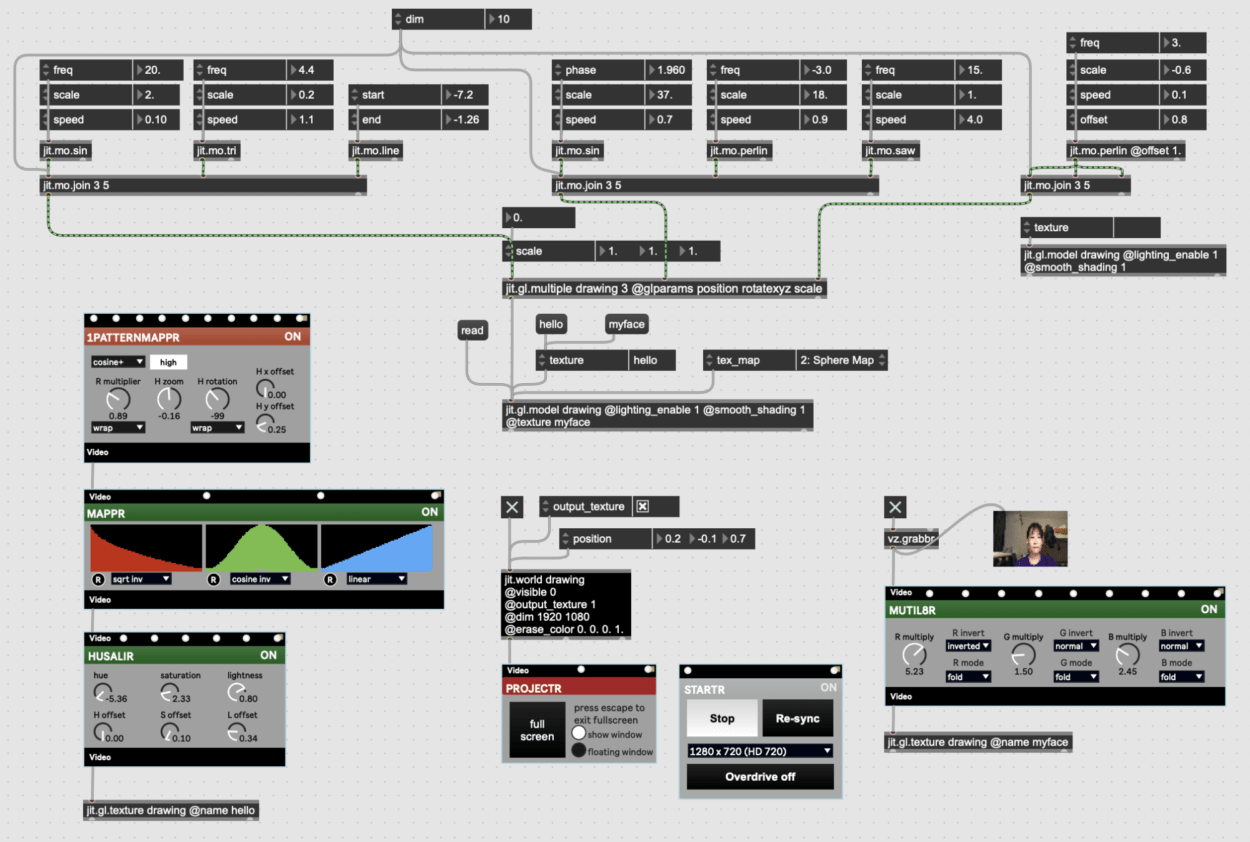

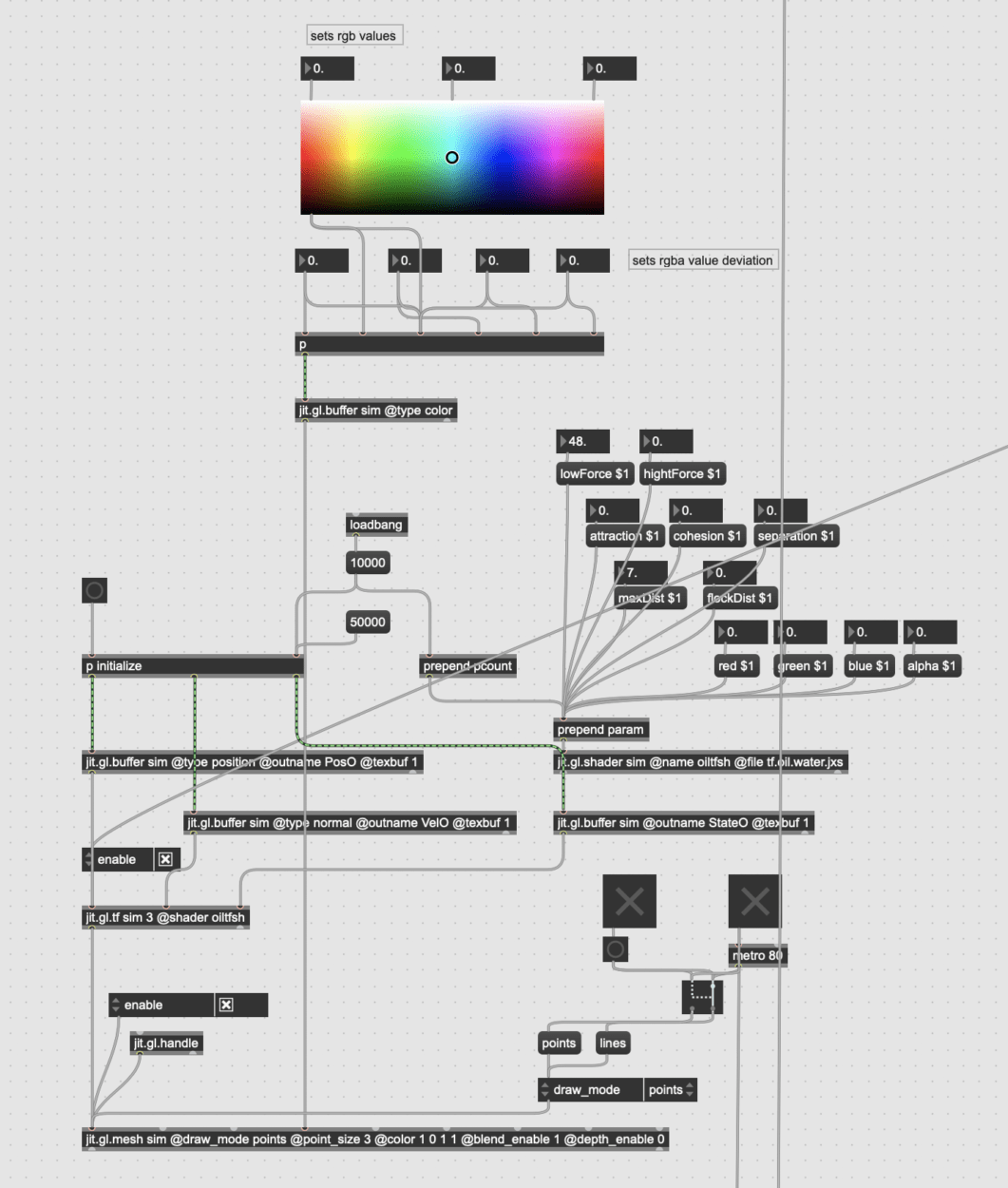

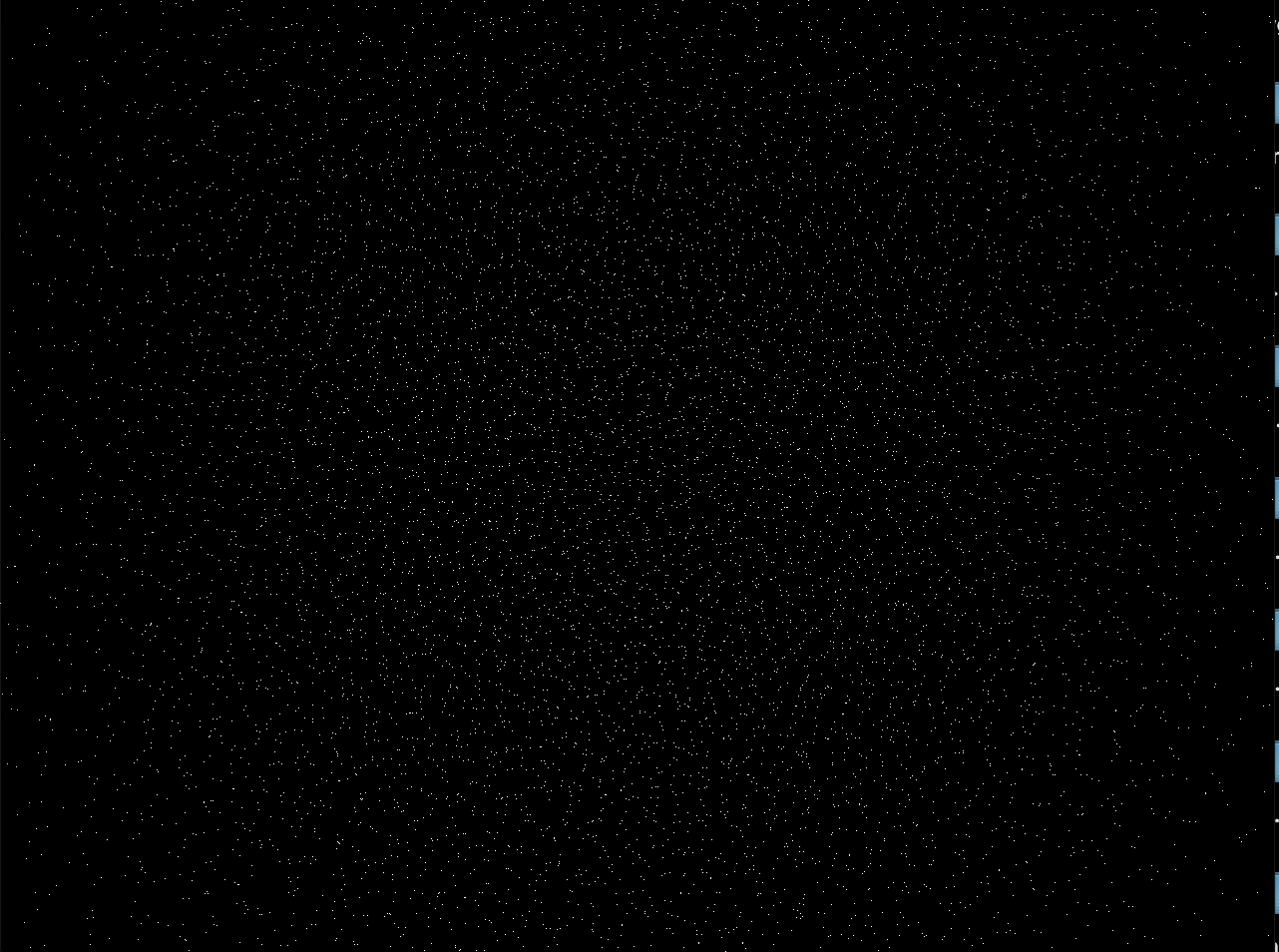

I decided to work with particle systems for this project but had no previous knowledge of Jitter, so I started by following an online tutorial by Federico Foderaro. The logic behind it is very similar to that of Processing; the language, however, was unfamiliar to me. To keep the visuals running smoothly, I moved the heavy graphics work into shaders and luckily found the GL3 example library in Max, which was extremely helpful for my project. I also tried an example patch about attractors but decided not to include it, so I kept working on the simple particle system patch I had borrowed from the library.

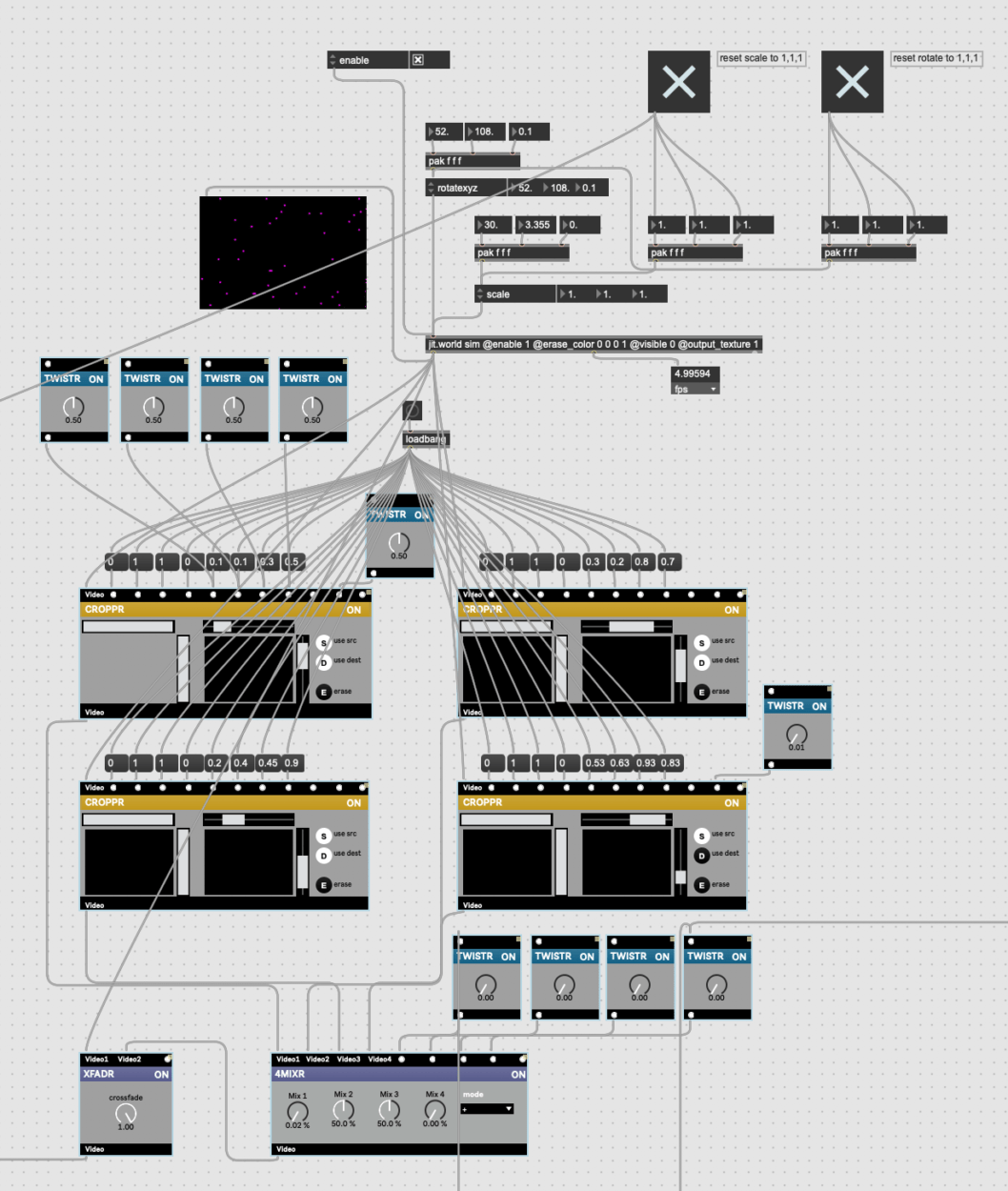

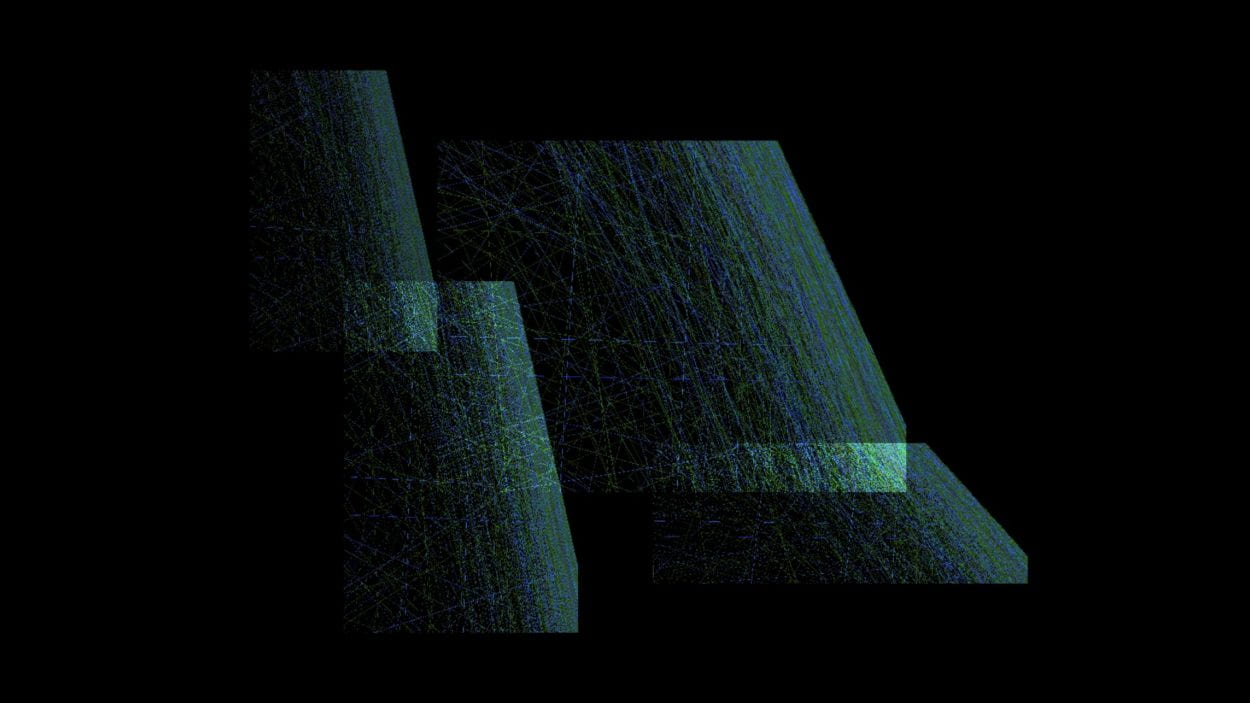

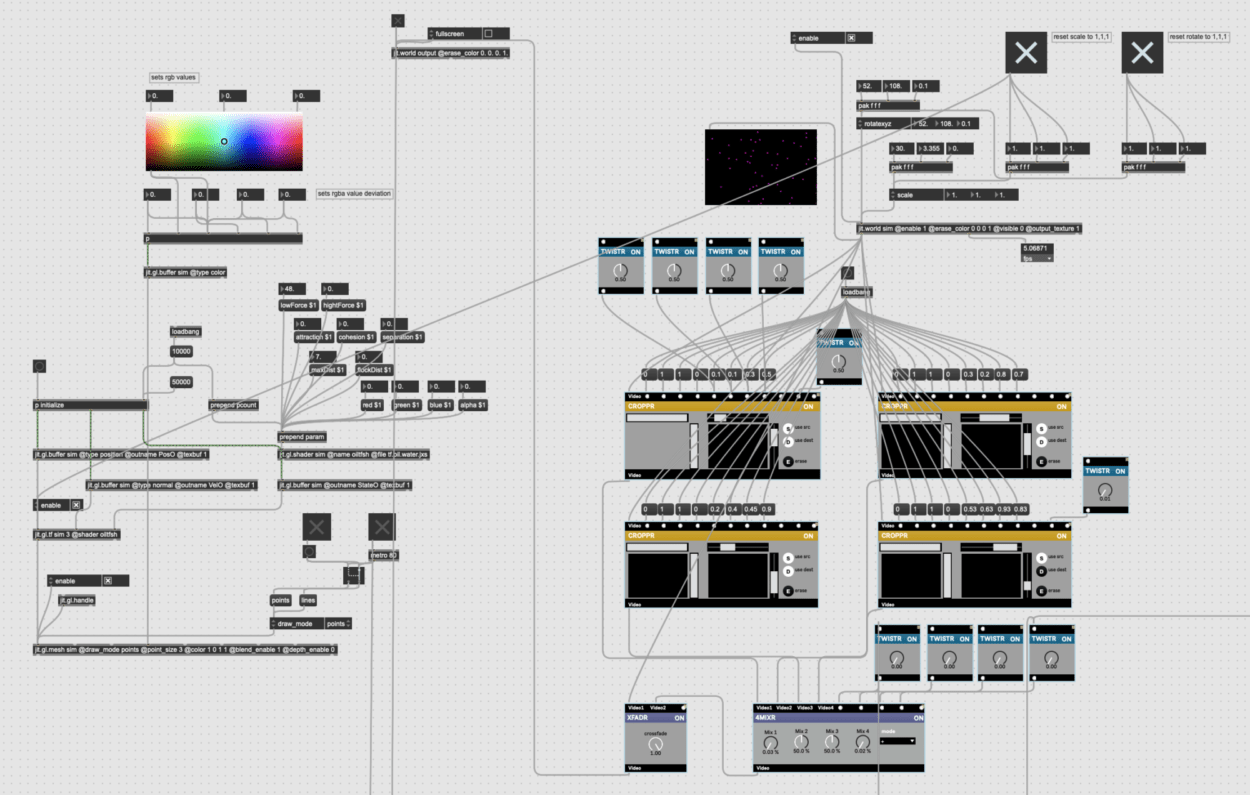

After experimenting with the particle system, I picked “lowForce”, “maxDist”, and “draw_mode” as parameters to manipulate in real time, either to change the motion of the particles or to switch between drawing points and lines. I also added color to the system with an RGB deviation (BIG THANKS TO ERIC) to create more vitality and variation in the real-time visuals. Figure 1.1 is a screenshot of the particle system section in Max.
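For readers who do not use Max, the idea of a few live-controllable parameters driving a particle system can be sketched in Python. This is a minimal, hypothetical sketch and not the GL3 example patch: it assumes that `low_force` scales a random per-frame nudge, that `max_dist` is a radius beyond which a particle respawns at the origin, that `draw_mode` chooses between points and line segments, and that the “RGB deviation” means offsetting a base color by a small random amount per particle. All names here are illustrative.

```python
import random
from dataclasses import dataclass


@dataclass
class Particle:
    x: float
    y: float
    color: tuple  # (r, g, b), each channel in [0, 1]


def deviate_color(base, deviation, rng):
    """Offset each RGB channel of `base` by a random amount in
    [-deviation, deviation], then clamp the result to [0, 1]."""
    return tuple(min(1.0, max(0.0, c + rng.uniform(-deviation, deviation))) for c in base)


def step(particles, low_force, max_dist, rng):
    """Nudge every particle by a random force scaled by `low_force`
    (assumed meaning); respawn at the origin any particle that ends up
    farther than `max_dist` from it (assumed meaning)."""
    for p in particles:
        p.x += rng.uniform(-1.0, 1.0) * low_force
        p.y += rng.uniform(-1.0, 1.0) * low_force
        if (p.x ** 2 + p.y ** 2) ** 0.5 > max_dist:
            p.x, p.y = 0.0, 0.0


def primitives(particles, draw_mode):
    """Return point positions, or line segments joining consecutive
    particles, depending on the hypothetical `draw_mode` switch."""
    pts = [(p.x, p.y) for p in particles]
    if draw_mode == "points":
        return pts
    if draw_mode == "lines":
        return list(zip(pts, pts[1:]))
    raise ValueError(f"unknown draw_mode: {draw_mode!r}")
```

In use, a base color such as `(0.2, 0.4, 0.9)` with a deviation of `0.1` gives every particle a slightly different shade, and turning a knob mapped to `low_force` or `max_dist` changes the motion from frame to frame while `draw_mode` flips the output between points and lines.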

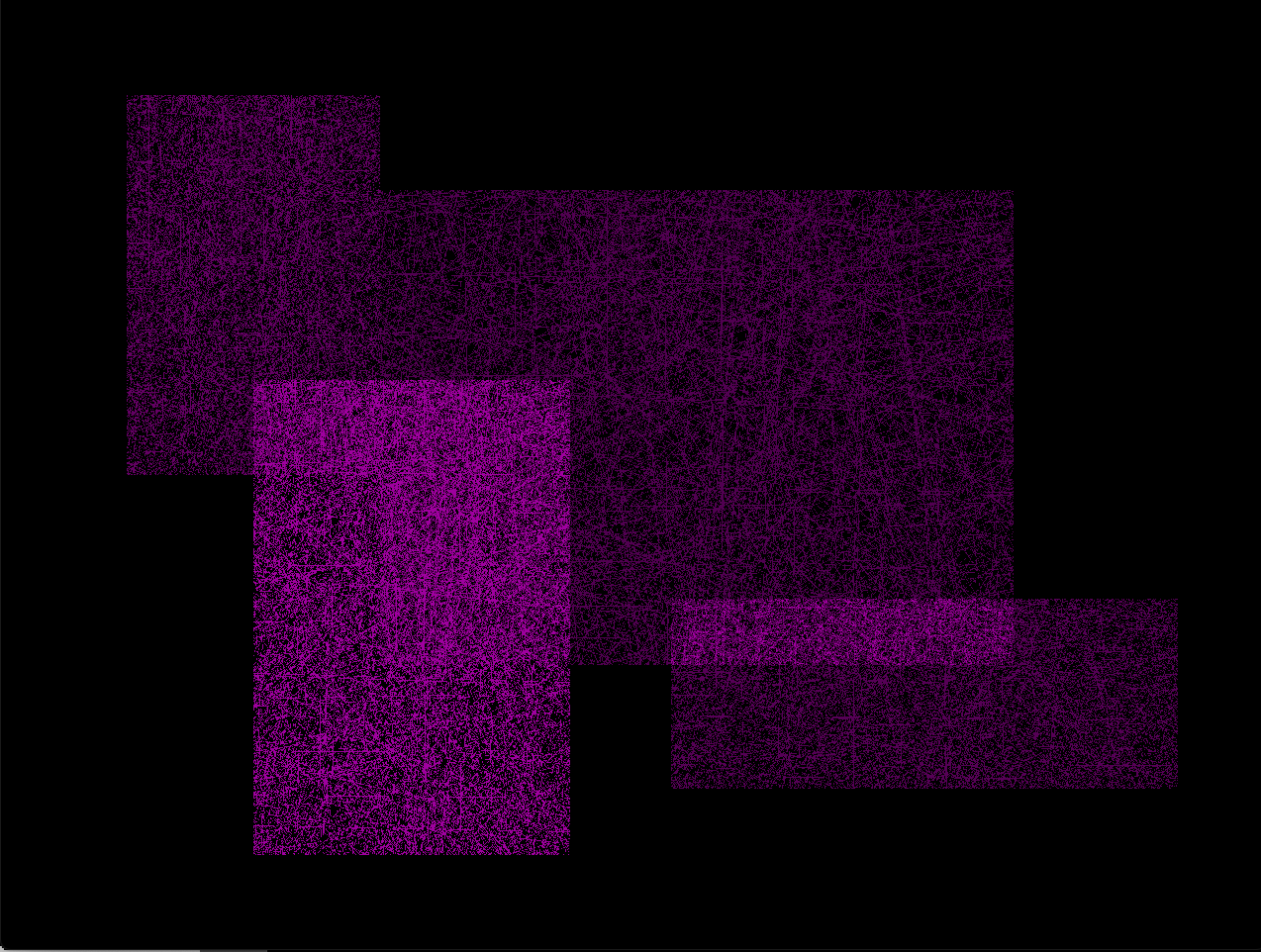

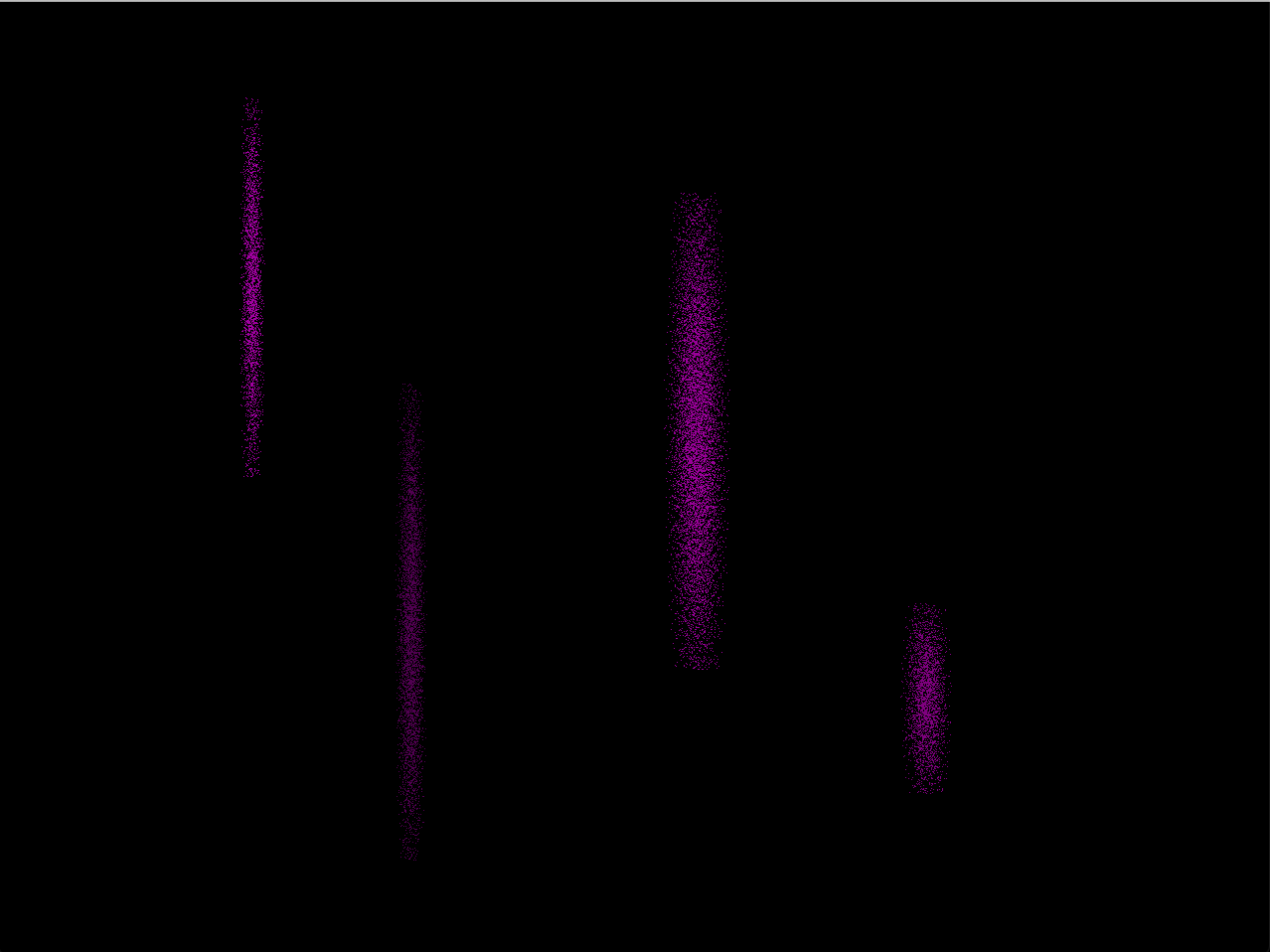

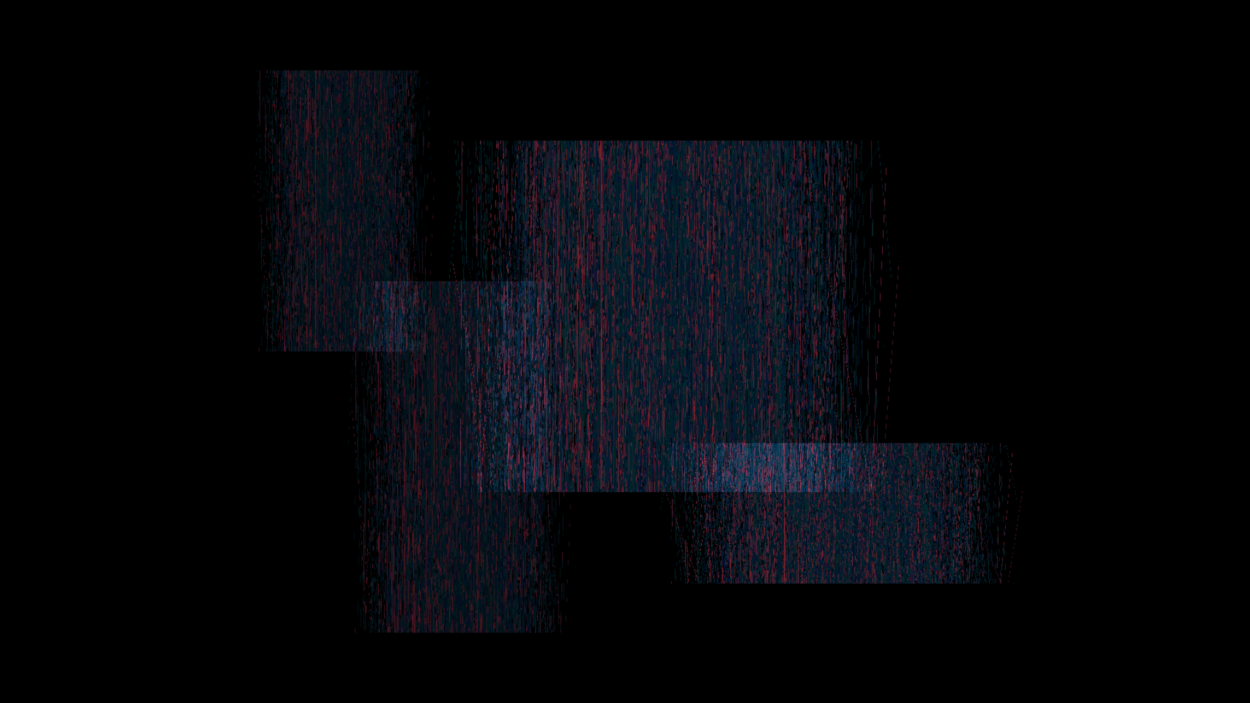

I added Vizzie to the system to create different layers of my visual story, following my storyboard. I essentially placed four copies of the scene above on the screen at different positions (see Figures 2.1 and 2.2). The MIDI controller adjusts the opacity (fader) of each small rectangular section, which creates more visual dynamics.

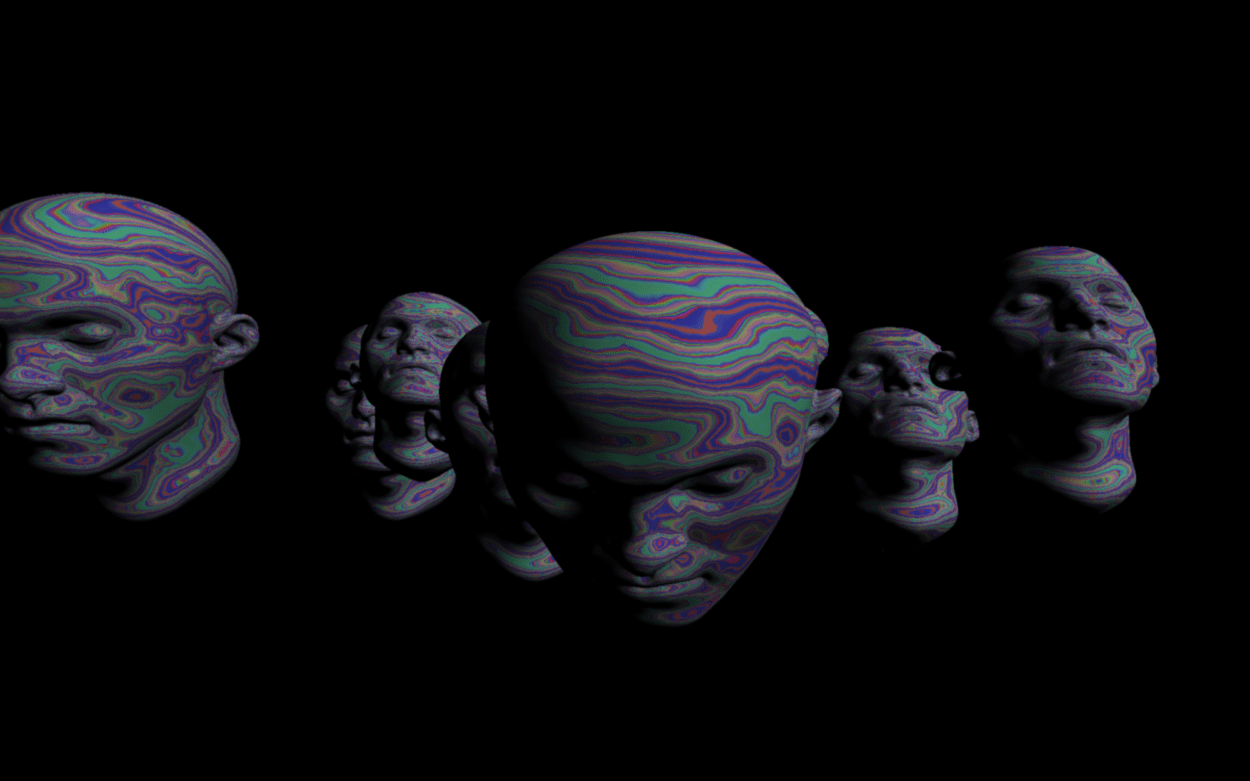

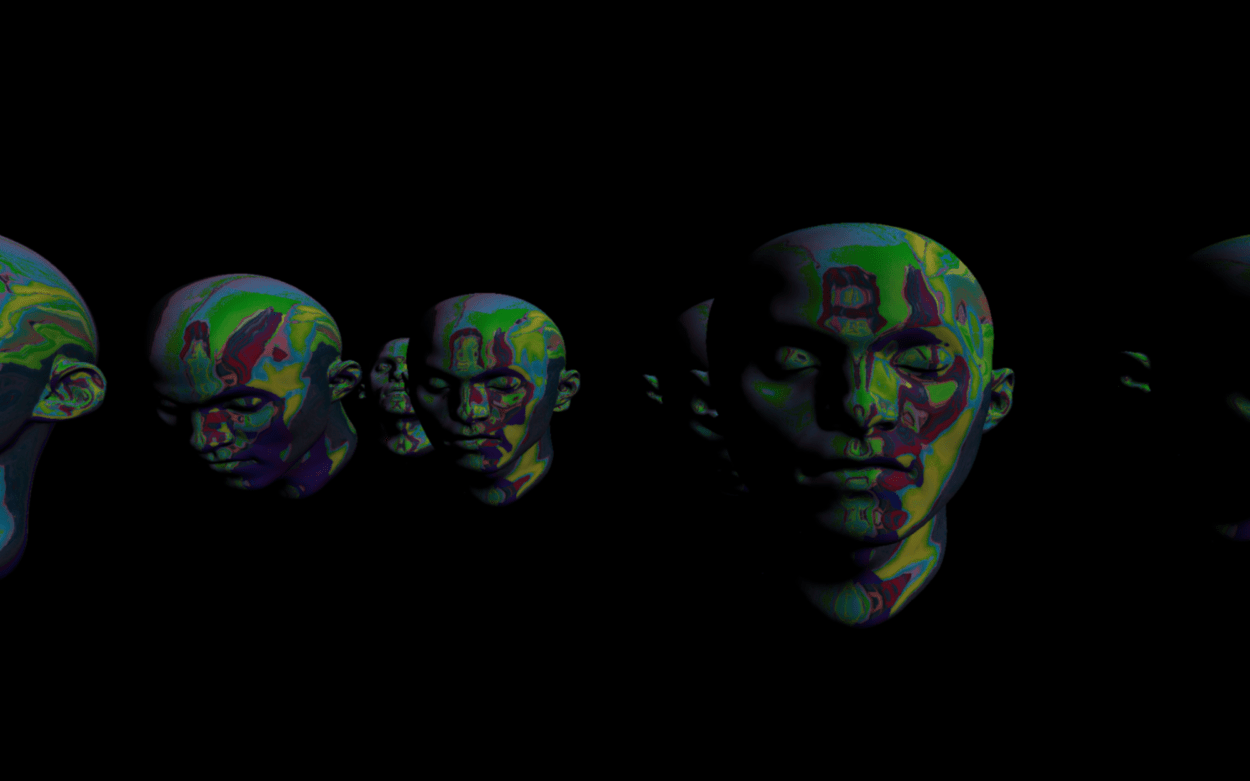

Changing the color composition, scale, and rotation parameters produced some quite interesting visual results (see Figures 2.3, 2.4, and 2.5).

This is a screenshot of the entire visual production system I manipulated during the performance (see Figure 3).

Audio Production

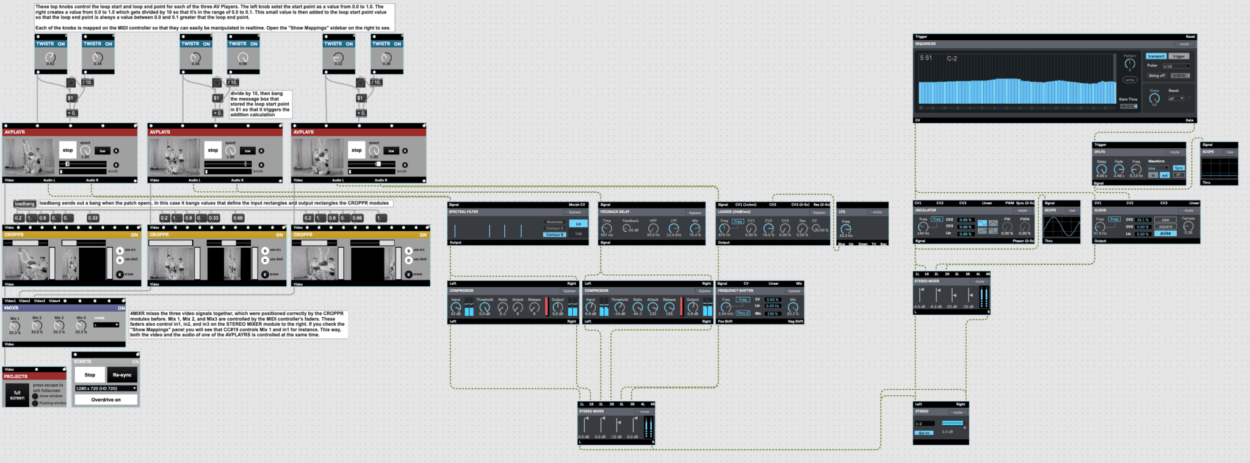

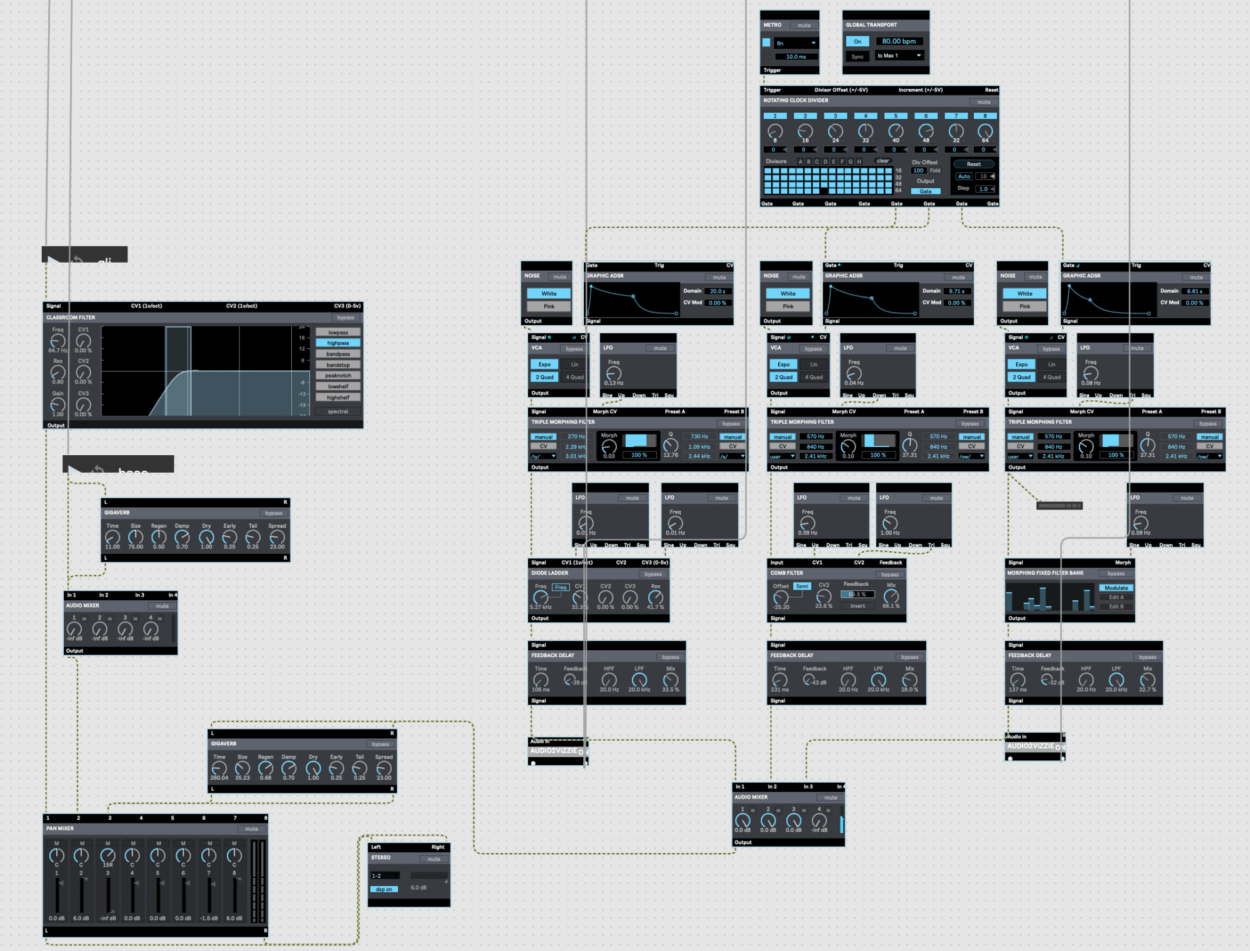

I created the base layer and several short sound samples in GarageBand and edited them before exporting. Below is a screenshot of my base-layer sound design (see Figure 4).

Eric suggested using Classroom Filter to filter out the high frequencies so that the short sound samples would blend better with the base layer. Eric also helped me design a third sound sample to enhance the uncanny, mysterious, minimal feel of the audio. Below is a screenshot of the audio production (see Figure 5).

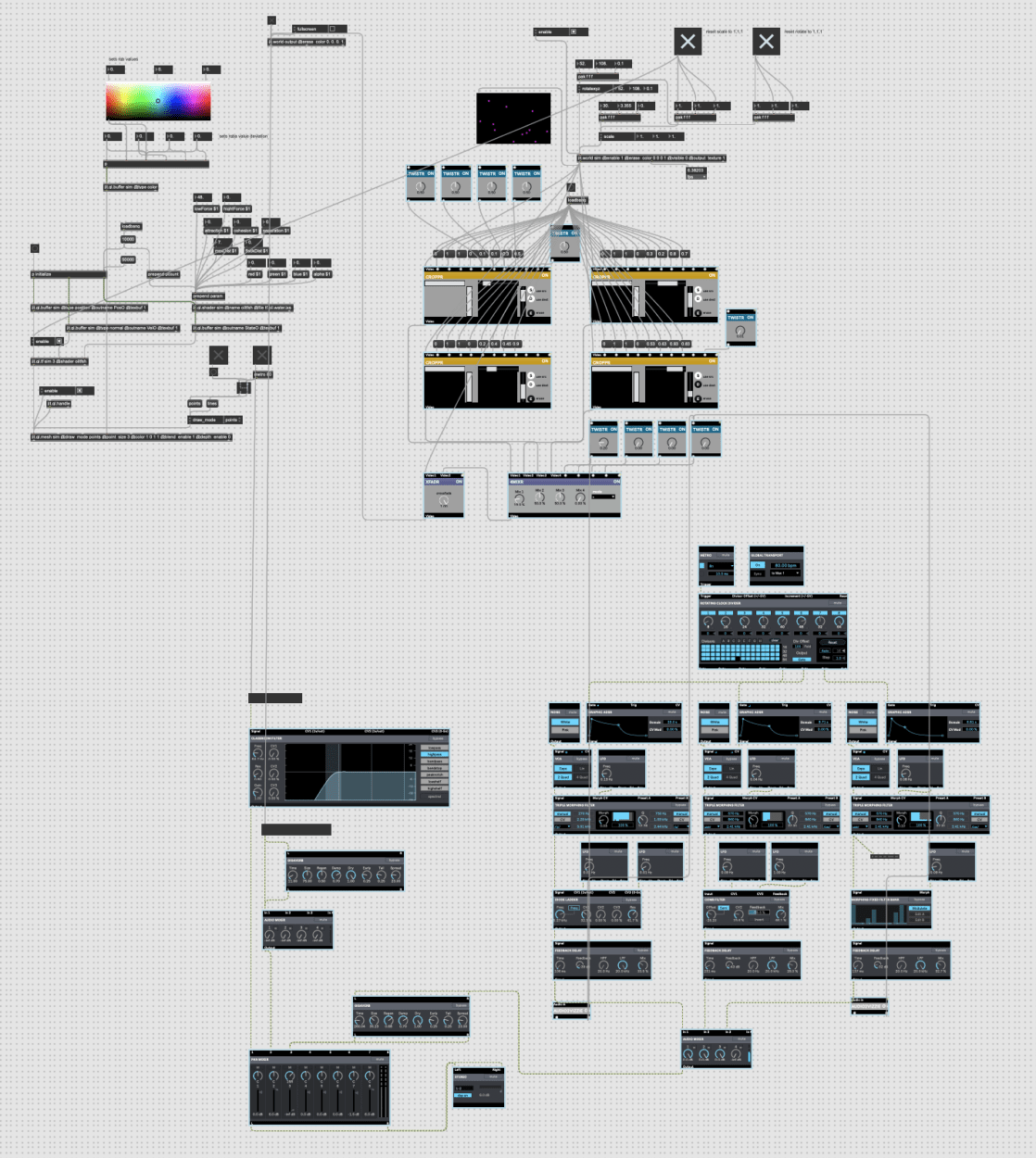

Below is a screenshot of my entire patch which gathers both audio and visual production (see Figure 6).

Each change in draw_mode triggers rapid signal sounds, and the mysterious sound affects how much two of the four sections fade during the performance.
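The two audio-visual links above, a sound fired whenever `draw_mode` changes and a fade driven by the level of a sound, can be sketched outside Max as follows. This is a hypothetical Python sketch, not the actual patch: `ChangeTrigger` only fires when its input differs from the previous value (similar in spirit to Max’s `change` object), `envelope` is a simple one-pole envelope follower standing in for however the patch measures loudness, and `section_opacity` assumes that a louder sound makes a section fade more. The attack, release, and depth values are arbitrary illustrations.

```python
class ChangeTrigger:
    """Call `on_change(value)` only when `value` differs from the last
    value seen, so a held draw_mode does not retrigger the sound."""

    def __init__(self, on_change):
        self._last = None
        self._on_change = on_change

    def __call__(self, value):
        if value != self._last:
            self._last = value
            self._on_change(value)


def envelope(samples, attack=0.5, release=0.05):
    """One-pole envelope follower: rise quickly (attack) toward louder
    samples and fall slowly (release) when the signal gets quieter."""
    env = 0.0
    out = []
    for s in samples:
        level = abs(s)
        coeff = attack if level > env else release
        env += coeff * (level - env)
        out.append(env)
    return out


def section_opacity(base_fader, env_value, depth=0.8):
    """Lower a section's opacity as the envelope rises (assumed direction);
    the result is clamped to [0, 1]."""
    return max(0.0, min(1.0, base_fader * (1.0 - depth * env_value)))
```

For example, feeding `"points", "points", "lines"` into a `ChangeTrigger` fires the callback twice, once per actual change, which matches the idea of a signal sound marking each switch between points and lines.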

Performance Setup

I assigned parameters (such as scale, position, rotation, forces, and fader) to different MIDI knobs so that everything would be easier to control. The photo below shows the MIDI controller interface I used to improvise with my audiovisual system (see Figure 7). During the performance, a separate display screen also let me see the visuals I produced in real time.
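Assigning knobs to parameters comes down to mapping each knob’s 7-bit MIDI control-change value (0 to 127) onto the range a parameter actually needs, which in Max is typically done with the `scale` object. The Python sketch below shows that mapping in isolation. The CC numbers and parameter ranges in `KNOB_MAP` are hypothetical placeholders, not the ones used in the performance.

```python
from typing import Dict, Tuple

# Hypothetical knob layout: CC number -> (parameter name, min, max).
KNOB_MAP: Dict[int, Tuple[str, float, float]] = {
    1: ("scale", 0.1, 2.0),
    2: ("rotation", 0.0, 360.0),
    3: ("lowForce", 0.0, 0.2),
    4: ("fader_1", 0.0, 1.0),
}


def scale_cc(value: int, out_min: float, out_max: float) -> float:
    """Linearly map a 7-bit MIDI CC value (0-127) onto [out_min, out_max]."""
    if not 0 <= value <= 127:
        raise ValueError(f"CC value out of range: {value}")
    return out_min + (out_max - out_min) * value / 127


def handle_cc(params: Dict[str, float], cc_number: int, value: int) -> None:
    """Update `params` in place if `cc_number` is mapped; ignore other knobs."""
    mapping = KNOB_MAP.get(cc_number)
    if mapping is None:
        return
    name, lo, hi = mapping
    params[name] = scale_cc(value, lo, hi)
```

Keeping the whole layout in one table makes it easy to see which knob controls what, which also helps with finding the right knob quickly mid-performance.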

Performance

The performance at the showcase went quite well. I enjoyed it, though it could have been better. I think the sound and the visuals complemented each other well in real time and did offer people an immersive, minimal experience. As I had discussed with Eric earlier, however, the sound could have been richer, with more variation. I was a bit upset about my ending (the last 20 seconds or so): I couldn’t find the right knob to turn the audio off, and things got a bit out of my control.

Visually, I wasn’t expecting the graphics to be that slow. I had worked with the system long enough to know that the visuals ran much more smoothly during rehearsals, and I had also produced much more beautiful scenes there, with better color combinations and scaling, rotation, and positioning parameters. I know that the unexpected is always a crucial and unavoidable part of live performance, and I received quite positive feedback from the audience, but I still wish it could have been better.

Conclusion

It was my first time showing my work to a larger audience beyond the NYU Shanghai community, which was already amazing to me. I am very grateful to Eric for offering such a great opportunity :). I have found myself a big fan of audiovisual performance but had never imagined actually doing it one day, so I am surprised at how much I was able to achieve in both the visual and the audio on my own. I will definitely work more with Jitter in the future and produce better audiovisual performances.