Adventure of Sound

This project is open source. The source code is available at https://github.com/DaKoala/adventure-of-sound. You can also run the project on your own machine by following the guide in the README of that repository.

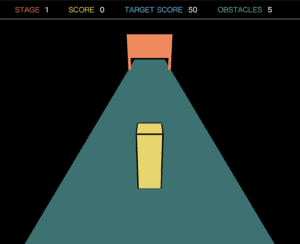

This project is an interactive sound game. The player controls the size of a box in the center of the screen. Obstacles approach from the front, and each obstacle has a hole of a different shape. The goal is to make the box match the size of the hole: the better the fit, the higher the score. There are multiple stages, and each stage has a target score. Failing to reach the target score or bumping into an obstacle ends the game.

Architecture

Most p5 projects follow the pattern of one HTML file, one CSS file, and one JS file. To keep my code well organized, I used a bundler, Parcel. That way I can split my code across several files, while only a single JS file is generated in the end.

I also used TypeScript, a superset of JavaScript. It only matters at development time: TypeScript provides features that JavaScript lacks, such as static type checking, which is the feature I love the most. The final output is always vanilla JavaScript.

Inspiration

The game is inspired by the TV program Hole in the Wall, in which contestants have to pass through walls by striking different poses.

The basic idea of the game is the same as in the TV program: there are holes in the wall, and players must shape the box to fit them.

Sound Processing

Implementation: https://github.com/DaKoala/adventure-of-sound/blob/master/src/sound.ts

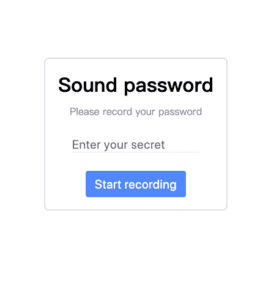

Sound is the only way users interact with the box. The width of the box is tied to the volume, and the height is tied to the pitch. I used the p5 sound library to get the volume, through the AudioIn.getLevel method. For pitch detection, I used ml5 Pitch Detection. It is worth pointing out that the pitch detection function is asynchronous, so I call it recursively and store the latest value on the instance.
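The recursive polling described above can be sketched roughly as follows. This is a minimal sketch and not the code from sound.ts: `PitchDetectorLike` and `PitchTracker` are hypothetical names, and the detector is only assumed to expose a callback-style `getPitch` method similar to the one offered by ml5's pitch detection.

```typescript
// Hypothetical minimal shape of a callback-style pitch detector.
// Assumption: the real detector calls back with (error, frequency),
// where frequency may be null when no pitch was detected.
type PitchCallback = (err: unknown, frequency?: number | null) => void;

interface PitchDetectorLike {
  getPitch(callback: PitchCallback): void;
}

class PitchTracker {
  // Most recent detected frequency in Hz; stays 0 until a pitch is heard.
  frequency = 0;
  private running = false;
  private detector: PitchDetectorLike;

  constructor(detector: PitchDetectorLike) {
    this.detector = detector;
  }

  start(): void {
    if (this.running) return;
    this.running = true;
    this.poll();
  }

  stop(): void {
    this.running = false;
  }

  // Request one pitch, store it, then request the next one from the callback.
  private poll(): void {
    if (!this.running) return;
    this.detector.getPitch((err, frequency) => {
      if (!err && frequency != null) {
        this.frequency = frequency; // keep the previous value on silence
      }
      this.poll();
    });
  }
}
```

The draw loop can then read `tracker.frequency` on every frame without waiting for the detector. Calling `stop()` ends the loop after the pending callback returns.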

Drawing Objects

Box: https://github.com/DaKoala/adventure-of-sound/blob/master/src/SoundBox.ts

Obstacle: https://github.com/DaKoala/adventure-of-sound/blob/master/src/Obstacle.ts

Track: https://github.com/DaKoala/adventure-of-sound/blob/master/src/Track.ts

This game is 3D, which was a challenge for me because I rarely develop 3D projects.

The first challenge I faced was changing the perspective, which I did by calling the camera function in p5. It took me some time to figure out what the different parameters do. The values I finally chose came from testing several times and picking what felt most comfortable to me.

Drawing objects was also challenging, because drawing in a 3D environment differs from drawing in 2D. My approach was to use the translate function to move the origin and then draw the object.

To avoid repeating code, I wrote a function called tempTranslate, short for "translate temporarily".

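```typescript
// Move the origin by (x, y, z), run the drawing callback, then move it back.
// z defaults to 0 so that omitting it does not turn -z into NaN.
const tempTranslate = (func: () => void, x: number, y: number, z = 0) => {
  translate(x, y, z);
  func();
  translate(-x, -y, -z);
};
```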

This function is useful for drawing 3D shapes.
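tempTranslate restores the origin by applying the inverse translation. As an alternative, p5 also offers push() and pop(), which save and restore the whole transform state. The sketch below shows that variant under some assumptions: `TransformApi` and `withTranslate` are hypothetical names, and the drawing functions are passed in as an object (for example a p5 instance) so that the helper does not rely on p5 globals.

```typescript
// Hypothetical subset of p5's transform functions. A p5 instance in
// instance mode provides methods with these names.
interface TransformApi {
  push(): void;
  pop(): void;
  translate(x: number, y: number, z?: number): void;
}

const withTranslate = (
  g: TransformApi,
  draw: () => void,
  x: number,
  y: number,
  z = 0,
): void => {
  g.push(); // save the current transform state
  try {
    g.translate(x, y, z);
    draw();
  } finally {
    g.pop(); // restore it, even if draw() throws
  }
};
```

This variant also undoes any rotation or scaling applied inside `draw`, which the inverse-translation approach does not.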

Game Design

Gameplay is an important part of a game. For a game with logic as simple as this one, beginners should not need much time to figure out the interaction. The incentive that keeps players engaged also needs to be taken into consideration.

To help users understand the interaction, the box has a minimum size, so it stays visible even when there is no sound. However, this created a problem: players could score with no sound input.

I got some helpful feedback during the presentation and made a modification afterwards: when the box is too small, no score is added.
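The two rules above, a minimum box size and no score for a box that is too small, could be combined roughly as in the sketch below. Everything here is an illustrative assumption and not the project's code: the names, the constants, the linear mapping from sound to size, and the area-based fit score are all hypothetical.

```typescript
interface Size { width: number; height: number; }

// Illustrative constants, not values taken from the game.
const MIN_SIZE = 20;          // box size with no sound input
const MAX_SIZE = 200;
const MIN_PITCH_HZ = 100;
const MAX_PITCH_HZ = 800;
const MIN_SCORING_SIZE = 30;  // boxes smaller than this never score

const clamp01 = (v: number): number => Math.min(1, Math.max(0, v));
const lerp = (a: number, b: number, t: number): number => a + (b - a) * t;

// volume: input level in [0, 1]; pitchHz: detected frequency, 0 if none.
const boxSizeFromSound = (volume: number, pitchHz: number): Size => {
  const w = clamp01(volume);
  const h = clamp01((pitchHz - MIN_PITCH_HZ) / (MAX_PITCH_HZ - MIN_PITCH_HZ));
  return {
    width: lerp(MIN_SIZE, MAX_SIZE, w),
    height: lerp(MIN_SIZE, MAX_SIZE, h),
  };
};

type PassResult = { kind: "crash" } | { kind: "pass"; score: number };

const evaluatePass = (box: Size, hole: Size): PassResult => {
  // A box larger than the hole in either dimension hits the obstacle.
  if (box.width > hole.width || box.height > hole.height) {
    return { kind: "crash" };
  }
  // A box that is too small passes but earns nothing.
  if (box.width < MIN_SCORING_SIZE || box.height < MIN_SCORING_SIZE) {
    return { kind: "pass", score: 0 };
  }
  // Otherwise the score grows with how much of the hole the box fills.
  const fit = (box.width * box.height) / (hole.width * hole.height);
  return { kind: "pass", score: Math.round(fit * 100) };
};
```

Because `MIN_SCORING_SIZE` is larger than `MIN_SIZE`, a silent player still sees the box but cannot collect points with it.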

Difficulties

I think the biggest difficulty in developing this project was handling 3D objects. I am a newbie in this field and still learning, so the shapes in the game seem a little awkward.

I am still not familiar with some concepts in 3D development, such as lighting and camera. I think they are worth learning, and this project served as my introduction to them.

Words in the End

This course is the last IMA course I will take in college (apart from the Capstone). I really enjoyed the happiness brought by my IMA courses and the wonderful IMA instructors.

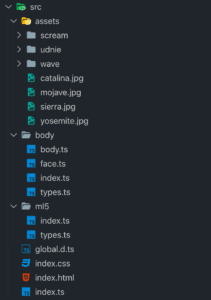

Structure of my source code

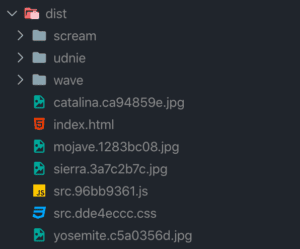

Structure of my distribution code