Final Project Proposal

For our final project, we decided to go further in mimicking the pattern drawn by the male pufferfish (see the photo below), using a sand table, magnets and a robot driven by stepper motors.

In our midterm we achieved a 2D pattern, with two concessions: it did not reproduce the exact pattern, and we could not control the depth of the lines. See the photo below.

We chose to keep exploring sand drawing because the pufferfish’s pattern is not only beautiful but also shows the power of a tiny organism to create such perfect, grand (2 m in diameter), mesmerizing and meaningful art. We want to show that what a 13-centimeter-long pufferfish can draw, a small robot can also accomplish.

The updates we plan to experiment with: 1) Encoder motors controlled by an Arduino. 2) An electromagnet or robotic arm that lifts the magnet up and down. 3) A better-looking sand table. 4) A magnet that attracts pieces of metal, much as the pufferfish uses shells for decoration.

How this works as a research paper: we would share our experiments with, and inquiries into, different methods for achieving our desired outcome, a more precise and 3D version of the pufferfish’s pattern. As Prof. Rudi suggested, we might also exhibit the project as art pieces and include the critiques we receive in the paper.

Collective Behavior

For the collective behavior project, Kevin, Anand, Gabi and I worked together to create a defensive swarm.

The swarm steps:

- An enemy is detected.

- The closest robot moves towards the enemy.

- With the help of the camera, the first robot navigates to the enemy and tries to push it out of the boundary.

- If the first robot cannot push it out, the second one joins it to form a chain, and then the third one does the same.

- Once the enemy has been pushed out of the boundary, all three robots reset to their original positions.
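
The steps above can be sketched as a small decision function. This is only an illustration under assumptions, not the code we ran: the names `inside` and `plan_defense`, the `(x, y)` position tuples, the rectangular boundary and the `stuck` flag (whether the robots already pushing have failed to move the enemy) are all hypothetical.

```python
import math

def inside(pos, boundary):
    """Return True if (x, y) lies inside the rectangle (xmin, ymin, xmax, ymax)."""
    x, y = pos
    xmin, ymin, xmax, ymax = boundary
    return xmin <= x <= xmax and ymin <= y <= ymax

def plan_defense(bots, enemy, boundary, engaged, stuck):
    """Decide the next swarm action.

    bots: dict of bot id -> (x, y); enemy: (x, y), or None if no enemy is seen.
    engaged: ids of the bots already pushing; stuck: True if they cannot move the enemy.
    Returns (action, bot_id) with action in 'idle', 'reset', 'dispatch', 'push'.
    """
    if enemy is None:
        return ("idle", None)
    if not inside(enemy, boundary):
        return ("reset", None)  # enemy is out: every bot goes back to its start
    waiting = [b for b in bots if b not in engaged]
    if waiting and (not engaged or stuck):
        # send the closest bot that is not pushing yet
        closest = min(
            waiting,
            key=lambda b: math.hypot(bots[b][0] - enemy[0], bots[b][1] - enemy[1]),
        )
        return ("dispatch", closest)
    return ("push", None)  # keep pushing with the bots already engaged

bots = {1: (0, 0), 2: (5, 5), 3: (9, 9)}
boundary = (0, 0, 10, 10)
print(plan_defense(bots, (6, 6), boundary, [], False))
print(plan_defense(bots, (6, 6), boundary, [2], True))
```

Keeping the decision separate from the motor commands would make it possible to test the swarm logic without the robots or the camera.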

We didn’t manage to get every function working, but some of them worked properly. Here is the code:

Calculating the position of a bot and the angle it is facing (done by recognizing the corners of the bot’s QR code):

import math

def calcBotData(data):
    # data holds the bot id followed by the corner points of its QR code;
    # only two diagonally opposite corners are used here.
    # Returns [id, x, y, angle]: the center of the bot and the angle it is facing.
    # Can also be used for the enemy bot.
    bot_id = data[0]
    corner1 = data[1]  # should be at the location of the head
    corner2 = data[2]  # should be at the location of the tail, across from corner1
    headx, heady = corner1[0], corner1[1]
    tailx, taily = corner2[0], corner2[1]
    # the center of the bot is the midpoint of the diagonal
    x = (headx + tailx) / 2
    y = (heady + taily) / 2
    # the heading points from the center towards the head
    dy = heady - y
    dx = headx - x
    angle = math.atan2(dy, dx)
    if angle < 0:
        angle = 2 * math.pi + angle  # map negative angles into (pi, 2*pi)
    # THIS ANGLE IS IN RADIANS
    return [bot_id, x, y, angle]

Sending ‘reinforcement bots’ when one bot cannot push the object out:

import math
from time import sleep

def initiateCall(availablebots, enemy, dest):
    # Assumes each bot and the enemy use the [id, x, y, angle] layout returned by calcBotData,
    # and that orient(bot, dest) is defined elsewhere in the project.
    reinforcementBot = availablebots[0]  # take the first bot in the list
    availablebots = availablebots[1:]
    distance = math.hypot(reinforcementBot[1] - enemy[1], reinforcementBot[2] - enemy[2])
    timeA = 6.38 * orient(reinforcementBot, dest)
    timeD = 60 * distance
    command = str(reinforcementBot[0]) + ",0," + str(timeA)
    # SEND TO SERIAL
    sleep(timeA + 2)
    command = str(reinforcementBot[0]) + ",1," + str(timeD)
    # SEND TO SERIAL
    timeA = 6.38 * orient(reinforcementBot, dest)
    command = str(reinforcementBot[0]) + ",0," + str(timeA)
    # SEND TO SERIAL
    command = str(reinforcementBot[0]) + ",1,0"
    # SEND TO SERIAL
    return availablebots
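
The `#SEND TO SERIAL` steps were never completed. As a sketch of how they could be tested without hardware, the example below builds the same `id,mode,duration` strings and writes them to any object that has a `write(bytes)` method. The helper names `format_command` and `send_command` and the trailing newline are assumptions; with pyserial, the port would be a `serial.Serial` instance instead of the in-memory buffer used here.

```python
import io

def format_command(bot_id, mode, duration):
    """Build the 'id,mode,duration' string used in initiateCall.

    The trailing newline is an assumption, added so the receiver can
    find the end of each message.
    """
    return f"{bot_id},{mode},{duration}\n"

def send_command(port, bot_id, mode, duration):
    """Write one command to any object with a write(bytes) method."""
    payload = format_command(bot_id, mode, duration).encode("ascii")
    port.write(payload)
    return payload

# Dry run against an in-memory buffer instead of a real serial port.
fake_port = io.BytesIO()
send_command(fake_port, 2, 0, 3.19)
send_command(fake_port, 2, 1, 120)
print(fake_port.getvalue())
```

Testing the message format this way separates mistakes in the strings from problems with the serial link itself.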

Resetting the bot positions:

import math

def reset(robots):
    # robots: the current [id, x, y, ...] of each bot.
    # start: a list with the [id, x, y, ...] starting position of each bot,
    # assumed to be defined at module level elsewhere in the project.
    # Returns one [id, dx, dy, angle] entry per bot: the vector back to its
    # starting position and the heading of that vector in radians.
    moves = []
    for i in range(3):  # for each bot
        bot_id = robots[i][0]
        dx = start[i][1] - robots[i][1]  # vector from the current position to the start
        dy = start[i][2] - robots[i][2]
        # math.atan2 handles every quadrant and dx == 0, so it replaces the
        # manual tan and sign checks; the modulo maps the result into [0, 2*pi),
        # and a zero vector gives angle 0
        angle = math.atan2(dy, dx) % (2 * math.pi)
        moves.append([bot_id, dx, dy, angle])
    return moves

These functions required some coding skill, and testing and combining them took time. However, we couldn’t get serial communication to work properly, and with limited time we stopped after a few attempts. The project was also too big to finish in two weeks. As future improvements, we hope to get serial communication working and to test whether the whole program works properly.

Biology Observation

Process:

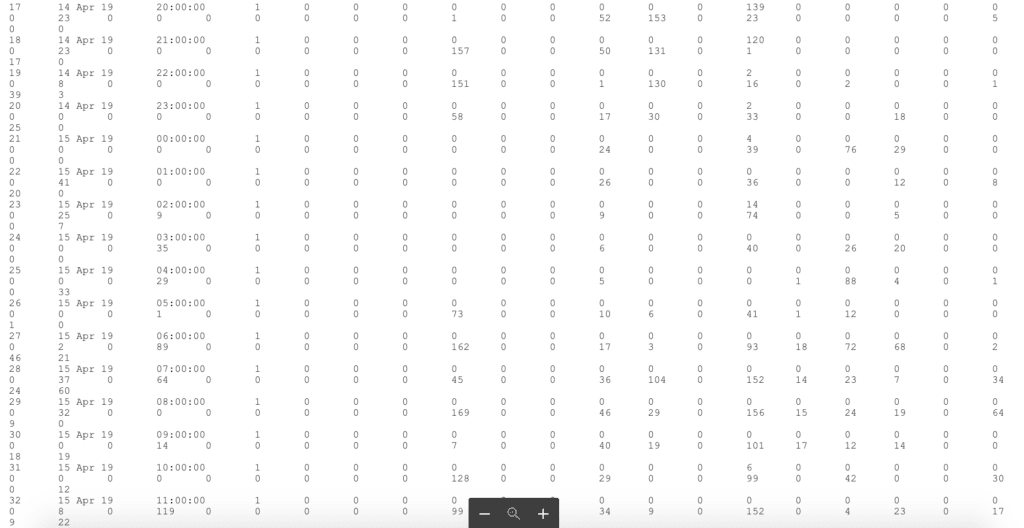

We injected CO2 to immobilize the flies. Once they were all temporarily immobilized, we moved them to the fly pad and determined their sex under a microscope. Then we selected 16 males of each of the two types, put each fly into a vial with food, covered the vials with cotton, labeled them and put them into the monitor.

Results:

The interpretation rule:

Column 1. Index at reading (from 1)

Column 2. Date of reading

Column 3. Time of reading. Start from line 13 (16:00:00); from this time on, all monitors are read once every hour.

Column 4. Monitor status. 1 = valid data received.

Columns 5 to 10. Not used in this experiment.

Column 11. Channel 1

Column 12. Channel 2

…

Column 42. Channel 32.
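
The column layout above can be read with a few lines of Python. This is a sketch under assumptions: it supposes the monitor file is tab-separated, the function name `parse_monitor_line` is hypothetical, and the sample row is made up for illustration rather than taken from our data.

```python
def parse_monitor_line(line):
    """Split one monitor row into the fields used in this experiment.

    Assumes tab-separated columns in the order listed above.
    """
    cols = line.rstrip("\n").split("\t")
    if len(cols) < 42:
        raise ValueError(f"expected 42 columns, got {len(cols)}")
    return {
        "index": int(cols[0]),                      # column 1
        "date": cols[1],                            # column 2
        "time": cols[2],                            # column 3
        "valid": cols[3].strip() == "1",            # column 4
        "channels": [int(c) for c in cols[10:42]],  # columns 11-42 = channels 1-32
    }

# Made-up row for illustration only, not data from our experiment.
sample = "\t".join(
    ["13", "1 Jan 00", "16:00:00", "1"] + ["0"] * 6 + [str(n) for n in range(32)]
)
row = parse_monitor_line(sample)
print(row["time"], row["valid"], len(row["channels"]))
```

Rows whose status is not 1 could then be skipped before looking at the hourly counts per channel.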

Reflection:

It was interesting to experiment with and observe fly behavior. In our experiments, most flies followed the circadian rhythm. Some flies did not, possibly because they died as a result of mistakes we made during the experiment.

NOC Final Project

Description:

Dreamwalker is an interactive dance performance between a human and computer graphics that explores whether computer graphics can replace a human in a duet. My experiences as both an IMA student and a dancer inspired me to observe the similarities between human movement and computer graphics.

This piece applies four different interactions using three different projectors to create the illusion of someone you lost in the past coming back to you in a dream.

The interaction between a human body and a virtual body is also meant to make the audience consider: how can you tell if something is real or not?

Visuals:

For the visuals, I drew on concepts from Nature of Code. The piece starts with dots moving around. When the dancer wakes up, the graphics become aware, begin to take shape, and finally grow into a human being.

Non-human visuals:

I used autonomous agents and a flow field to create the non-human shapes. With a Kinect tracking the dancer, the graphics follow her when she moves to the ground. I also used sin and cos functions to mimic the movement of legs.
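
To illustrate the idea of an autonomous agent following a flow field, here is a minimal Python sketch. It is not the code from the performance: the names `make_field` and `Agent`, the grid size, and the speed and force limits are all assumptions, and the field is built from sin and cos instead of noise to keep the example free of dependencies.

```python
import math

def make_field(cols, rows):
    """Grid of unit vectors whose angle varies smoothly across the grid."""
    field = []
    for r in range(rows):
        row = []
        for c in range(cols):
            theta = math.sin(c * 0.3) + math.cos(r * 0.3)
            row.append((math.cos(theta), math.sin(theta)))
        field.append(row)
    return field

class Agent:
    def __init__(self, x, y, max_speed=2.0, max_force=0.1):
        self.x, self.y = x, y
        self.vx, self.vy = 0.0, 0.0
        self.max_speed, self.max_force = max_speed, max_force

    def follow(self, field, cell):
        """Steer toward the field vector under the agent (steering = desired - velocity)."""
        rows, cols = len(field), len(field[0])
        c = min(max(int(self.x // cell), 0), cols - 1)  # clamp to the grid
        r = min(max(int(self.y // cell), 0), rows - 1)
        fx, fy = field[r][c]
        sx = fx * self.max_speed - self.vx
        sy = fy * self.max_speed - self.vy
        mag = math.hypot(sx, sy)
        if mag > self.max_force:  # limit the steering force
            sx, sy = sx / mag * self.max_force, sy / mag * self.max_force
        self.vx += sx
        self.vy += sy
        speed = math.hypot(self.vx, self.vy)
        if speed > self.max_speed:  # limit the speed
            self.vx = self.vx / speed * self.max_speed
            self.vy = self.vy / speed * self.max_speed
        self.x += self.vx
        self.y += self.vy

field = make_field(20, 20)
agent = Agent(100.0, 100.0)
for _ in range(50):
    agent.follow(field, cell=10)
print(round(agent.x, 1), round(agent.y, 1))
```

Because the steering force is small compared with the maximum speed, the agent turns gradually instead of snapping to the field direction, which is what gives the motion its organic look.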

Human Shapes:

I used motion capture to record the movements and used noise to draw the human skeleton shapes. I also played with opacity when the virtual dancer turns.

Presentation:

https://docs.google.com/presentation/d/1aEQu5dnVH2e_3doHIWDBPLQejHqynlH24GERCwZAfPE/edit?usp=sharing

Final Video Documentation: