This semester’s journey has finally come to an end. With the final project done, I am writing this blog post to record the process of the whole project and to reflect on what we achieved and what could still be improved.

Part I. CONCEPTION AND DESIGN

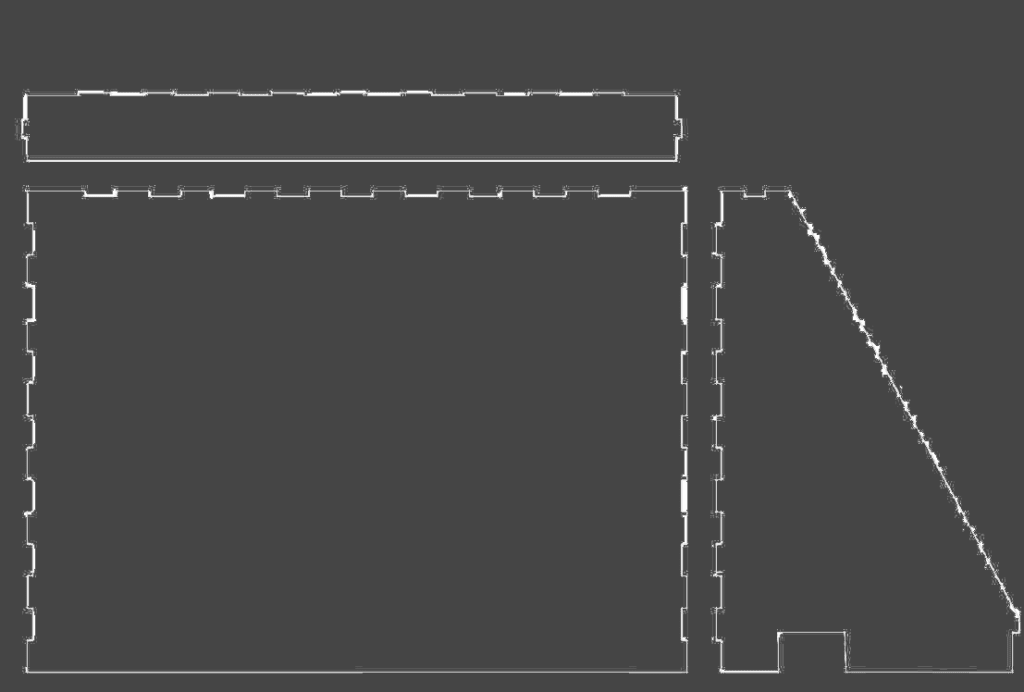

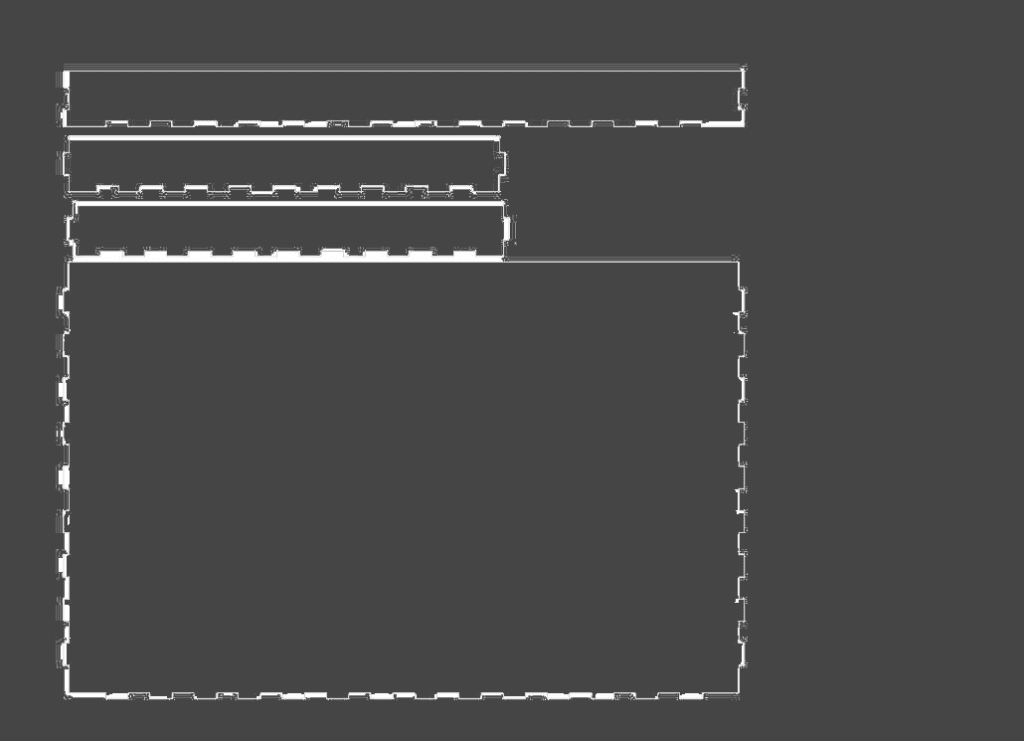

When we started thinking about our final project, we decided that the experience we wanted to create should involve body interaction rather than just pressing keys and buttons. Since Joseph and I are both gamers, we decided to build a game controlled by body movement. We chose a retro aircraft war game because it is a classic and easy to understand. We thought it would be more interesting, and a little more difficult, if users could open their arms and steer the aircraft by tilting their body left and right, just like the way an aircraft banks. So we decided to use an accelerometer to detect the user's motion and map it into the game. We also felt that a body interaction game should get rid of touching the computer altogether, so that users do not have to press keys or move the mouse while wearing devices with long cables. For that reason, we made all the menus and selections controllable by body interaction too.

On the material side, since we modelled a retro game, we also wanted to recreate an arcade game box to bring back some of that retro feeling. We designed a box that fits the test computer and laser cut it from wood planks. To be frank, a projector might simply have been a better choice, since interaction that involves the whole body should be displayed on a large screen. Using a computer feels unbalanced, because the user has to stare at a small screen while standing some distance away from it. We did not think of this at the time, and it is definitely something worth improving.

For the wearable devices, there were several options, such as gloves, bands, and even clothes. In the end we chose bands, worn just like a watch. For one thing, a light band does not add any unnecessary weight to the device. For another, a band keeps the sensor in a consistent position, so its readings are as reliable as possible. Since most people wear a watch the same way, detecting the motion becomes much easier.

Part II. FABRICATION AND PRODUCTION

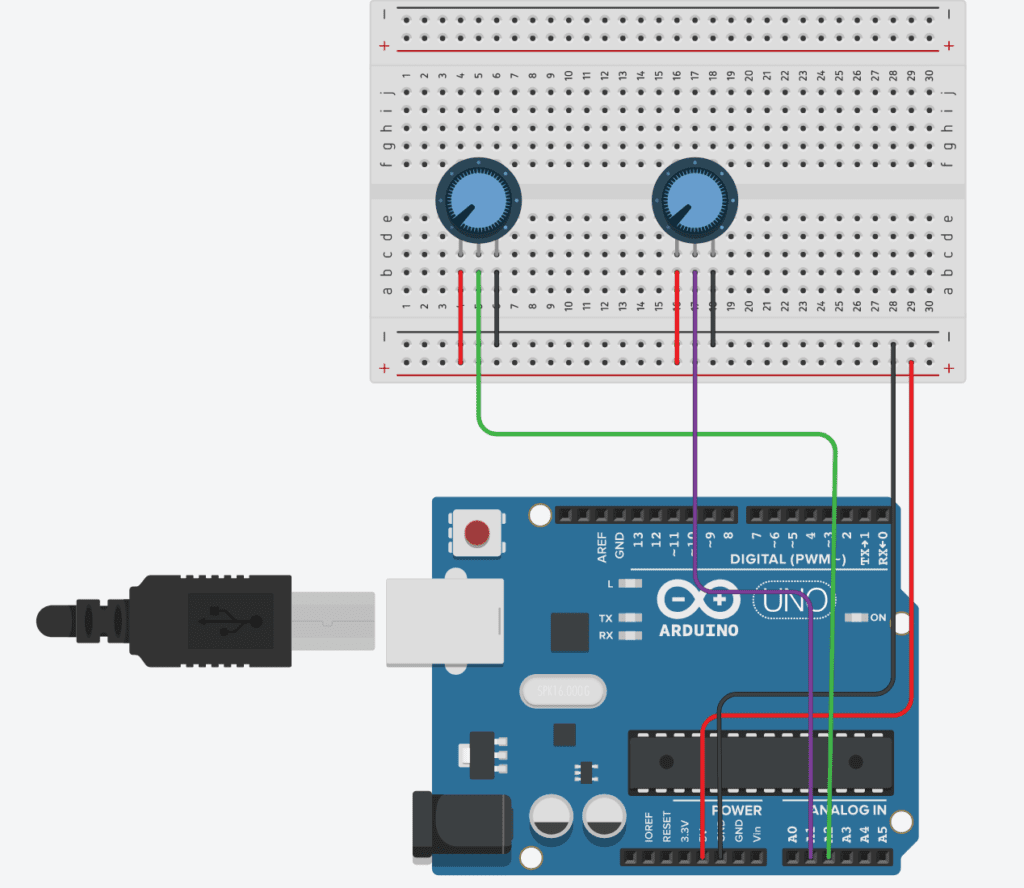

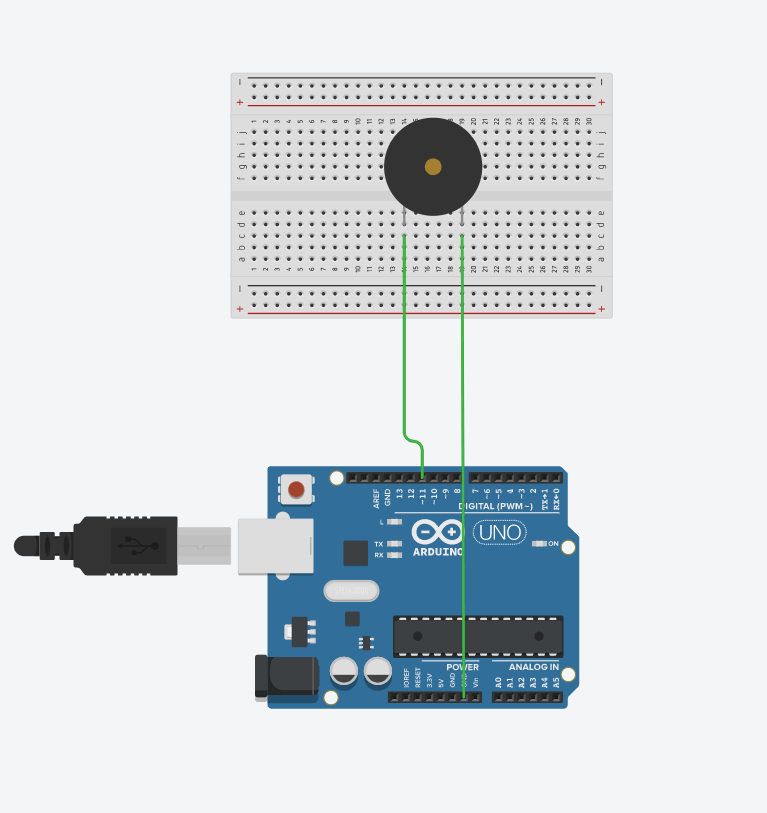

During the process we achieved a lot of things we had never done before, but I have to say that we also ran into difficulties that were completely beyond what we had imagined. I would like to start with the successes. The first is the basic game itself and the aircraft control. The basic components of the game are audio, images, and game logic. The audio and images were relatively easy to handle. The game logic, which includes collision detection, scoring and levels, and multiple enemy types, took more time. For collision detection, we set a radius for each enemy aircraft and adjusted it until the hits felt as accurate as possible. For the level system, we let the aircraft change its bullet images and the number of bullets according to the current score. None of this was difficult, but it was time-consuming.

For the control part, we used serial communication between Arduino and Processing. One problem was that the accelerometer is quite sensitive, which made the aircraft flicker on the screen. Using an accelerometer also means that when the user moves violently, the acceleration value may fall outside the range we feed to the map function. We therefore defined a threshold: when the accelerometer value changes only slightly, no signal is sent, and when the value changes drastically, we let the aircraft move slowly and gradually until it reaches the point where the user wants it to be. All of this works invisibly behind the screen, but it took a lot of time and effort to tune and choose threshold values that keep the aircraft stable and give a better user experience.

Another important achievement is the body-controlled cursor system. The user moves the cursor on the screen by raising and lowering an arm. This system is what makes our ideal of a control mode free of keyboard and mouse come true.
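The filtering described above for the aircraft control (ignore tiny changes, clamp extreme readings, then approach the new position gradually) could look roughly like the following. This is a minimal Python sketch, not the project's Arduino and Processing code. The class name `TiltFilter`, the helper names, the threshold values, and the screen width are all assumptions made for illustration.

```python
def constrain(value, low, high):
    """Clamp value into [low, high], like Arduino's constrain()."""
    return max(low, min(high, value))


def map_range(value, in_low, in_high, out_low, out_high):
    """Linearly rescale value from one range to another, like map()."""
    return out_low + (value - in_low) * (out_high - out_low) / (in_high - in_low)


class TiltFilter:
    """Turns noisy tilt readings into a stable horizontal position.

    All default values are illustrative assumptions, not tuned numbers.
    """

    def __init__(self, dead_band=0.05, max_step=8.0,
                 accel_limit=1.0, screen_width=600):
        self.dead_band = dead_band        # smaller changes are ignored
        self.max_step = max_step          # max pixels moved per update
        self.accel_limit = accel_limit    # readings are clamped to +/- this
        self.screen_width = screen_width
        self.last_reading = 0.0           # last reading that was accepted
        self.x = screen_width / 2         # aircraft starts in the middle

    def update(self, reading):
        # 1. Dead band: keep the old reading if the change is tiny.
        if abs(reading - self.last_reading) >= self.dead_band:
            self.last_reading = reading
        # 2. Clamp so a violent movement cannot leave the mapped range.
        clamped = constrain(self.last_reading,
                            -self.accel_limit, self.accel_limit)
        target = map_range(clamped, -self.accel_limit, self.accel_limit,
                           0, self.screen_width)
        # 3. Move toward the target by a limited step, not all at once.
        self.x += constrain(target - self.x, -self.max_step, self.max_step)
        return self.x
```

Calling `update()` once per frame with the latest reading keeps the aircraft still while the arm only trembles, and makes it glide to the new position after a large, sudden tilt.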
To be specific, the mechanism works like this: the user moves the cursor with their body, keeps it on an item for three seconds, and boom, the item is selected. The dwell time, in our case, is three seconds. It is there to make sure users only enter the item they actually want. To give users clear feedback, we needed an indicator that tells them the system is working and there is nothing to worry about. We use an empty circle that fills in by a third every second to show the cursor status. Once the circle is complete, the system selects the item the cursor is resting on. This mechanism is also user friendly, because users do not have to move and click while dragging long cables. Besides that, we have the well-cut case, which was made entirely by Joseph. I know it is not easy to make those finger joints between boards, especially for a box that is not rectangular. But he did it in the end, making the computer look like a small arcade machine. I would like to express my gratitude to him here. I believe all of these achievements are things we can be proud of.
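The three-second dwell selection and its circle indicator can be sketched as a small timer. Again, this is only an illustrative Python sketch under assumptions: the class name `DwellSelector`, the item names, and the way time is passed in are hypothetical and are not taken from the project code.

```python
import math


class DwellSelector:
    """Selects a menu item once the cursor has rested on it long enough."""

    def __init__(self, dwell_seconds=3.0):
        self.dwell_seconds = dwell_seconds
        self.current_item = None   # item the cursor is resting on
        self.entered_at = 0.0      # time the cursor arrived on that item

    def update(self, hovered_item, now):
        """Call once per frame.

        hovered_item: the item under the cursor, or None.
        now: current time in seconds.
        Returns (progress, selected_item). progress is the fraction of
        the circle to draw; selected_item is None until the dwell ends.
        """
        # Moving to a different item (or off all items) restarts the timer.
        if hovered_item != self.current_item:
            self.current_item = hovered_item
            self.entered_at = now
        if hovered_item is None:
            return 0.0, None

        elapsed = now - self.entered_at
        if elapsed >= self.dwell_seconds:
            # Restart the timer so the item is not re-selected every frame.
            self.entered_at = now
            return 1.0, hovered_item

        # The circle fills in whole-second steps: a third per second
        # when dwell_seconds is 3.
        progress = math.floor(elapsed) / self.dwell_seconds
        return progress, None
```

Passing the time in as an argument, instead of reading a clock inside the class, makes the timer easy to check without actually waiting three seconds.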

Next come the difficulties. The biggest one was the I2C communication. We planned to have a two-player mode, which obviously requires two sensors. However, our two accelerometers have the same I2C address. It is basically impossible (or at least impossible at my level) to talk to both of them without an I2C shield or a multiplexer on an Arduino that has only one pair of SDA and SCL pins. We tried different approaches but made no progress. This is why we showed up at the user test session with only one sensor.

Speaking of the user test, the problem with using one sensor showed up immediately. Users controlled the game with only one arm, which is not what we had expected. Using one arm does not feel like flying an aircraft at all. In addition, some users pointed out that the instructions could be clearer and that more ways of playing could be added. Still, most of the feedback was positive. A lot of users thought the game was cool and said they liked it.

User Test Session Videos

After the user test session, we made improvements based on the feedback. First, the sensor problem: we finally chose to use two Arduino boards with one sensor on each. To make use of the second sensor, we developed an advanced mode in which the user also controls the aircraft vertically. This mode is extremely difficult, because it requires real coordination between the two arms, which do not move in the same way. But at least, with two sensors, users started to use both arms, which creates the feeling of flying. We also rewrote our instructions to make them as clear as possible. These improvements were quite effective, since a lot of users enjoyed our game at the IMA show.

User Playing During IMA Show

Part III. CONCLUSION

Let me reflect back on our definition of interaction. We defined it as a highly user-involving body interaction, and I think our project aligns with that as closely as we could manage. To keep users concentrated and involved, we used a game as the carrier of the interaction. To make it a body interaction, we modelled a motion-control experience. The users seemed to enjoy it. They played with their arms open, trying their best to hit every enemy plane. Some users tried the advanced mode, alone or together with friends. It is difficult, but they played with smiles on their faces. We developed a leaderboard, and users really competed with each other at the IMA show. A lot of users took pictures or recorded videos of themselves enjoying the game. One user who reached the highest score (a really high score) took a photo of the leaderboard. All of this makes us happy.

But this is not a perfect project. There are flaws that we need to fix. Using two simple sensors is definitely not the best approach, and we will try to use Kinect in future projects if possible. We will also try to optimize the code so that the game runs more smoothly. We could make this game complete rather than merely playable, as it is now. Moreover, we could add more new elements to the game, or adapt the body control concept to another application to create a whole new experience. On this point, though, I still want to say that, for me, being new does not have to mean a completely new way of controlling. Applying the body control idea to this game is new because not many people have tried it and not many players have experienced it. It is because it is new that a lot of players enjoyed our game. Keeping the idea of being new in mind is always a good and enlightening principle in any interactive development process. We will hold on to this idea and try to do better in the future.

Finally, to wrap the whole thing up: to make a game is to make players enjoy it. That is why we chose to make a game, and it is the ultimate goal of this project. That is the significance of what we are doing. The most important lesson from this project, or even from this class, is to always keep trying. A lot of our successes came out of accidental trials. So keep moving, no matter what the failure is.

Thank you to the best professor, Inmi, to my partner Joseph, to all my classmates, and to everyone reading this page.