Instructor: Rudi

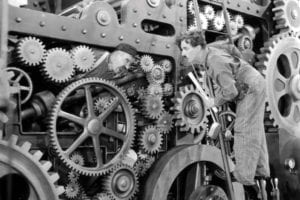

The concept of my final project changed several times over the course of production and testing. Initially, I wanted to build a wearable device that visualizes users’ anger as smoke, imitating the way anger is depicted in cartoons. After several adjustments, the concept for the final project, Focus Helmet, became fairly simple: visualizing the user’s level of focus as smoke. The idea of linking focus to smoke comes from comparing the human brain to an engine or machine. The more input an engine receives, the more consistently it generates smoke, which makes that input visible. It also reflects a larger problem of modern society: we see ourselves as individuals with freedom of choice and will, yet we are powerlessly subjected to the wheels of the economy. Society requires us, by various means, to stay focused on our personal input, but rarely asks whether we want to. The recent societal issue of “996” is a direct example of this problem. Just as in Chaplin’s Modern Times, workers become almost integrated into the machines as parts of them.

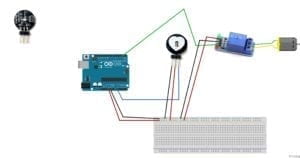

I started the project with a pulse sensor, a relay, and a 5V humidifier. I purchased all the parts and jumped straight into building. At the time of user testing, the circuit I had built still used the pulse sensor.

However, because of its raw look, users could not tell how to use it beyond putting the sensor on. More importantly, a heartbeat changes comparatively slowly as emotions change, so the prototype could track the pulse but could not visualize changes in emotion effectively. Thus, I decided to replace the pulse sensor with a brain wave sensor, which is more effective at turning changes in the mind into data. With the NeuroSky brain wave sensor I purchased online, I was able to move the project forward. Given the complexity of the brain wave sensor, replacing the pulse sensor with it caused a lot of difficulties. I struggled with nearly every step, from connecting it to the Arduino and writing the Arduino code to analyzing the raw data. The data collected from the brain wave sensor is fairly unstable and varies dramatically. Eventually, I mapped it into a range that sufficiently reflects an individual’s level of focus, and had the humidifier react to it. On the Processing side, I added a real-time graph that presents the raw data from the brain wave sensor. Furthermore, to make the project more presentable, I attached the whole device to a helmet and stripped the original humidifier down into a smaller unit attached to the side of the helmet. In the end, the “focus helmet” detects the user’s level of concentration and generates smoke when the user is consistently focused.
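The mapping and the "consistently focused" trigger described above could be sketched roughly as follows. This is not the code that ran on the helmet, only a minimal illustration under stated assumptions: the names `mapToFocus` and `FocusGate`, the 0 to 100 focus scale, the smoothing factor, the threshold, and the number of samples to hold are all hypothetical, and the sensor reading itself is left out. The logic uses no Arduino-specific calls, so it should also fit inside a sketch.

```cpp
// Hypothetical helper: clamp an unstable raw reading into [inMin, inMax]
// and rescale it linearly to a 0..100 focus value.
int mapToFocus(long raw, long inMin, long inMax) {
    if (raw < inMin) raw = inMin;
    if (raw > inMax) raw = inMax;
    return static_cast<int>((raw - inMin) * 100 / (inMax - inMin));
}

// Hypothetical gate: smooths the focus value with an exponential moving
// average and reports "on" only after the smoothed value has stayed at or
// above the threshold for holdSamples consecutive updates.
class FocusGate {
public:
    FocusGate(int threshold, int holdSamples, float alpha)
        : threshold_(threshold), holdSamples_(holdSamples), alpha_(alpha) {}

    // Feed one 0..100 focus value; returns true when the relay that
    // powers the humidifier should be switched on.
    bool update(int focus) {
        if (primed_) {
            smoothed_ = alpha_ * focus + (1.0f - alpha_) * smoothed_;
        } else {
            smoothed_ = static_cast<float>(focus);  // first sample seeds the average
            primed_ = true;
        }
        if (smoothed_ >= threshold_) {
            if (streak_ < holdSamples_) ++streak_;  // cap to avoid overflow
        } else {
            streak_ = 0;  // any dip below the threshold resets the streak
        }
        return streak_ >= holdSamples_;
    }

    float smoothed() const { return smoothed_; }

private:
    int threshold_;
    int holdSamples_;
    float alpha_;
    float smoothed_ = 0.0f;
    bool primed_ = false;
    int streak_ = 0;
};
```

In a sketch, each new reading would be passed through `mapToFocus` and then `update`, and the boolean result written to the relay pin. Requiring a streak of samples rather than a single high value is one way to keep the humidifier from flickering on every spike in the unstable data.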

My project, unlike most of my peers’, didn’t involve much “behavioral” interaction between two actors, but rather focused on the individual sphere. As I wrote in previous posts, I believe one of the most important elements of interaction is the process of translation, translating a message from one actor to another, and I have always believed that technology can strengthen that translation. By visualizing focus, a mental state that is not normally visible, my final project aims to reflect a bigger problem: we are not born to concentrate on things, especially not things we don’t enjoy. What is the difference between machines and us if we keep concentrating on whatever we are asked to?