This assignment is an exercise in training a CIFAR-10 CNN on our own machines. Here is a complete log of my attempt.

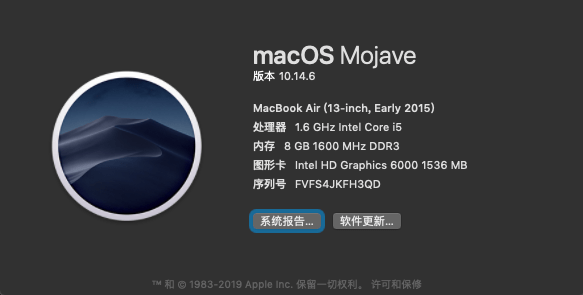

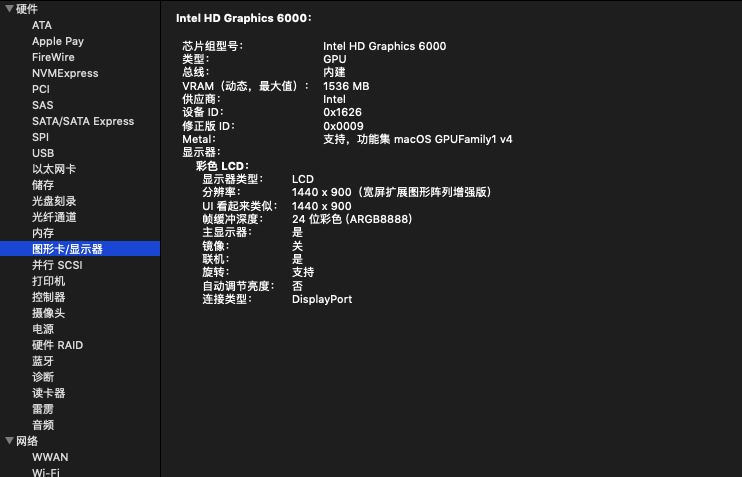

Machine Specs

I am training on a MacBook Air (early 2015) running macOS Mojave 10.14.6, with a 1.6 GHz Intel Core i5 CPU.

*This machine is equipped with an Intel HD Graphics 6000 GPU, but I don’t believe it meets the bar for our training tasks, so I chose to run the training on the CPU.

Training Setup

Total Time Spent

Runtime Performance & Outcome

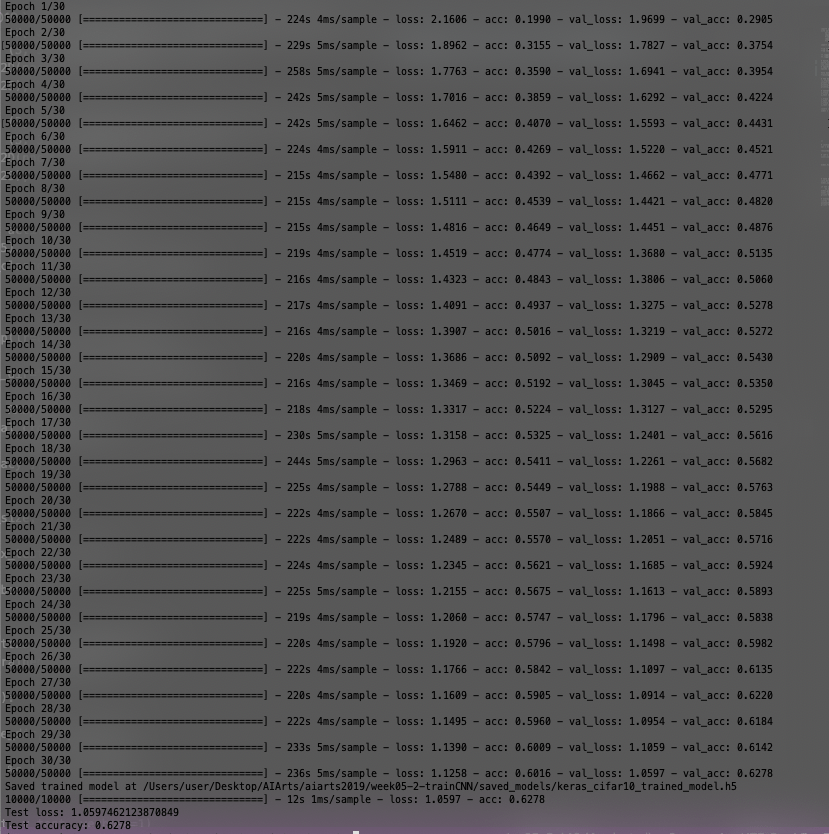

Final Outcome:

Reflection

In this training task, I adopted a relatively large epoch count and batch size, because I was really curious what the training performance would be like for a medium-weight task on my machine.

Surprisingly, downloading the data took a very long time, probably because of the dataset’s size and my network conditions.

Training also took a considerably long time, but it was actually a lot better than I’d expected, given the number of epochs and the batch size I chose. Training took approximately 4 to 5 ms/sample, which works out to around 230 s/epoch, since each epoch covers 50,000 samples.
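As a quick sanity check on that arithmetic, here is a minimal sketch that converts the per-sample time into a per-epoch time. The function name `epoch_seconds` is my own, and the total at the end is only an estimate that assumes every one of the 30 epochs took about 230 s; it is not a measured figure.

```python
# Convert a per-sample training time into a per-epoch time.
# `epoch_seconds` is a hypothetical helper written for this check.

def epoch_seconds(ms_per_sample: float, samples_per_epoch: int = 50_000) -> float:
    """Seconds needed for one epoch at the given per-sample cost."""
    return ms_per_sample * samples_per_epoch / 1000.0

# Bounds implied by the reported 4 to 5 ms/sample.
low = epoch_seconds(4.0)
high = epoch_seconds(5.0)

# Per-sample cost implied by the reported ~230 s/epoch.
implied_ms = 230.0 / 50_000 * 1000.0

# Rough total, ASSUMING all 30 epochs ran at ~230 s each.
total_minutes = 230.0 * 30 / 60.0

print(f"per-epoch range: {low:.0f} s to {high:.0f} s")
print(f"implied cost:    {implied_ms:.1f} ms/sample")
print(f"estimated total: {total_minutes:.0f} min for 30 epochs")
```

The observed 230 s/epoch sits inside the 200 to 250 s range, which corresponds to roughly 4.6 ms/sample.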

As the training went through more and more epochs, the loss value steadily decreased, from 2.1606 in the first epoch to 1.2— in the 30th epoch, dropping by between 0.01 and 0.12 per epoch. Over the same period, the accuracy increased steadily with each epoch, going from 0.1990 to 0.6278 over 30 epochs. Within these 30 epochs, at least, more training consistently produced better results. It’s really fun to watch how these statistics change over time, and I’m really curious to see where the limit lies for this type of training in terms of loss and accuracy.
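To put the accuracy trend in perspective, here is a small sketch that computes the average gain per epoch from the two endpoint values reported above. It uses only the first and last accuracy figures, so it says nothing about how evenly the gains were spread across epochs; the variable names are my own.

```python
# Average accuracy gain per epoch, using only the reported endpoints.
first_acc = 0.1990   # accuracy after epoch 1
last_acc = 0.6278    # accuracy after epoch 30
epochs = 30

total_gain = last_acc - first_acc
# There are (epochs - 1) steps between the first and the last epoch.
avg_gain_per_epoch = total_gain / (epochs - 1)

print(f"total gain:       {total_gain:.4f}")
print(f"average per step: {avg_gain_per_epoch:.4f}")
```

That comes to a little under 1.5 percentage points of accuracy per epoch on average.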