Introduction

Having looked through all the examples on ml5.js, I think that BodyPix is really interesting. According to the documentation, BodyPix is an open-source machine learning model whose main function is to classify the pixels of an image into two groups: those that represent a person and those that represent the background.

Experience

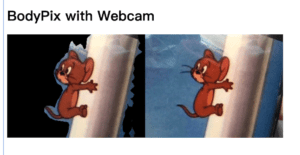

The way to use it is really simple. In my own test, the project used the webcam to capture live video of me as the input, and the output was the same video with the background turned black. After that I had an idea: if I used a picture of myself as the input, would BodyPix still work? The answer is yes. I then ran another experiment with an image of a cartoon mouse, and the result is as follows.
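As a side note on how the black background can be produced once the model has labeled each pixel, here is a minimal sketch. It does not call ml5.js or BodyPix at all. The function name `blackOutBackground` and the mask layout (one entry per pixel, 1 for person and 0 for background) are my own assumptions for illustration, and the real library output may be shaped differently.

```typescript
// Hypothetical helper (not part of ml5.js): paint every background pixel black.
// rgba:       flat RGBA pixel data, 4 bytes per pixel, like canvas ImageData.data
// personMask: assumed layout, one entry per pixel, 1 = person, 0 = background
function blackOutBackground(
  rgba: Uint8ClampedArray,
  personMask: Uint8Array
): Uint8ClampedArray {
  if (rgba.length !== personMask.length * 4) {
    throw new Error("mask must have exactly one entry per RGBA pixel");
  }
  // Copy the frame so the original input is left untouched.
  const out = new Uint8ClampedArray(rgba);
  for (let p = 0; p < personMask.length; p++) {
    if (personMask[p] === 0) {
      const i = p * 4;
      out[i] = 0;       // red
      out[i + 1] = 0;   // green
      out[i + 2] = 0;   // blue
      out[i + 3] = 255; // fully opaque
    }
  }
  return out;
}

// Two-pixel demo frame: a red pixel (person) and a green pixel (background).
const demoFrame = new Uint8ClampedArray([255, 0, 0, 255, 0, 255, 0, 255]);
const demoMask = new Uint8Array([1, 0]);
const blackedOut = blackOutBackground(demoFrame, demoMask);
console.log(blackedOut.join(", ")); // prints "255, 0, 0, 255, 0, 0, 0, 255"
```

The person pixel keeps its color and the background pixel becomes opaque black, which matches what I saw in the webcam output.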

Insight

I found that BodyPix recognized my face and hair well, but it was poor at recognizing my fingers. There was also an obvious gap between the outline of my figure and the background. The output was not smooth but intermittent, so it is not truly real-time. The accuracy of the result mainly depends on the model size and the output stride, but the size of the original image can also affect it. As TensorFlow says on Medium about the output stride, “The lower the value of the output stride the higher the accuracy but slower the speed.” About the model size, it says, “The larger the value, the larger the size of the layers, and more accurate the model at the cost of speed.”
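To get a feel for why a lower output stride gives a finer result, here is a rough sketch. The formula is an assumption on my part: it is the relationship TensorFlow gives for PoseNet, a related MobileNet-based model, and I have not confirmed that BodyPix computes its output size in exactly the same way. The function name `outputResolution` is hypothetical.

```typescript
// Assumed relationship, borrowed from TensorFlow's PoseNet write-up and not
// confirmed for BodyPix: resolution = (inputSize - 1) / outputStride + 1
type OutputStride = 8 | 16 | 32;

function outputResolution(inputSize: number, outputStride: OutputStride): number {
  return Math.floor((inputSize - 1) / outputStride) + 1;
}

// Compare how many grid cells per side the model would predict
// for a 225-pixel-wide input at each stride.
const strides: OutputStride[] = [8, 16, 32];
strides.forEach((stride) => {
  console.log("stride " + stride + " -> " + outputResolution(225, stride) + " cells per side");
});
// prints:
// stride 8 -> 29 cells per side
// stride 16 -> 15 cells per side
// stride 32 -> 8 cells per side
```

Under this assumption, a stride of 8 gives the model many more cells to work with than a stride of 32, which would explain both the sharper outline and the slower speed.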

Application

At first, after trying it, I had no idea what its significance was or how to put it into practice. It only reminded me of a possible way to automatically separate a selected object from its background in Photoshop. So I did some deeper research into the applications of BodyPix and found that it can be applied to augmented reality and to artistic effects on images or videos.

If the issues of accuracy and speed can be solved, I suppose it could be used as a tool for virtual fitting. I really hate changing clothes repeatedly in the fitting room, and different environmental conditions can make the same person and clothes look different. If we can use this technology to enhance augmented reality, things might change. Consumers could see what they look like in new clothes through AR without going to a fitting room or even the mall. BodyPix can also change the background of the image, so they could use it to change the lighting, color, or other elements and get a more complete impression.

References

https://medium.com/tensorflow/introducing-bodypix-real-time-person-segmentation-in-the-browser-with-tensorflow-js-f1948126c2a0

https://ml5js.org/reference/api-BodyPix/