Overview

At first I thought that the neural network was a work of bionics, meaning that it mainly mimicked how biological neurons work. But this was just a guess based on their similar names. After researching neural networks and biological neurons, along with their connections and differences, I have reached a new conclusion about their relationship. Neural networks were inspired by biological neurons, and at first researchers wanted to imitate the way biological neurons work and apply that method to machines. However, biological neural networks have evolved over millions of years and have reached a highly mature level, so it is impossible for machine learning to copy their method completely or to put it into practice. Therefore, in its later stages of development, the field created new ways to approach or even match the effect of biological neurons, but a neural network is essentially just a mathematical or computational model. That is to say, neural networks loosely model biological neurons, and the connection between the two has grown weaker and weaker.

General introduction

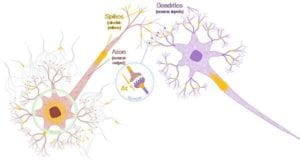

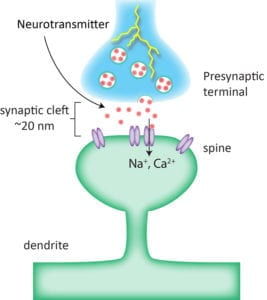

The most essential feature of a biological neuron is the nerve impulse, an electrical event produced by the neuron. Nerve impulses let neurons communicate with each other, process information and perform computation. When a neuron spikes, it releases a neurotransmitter, a chemical that crosses a synapse over a tiny distance and reaches other neurons. In this way, a single neuron can communicate with hundreds of other neurons.

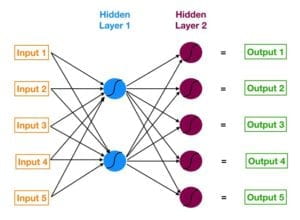

A neural network, in turn, is composed of connected artificial neurons. Each connection transmits a signal from one neuron to another and carries a weight that scales that signal. The neurons are organized into three kinds of layers: an input layer, hidden layers and an output layer. The input layer receives the raw input data. The hidden layers, made up of neurons and the links between them, sit between the input and output layers; the number of hidden layers and the number of neurons in each one are design choices rather than fixed values. The output layer presents the result after the input has been transmitted through and processed by the network. Generally speaking, the more neurons a network has, the more complex the nonlinear relationships it can represent, which can also make it more robust. Creating the model means training it: the weights of each layer are adjusted so that the network's outputs on the training samples move closer to the correct answers, and the back-propagation algorithm computes how much each weight should change.
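
To make the layer structure concrete, here is a minimal forward-pass sketch in plain Python. The layer sizes (2 inputs, 3 hidden neurons, 1 output), the tanh and sigmoid activations, and the helper names `dense`, `forward` and `init_params` are illustrative assumptions, not taken from the sources this post cites. Each neuron computes a weighted sum of its inputs plus a bias, and the hidden layer passes that sum through a nonlinear function.

```python
import math
import random

def dense(inputs, weights, biases):
    """Fully connected layer: each output neuron sums every weighted input plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def forward(x, params):
    """Input layer -> one hidden layer (tanh) -> output layer (sigmoid)."""
    w1, b1, w2, b2 = params
    hidden = [math.tanh(z) for z in dense(x, w1, b1)]  # nonlinear hidden activations
    output = [1.0 / (1.0 + math.exp(-z)) for z in dense(hidden, w2, b2)]
    return hidden, output

def init_params(n_in, n_hidden, n_out, seed=0):
    """Random starting weights (an assumption); training would later adjust them."""
    rng = random.Random(seed)
    w1 = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
    b1 = [0.0] * n_hidden
    w2 = [[rng.uniform(-1, 1) for _ in range(n_hidden)] for _ in range(n_out)]
    b2 = [0.0] * n_out
    return w1, b1, w2, b2

# Illustrative sizes (assumption): 2 inputs, 3 hidden neurons, 1 output.
params = init_params(n_in=2, n_hidden=3, n_out=1)
hidden, output = forward([0.5, -1.0], params)
print(len(hidden), len(output))  # 3 1
```

With random initial weights the output carries no meaning yet; only training, which adjusts `w1`, `b1`, `w2` and `b2`, makes it useful.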


Connections & Differences

Artificial neurons clearly model biological neurons only loosely, yet the way they communicate is similar. According to Wikipedia, both share the characteristics of nonlinearity, distribution, parallelization, local computation and adaptability.

However, connections among biological neurons are comparatively weak and sparse: a new connection forms only when an impulse is strong enough to make the neuron release neurotransmitter, and only small groups of neurons are strongly connected to each other. Artificial neurons, by contrast, are densely connected. Their connections are fixed by the network's architecture, and the network cannot create new connections by itself. Because artificial neuron layers are usually fully connected, the sparse wiring of biological neurons can only be simulated by setting some weights to 0, which mimics the absence of a connection between two neurons.
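
The zero-weight idea can be sketched by pairing a fully connected weight matrix with a 0/1 mask, so that a masked link contributes nothing regardless of the weight value stored there; its effective weight stays 0 even if the underlying weight changes during training. The mask, the weight values and the name `masked_dense` are hypothetical choices for illustration.

```python
def masked_dense(inputs, weights, mask, biases):
    """Fully connected layer where mask[i][j] == 0 removes the link from input j to neuron i."""
    return [sum(w * m * x for w, m, x in zip(w_row, m_row, inputs)) + b
            for w_row, m_row, b in zip(weights, mask, biases)]

# Hypothetical weights and mask: 3 inputs feeding 2 neurons.
weights = [[0.4, -0.7, 0.2],
           [0.9, 0.1, -0.3]]
mask = [[1, 0, 1],   # neuron 0 is not connected to input 1
        [0, 1, 1]]   # neuron 1 is not connected to input 0
biases = [0.0, 0.0]

a = masked_dense([1.0, 5.0, 2.0], weights, mask, biases)
b = masked_dense([1.0, -5.0, 2.0], weights, mask, biases)
print(a[0] == b[0])  # True: neuron 0 ignores input 1 entirely
```

Changing input 1 leaves neuron 0 untouched but still changes neuron 1, which is how a "missing connection" looks inside an otherwise fully connected layer.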

I also watched a really interesting video demonstrating the difference between the operating mechanisms of biological and artificial neurons. It uses a baby and candy as an example. When the baby sees the candy, an impulse causes the neurons associated with the mouth to connect to the neurons controlling the hands; the signal travels along these connected neurons and produces a response: the baby holds out a hand and gestures for the candy.

An ANN, by contrast, is already a complete system that has learned from countless examples in its training data what to do after seeing candy. Each time, it uses the back-propagation algorithm to compute corrections to its weights.
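
The learning-from-examples process can be sketched as a small back-propagation loop in plain Python. Everything concrete here is an assumption for illustration: a 2-3-1 network, the four XOR examples standing in for a training database, binary cross-entropy as the error measure, and plain gradient descent with a learning rate of 0.5. Back-propagation computes how the error changes with respect to every weight, and the gradient-descent step then applies the corrections.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def init_params(n_in=2, n_hidden=3, seed=1):
    """Hypothetical 2-3-1 network with random starting weights."""
    rng = random.Random(seed)
    return {
        "w1": [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)],
        "b1": [0.0] * n_hidden,
        "w2": [rng.uniform(-1, 1) for _ in range(n_hidden)],  # single output neuron
        "b2": 0.0,
    }

def forward(p, x):
    h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
         for row, b in zip(p["w1"], p["b1"])]
    y_hat = sigmoid(sum(w * hj for w, hj in zip(p["w2"], h)) + p["b2"])
    return h, y_hat

def loss(p, data):
    """Mean binary cross-entropy over the dataset."""
    total = 0.0
    for x, y in data:
        _, y_hat = forward(p, x)
        total -= y * math.log(y_hat) + (1 - y) * math.log(1 - y_hat)
    return total / len(data)

def backprop(p, data):
    """Return the gradient of the mean loss with respect to every parameter."""
    n_hidden = len(p["b1"])
    g = {"w1": [[0.0] * len(row) for row in p["w1"]], "b1": [0.0] * n_hidden,
         "w2": [0.0] * n_hidden, "b2": 0.0}
    for x, y in data:
        h, y_hat = forward(p, x)
        d_out = (y_hat - y) / len(data)  # dLoss/d(output pre-activation), sigmoid + cross-entropy
        g["b2"] += d_out
        for j in range(n_hidden):
            g["w2"][j] += d_out * h[j]
            d_hid = d_out * p["w2"][j] * (1 - h[j] ** 2)  # error sent back through tanh
            g["b1"][j] += d_hid
            for i, xi in enumerate(x):
                g["w1"][j][i] += d_hid * xi
    return g

def step(p, g, lr):
    """Gradient-descent correction: move each weight against its gradient."""
    for j, row in enumerate(p["w1"]):
        for i in range(len(row)):
            row[i] -= lr * g["w1"][j][i]
        p["b1"][j] -= lr * g["b1"][j]
        p["w2"][j] -= lr * g["w2"][j]
    p["b2"] -= lr * g["b2"]

# Hypothetical training data (XOR) and learning rate.
xor = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 0)]
params = init_params()
before = loss(params, xor)
for _ in range(3000):
    step(params, backprop(params, xor), lr=0.5)
after = loss(params, xor)
print(f"loss {before:.3f} -> {after:.3f}")
```

Comparing `before` and `after` shows the error shrinking as the corrections accumulate; a finite-difference check on any single weight is a simple way to confirm that the hand-written gradients are right.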

Conclusion

Therefore, as we can see, ANNs are inspired by biological neurons, but the connection between the two is loose. In the later stages of their development, ANNs abandoned the way biological neurons work and turned to statistical methods and algorithms to build models that receive, process and analyze information.

References:

https://towardsdatascience.com/the-differences-between-artificial-and-biological-neural-networks-a8b46db828b7

https://en.wikipedia.org/wiki/Artificial_neural_network#Learning

https://www.bilibili.com/video/av15997699?from=search&seid=4264978940427641437