Project Name: Music Accompaniment

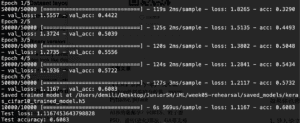

Project Description: Given lead-melody data as input, a Seq2Seq model generates an accompaniment melody that lines up beat for beat with the lead. The generated melody data is then converted into MIDI note and timing data and written to a new MIDI file. Finally, I use Logic Pro X to bounce the merged MIDI files to MP3.
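The description does not show how the generated melody data becomes a MIDI file. As a rough illustration only, the sketch below assumes each note is a hypothetical `(pitch, start_beat, duration_beats, velocity)` tuple and writes a format-1 Standard MIDI File with only the Python standard library. The lead melody and the accompaniment go on separate tracks and channels, which is one way to get a "merged" file. The function names (`encode_vlq`, `track_chunk`, `build_midi`) and the 480 ticks-per-beat resolution are illustrative choices, not this project's actual code.

```python
import struct


def encode_vlq(value: int) -> bytes:
    """Encode a non-negative int as a MIDI variable-length quantity (delta time)."""
    if value < 0:
        raise ValueError("delta time must be non-negative")
    out = [value & 0x7F]  # last byte has the high bit clear
    value >>= 7
    while value:
        out.append((value & 0x7F) | 0x80)  # earlier bytes set the continuation bit
        value >>= 7
    return bytes(reversed(out))


def track_chunk(notes, channel: int, ticks_per_beat: int) -> bytes:
    """Build one MTrk chunk.

    Assumption: each note is a hypothetical (pitch, start_beat, duration_beats,
    velocity) tuple; the real model output format is not given in this README.
    """
    events = []  # (absolute_tick, sort_key, raw_event_bytes)
    for pitch, start, duration, velocity in notes:
        if not (0 <= pitch <= 127 and 0 <= velocity <= 127):
            raise ValueError("pitch and velocity must be in 0..127")
        on_tick = round(start * ticks_per_beat)
        off_tick = round((start + duration) * ticks_per_beat)
        events.append((on_tick, 1, bytes([0x90 | channel, pitch, velocity])))  # note on
        events.append((off_tick, 0, bytes([0x80 | channel, pitch, 0])))        # note off
    # Sort by time; at equal ticks, note-offs (key 0) come before note-ons (key 1).
    events.sort(key=lambda e: (e[0], e[1]))

    data = bytearray()
    last_tick = 0
    for tick, _, raw in events:
        data += encode_vlq(tick - last_tick) + raw  # MIDI stores time as deltas
        last_tick = tick
    data += encode_vlq(0) + b"\xff\x2f\x00"  # End-of-track meta event
    return b"MTrk" + struct.pack(">I", len(data)) + bytes(data)


def build_midi(tracks, ticks_per_beat: int = 480) -> bytes:
    """Return a format-1 Standard MIDI File with one track (and channel) per note list."""
    if len(tracks) > 16:
        raise ValueError("this sketch maps each track to one of 16 MIDI channels")
    header = b"MThd" + struct.pack(">IHHH", 6, 1, len(tracks), ticks_per_beat)
    body = b"".join(
        track_chunk(notes, channel, ticks_per_beat)
        for channel, notes in enumerate(tracks)
    )
    return header + body
```

For example, `build_midi([lead_notes, accompaniment_notes])` returns bytes that can be saved with `open("merged.mid", "wb").write(...)` and then opened in a DAW such as Logic Pro X for bouncing. Keeping the lead and accompaniment on separate channels lets each part be assigned its own instrument there.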

Demo: