CONCEPTION AND DESIGN:

We originally wanted to create a VR experience in which the user feels as if they are physically interacting with the virtual world. I define interaction as a continuous conversation between several actors, so the experience was meant to encourage users to explore their own ways of interacting with a virtual forest. With that goal in mind, we borrowed a range of sensors, from pressure sensors to distance sensors. We decided to test all of them before choosing which ones to use, because the midterm project taught me that how well the sensors work largely determines the outcome. It is better to confirm first that a sensor works, meaning that its readings show a clear pattern that I can monitor for my purpose. We also considered the context of each interaction. For example, we later abandoned the sound sensor because it was hard to think of a context in which human sound would affect the environment.
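As a rough illustration of what "the sensor can clearly identify a pattern" could mean in practice, the sketch below checks whether readings taken in two conditions (for example, nothing in front of the sensor versus something in front of it) fall into ranges that do not overlap. The function names and the sample numbers are hypothetical and are not taken from our actual tests.

```python
def is_distinguishable(baseline, triggered, margin=0.0):
    """Return True if the two sets of readings do not overlap.

    `margin` is an optional safety gap required between the two ranges.
    """
    lo_a, hi_a = min(baseline), max(baseline)
    lo_b, hi_b = min(triggered), max(triggered)
    return hi_a + margin < lo_b or hi_b + margin < lo_a


def midpoint_threshold(baseline, triggered):
    """Return a threshold halfway between the two ranges, or None if they overlap."""
    if not is_distinguishable(baseline, triggered):
        return None
    if max(baseline) < min(triggered):
        return (max(baseline) + min(triggered)) / 2
    return (max(triggered) + min(baseline)) / 2


# Made-up readings, for illustration only.
idle_readings = [12, 15, 11]
active_readings = [80, 85, 90]
print(is_distinguishable(idle_readings, active_readings))
print(midpoint_threshold(idle_readings, active_readings))
```

A sensor whose two sets of readings overlap would fail this check, which is one simple way to decide early that it is not reliable enough to build an interaction on.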

FABRICATION AND PRODUCTION:

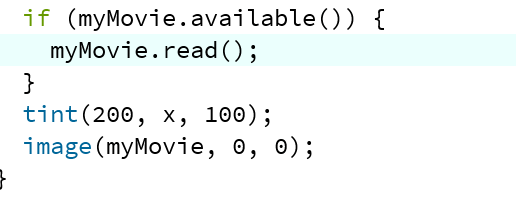

We finally chose the distance sensor and the color sensor as our main sensors. The idea was that as people with different intentions approached the forest, different effects would appear on the screen, making people aware of the effects of their interaction with the forest. We decided to create three kinds of costume, each symbolizing a human intention. For example, white clothes with plastic attached represented human-induced plastic pollution. We cut cardboard into several squares and painted them in different colors, such as green, red and white. Fortunately, the color sensor could differentiate between these colors. The user wearing the costume would be encouraged to approach the forest.

During the user testing session, we were told that the interaction was not obvious: users would not know that they had to run towards the box to trigger the effects. This was a tricky problem for us, and we pondered it for some time. Later, Professor Inmi told us that the box should be more relevant to what was happening on the screen. A thought suddenly hit me: make the box a physical representation of the forest. Since our box also had a hole on top, I thought of another way of interacting: instead of wearing a costume and approaching the box, the user could put elements into the box and see the corresponding elements on the screen. This completely replaced our original interaction of running towards the box, and our sensors were reduced to the color sensor alone.
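The color-based triggering described above can be sketched roughly as follows. This is only an illustration, not our actual project code: the reference RGB values, the distance cutoff and the effect names are assumptions. Only the pairing of white with plastic pollution comes from the description above, and the green and red entries are placeholders.

```python
# Hypothetical reference readings (R, G, B) for each painted square.
# Real values would come from calibrating the actual color sensor.
REFERENCE_COLORS = {
    "green": (60, 180, 70),
    "red": (200, 50, 50),
    "white": (240, 240, 240),
}

# Only the white / plastic pollution pairing is from the write-up;
# the other two effect names are placeholders.
EFFECTS = {
    "white": "plastic_pollution",
    "green": "placeholder_green_effect",
    "red": "placeholder_red_effect",
}

# Assumed cutoff: readings farther than this from every reference
# color are treated as "nothing recognizable in the box".
MAX_DISTANCE = 80.0


def classify_color(reading):
    """Return the name of the closest reference color, or None if none is close."""
    best_name, best_dist = None, float("inf")
    for name, ref in REFERENCE_COLORS.items():
        # Euclidean distance between the reading and the reference color.
        dist = sum((a - b) ** 2 for a, b in zip(reading, ref)) ** 0.5
        if dist < best_dist:
            best_name, best_dist = name, dist
    return best_name if best_dist <= MAX_DISTANCE else None


def effect_for(reading):
    """Map a raw (R, G, B) reading to the effect to show, or None."""
    return EFFECTS.get(classify_color(reading))


print(effect_for((235, 238, 230)))  # a near-white reading
print(effect_for((20, 20, 20)))     # a dark reading, e.g. an empty box
```

Matching against the nearest reference color, rather than testing for exact values, tolerates the small variations in lighting and paint that a real sensor would report.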

CONCLUSIONS:

I think our project, though it deviated considerably from our initial plan, realizes the VR effect in some way. By interacting with the physical forest, users can see effects on the screen, which connects the virtual and the real world. However, I think the interaction is not very engaging and is a little confusing. From the feedback, we learned that users found the act of throwing confusing: they felt compelled to throw something and then received an educational video. The action did not come from their own intuition and was therefore not engaging. The ideal version in my mind goes like this: the user is placed inside an immersive, tangible “forest” where they are encouraged to interact with it in any way they can imagine. For example, when they step on the grass, the grass may make sounds to inform the user of its existence. Or, when they approach a tree and touch it softly, the tree will vibrate slightly and produce a welcoming sound, but when the user goes too far and touches the tree in a less respectful, aggressive way, the tree will be unhappy. In this way the user will realize that nature also has its own feelings and is an equal being like us that needs to be treated with care.

Our physical forest: