You Know You Have Magic!

by Yuru Chen and Molly He

For my final, I’m working with Molly He. Together we created a “magic” drawing project. The basic concept is that the camera tracks a color we choose and shows on the canvas what the user draws with it. On top of that, a “Follow Me!” sign moves across the screen to tell the user which gesture to make to get the car to move.

Here is the demonstration of our project:

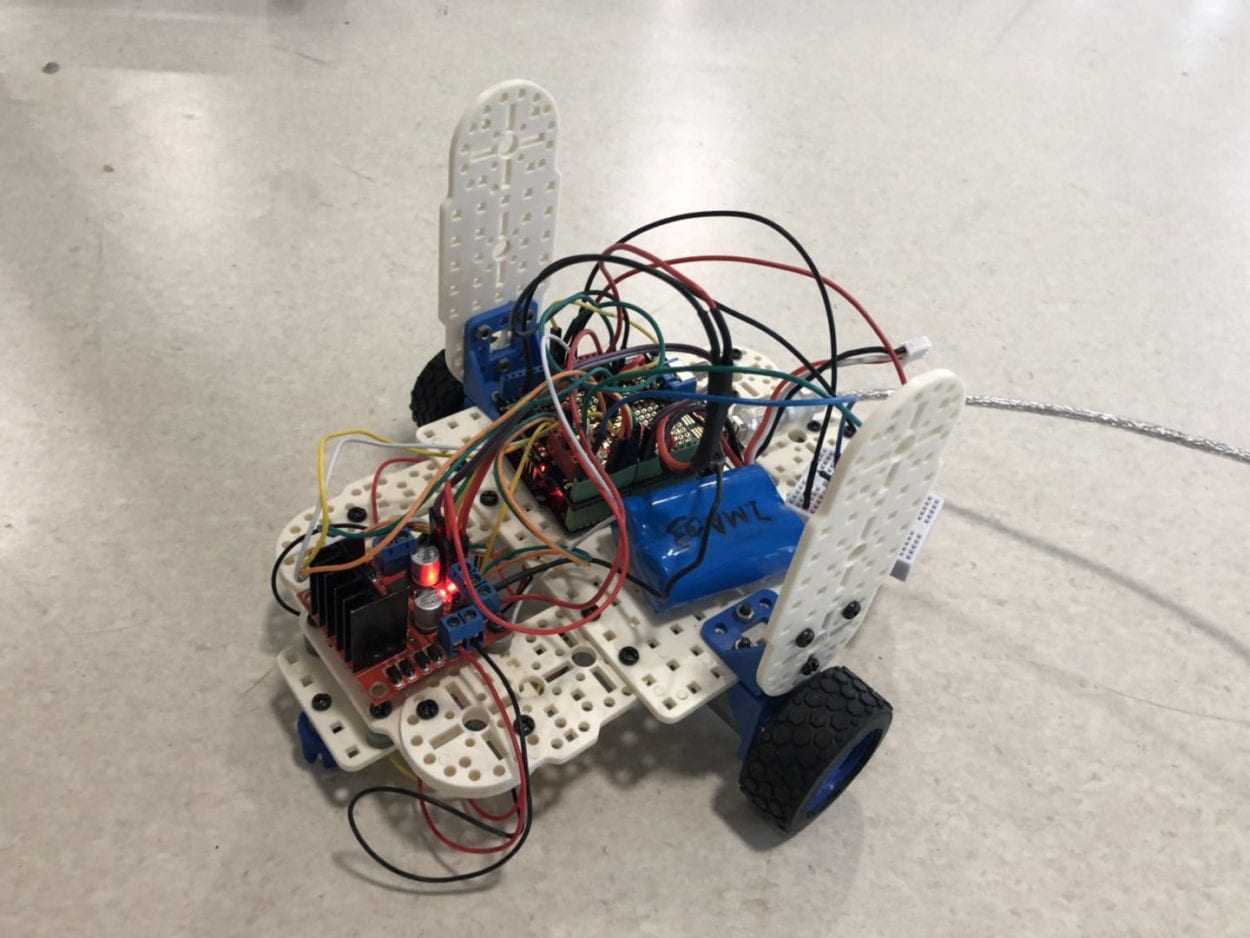

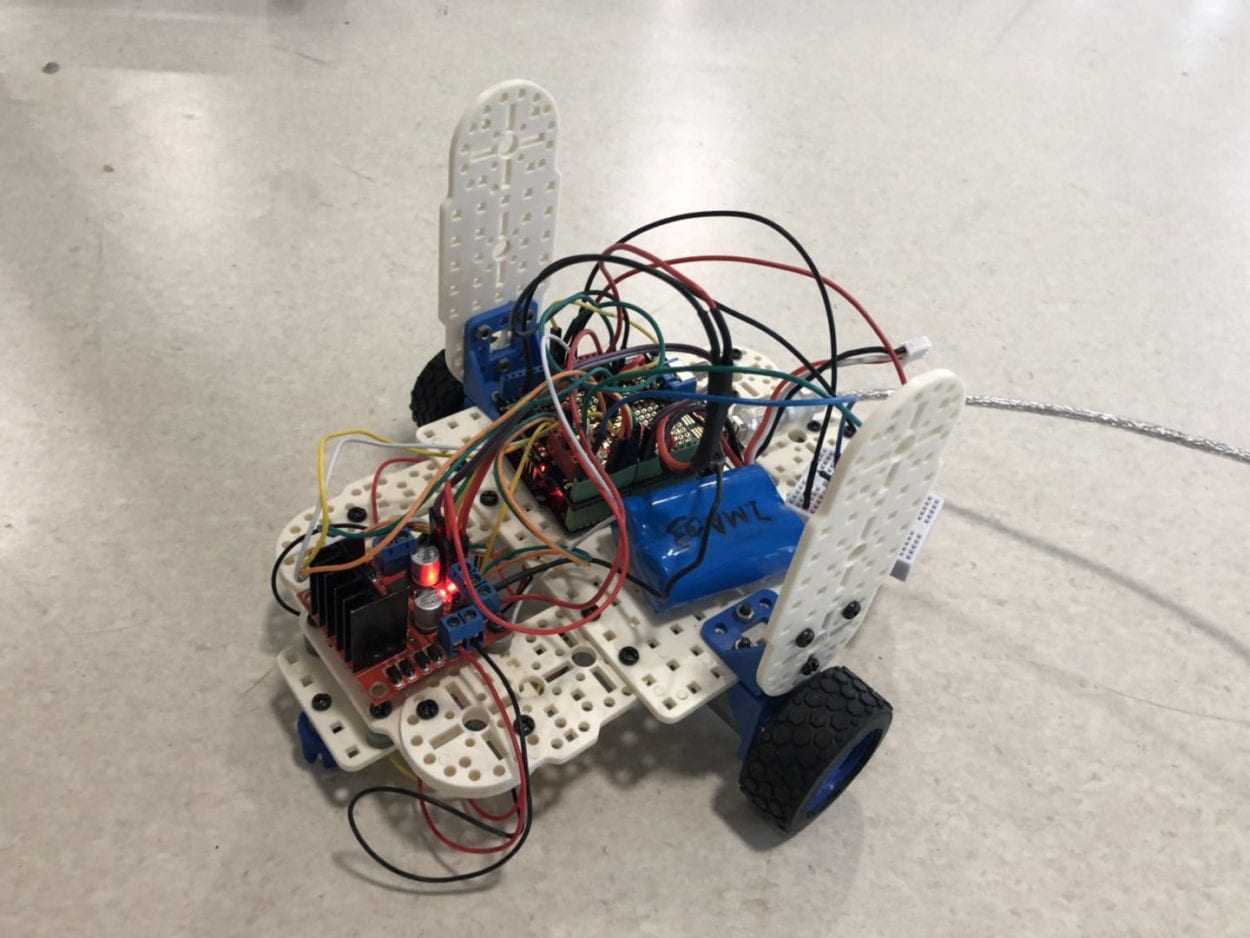

Here is a picture of our car:

Basically, we wanted our users to hold a “magic stick”, like the wand in Harry Potter. We then needed the camera to detect its position, so we thought of color-tracking and put a red spot on the tip of the stick for the camera to follow. We also wanted the user to make a certain gesture, in other words to draw a specific shape that would act as a spell to trigger the car. The most direct way to show this was to add a moving “Follow Me!” text on the screen indicating what the user should do.

For the Processing part, we used HSV color-tracking in OpenCV. We did have other options. One was to calculate the average distance between the color we wanted to track and the other colors, and to draw on the screen with PGraphics and topLayer. However, as we went on we found this method very unstable, because the camera could not reliably find the preset color. So we replaced it with OpenCV, which solved this color-tracking difficulty.

We used two criteria to choose the color-tracking method. First, it had to be feasible. Before we started the project, I reached out to several professors and staff to ask which method would suit our project best. They suggested several ways to achieve our goal. I chose the average-position method at first and then switched to OpenCV when it ran into problems. Second, it had to be accurate. Because we were tracking one specific color, other things in the background could distract the tracker and lower its accuracy, so accuracy was the second criterion we considered.
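To make the HSV idea concrete, here is a minimal plain-Java sketch. It does not use OpenCV. Instead it thresholds each pixel with `java.awt.Color.RGBtoHSB` and averages the positions of the matching pixels to estimate where the red tip of the stick is. The pixel layout mirrors Processing's packed ARGB `pixels[]` array. The class name, the hue/saturation/brightness thresholds and the minimum pixel count are all assumptions chosen for illustration, not values from our project.

```java
import java.awt.Color;

/**
 * Minimal sketch of HSV-based color tracking on a packed ARGB pixel array
 * (the layout Processing uses for PImage.pixels). A plain-Java stand-in for
 * OpenCV's HSV thresholding; all thresholds are illustrative assumptions.
 */
public class HsvTracker {
    // Hypothetical thresholds for a saturated red marker.
    static final float HUE_TOLERANCE = 0.05f;   // red wraps around hue 0.0/1.0
    static final float MIN_SATURATION = 0.5f;   // rejects greys and washed-out colors
    static final float MIN_BRIGHTNESS = 0.3f;   // rejects near-black pixels

    /** Returns true if the packed ARGB color falls inside the assumed red HSV range. */
    static boolean isRed(int argb) {
        int r = (argb >> 16) & 0xFF;
        int g = (argb >> 8) & 0xFF;
        int b = argb & 0xFF;
        float[] hsb = Color.RGBtoHSB(r, g, b, null); // hue, saturation, brightness in [0, 1]
        boolean redHue = hsb[0] <= HUE_TOLERANCE || hsb[0] >= 1f - HUE_TOLERANCE;
        return redHue && hsb[1] >= MIN_SATURATION && hsb[2] >= MIN_BRIGHTNESS;
    }

    /**
     * Averages the coordinates of all matching pixels. Returns {x, y},
     * or null if fewer than minPixels matched (treated as "marker not found").
     */
    static float[] track(int[] pixels, int width, int height, int minPixels) {
        long sumX = 0, sumY = 0;
        int count = 0;
        for (int y = 0; y < height; y++) {
            for (int x = 0; x < width; x++) {
                if (isRed(pixels[y * width + x])) {
                    sumX += x;
                    sumY += y;
                    count++;
                }
            }
        }
        if (count < minPixels) return null;
        return new float[] { (float) sumX / count, (float) sumY / count };
    }

    public static void main(String[] args) {
        int w = 10, h = 10;
        int[] frame = new int[w * h];
        java.util.Arrays.fill(frame, 0xFF808080);      // grey background
        for (int y = 2; y <= 3; y++)                   // 2x2 red spot
            for (int x = 6; x <= 7; x++)
                frame[y * w + x] = 0xFFFF0000;
        float[] pos = track(frame, w, h, 3);
        System.out.println(pos[0] + ", " + pos[1]);    // prints 6.5, 2.5
    }
}
```

Thresholding on hue rather than raw RGB values is what makes HSV more forgiving. A shadow falling on the red spot mostly lowers its brightness, not its hue. Replacing `isRed` with an RGB distance check against a single target color would give roughly the distance-based approach we tried first.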

As for failures: after we got the color-tracking working, we wanted to make the interface look better, so we tried covering the camera image with an image of parchment. We did not manage to make this work, so we kept what we had before.

As for successes: at first we had trouble triggering the car. We asked one of the professors, and he suggested using booleans. We preset every pixel as “false”, and when a pixel detects a different color, it becomes “true”. We then count the “true” pixels, and when the number reaches 40, the car is triggered. This was a very important success, because without it we could not have continued with the Arduino part.

During the user testing session we hadn’t finished the entire project yet, so we replaced the car-triggering part with a small ellipse appearing in the corner of the canvas to stand in for the car moving. However, this left users confused about what our project was for. Some users also suggested making previously drawn lines disappear, because with everything we had drawn the canvas looked very messy, which made it hard to tell where exactly the tracked spot was. Based on this feedback, we made the following changes: 1) we added our car and successfully triggered it with pixel detection; 2) instead of topLayer, we used storingInput so that previously drawn lines disappear. Judging by our final result, these adaptations were very effective.
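The boolean trigger and the disappearing trail can be sketched in plain Java. This is a minimal illustration under stated assumptions, not our Processing code. The class and method names (`SpellTrigger`, `mark`, `Trail`) are hypothetical. The boolean grid stands in for the camera frame, and the serial message to the Arduino is left out. The threshold of 40 true pixels comes from the description above, while the trail length of 20 points is an arbitrary choice. The trail keeps a fixed-size history of recent positions, in the spirit of Processing's StoringInput example.

```java
import java.util.ArrayDeque;
import java.util.Deque;

/**
 * Sketch of two ideas, under hypothetical names:
 *  1. A boolean-per-pixel trigger: every pixel starts false, is set true when
 *     the tracked color is seen there, and the car fires once 40 pixels are true.
 *  2. A bounded trail so old strokes disappear.
 */
public class SpellTrigger {
    static final int TRIGGER_COUNT = 40;   // threshold from the write-up

    private final boolean[] hit;           // one flag per pixel, all false initially
    private final int width;
    private int trueCount = 0;

    SpellTrigger(int width, int height) {
        this.width = width;
        this.hit = new boolean[width * height];
    }

    /** Marks a pixel where the tracked color was detected; each pixel counts once. */
    void mark(int x, int y) {
        int i = y * width + x;
        if (!hit[i]) {
            hit[i] = true;
            trueCount++;
        }
    }

    int count() { return trueCount; }

    /** True once enough distinct pixels are marked; the car command would be sent here. */
    boolean shouldTrigger() { return trueCount >= TRIGGER_COUNT; }

    /** Keeps only the most recent maxPoints positions, dropping the oldest first. */
    static class Trail {
        private final Deque<int[]> points = new ArrayDeque<>();
        private final int maxPoints;

        Trail(int maxPoints) { this.maxPoints = maxPoints; }

        void add(int x, int y) {
            points.addLast(new int[] { x, y });
            if (points.size() > maxPoints) points.removeFirst();
        }

        int size() { return points.size(); }

        int[] oldest() { return points.peekFirst(); }
    }

    public static void main(String[] args) {
        SpellTrigger trigger = new SpellTrigger(100, 100);
        for (int x = 0; x < 39; x++) trigger.mark(x, 50);
        System.out.println(trigger.shouldTrigger());  // false (39 pixels)
        trigger.mark(39, 50);
        System.out.println(trigger.shouldTrigger());  // true (40 pixels)

        Trail trail = new Trail(20);
        for (int i = 0; i < 100; i++) trail.add(i, i);
        System.out.println(trail.size());             // 20
    }
}
```

Counting distinct pixels rather than frames means that holding the stick still on one spot does not keep adding to the total. The user has to actually move it across the area.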

In conclusion, the goal of our project is to give users an interesting and interactive experience. By asking users to make certain gestures to trigger the car, we create an interactive “communication” between the machine and the user. This aligns with my definition of interaction, in which the user and the machine both interact with each other. If I had more time, I would add a sand table on top of the car that covers it up and also shows the route the car has moved along. Molly and I initially wanted the car to draw whatever the users draw, but because of limited time we could not finish that part. With more time we would definitely make that possible and make the whole project cooler. We would also improve the accuracy of the color-tracking: although switching from calculating color distances to OpenCV helped, tracking is still not very stable, so I would fix this problem as well.

During the process we ran into several failures, and the most memorable one was in the color-tracking part. We stayed in the studio for at least 4 hours working on it, only to find out at last that we had not changed the canvas size at the very beginning. It was a small detail, and finding it took patience. Therefore, the biggest takeaway I got from this class is to be patient and always careful with details. From what I’ve achieved, I also learned never to be afraid to try new things, because you might gain extra experience from them. The key lesson of my project experience is that saying is always easier than doing: if you want to achieve something, you need to put in effort, take it seriously, be patient and work for it.

Finally, if I were to develop the project further, I would try to use Bluetooth to connect the car with the computer. That way, the car could be placed farther away, say in another room, so that when the user draws in one room, the car draws in another. I think this is very meaningful, as it could make our lives more convenient.