The Aviator

The Aviator is a multiplayer interactive game that trains and tests players’ coordination and multitasking through a one-on-one match. Players move their arms or hands to change the position of the aviator shown on screen, avoiding obstacles or collecting props, while at the same time blowing into a tube to control the aviator’s speed. To use a prop, the player presses a button. The game can also be used for team-building, with each member taking one responsibility: in a group of three, one person watches the screen and gives orders while the other two, blindfolded with eye masks, control the direction and the speed.

Multitasking and team-building games are popular and needed in both schools and workplaces, but there are not many device-based interactive games for small groups. Multitasking games are mostly online games designed for individuals and focus on eye-hand coordination, while team-building games usually need a larger area (often outdoors) and more people to carry out. With the game we designed, users can play indoors in small groups or even in pairs, and more body parts are involved in the multitasking, which increases the difficulty and also encourages users to get a little exercise. Many similar aviator games already exist, but they only train eye-hand coordination or are purely for fun (one example is at the link below).

https://tympanus.net/Tutorials/TheAviator/

Doctor’s Training Kitchen

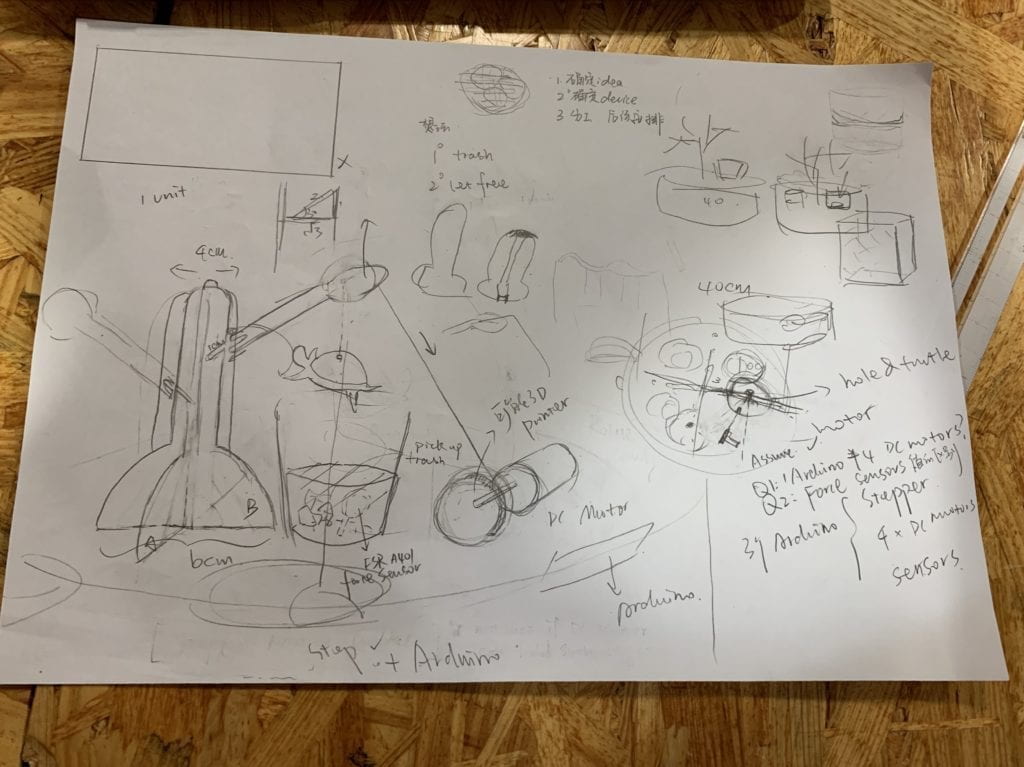

This is an interactive game that begins with entering your height into the device and stepping onto a scale. The computed BMI is indicated by an animated image showing the user’s health status (skinny/fatty/fit). A recommended recipe then pops up on the screen, and users can learn to cook it step by step with guidance. The user’s performance (stirring, mashing, cutting, adding seasoning, etc.) is measured with sensors and taken into account to produce the final score, which shows how healthy and suitable the food they made is for them.
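The BMI step described above can be sketched as follows. This is only a minimal illustration, not the device's actual logic: the function names `bmi` and `health_status` are hypothetical, and the cutoffs of 18.5 and 25 are an assumption taken from the standard WHO adult categories, mapped onto the three statuses the game displays.

```python
def bmi(weight_kg: float, height_m: float) -> float:
    """Body mass index: weight in kilograms divided by height in metres squared."""
    if weight_kg <= 0 or height_m <= 0:
        raise ValueError("weight and height must be positive")
    return weight_kg / height_m ** 2


def health_status(bmi_value: float) -> str:
    """Map a BMI value to one of the three statuses shown by the animation."""
    # Assumed cutoffs (WHO adult categories): below 18.5 is underweight,
    # 25 and above is overweight. The real game may use different ones.
    if bmi_value < 18.5:
        return "skinny"
    if bmi_value < 25.0:
        return "fit"
    return "fatty"


if __name__ == "__main__":
    # Height comes from the user's input, weight from the scale.
    value = bmi(weight_kg=60.0, height_m=1.70)
    print(round(value, 1), health_status(value))  # 20.8 fit
```

Keeping the calculation and the status mapping in separate functions would let the cutoffs be adjusted later (for example, for different age groups) without touching the formula.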

The game is targeted at people who live on their own, especially young people who do not know well what food is good for them and are too lazy to cook or to learn to cook. Current cooking games on the market are mostly simulation games that do not teach recipes or the process of making a dish. The only game I found that involves step-by-step food making is Cooking MAMA (as in the video shown below), but everything the user does happens on the screen, so users do not learn many real skills. Because our design (if possible) uses flex sensors and force sensors to capture hand movements and actions, it resembles what people do in reality and trains users better in the cooking skills for that particular dish, while also evaluating their work. It will help more young people stay healthy by helping them learn recipes that are good for them and how to cook them.

The Best Detective

This is a party game for a group of users. Photos of animals, scenes, or objects are shown on screen as dots, initially covered with a dark layer that one chosen player has to wipe away. The chosen player wipes the layer off bit by bit while the rest of the players guess what the picture shows. The number of wipes is limited, and only a stroke of restricted length can be wiped away each time. The winner (the player who guesses correctly) can order a victim to do one thing or answer a question, and then becomes the next wiper.

There are already wipe-out guessing games for individuals, and I think it will be more interesting to put one in a party setting, where players can make a collective effort and the interaction and competition between them add to the experience. But since I find existing games relatively easy and assume they will be even easier in a group setting, we changed the picture to be presented as dots. This makes the game somewhat harder and more entertaining.