Social Impact

By bringing the CartoonGAN model into the browser, we make it possible to transfer the real image into the target style. That could bring the users and their loving images into the cartoon they like. And even more, if it is a GIF or video, we can directly convert it into the cartoon style. It makes possible for users to directly get the styled GIF or video. In a word, we are trying to blur the boundary of the cartoon world and the physical world by putting the model running on the browser.

Future Development

In terms of the future plan, we have three things primary aspects, realtime performance, more input formats, and ml5 function wrapping.

Realtime Performance:

There are some potential solutions for realtime performance support. One is to deploy the model and inference service over the server-side. However, with a powerful computer, we can do the generation process within seconds. However, if we are trying to realtime generating a video or long gif, such a solution will not best fit in the scenario as users might need to wait longer to upload and fetch videos.

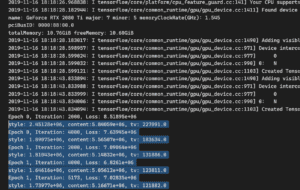

Another solution for that is to use native hardware acceleration to boost the edge inference procedure. Thus, here we can use tfjs-node or TensorFlow Lite to play the game. However, we still need to see if such native hardware acceleration will handle real-time inference.

More input formats:

As we have proposed in the previous section, it will make more sense if more formats of input could be supported like Gif or video. As for the gif, it is possible to unpack a gif into a slice of images and do inference over them. After that, we can pack the styled gif back.

However, as for the video, we may do not want to support such functionality over the browser as it will easily crash the system due to a large number of frames a video could contain. We need to seek solutions from the server-side and the native side.

ML5 wrapping:

As the CartoonGAN itself is interesting, we can also export the model into the ml5 structure. In this way, it will best benefit the community by bringing more diverse models.