Title

Wild (Out of Control)

Project Description

Our project is called Wild. We took our inspiration from the movie Blade Runner. In the movie, artificial intelligence is designed to be nearly identical to humans, so it is very hard to tell the two apart. These beings are built by humans, yet they are stronger than humans, and when they fight humans for limited resources, humans are hurt by the creatures they themselves created. This echoes the first project I did in Interaction Lab: a short scene play about a future “memory tree” from which people can upload and download memories and knowledge. When one person grows too greedy and takes too much from the memory tree, it hurts him. In this project, we want to present humans’ over-dependence on technology. Technology is a double-edged sword: it makes our lives more convenient, but when we depend on it too much, it can hurt us. The name “Wild” comes from the scene in which all the objects, as well as the music, lose control and go wild.

Perspective and Context

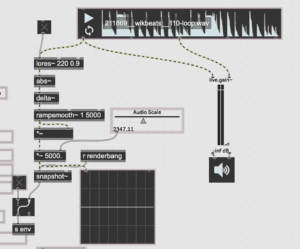

Our project consists of three parts. The first part is the music, which Joyce made in GarageBand. Throughout the project we wanted to contrast how people rely on technology with what happens when we lose control of it, so we made the first part of the music peaceful and mild, and at the turning point the music crashes and turns crazy and wild. In this way, we connect the music with the visual scene. The second part is the Kinect. My idea of using the Kinect to create interaction between the performer and the project came from this contemporary ballet performance:

https://www.youtube.com/watch?v=NVIorQT-bus&feature=youtu.be

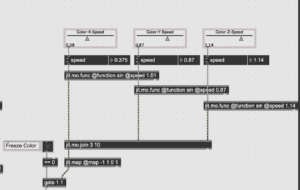

I really love how the dancer’s movements influence the objects and projections next to him, while the objects influence him in return. Rather than simply controlling the work, the performer himself becomes part of it; he and the projection form a whole. Therefore, I decided to put myself into the performance. I originally planned to use the Kinect’s depth function to control the movement of the object in Max, but because my computer is a Mac, many functions, including depth detection, could not be used. In the end, I used shape capture to capture the silhouette of my body. In this project, I use my hands to receive 0s and 1s, which represents humans receiving digital information through technology (because the digital technologies we have developed so far are based on the binary system). At first the digits disappear wherever I am, but later, with an explosive sound in the background music, the digits lose control and move randomly across the screen.
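The two phases of the digit scene, digits hidden wherever the body is and then scattering once the music breaks, can be sketched in plain Java as a small illustration. This is not the code used in the performance, which ran in Processing with Kinect shape capture. Here the silhouette is assumed to arrive as a hypothetical boolean grid (`true` where the body is), and `DigitField`, `calmFrame`, and `wildFrame` are illustrative names only.

```java
import java.util.Arrays;
import java.util.Random;

// Hypothetical sketch: a grid of binary digits that hides wherever a body
// silhouette covers a cell, then scatters randomly once "wild" mode starts.
// The silhouette is assumed to be a boolean grid (true = body present).
class DigitField {
    final int w, h;
    final char[][] digits;
    final Random rng;

    DigitField(int w, int h, long seed) {
        this.w = w;
        this.h = h;
        this.rng = new Random(seed);
        this.digits = new char[h][w];
        // Fill the field with random 0s and 1s.
        for (int y = 0; y < h; y++)
            for (int x = 0; x < w; x++)
                digits[y][x] = rng.nextBoolean() ? '1' : '0';
    }

    // Calm phase: a digit disappears wherever the silhouette covers its cell.
    char[][] calmFrame(boolean[][] silhouette) {
        char[][] out = new char[h][w];
        for (int y = 0; y < h; y++)
            for (int x = 0; x < w; x++)
                out[y][x] = silhouette[y][x] ? ' ' : digits[y][x];
        return out;
    }

    // Wild phase: every digit jumps to a random cell and the body is ignored.
    // Digits that land on the same cell overwrite each other.
    char[][] wildFrame() {
        char[][] out = new char[h][w];
        for (char[] row : out) Arrays.fill(row, ' ');
        for (int y = 0; y < h; y++)
            for (int x = 0; x < w; x++)
                out[rng.nextInt(h)][rng.nextInt(w)] = digits[y][x];
        return out;
    }

    static void print(char[][] frame) {
        for (char[] row : frame) System.out.println(new String(row));
    }

    public static void main(String[] args) {
        DigitField field = new DigitField(16, 6, 42L);
        // Hypothetical silhouette: a 4x4 block standing in for the performer.
        boolean[][] body = new boolean[6][16];
        for (int y = 1; y < 5; y++)
            for (int x = 6; x < 10; x++)
                body[y][x] = true;
        print(field.calmFrame(body));
        System.out.println();
        print(field.wildFrame());
    }
}
```

Running `main` prints a calm frame with a blank block where the stand-in body is, followed by a scattered frame. In a real sketch the boolean grid would be rebuilt every frame from the camera image.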

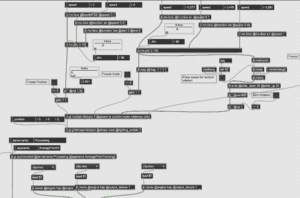

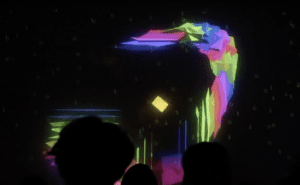

The third part is the Max patch. At the beginning, we wanted to design a single object whose changes would go from regular to chaotic, to represent the loss of control. Later, however, we found a single object too boring and dull, so we decided to add videos to enrich the content. The piece opens with a scene of a bustling city to suggest urban development; then green 0s and 1s drop from the sky, and the whole city is under the control of digits. By this, we want to show that the city runs with the help of technology. Next come several short clips of how we depend on technology in daily life. Then the main object appears, changing and moving regularly with the music. Later, we add the scene from the Kinect, and with the sudden sound of breaking glass we very quickly cover the whole screen with white, which represents the point of explosion. After this, our core object goes wild: it turns from smooth to sharp, and the whole scene goes crazy. We layer in all the different videos to show what happens when we rely on technology too much and it crashes.

Development & Technical Implementation

Within our group, Joyce handled the music, I was in charge of the Kinect and Processing parts, and we developed the Max patch together. Through this process, I learned to combine different ideas to create a more diverse project, and I really enjoyed working with her.

While working with the Kinect, the biggest issue we met was transmitting data from the Kinect to the computer. The amount of data seemed to be too large for our USB-to-Type-C adapters: when we connected the Kinect through them, nothing appeared on the screen. We tried several different adapters, and only one of them worked. So, during the performance, we unplugged the MIDImix and plugged the Kinect into that adapter, then switched back to the MIDImix after the Kinect section.

Developing the Max patch was the most challenging part. According to Ana Carvalho and Cornelia Lund (eds.), a VJ mostly plays videos and music that already exist. We wanted to go beyond that and avoid using too many shots of the real world, so I generated videos of smog and random numbers in Processing.

The code I used to connect the Kinect with Processing and Max
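The text does not say how Processing and Max exchanged data. One common option is Open Sound Control (OSC) messages over UDP, and the sketch below shows, as an assumption only, how such a message could be encoded by hand in plain Java and sent to Max. The address `/kinect/hand`, the two float values, and port 7400 are hypothetical; `OscSender`, `encode`, and `send` are illustrative names, not part of Processing or any OSC library.

```java
import java.io.ByteArrayOutputStream;
import java.net.DatagramPacket;
import java.net.DatagramSocket;
import java.net.InetAddress;
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

// Hypothetical sketch: encode an OSC message with float arguments by hand
// and send it over UDP, e.g. from a Java/Processing program to Max.
class OscSender {
    // OSC strings are ASCII, null-terminated, and padded with nulls
    // to a multiple of 4 bytes (there is always at least one null).
    static void writePaddedString(ByteArrayOutputStream out, String s) {
        byte[] b = s.getBytes(StandardCharsets.US_ASCII);
        out.write(b, 0, b.length);
        int pad = 4 - (b.length % 4);
        for (int i = 0; i < pad; i++) out.write(0);
    }

    // Address pattern, then a type tag string such as ",ff", then the
    // arguments as 32-bit big-endian floats.
    static byte[] encode(String address, float... args) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        writePaddedString(out, address);
        StringBuilder tags = new StringBuilder(",");
        for (int i = 0; i < args.length; i++) tags.append('f');
        writePaddedString(out, tags.toString());
        for (float f : args) {
            // ByteBuffer is big-endian by default, as OSC requires.
            byte[] b = ByteBuffer.allocate(4).putFloat(f).array();
            out.write(b, 0, b.length);
        }
        return out.toByteArray();
    }

    static void send(String host, int port, byte[] packet) throws Exception {
        try (DatagramSocket socket = new DatagramSocket()) {
            socket.send(new DatagramPacket(packet, packet.length,
                    InetAddress.getByName(host), port));
        }
    }

    public static void main(String[] args) throws Exception {
        // Hypothetical values: a normalized hand position sent to port 7400 on this machine.
        send("127.0.0.1", 7400, encode("/kinect/hand", 0.42f, 0.77f));
    }
}
```

On the Max side, a `[udpreceive 7400]` object followed by `[route /kinect/hand]` should then output the two floats as a list. A Processing sketch would more typically use an OSC library for this; the hand-rolled encoder just makes the message layout visible.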

The code I used to create the random numbers and smog scenes

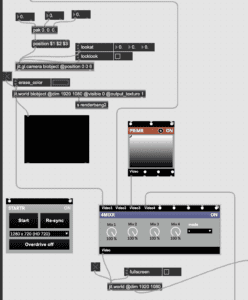
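The random-number part of these scenes, 0s and 1s falling down the screen, can be approximated with a small text-mode Java sketch. It is not the Processing code in the image above: `DigitRain`, the grid size, and the trail length are hypothetical, and color and the smog effect are left out. Each column keeps a “head” row that moves down one row per frame and wraps to the top, and the cells just above the head are filled with fresh random digits.

```java
import java.util.Random;

// Hypothetical text-mode sketch of "digit rain": each column has a head that
// falls one row per frame, trailed by freshly randomized 0s and 1s.
class DigitRain {
    final int w, h;
    final int[] heads; // row index of the leading digit in each column
    final Random rng;

    DigitRain(int w, int h, long seed) {
        this.w = w;
        this.h = h;
        this.rng = new Random(seed);
        this.heads = new int[w];
        // Stagger the columns so they do not fall in lockstep.
        for (int x = 0; x < w; x++) heads[x] = rng.nextInt(h);
    }

    // Advance one frame: every head moves down one row, wrapping to the top.
    void step() {
        for (int x = 0; x < w; x++) heads[x] = (heads[x] + 1) % h;
    }

    // Draw one frame; cells within `trail` rows above a head (wrapping) get a digit.
    String render(int trail) {
        StringBuilder sb = new StringBuilder();
        for (int y = 0; y < h; y++) {
            for (int x = 0; x < w; x++) {
                int above = (heads[x] - y + h) % h; // 0 at the head itself
                sb.append(above < trail ? (rng.nextBoolean() ? '1' : '0') : ' ');
            }
            sb.append('\n');
        }
        return sb.toString();
    }

    public static void main(String[] args) throws InterruptedException {
        DigitRain rain = new DigitRain(24, 10, 3L);
        for (int frame = 0; frame < 5; frame++) {
            System.out.print(rain.render(4));
            System.out.println("----");
            rain.step();
            Thread.sleep(200);
        }
    }
}
```

Re-randomizing the trail on every frame makes the digits flicker as they fall; keeping a fixed digit per cell instead would give a calmer look.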

To create an adjustable object, we decided to use jit.gl to generate a 3D object in real time. We learned the technique from a tutorial on YouTube and then improved it.

Performance

It was our first time performing in a club. We were a bit nervous at first, but we realized that this was normal and that everyone gets nervous. During the performance, I controlled the movement of the center cube while Joyce switched the videos and the music. Basically everything went smoothly, but before we started, Processing would not run normally and kept reporting an error. I guessed this was because we had been running both Processing and Max for too long, so we restarted them immediately. Luckily, that worked. In the middle of the performance, the music also became a little too loud. Unexpected things like these happen a lot during performances, so every live performer needs to learn how to deal with them calmly.

Another important lesson is to organize the patch clearly. The club is dark, so when we used the MIDImix to control the patch, it was hard to read what we had written on the labels. Assigning the MIDImix controls in the same order as the objects are arranged in the patch is therefore important.

At the end of the performance, when we adjusted the position and shape of the cube in the center, it did not change the way it had in rehearsal. We had planned to have only the circle rotating, but a small yellow cube appeared in the center, which made the piece look even better. So, although we have no idea why it appeared, we were still satisfied with it.

Conclusion

This was an interesting project that broadened my horizons: I learned to produce music in GarageBand and explored the use of the Kinect. Still, there is a lot we could do to make the project better.

First, regarding the Kinect, I want to try using p5.js or Runway to capture body movement, because I found that the stage was not as dark as I had thought, so an ordinary camera might be enough. Alternatively, I could try the depth and other functions of the Kinect on a Windows laptop. I would also like to turn the Kinect toward the audience so that there is more interaction. An underground club may not be the most suitable place for this kind of interaction, but it is a fun direction from which to develop the project.

Second, regarding the Max part, we still used too many real-world videos in our project. I think there is more we can do with the patch itself. So far, the objects we developed in Max rely only on Max’s built-in functions, and it is hard for us to build a controllable and beautiful object directly in Max. When I asked my classmates how they used Max, I found that both of the projects I liked most used JavaScript (.js). Therefore, I want to try more ways of combining Max with other resources to create a more diverse project.

One of the biggest issues with our project is that we tried to convey something “meaningful” to the audience, when there was actually no need to do so. As we learned at the beginning of the semester, synaesthesia varies because everyone has different life experiences and ways of thinking, so people may feel differently about the same piece of music. The same is true of art: the meaning of an art piece can vary from person to person, and it does not need to be educational. We can break away from traditional thinking and create something crazier and more abstract. So, in the future, rather than just putting street views on the screen, I want to explore more ways of presenting my ideas. Also, when designing the music, we should have considered more carefully that we would be performing in a club, where music with stronger beats and rhythm is more suitable.

Overall, I think the connection between our music and visuals was strong, and the audience’s feedback told us that we had created an exciting live performance.

Works Cited

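Carvalho, Ana. “Live Audiovisual Performance.” In The Audiovisual Breakthrough, edited by Ana Carvalho and Cornelia Lund. Fluctuating Images, 2015.

Menotti, Gabriel. “Live Cinema.” In The Audiovisual Breakthrough, edited by Ana Carvalho and Cornelia Lund. Fluctuating Images, 2015.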