Final Project:

Concept and Design:

Our first idea for the final project was to create an instrument that requires little to no understanding of music to play. There would be no note reading or unfamiliar mouth or hand positions. The instrument would be controlled by simple hand movements and a mouthpiece that measures the air pressure. Here is a clip of Daniel simulating what he hoped our project’s experience would look like:

Here Daniel is using two hands and his breath to simulate the control of amplitude, frequency, and modulation. But we quickly discovered that, much like playing the piano, using two hands at once is far more difficult than it looks. In essence, we wanted users to be able to understand and play the instrument easily, without the hassle of extensive learning. To simplify our design, we decided to drop the modulation feature and spend more time perfecting the other two inputs. For the input methods, we used a gyroscope and a pressure sensor. Another input method we considered was a slide potentiometer, which would have allowed the user to be much more accurate with the pitch. But we agreed that measuring the angle of a gyroscope mounted on a ring would enhance the experience much more than a slide potentiometer.

Fabrication and Production:

As far as physical fabrication, there was not much to do. I created a ring out of velcro strips that would hold the gyroscope, as well as a ring stand to keep it steady for calibration before use. Initially, there was no ring stand and the user was expected to calibrate the device themselves. During the user testing session, we quickly realized how confusing and complicated this was, so we built the stand from a box and chopsticks. Marcela also gave us an idea that we hadn’t considered before: if we are focusing on the experience, why should we have an interface at all? Instead, we would blindfold the user and limit them to headphones so they could focus completely on the experience of making music.


Now, I will go more into detail about the process of fabrication. The first step after receiving the pressure sensor in the mail was to build a mouthpiece prototype for testing purposes.

We used this to test out (a) whether the sensor worked well and (b) the range so we could calibrate it properly.
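As an illustration of this kind of range calibration (not the project's actual code), a minimal Python sketch could look like the following. It assumes the sensor returns a plain number, and that a resting reading and a hardest-blow reading were noted during testing. The function name and the example readings of 100 and 500 are hypothetical.

```python
def pressure_to_amplitude(raw, rest_level, max_level):
    """Map a raw pressure reading to an amplitude between 0.0 and 1.0.

    rest_level and max_level are the readings observed during testing
    with no breath and with the hardest breath (assumed values).
    """
    if max_level <= rest_level:
        raise ValueError("max_level must be greater than rest_level")
    # Scale so that rest_level maps to 0.0 and max_level maps to 1.0.
    scaled = (raw - rest_level) / (max_level - rest_level)
    # Clamp readings that fall outside the calibrated range.
    return min(1.0, max(0.0, scaled))


# Hypothetical calibration: 100 at rest, 500 at the hardest breath.
amplitude = pressure_to_amplitude(300, rest_level=100, max_level=500)
```

Clamping keeps a stray reading above or below the tested range from producing a negative or overly loud amplitude.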

After we checked if everything worked (it did), we finished the mouthpiece.

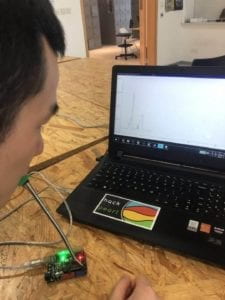

The next step was to write the code, which included processes and functions neither Daniel nor I had ever even heard of. This was by far the hardest part of the project. Calibrating the gyroscope required a lot of math and online resources. Take a look at parts of the process:

After coding, we built the ring with the gyroscope in it, hoping to allow users to move their wrists up or down to control the pitch. Surprisingly, this worked quite well!
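For readers curious how a wrist angle can become a pitch, here is a rough Python sketch of one common approach. It is not the code from this project. It assumes the gyroscope reports angular rate in degrees per second, that readings taken while the ring sits still give the sensor's bias, and that a wrist range of -45 to 45 degrees maps onto 220 Hz to 880 Hz. All function names and numbers are hypothetical.

```python
def estimate_bias(stationary_rates):
    """Average the angular-rate readings taken while the ring is at rest."""
    if not stationary_rates:
        raise ValueError("need at least one stationary sample")
    return sum(stationary_rates) / len(stationary_rates)


def integrate_angle(rates, bias, dt, start_angle=0.0):
    """Sum bias-corrected angular rates (deg/s) over time steps of dt seconds."""
    angle = start_angle
    for rate in rates:
        angle += (rate - bias) * dt
    return angle


def angle_to_frequency(angle, min_angle=-45.0, max_angle=45.0,
                       low_hz=220.0, high_hz=880.0):
    """Map a wrist angle to a frequency on an exponential (musical) scale."""
    # Keep the angle inside the playable range.
    clamped = min(max_angle, max(min_angle, angle))
    # Position in the range, from 0.0 (lowest) to 1.0 (highest).
    position = (clamped - min_angle) / (max_angle - min_angle)
    # Equal angle steps give equal musical intervals.
    return low_hz * (high_hz / low_hz) ** position


# Hypothetical session: calibrate at rest, then tilt the wrist upward.
bias = estimate_bias([0.5, 0.5, 0.5])
angle = integrate_angle([10.5] * 10, bias, dt=0.1)
frequency = angle_to_frequency(angle)
```

The exponential mapping is a design choice: with a linear mapping, the same wrist movement would cover a much larger musical interval at the low end than at the high end.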

Here is the ring, designed to fit any finger size:

As a side note, we created two versions: easy and expert. Easy mode plays a backing track and limits the user to notes that form perfect chords, meaning they cannot sound bad even if they try. Expert mode lets the user freestyle with any available note, making it much harder to produce a pleasing melody.
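The easy-mode idea of limiting the user to chord notes can be sketched in Python as below. This is only an illustration under stated assumptions, not the project's implementation: it assumes pitches are handled as frequencies in Hz, uses the standard MIDI note conversion (A4 = 440 Hz = note 69), and picks a C major chord as an example. The function names and the chord choice are hypothetical.

```python
import math

# Pitch classes of a C major chord (C, E, G); an example choice.
C_MAJOR_PITCH_CLASSES = (0, 4, 7)


def frequency_to_midi(freq_hz):
    """Convert a frequency to a fractional MIDI note number."""
    return 69 + 12 * math.log2(freq_hz / 440.0)


def midi_to_frequency(note):
    """Convert a MIDI note number back to a frequency in Hz."""
    return 440.0 * 2 ** ((note - 69) / 12)


def snap_to_chord(freq_hz, pitch_classes=C_MAJOR_PITCH_CLASSES):
    """Return the frequency of the chord tone nearest to freq_hz."""
    target = frequency_to_midi(freq_hz)
    center = round(target)
    # Look at chord tones within one octave on either side of the target.
    candidates = [note for note in range(center - 12, center + 13)
                  if note % 12 in pitch_classes]
    nearest = min(candidates, key=lambda note: abs(note - target))
    return midi_to_frequency(nearest)


# An A at 440 Hz is pulled down to the nearest chord tone, G (about 392 Hz).
snapped = snap_to_chord(440.0)
```

Comparing distances in note numbers rather than in Hz means "closest" is measured in semitones, which matches how the ear judges pitch.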

Here I will include all the user tests, including some during the user testing sessions. Unfortunately, most were done with headphones, since the experience is best when the sound fills your ears.

^^ Featuring us amazed that it works properly for the first time

In the final version, we also included the blindfold to give a more authentic experience and keep the user from worrying about his or her surroundings. In the next clip, Daniel begins with the expert mode and then I activate the easy mode to showcase both. Listening to them back to back makes it easier to hear how the easy mode pushes the pitch to the closest chord.

Conclusion:

In the end, we are very proud of what we managed to accomplish. The interaction works like this: the user inputs a pitch and an amplitude, hears the machine’s feedback, and then keeps changing the inputs to create a melody. On easy mode, the user can also try to match the beat of the backing track, which makes the experience much more interactive than freestyle. Additionally, we believe that altering the pitch through hand movement adds another level of interactivity. Ultimately, our audience reacted to our project in the way we hoped they would. During the tests, it was hard for people to get the full experience because our code would stop working or the gyroscope calibration would fail. However, near the end, we asked Kyle to test it once more when it was fully operational, and he couldn’t stop using it (we didn’t get footage of that, just an image).

If we could improve this project, we have a few ideas in mind. Thus far, this experience has been all about the individual: the user is only performing for himself or herself. However, if we were to use loudspeakers and longer wires, the blindfolded user could stand up and effectively “perform” a piece. Other modifications we might have added are a recording feature to play back the user’s music and support for other instrument sounds. It feels weird to blow into a mouthpiece and hear a string ensemble, but those were the cleanest-sounding instrumentals we could easily acquire. All in all, I think this project was successful because it strays from mainstream music. It invites any and all individuals, regardless of musical background, to immerse themselves in their own music.