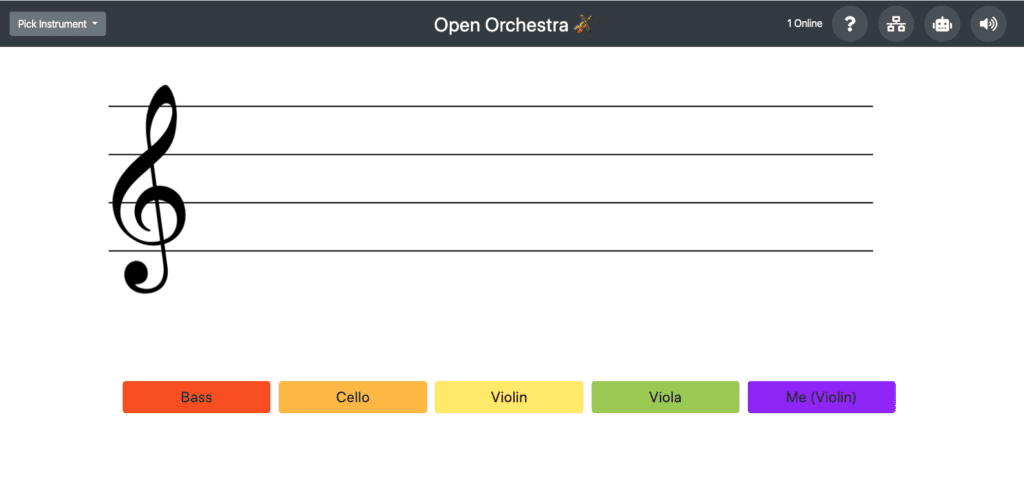

Demo: http://thomastai.com/space/

Introduction

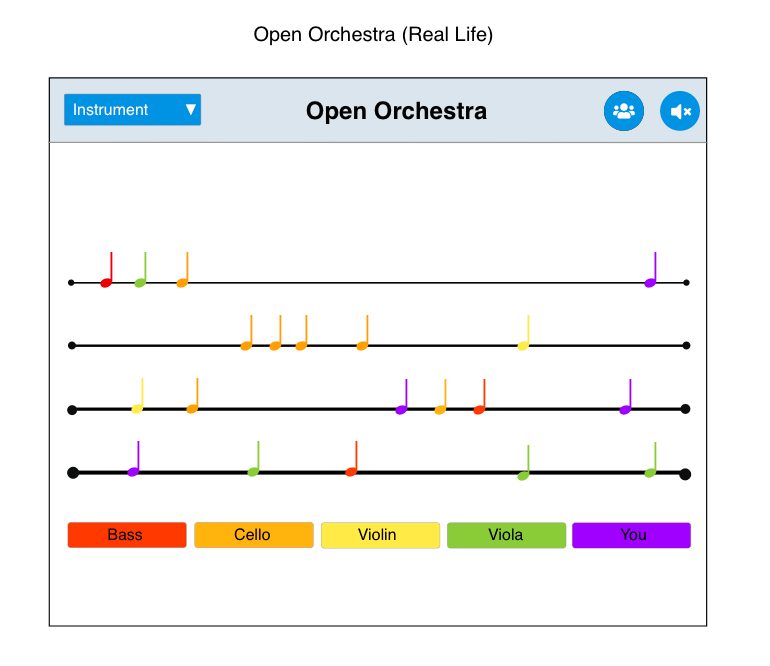

For our final project, we were asked to create a generative or interactive musical piece that is web-based, physical, or exists in space. Since we do not have access to any physical equipment, I decided to make a generative music piece that anyone can access on the internet. The inspiration for this interface came from Brian Eno’s Music for Airports, a generative piece released in 1978.

What I found amazing was that the piece predates digital audio workstations and was made by layering tape loops of varying lengths to produce an endlessly evolving piece. I took some of the notes from his piece that I found online and used loops to create my own interface. Using the NASA API, I was able to pull data about nearby asteroids and use it to generate a unique loop for any specific date.

Process

I wanted to work with the concept of space for this project, so I found some neat CSS transformations that let me make stars blink and simulate the Earth's rotation without a 3D graphics library. The Earth rotation is done by transforming a flat map of the Earth inside a circular div. The code for that can be found here. Similarly, moving a background filled with circles simulates a twinkling effect. I may explore a different route in the future to create more realistic simulations.
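The idea of sliding a flat map behind a circular window can also be illustrated with a little arithmetic in JavaScript. This is only a sketch of the principle, not the CSS used on the site: the helper name `mapOffsetPx`, the `.earth` class, the 800 px map width, and the 20 second rotation period are all assumed values.

```javascript
// Hypothetical helper: horizontal offset (in px) for a flat Earth map used as
// the repeating background of a circular div, so the globe appears to rotate.
// mapWidthPx and periodMs are illustrative values, not taken from the site.
function mapOffsetPx(elapsedMs, mapWidthPx, periodMs) {
  // Fraction of one full rotation completed, always in [0, 1).
  const phase = (elapsedMs % periodMs) / periodMs;
  // Slide the map to the left; after one period it wraps back to the start.
  return -phase * mapWidthPx;
}

// In a browser this could drive the element's background position:
//   const earth = document.querySelector(".earth"); // assumed class name
//   function frame(now) {
//     earth.style.backgroundPositionX = `${mapOffsetPx(now, 800, 20000)}px`;
//     requestAnimationFrame(frame);
//   }
//   requestAnimationFrame(frame);
```

For the wrap-around to be seamless, the map image would need to repeat horizontally and be exactly `mapWidthPx` wide.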

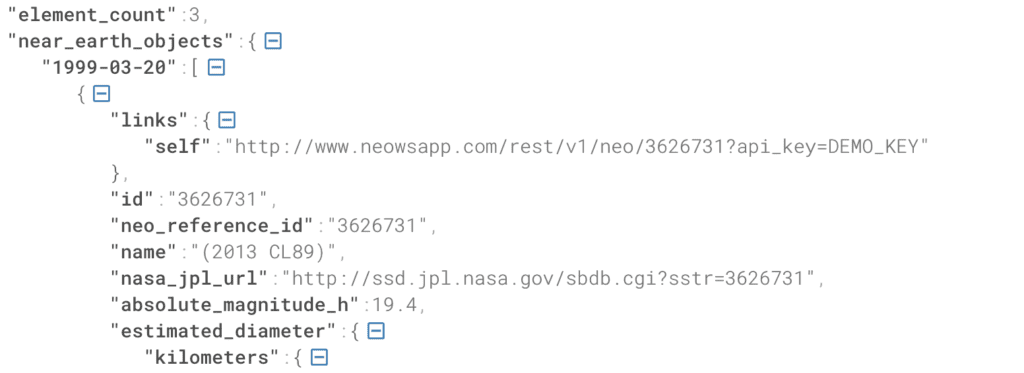

Next I implemented the REST API calls using a promise, which is an object that stands in for the result of an asynchronous request and resolves with the data once it is available. The server returns the asteroid data as a JSON file.
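A minimal sketch of such a promise-based request is shown below. It assumes the site uses NASA's NeoWs feed endpoint, which groups asteroids by date under `near_earth_objects`; the post does not name the endpoint, so the URL, the `DEMO_KEY` API key, and the helper names `buildFeedUrl`, `extractAsteroids`, and `fetchAsteroids` are all assumptions made for illustration.

```javascript
// Assumed endpoint: NASA's NeoWs "feed" service. The post does not say which
// NASA endpoint the site calls, so the URL and field names are assumptions.
const NEO_FEED_URL = "https://api.nasa.gov/neo/rest/v1/feed";

// Build the request URL for a single day (date is "YYYY-MM-DD").
function buildFeedUrl(date, apiKey = "DEMO_KEY") {
  const params = new URLSearchParams({
    start_date: date,
    end_date: date,
    api_key: apiKey,
  });
  return `${NEO_FEED_URL}?${params}`;
}

// Pull the asteroid list for one date out of a feed response.
// Returns an empty array when the date is missing from the response.
function extractAsteroids(feed, date) {
  const byDate = feed.near_earth_objects || {};
  return byDate[date] || [];
}

// fetch() returns a promise; each .then() runs once the previous step resolves.
// fetchImpl is injectable so the function can be exercised without a network.
function fetchAsteroids(date, apiKey = "DEMO_KEY", fetchImpl = fetch) {
  return fetchImpl(buildFeedUrl(date, apiKey))
    .then((response) => {
      if (!response.ok) {
        throw new Error(`NASA API request failed: ${response.status}`);
      }
      return response.json();
    })
    .then((feed) => extractAsteroids(feed, date));
}
```

Calling `fetchAsteroids("2020-05-01").then((asteroids) => console.log(asteroids.length))` would then log how many asteroids the feed lists for that (arbitrarily chosen) date.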

I then iterate through the asteroids and create a new Tone.js loop for each one, which fires a callback every few measures, so the actual timing depends on the current tempo. The user can change this tempo using the forward and backward buttons in the browser. When a loop's time arrives, a Tone.js player plays a sound file. In addition, a random note is played using a PolySynth that I created. Another global loop plays sound files from NASA that consist of radio signals caused by plasma waves found in the solar system. However, this loop plays at random: every 10 measures it has a 20% probability of triggering a sound.
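The timing logic described above can be sketched without any audio code. At 120 BPM in 4/4, one measure lasts 2 seconds, so a loop that fires every 10 measures fires every 20 seconds. Everything below is illustrative: the note pool, the 4/4 time signature, the rule that gives each asteroid a loop one measure longer than the last, and the helper names are assumptions rather than the values used in the piece. The commented usage at the end uses Tone.js v14-style calls.

```javascript
// Placeholder note pool; the notes actually used in the piece are not listed.
const NOTES = ["C4", "D4", "E4", "G4", "A4"];

// Length of one measure in seconds at a given tempo (4/4 assumed).
function measureSeconds(bpm, beatsPerMeasure = 4) {
  return (60 / bpm) * beatsPerMeasure;
}

// Hypothetical mapping: each asteroid gets a loop of a different length,
// echoing tape loops of varying lengths. Returns Tone.js measure notation
// such as "4m".
function loopInterval(index, baseMeasures = 4) {
  return `${baseMeasures + index}m`;
}

// Pick one element at random; rng is injectable for repeatable tests.
function pickRandom(items, rng = Math.random) {
  return items[Math.floor(rng() * items.length)];
}

// True with the given probability, e.g. 0.2 for the NASA recordings.
function shouldPlay(probability, rng = Math.random) {
  return rng() < probability;
}

// Plan one loop per asteroid: its name plus how often the loop fires.
function planLoops(asteroids) {
  return asteroids.map((asteroid, index) => ({
    name: asteroid.name,
    interval: loopInterval(index),
  }));
}

// In the browser, with Tone.js loaded, the plan could drive the audio:
//   const synth = new Tone.PolySynth(Tone.Synth).toDestination();
//   planLoops(asteroids).forEach(({ interval }) => {
//     new Tone.Loop((time) => {
//       synth.triggerAttackRelease(pickRandom(NOTES), "2n", time);
//     }, interval).start(0);
//   });
//   new Tone.Loop((time) => {
//     // spaceSound: an already loaded Tone.Player (hypothetical name)
//     if (shouldPlay(0.2)) spaceSound.start(time);
//   }, "10m").start(0);
//   Tone.Transport.start();
```

Because the intervals are expressed in measures rather than seconds, changing the transport's BPM speeds up or slows down every loop at once, which matches the tempo buttons described above.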

Future Work

If I had more time, I would love to create a more interactive interface, as the only things you can do right now are watch asteroids come by and change the BPM. There are also several issues that I would like to fix in the future, including some graphical glitches. Overall, I had a great time designing this interface and learning more about simple generative music techniques. Thanks for a great semester!