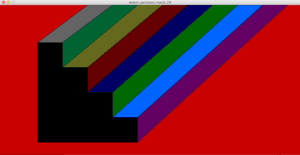

Both exercises involve several layers of interaction. The first is between the two programs, Arduino and Processing, which must communicate over the serial port so that the physical Arduino circuit and the Processing sketch on the computer stay in correspondence. The second comes from the user: in the first exercise, the user controls the line on screen by turning the physical potentiometers; in the second, the user changes the pitch of the speaker's sound by moving a finger horizontally across the trackpad (or by moving a physical mouse, if one is connected). Throughout, the two programs need to exchange messages continuously so that the system responds to the user's input as it happens.

In the first exercise, I started from the example program we were given and added the line() function. I could move the line around, but could not figure out why the previous line kept disappearing. I then realized that the background was being redrawn on every frame, so I moved the background() call out of draw(), which runs continuously, and into setup(), which runs once. That way the line's earlier positions are no longer covered by a freshly drawn background each time it moves. The second exercise went smoothly once I noticed that I had not changed the port index to the one for my own computer.

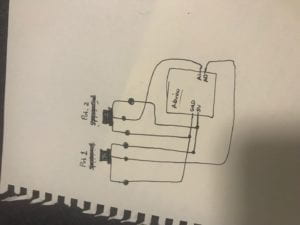

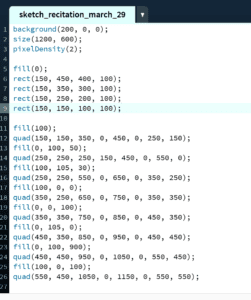

Exercise 1: Sketch using two potentiometers to control the x and y position of a line.

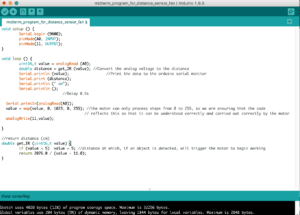

Processing code:

// IMA NYU Shanghai

// Interaction Lab

// For receiving multiple values from Arduino to Processing

/*

* Based on the readStringUntil() example by Tom Igoe

* https://processing.org/reference/libraries/serial/Serial_readStringUntil_.html

*/

float posX2 = 0;

float posY2 = 0;

import processing.serial.*;

String myString = null;

Serial myPort;

int PORT_INDEX = 1;

int NUM_OF_VALUES = 2; /** YOU MUST CHANGE THIS ACCORDING TO YOUR PROJECT **/

int[] sensorValues; /** this array stores values from Arduino **/

void setup() {

size(500, 500);

background(0);

setupSerial();

}

void draw() {

updateSerial();

printArray(sensorValues);

// use the values like this!

// sensorValues[0]

// add your code

float posX = map(sensorValues[0], 0, 1023, 0, width);

float posY = map(sensorValues[1], 0, 1023, 0, height);

stroke(255);

line(posX, posY, posX2, posY2);

posX2 = posX;

posY2 = posY;

}

void setupSerial() {

printArray(Serial.list());

myPort = new Serial(this, Serial.list()[ PORT_INDEX ], 9600);

// WARNING!

// You will definitely get an error here.

// Change the PORT_INDEX to 0 and try running it again.

// And then, check the list of the ports,

// find the port "/dev/cu.usbmodem----" or "/dev/tty.usbmodem----"

// and replace PORT_INDEX above with the index number of the port.

myPort.clear();

myString = myPort.readStringUntil( 10 ); // 10 = '\n' Linefeed in ASCII

myString = null;

sensorValues = new int[NUM_OF_VALUES];

}

void updateSerial() {

while (myPort.available() > 0) {

myString = myPort.readStringUntil( 10 ); // 10 = '\n' Linefeed in ASCII

if (myString != null) {

String[] serialInArray = split(trim(myString), ",");

if (serialInArray.length == NUM_OF_VALUES) {

for (int i=0; i<serialInArray.length; i++) {

sensorValues[i] = int(serialInArray[i]);

}

}

}

}

}
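The parsing and scaling steps in updateSerial() and draw() above can be tried without a board attached. The following is a minimal plain-Java sketch, not part of the original exercise: the class name SerialLineDemo and the helper parseLine are hypothetical, and map() is rewritten by hand under the assumption that Processing's map() is a plain linear rescale. One difference to keep in mind: Integer.parseInt throws on non-numeric text, whereas the Processing int() conversion in the listing does not.

```java
import java.util.Arrays;

// Hypothetical stand-alone demo; names are illustrative, not from the exercise.
class SerialLineDemo {
    static final int NUM_OF_VALUES = 2;

    // Linear rescale from [start1, stop1] to [start2, stop2],
    // assumed to match the arithmetic of Processing's map().
    static float map(float value, float start1, float stop1, float start2, float stop2) {
        return start2 + (stop2 - start2) * ((value - start1) / (stop1 - start1));
    }

    // Mirrors the body of updateSerial(): trim, split on commas, convert to int.
    // Returns null when the line does not carry exactly NUM_OF_VALUES fields.
    static int[] parseLine(String line) {
        String[] parts = line.trim().split(",");
        if (parts.length != NUM_OF_VALUES) {
            return null;
        }
        int[] out = new int[NUM_OF_VALUES];
        for (int i = 0; i < parts.length; i++) {
            out[i] = Integer.parseInt(parts[i].trim());
        }
        return out;
    }

    public static void main(String[] args) {
        // A line as the Arduino might send it: two readings, then a line ending.
        int[] sensorValues = parseLine("512,1023\r\n");
        System.out.println(Arrays.toString(sensorValues)); // prints [512, 1023]

        // Scale the 0..1023 readings to a 500 x 500 window, as draw() does.
        float posX = map(sensorValues[0], 0, 1023, 0, 500);
        float posY = map(sensorValues[1], 0, 1023, 0, 500);
        System.out.println(posX + " " + posY);
    }
}
```

A line with the wrong number of fields is dropped rather than partially applied, which is the same guard the listing uses with serialInArray.length == NUM_OF_VALUES.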

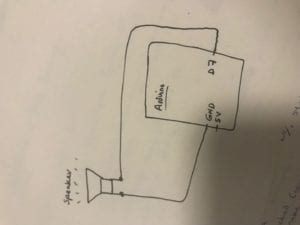

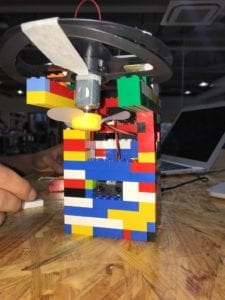

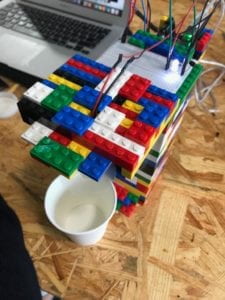

Exercise 2: Musical Instrument

Arduino Code:

#define NUM_OF_VALUES 1 /** YOU MUST CHANGE THIS ACCORDING TO YOUR PROJECT **/

/** DO NOT REMOVE THESE **/

int tempValue = 0;

int valueIndex = 0;

/* This is the array of values storing the data from Processing. */

int values[NUM_OF_VALUES];

void setup() {

Serial.begin(9600);

pinMode(7, OUTPUT);

}

void loop() {

getSerialData();

// add your code here

// use elements in the values array

// values[0]

// values[1]

// if (values[0] == 'H') {

// digitalWrite(13, HIGH);

// } else {

// digitalWrite(13, LOW);

// }

tone(7, values[0]);

}

//receive serial data from Processing

void getSerialData() {

if (Serial.available()) {

char c = Serial.read();

//switch-case checks the value of the variable in the switch function

//in this case, the char c, then runs one of the cases that fit the value of the variable

//for more information, visit the reference page: https://www.arduino.cc/en/Reference/SwitchCase

switch (c) {

//if the char c from Processing is a number between 0 and 9

case '0' ... '9':

//save the value of char c to tempValue

//but simultaneously rearrange the existing values saved in tempValue

//for the digits received through char c to remain coherent

//if this does not make sense and would like to know more, send an email to me!

tempValue = tempValue * 10 + c - '0';

break;

//if the char c from Processing is a comma

//indicating that the following values of char c are for the next element in the values array

case ',':

values[valueIndex] = tempValue;

//reset tempValue value

tempValue = 0;

//increment valueIndex by 1

valueIndex++;

break;

//if the char c from Processing is character 'n'

//which signals that it is the end of data

case 'n':

//save the tempValue

//this will be the last element in the values array

values[valueIndex] = tempValue;

//reset tempValue and valueIndex values

//to clear out the values array for the next round of readings from Processing

tempValue = 0;

valueIndex = 0;

break;

//if the char c from Processing is character 'e'

//it is signalling for the Arduino to send Processing the elements saved in the values array

//this case is triggered and processed by the echoSerialData function in the Processing sketch

case 'e': // to echo

for (int i = 0; i < NUM_OF_VALUES; i++) {

Serial.print(values[i]);

if (i < NUM_OF_VALUES - 1) {

Serial.print(',');

}

else {

Serial.println();

}

}

break;

}

}

}
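The digit-by-digit logic in getSerialData() can be traced on a desktop machine. Below is a rough plain-Java port, offered only as a sketch: the class DigitParserDemo and its methods are hypothetical names, NUM_OF_VALUES is set to 2 (the listing uses 1) so that the comma case is exercised, and the bounds check in store() is an addition that the Arduino listing does not have.

```java
import java.util.Arrays;

// Hypothetical desktop port of the Arduino parsing logic, for tracing only.
class DigitParserDemo {
    static final int NUM_OF_VALUES = 2; // assumption: 2 here, 1 in the listing

    int tempValue = 0;
    int valueIndex = 0;
    final int[] values = new int[NUM_OF_VALUES];

    // Feed one character, as getSerialData() does for each byte it reads.
    void feed(char c) {
        if (c >= '0' && c <= '9') {
            // Shift the digits collected so far one place left, then add the new digit.
            tempValue = tempValue * 10 + (c - '0');
        } else if (c == ',') {
            // A comma ends the current value; the next digits belong to the next slot.
            store();
            valueIndex++;
        } else if (c == 'n') {
            // 'n' ends the message; start again at slot 0 for the next one.
            store();
            valueIndex = 0;
        }
    }

    // Save tempValue into the current slot and reset it.
    // The bounds check is an addition, not present in the Arduino code.
    private void store() {
        if (valueIndex < NUM_OF_VALUES) {
            values[valueIndex] = tempValue;
        }
        tempValue = 0;
    }

    // Convenience helper: run a whole message through a fresh parser.
    static int[] decode(String message) {
        DigitParserDemo parser = new DigitParserDemo();
        for (char c : message.toCharArray()) {
            parser.feed(c);
        }
        return parser.values;
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(decode("440,12n"))); // prints [440, 12]
    }
}
```

Feeding "440" one character at a time gives 4, then 44, then 440, which is why tempValue is multiplied by 10 before each new digit is added.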

Processing Code:

import processing.serial.*;

int NUM_OF_VALUES = 1; /** YOU MUST CHANGE THIS ACCORDING TO YOUR PROJECT **/

Serial myPort;

String myString;

int PORT_INDEX = 1;

// This is the array of values you might want to send to Arduino.

int values[] = new int[NUM_OF_VALUES];

void setup() {

size(500, 500);

background(0);

printArray(Serial.list());

myPort = new Serial(this, Serial.list()[ PORT_INDEX ], 9600);

// check the list of the ports,

// find the port "/dev/cu.usbmodem----" or "/dev/tty.usbmodem----"

// and replace PORT_INDEX above with the index of the port

myPort.clear();

// Throw out the first reading,

// in case we started reading in the middle of a string from the sender.

myString = myPort.readStringUntil( 10 ); // 10 = '\n' Linefeed in ASCII

myString = null;

}

void draw() {

background(0);

// changes the values

// for (int i=0; i<values.length; i++) {

// values[i] = i; /** Feel free to change this!! **/

// }

// map the horizontal mouse position to a frequency in Hz for tone()

values[0] = int(map(mouseX, 0, width, 220, 2000));

// if (mousePressed) {

// values[0] = 'H';

// } else {

// values[0] = 'L';

// }

// sends the values to Arduino.

sendSerialData();

// This causes the communication to become slow and unstable.

// You might want to comment this out when everything is ready.

// The parameter 200 is the echo interval in frames:

// an echo is requested once every 200 frames.

// A higher number means less frequent feedback from Arduino

// but more stable communication.

echoSerialData(200);

}

void sendSerialData() {

String data = "";

for (int i=0; i<values.length; i++) {

data += values[i];

//if i is less than the index number of the last element in the values array

if (i < values.length-1) {

data += ","; // add splitter character "," between each values element

}

//if it is the last element in the values array

else {

data += "n"; // add the end of data character "n"

}

}

//write to Arduino

myPort.write(data);

}

void echoSerialData(int frequency) {

//write character ‘e’ at the given frequency

//to request Arduino to send back the values array

if (frameCount % frequency == 0) myPort.write('e');

String incomingBytes = "";

while (myPort.available() > 0) {

//add on all the characters received from the Arduino to the incomingBytes string

incomingBytes += char(myPort.read());

}

//print what Arduino sent back to Processing

print( incomingBytes );

}
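To see what actually travels over the serial port in Exercise 2, the message built by sendSerialData() can be reproduced in plain Java. This is only an illustrative sketch: the class SendFrameDemo and the helper mouseToFrequency are hypothetical, and the idea that the mouse position is meant to be rescaled to a range of 220 to 2000 Hz is an assumption based on the bounds that appear in the sketch's map() call.

```java
// Hypothetical stand-alone demo of the Processing-to-Arduino message format.
class SendFrameDemo {

    // Builds the same string as sendSerialData():
    // values joined by "," and terminated by the end-of-data character "n".
    static String encode(int[] values) {
        StringBuilder data = new StringBuilder();
        for (int i = 0; i < values.length; i++) {
            data.append(values[i]);
            data.append(i < values.length - 1 ? "," : "n");
        }
        return data.toString();
    }

    // Assumed mapping: horizontal mouse position 0..width onto lowHz..highHz,
    // using a linear rescale and truncating the result to an int.
    static int mouseToFrequency(int mouseX, int width, int lowHz, int highHz) {
        float t = (float) mouseX / width;
        return (int) (lowHz + (highHz - lowHz) * t);
    }

    public static void main(String[] args) {
        // Mouse in the middle of a 500-pixel-wide window.
        int frequency = mouseToFrequency(250, 500, 220, 2000);
        System.out.println(frequency);                    // prints 1110
        System.out.println(encode(new int[] {frequency})); // prints 1110n
        System.out.println(encode(new int[] {3, 7}));      // prints 3,7n
    }
}
```

With a single value the message is just the digits followed by "n", which is why the Arduino side only needs the digit and 'n' cases when NUM_OF_VALUES is 1.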