Introduction to Deepfake

Academic research related to Deepfake lies predominantly within the field of computer vision, a subfield of computer science often grounded in artificial intelligence that focuses on computer processing of digital images and videos. An early landmark project was the Video Rewrite program, published in 1997, which modified existing video footage of a person speaking to depict that person mouthing the words contained in a different audio track.[6] It was the first system to fully automate this kind of facial reanimation, and it did so using machine learning techniques to make connections between the sounds produced by a video’s subject and the shape of their face.

Modern research focuses on creating more realistic and natural-looking images. The term Deepfake originated around the end of 2017 from a Reddit user named “deepfakes”. Deepfake content was quickly banned by YouTube and other websites, because people used source material maliciously to create pornographic videos and many celebrities were threatened by it. Deepfake then faded from the entertainment scene.

How does Deepfake work?

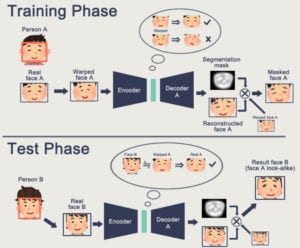

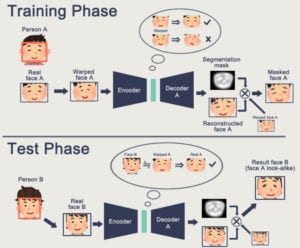

Principle: Train a neural network to restore a distorted version of one person’s face to the original face, with the expectation that the trained network can then restore any face to the face of that person.
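The principle can be illustrated with a toy example. The sketch below is not the faceswap implementation: it assumes a "face" is just a list of four pixel values, replaces the neural network with a single linear layer, and uses random pixel noise as the distortion. All names (`distort`, `train_restorer`, `restore`) and all numbers are hypothetical and chosen only for illustration.

```python
import random


def distort(face, rng, amount=0.5):
    """Return a randomly perturbed copy of the face (a stand-in for warping)."""
    return [p + rng.uniform(-amount, amount) for p in face]


def train_restorer(target_face, steps=5000, lr=0.1, seed=0):
    """Fit a linear layer that maps distorted copies back to target_face."""
    rng = random.Random(seed)
    n = len(target_face)
    weights = [[0.0] * n for _ in range(n)]
    bias = [0.0] * n
    for _ in range(steps):
        x = distort(target_face, rng)
        for i in range(n):
            predicted = sum(w * xj for w, xj in zip(weights[i], x)) + bias[i]
            error = predicted - target_face[i]
            # Gradient step on the squared reconstruction error.
            for j in range(n):
                weights[i][j] -= lr * error * x[j]
            bias[i] -= lr * error
    return weights, bias


def restore(model, face):
    """Apply the trained layer to any input face."""
    weights, bias = model
    return [sum(w * xj for w, xj in zip(row, face)) + b
            for row, b in zip(weights, bias)]


def distance(a, b):
    """Euclidean distance between two faces."""
    return sum((p - q) ** 2 for p, q in zip(a, b)) ** 0.5


face_a = [0.9, 0.1, 0.8, 0.2]   # hypothetical "person A"
face_b = [0.2, 0.7, 0.3, 0.9]   # hypothetical "person B"

model_a = train_restorer(face_a)
swapped = restore(model_a, face_b)

print("B vs A before:", distance(face_b, face_a))
print("B vs A after: ", distance(swapped, face_a))
```

The model has only ever been trained to output person A's face, so when it is given person B's face it still "restores" it to something very close to A. A real system would work on full images with a deep network, which is where the problems of sharpness, pose, and training data come from.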

Problems:

- The sharpness of the picture declines under the algorithm.

- Faces at some unusual angles cannot be recognized.

- The quality of the generated faces depends heavily on the training material.

- The generated face does not fit the body well in videos.

Improvement: GAN

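A generative adversarial network (GAN) trains two networks against each other: a generator that produces images and a discriminator that tries to tell generated images from real ones. Tero Karras, Timo Aila, Samuli Laine, and Jaakko Lehtinen developed a new training method for GANs, known as progressive growing.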

On their website, they describe the method as follows: “We describe a new training methodology for generative adversarial networks. The key idea is to grow both the generator and discriminator progressively: starting from a low resolution, we add new layers that model increasingly fine details as training progresses. This both speeds the training up and greatly stabilizes it, allowing us to produce images of unprecedented quality.”
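The idea of growing resolution step by step can be sketched in a few lines. This is a simplified illustration, not the authors' code: it assumes images are plain 2D lists of numbers, and the helper names (`resolution_schedule`, `upsample_nearest`, `fade_in`) are hypothetical. The 4×4 to 1024×1024 range follows the linked paper; the blending weight `alpha` is raised gradually from 0 to 1 so that a newly added layer is faded in smoothly rather than switched on at once.

```python
def resolution_schedule(start=4, final=1024):
    """List the resolutions visited when doubling from start up to final."""
    resolutions = []
    r = start
    while r <= final:
        resolutions.append(r)
        r *= 2
    return resolutions


def upsample_nearest(image):
    """Double the width and height of a 2D list by repeating each pixel."""
    out = []
    for row in image:
        wide = [p for p in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


def fade_in(old_upsampled, new_output, alpha):
    """Blend the upsampled low-resolution output with the new layer's output.

    alpha = 0 uses only the old output; alpha = 1 uses only the new layer.
    """
    return [[(1 - alpha) * o + alpha * n for o, n in zip(row_o, row_n)]
            for row_o, row_n in zip(old_upsampled, new_output)]


print(resolution_schedule())

low_res = [[1.0, 2.0], [3.0, 4.0]]           # output of the existing layers
new_layer = [[3.0] * 4 for _ in range(4)]    # stand-in output of the new layer
blended = fade_in(upsample_nearest(low_res), new_layer, alpha=0.5)
print(blended[0])
```

During the fade-in phase the network's output is mostly the upsampled coarse image at first, so training continues from a stable starting point while the new layer learns the finer details.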

Drawbacks: A GAN introduces many unpredictable factors.

Latest debate: ZAO

ZAO is an application that uses Deepfake technology to swap faces. It maps a user-submitted frontal selfie onto clips from movies and TV programs, replacing the characters’ faces and generating realistic videos. The application went viral within a few days. However, the public cast doubt on the user agreement and worried about the safety of personal information. One provision in the agreement said that the portrait right holder grants ZAO and its affiliates “completely free, irrevocable, permanent, transferable and re-licensable rights” worldwide, including but not limited to the portrait rights contained in portraits, pictures, video materials, etc. Users had to accept the agreement before using the app. In China, pornography is strictly restricted, so the main concern is instead that facial payment is widely used. Although the company quickly changed the agreement and stated that facial payment technology cannot be broken with a photo, other potential threats may still emerge.

Presentation: https://drive.google.com/file/d/1CaJ6DsXWfC9OZxWvS1J8ed-MKRM6PZ70/view?usp=sharing

Sources

https://www.bbc.com/zhongwen/simp/chinese-news-49589980

https://en.wikipedia.org/wiki/Deepfake#Academic_research

https://github.com/deepfakes/faceswap

https://arxiv.org/abs/1710.10196