Concept

For my final project, I intended to create an immersive viewing experience.

Machine Learning Model: PoseNet

Process:

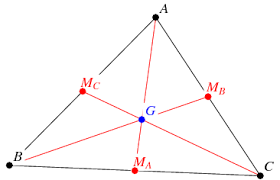

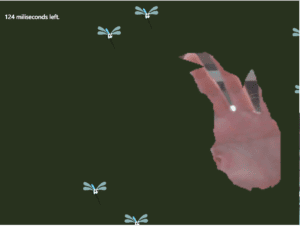

The final project is an extension of my midterm project idea. The prototype I created for the midterm detects the position of the center of the triangle formed by the two eyes and the nose. A large background picture moves when the user's face moves. I received many suggestions from my classmates and instructors, so I decided to make a 3D version. The project therefore has two major parts: the 3D model and the position detection system.

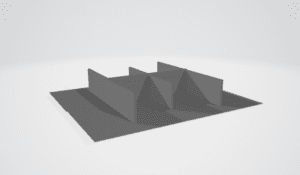

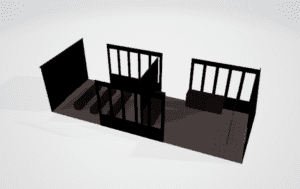

For the model part, p5.js only accepts OBJ and STL files, so I made some drafts in Maya and tried them with the loadModel() function. The room model works. I also tried other kinds of objects, such as stones and guns. I found that the model size is limited: the file can be no larger than about 1 MB. Another problem with loading 3D models in p5.js is the render material. The surface of the loaded model can only be defined by built-in p5.js functions such as normalMaterial(), which means that the colors and materials created in Maya are not carried over to the screen. In another class, one of my classmates used Three.js to create an interactive model online, which can render the model with its surface materials and colors. I am considering using a different tool in the future.
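
As a rough illustration of the loading step described above, here is a minimal p5.js sketch. The file path `assets/room.obj`, the helper `isLoadableModel`, and the constant `MAX_MODEL_BYTES` are placeholders invented for this example, and the 1 MB figure is only the limit I observed in my own tests, not a documented p5.js rule.

```javascript
// Assumption: roughly 1 MB, the limit observed in this project (not a documented p5.js limit).
const MAX_MODEL_BYTES = 1024 * 1024;

// Hypothetical helper: checks the file type p5.js can load and the observed size limit.
function isLoadableModel(filename, sizeBytes) {
  const ext = filename.split('.').pop().toLowerCase();
  return (ext === 'obj' || ext === 'stl') && sizeBytes <= MAX_MODEL_BYTES;
}

let roomModel; // filled in by preload()

function preload() {
  // Placeholder path; passing true asks p5.js to normalize the model's size.
  roomModel = loadModel('assets/room.obj', true);
}

function setup() {
  createCanvas(600, 400, WEBGL); // 3D models need the WEBGL renderer
}

function draw() {
  background(200);
  normalMaterial();            // built-in material; the Maya colors are not used
  rotateY(frameCount * 0.01);  // slow spin so the shape is visible
  model(roomModel);
}
```

The helper is only a quick check to run on a file before exporting it from Maya; p5.js itself calls `preload()`, `setup()`, and `draw()`.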

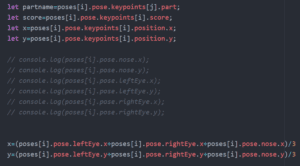

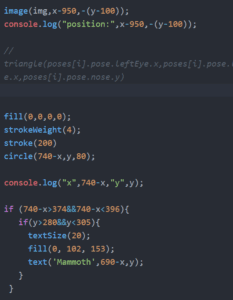

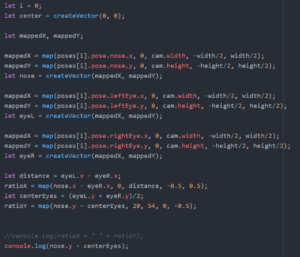

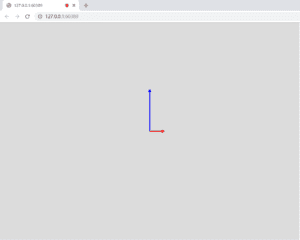

For the direction control part, my instructor suggested that I use vectors. I looked up the createVector() function and learned some properties of vectors online. The horizontal movement is controlled by the ratio between the x-coordinates of the two eyes and the center point, and the vertical movement is controlled by the distance between the center point of the eyes and the nose. PoseNet is sensitive, and the detected points jitter from frame to frame, so it is important to smooth the changes. I used the lerp() function so that the values sent to the screen change gradually instead of jumping. After a few tries I settled on 0.1 as the interpolation amount, the value that best smooths the motion while still keeping the accuracy and the rotation speed.
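
The idea above can be sketched in plain JavaScript as follows. This is only one possible reading of the description, not the exact code of the project: the function names `faceDirection` and `lerpValue` and the keypoint shape `{x, y}` are assumptions made for this example. `lerpValue` uses the same formula as the p5.js `lerp(start, stop, amt)` function, written out so the example runs without p5.js.

```javascript
const SMOOTHING = 0.1; // interpolation amount chosen in this project

// Same formula as p5.js lerp(start, stop, amt).
function lerpValue(start, stop, amt) {
  return start + (stop - start) * amt;
}

// Hypothetical helper: estimates the face direction from three keypoints,
// each given as an object like {x: 100, y: 100}.
function faceDirection(leftEye, rightEye, nose) {
  // Center of the triangle formed by the two eyes and the nose.
  const centerX = (leftEye.x + rightEye.x + nose.x) / 3;
  const eyeSpan = (rightEye.x - leftEye.x) || 1; // avoid dividing by zero
  // Horizontal: where the center sits between the eyes (0.5 means facing straight).
  const horizontal = (centerX - leftEye.x) / eyeSpan;
  // Vertical: distance from the midpoint of the eyes down to the nose.
  const eyeMidY = (leftEye.y + rightEye.y) / 2;
  const vertical = nose.y - eyeMidY;
  return { horizontal, vertical };
}

// Each frame, move the displayed value 10% of the way toward the new reading.
let smoothedHorizontal = 0.5;
function updateHorizontal(rawHorizontal) {
  smoothedHorizontal = lerpValue(smoothedHorizontal, rawHorizontal, SMOOTHING);
  return smoothedHorizontal;
}
```

In a p5.js draw loop, the smoothed values could then drive calls such as `rotateY()` and `rotateX()`. A smaller interpolation amount gives smoother but slower rotation, and a larger one reacts faster but shows more of the jitter.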

Initially I displayed a model of a room, but the problem is that the screen is limited. When the user moves their face to change the direction of the model, he or she can no longer view the full screen. Also, when the model rotates in the same direction as the face, the viewing experience is not very immersive.

To make the pointing direction clearer, I changed the model to a gun, so the user can control it to point in any direction.

Output:

Reflection:

Since my project is very experimental, it is not easy to display. But doing this project made me think about the design of user-directed interfaces and how they could be used in other areas. I have many ideas for improving my project or putting it to use, for example in games. I hope to keep working on this project.

Thanks to all the friends who helped me with the project and those who gave great suggestions. I have learned a lot this semester.