The concept comes from the manipulation of sound: I wanted to create an effect that helps people release their pressure. To achieve that goal, we needed to design a proper reason for users to let out their tension, since they may have concerns and worries that they want to express. In the actual game, we use a series of commands to make users experience something similar to the tension they feel in real life, and we end with a final scene in which they can scream freely and release all the pressure they have built up, whether in the game or in reality. For materials, we used cardboard to make the exterior, as it is relatively easy to get and gives a pure, colorful and visually impactful expression. It is a pity that we did not have time to use more materials to cover all the devices in a single shell and create a more unified impression. During brainstorming we went astray several times: at first we wanted both visual magnificence and interactivity, which led us away from the original goal that should have been our core. We soon realized this and returned to our initial idea. Some compromises were made, but the overall effect of the finished project is satisfying.

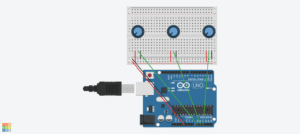

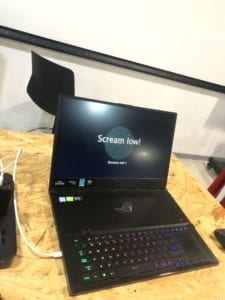

In the group project, I was responsible for the programming. The most difficult part was shifting between stages on the basis of time; I used a dedicated variable to mark the stage time and increased its value at the end of every loop. The user test session gave us valuable advice: we should be more informative, have more visual expression and add variation to the process, for example by randomizing the sequence of the standards. We also redesigned our final scene to make it more delightful and enhance the comforting effect. The first scene is a test scene in which users test their voice and the microphone and, at the same time, learn the standards used in the game that follows. We designed a red bar as the indicator of the amplitude and a rainbow bar below it as the chart. This scene serves as the demonstration part. The second scene, the actual game scene, consists of 5 big stages, each with 10 minor levels lasting 0.8 seconds each. This scene stands for the mechanical, stressful, repetitive work from which people may get their pressure. The final scene is a free-play scene designed to release the users' pressure, which we accomplish with colorful stripes and a vortex. At the IMA show, we also learned about some deficiencies of our project: the stress-building scene may be too long for some people, and the overall intention of the program may not be easily understood. However, we were also very glad to hear some words of delight and gratitude. We thank every user who tried our devices. Your support is indispensable.
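The time-based stage shifting described above can be illustrated with a small sketch. This is not the project's actual code, only a minimal Python illustration of the idea of a counter that is increased at the end of every loop and mapped to a stage and a level. The 5 stages, 10 levels and 0.8 seconds come from the description; the frame rate of 60 loops per second and all names (`FRAME_RATE`, `position`, `run_game_scene`) are assumptions made for the example.

```python
STAGES = 5             # big stages in the game scene (from the text)
LEVELS_PER_STAGE = 10  # minor levels per stage (from the text)
LEVEL_SECONDS = 0.8    # duration of one minor level (from the text)
FRAME_RATE = 60        # assumed number of loops per second (hypothetical)

FRAMES_PER_LEVEL = round(LEVEL_SECONDS * FRAME_RATE)
TOTAL_FRAMES = STAGES * LEVELS_PER_STAGE * FRAMES_PER_LEVEL


def position(stage_time):
    """Map the loop counter to (stage, level); None once the scene is over."""
    if stage_time >= TOTAL_FRAMES:
        return None
    level_index = stage_time // FRAMES_PER_LEVEL
    # divmod splits the running level index into stage and level within stage
    return divmod(level_index, LEVELS_PER_STAGE)


def run_game_scene():
    """Simulate the main loop and record each (stage, level) as it starts."""
    stage_time = 0  # the variable that marks the stage time
    visited = []
    while True:
        pos = position(stage_time)
        if pos is None:
            break  # the game scene is finished; the final scene would start
        if not visited or visited[-1] != pos:
            visited.append(pos)  # a new minor level has just begun
        # drawing and microphone handling would happen here
        stage_time += 1  # increased at the end of every loop
    return visited, stage_time
```

Counting loops instead of reading a clock keeps the logic simple, but the real duration of a level then depends on the loop actually running at the assumed rate; a clock-based timer would be the alternative if the loop speed varies.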

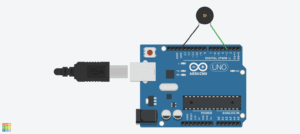

Our goal is to relieve people of everyday pressure. We define the sources of pressure, simulate them through metaphor and provide an optimistic scene as the ending of the story. Surprisingly, everybody interacts with it differently. For example, a child picked up the speaker first, as he thought it must be the most important part of the project, while most of the adults stayed with the computer screen. But the reactions are similar. Members of our audience, or so to speak our “patients”, may appear baffled or on guard at first, but they leave with a sincere smile. I really hope that our project brought relief to people who really need it. Society can still function properly without everybody being happy, yet we believe that, as a society made up of living humans rather than machines, we should pay more attention to the well-being of the people living in it, who may be troubled by the need to survive or the pressure of assignments. And although our attempt to reduce the burden that is artificially put on people's backs is neither essential nor dramatically effective, I think it is still worth a try, and I shall follow that path.