Introduction

In this week's recitation, we learned how to write functions and use arrays in Processing.

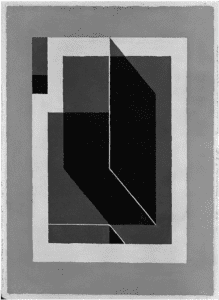

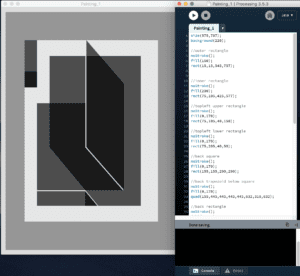

Part 1

For this part, we had to create our own design and turn it into a function, so that the same design could be drawn three times with different arguments.

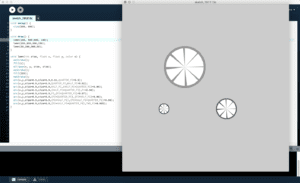
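The benefit of parameters can be sketched outside Processing in plain Java, the language Processing is built on. The helper below is hypothetical, and the names DesignBounds and bounds are made up for illustration. It assumes the design is drawn around its center with ellipseMode(CENTER), as in the code for Parts 3 and 4. Under that assumption, it works out the square that a design of a given size covers. One definition called with three sets of arguments then gives three differently placed and scaled results.

```java
// Hypothetical helper (not from the original sketch): one parameterized
// definition reused with different arguments, like the design function in Part 1.
class DesignBounds {
    // Assumes ellipseMode(CENTER): a circle of diameter `size` centered at (x, y)
    // spans x - size/2 .. x + size/2 horizontally, and likewise vertically.
    // Returns {left, top, right, bottom}.
    static float[] bounds(float size, float x, float y) {
        float r = size / 2;
        return new float[] { x - r, y - r, x + r, y + r };
    }

    public static void main(String[] args) {
        // Three calls with different {size, x, y}: three different results, one function.
        float[][] calls = { {100, 200, 300}, {50, 400, 400}, {200, 600, 150} };
        for (float[] a : calls) {
            float[] b = bounds(a[0], a[1], a[2]);
            System.out.printf("size=%.0f at (%.0f, %.0f) covers x %.0f..%.0f, y %.0f..%.0f%n",
                    a[0], a[1], a[2], b[0], b[2], b[1], b[3]);
        }
    }
}
```

Changing only the arguments, never the function body, is what lets the same design be reused at any position and scale.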

Part 2

In this part, we used a for loop to draw 100 different versions of the design. I placed the loop in both void setup() and void draw().

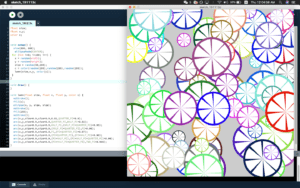
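A loop like the one in Part 2 can be sketched in plain Java as follows. This is a hypothetical, headless version, and RandomVariants and makeVariants are illustrative names. Each pass of the loop picks new random values, borrowing the ranges from the code for Parts 3 and 4. A fixed seed on java.util.Random makes the output repeatable. The small random(low, high) helper mimics Processing's random(), which returns a value from low up to, but not including, high.

```java
import java.util.Random;

// Hypothetical headless version of a Part 2 style loop: one loop body run
// `count` times, with new random values chosen on each pass.
class RandomVariants {
    // Mimics Processing's random(low, high): a value in [low, high).
    static float random(Random rng, float low, float high) {
        return low + rng.nextFloat() * (high - low);
    }

    // Returns `count` rows of {x, y, size}, assuming the same ranges as the
    // sketch: x in [0, width), y in [0, height), size in [50, 200).
    static float[][] makeVariants(int count, float width, float height, long seed) {
        Random rng = new Random(seed); // fixed seed so runs are repeatable
        float[][] variants = new float[count][];
        for (int i = 0; i < count; i++) {
            variants[i] = new float[] {
                random(rng, 0, width),
                random(rng, 0, height),
                random(rng, 50, 200)
            };
        }
        return variants;
    }

    public static void main(String[] args) {
        float[][] v = makeVariants(100, 800, 800, 42L);
        System.out.println("Generated " + v.length + " variants; first size = " + v[0][2]);
    }
}
```

In Processing itself, randomSeed() serves the same purpose as the fixed seed here if repeatable results are wanted.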

Parts 3 and 4

For these parts, I created arrays to hold the x position, y position, size, color, and speed of each drawing. I declared the arrays at the top of the sketch and filled them in void setup(). I then used a loop in void draw() to call my function for all 100 drawings, moving each one a little every frame.

Code

```java
int lemn = 100; // number of designs (Java allows this to share a name with the lemn() function)

// Parallel arrays: index i holds the data for design i
float[] x = new float[lemn];     // x positions
float[] y = new float[lemn];     // y positions
color[] c = new color[lemn];     // fill colors
float[] size = new float[lemn];  // diameters
float[] xD = new float[lemn];    // horizontal speed (pixels per frame)
float[] yD = new float[lemn];    // vertical speed (pixels per frame)

void setup() {
  size(800, 800);
  ellipseMode(CENTER);

  // Fill every array once with random starting values
  for (int i = 0; i < lemn; i++) {
    x[i] = random(width);
    y[i] = random(height);
    size[i] = random(50, 200);
    c[i] = color(random(255), random(255), random(255));
    xD[i] = random(-10, 10);
    yD[i] = random(-10, 10);
  }
}

void draw() {
  background(120);

  // Loop over every design (uses lemn instead of a hard-coded 100)
  for (int i = 0; i < lemn; i++) {
    // Draw design i, then move it
    lemn(size[i], x[i], y[i], c[i]);
    x[i] = x[i] + xD[i];
    y[i] = y[i] + yD[i];

    // Reverse direction when the center leaves the canvas
    if (x[i] > width || x[i] < 0) {
      xD[i] = -xD[i];
    }
    if (y[i] > height || y[i] < 0) {
      yD[i] = -yD[i];
    }
  }
}

// Draws one design: a colored circle with eight white slices inside it
void lemn(float size, float x, float y, color c) {
  noStroke();
  fill(c);
  ellipse(x, y, size, size);

  fill(255);
  float d = size * 0.9; // diameter of the white slices
  arc(x, y, d, d, 0.01, QUARTER_PI*0.9);
  arc(x, y, d, d, QUARTER_PI, HALF_PI*0.92);
  arc(x, y, d, d, HALF_PI, (HALF_PI+QUARTER_PI)*0.95);
  arc(x, y, d, d, (HALF_PI+QUARTER_PI), PI*0.96);
  arc(x, y, d, d, PI, (PI+QUARTER_PI)*0.97);
  arc(x, y, d, d, (PI+QUARTER_PI), (PI+HALF_PI)*0.98);
  arc(x, y, d, d, (PI+HALF_PI), (PI+HALF_PI+QUARTER_PI)*0.99);
  arc(x, y, d, d, (PI+HALF_PI+QUARTER_PI), TWO_PI*0.995);
}
```
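To check the bounce logic without opening a window, the update part of draw() can be modeled in plain Java. This is a hypothetical sketch: the class BounceSim and its step() method are illustrative names, not part of the original code, and drawing is left out. It keeps the same parallel-array layout, where index i of x, y, xD, and yD describes one design.

```java
// Hypothetical headless model of the update step in draw(): parallel arrays
// where index i holds the position and velocity of object i.
class BounceSim {
    final float[] x, y, xD, yD;
    final float width, height;

    BounceSim(float[] x, float[] y, float[] xD, float[] yD, float width, float height) {
        this.x = x; this.y = y; this.xD = xD; this.yD = yD;
        this.width = width; this.height = height;
    }

    // One frame: move every object, then reverse any velocity component
    // whose position has left the canvas (same checks as in the sketch).
    void step() {
        for (int i = 0; i < x.length; i++) {
            x[i] += xD[i];
            y[i] += yD[i];
            if (x[i] > width || x[i] < 0) xD[i] = -xD[i];
            if (y[i] > height || y[i] < 0) yD[i] = -yD[i];
        }
    }

    public static void main(String[] args) {
        // One object near the right edge, moving right at 10 pixels per frame.
        BounceSim sim = new BounceSim(new float[] {795}, new float[] {400},
                new float[] {10}, new float[] {0}, 800, 800);
        for (int frame = 1; frame <= 3; frame++) {
            sim.step();
            System.out.println("frame " + frame + ": x = " + sim.x[0] + ", xD = " + sim.xD[0]);
        }
    }
}
```

In main, the object moves to x = 805 on the first frame, so its xD flips to -10, and it then moves back to 795 and 785. Because step() loops over x.length, the same code handles 1 object or 100.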

Questions

- Code in void setup() runs only once, when the sketch starts, whereas void draw() runs repeatedly, once per frame, similar to how loop() repeats its code on an Arduino.

- Arrays help when an animation has a large number of separate elements. In this case, I had 100 different objects, each with its own position, size, color, and speed, and all of these properties were stored in the arrays I created, so a single loop could update every object. In a future project, if I needed to control many elements with individual variables, I could use arrays to keep the code short.
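The difference between setup() and draw() can be modeled with a tiny, hypothetical runner in plain Java. This is not how Processing is actually implemented. The real runtime also manages the window, input, and timing, and by default calls draw() about 60 times per second. The runner only shows the call pattern: setup() once, then draw() over and over.

```java
// Hypothetical, heavily simplified model of the setup()/draw() call pattern.
class MiniSketch {
    int setupCalls = 0;
    int drawCalls = 0;

    void setup() { setupCalls++; } // runs once: set the size, fill arrays, etc.
    void draw()  { drawCalls++; }  // runs every frame: redraw and move things

    // Runs for a fixed number of frames instead of forever.
    void run(int frames) {
        setup();
        for (int f = 0; f < frames; f++) {
            draw();
        }
    }

    public static void main(String[] args) {
        MiniSketch s = new MiniSketch();
        s.run(60);
        System.out.println("setup: " + s.setupCalls + ", draw: " + s.drawCalls);
    }
}
```

An Arduino program follows the same shape, with loop() in place of draw().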