Technology is not enough.

Consider the technology as a tool which, in itself, could do nothing.

Treat the technology as something that everyone on the team could learn, understand and explore freely.

– Red Burns

I found this quote in Daniel Shiffman’s introduction video on ml5. Red Burns was chair of the Interactive Telecommunications Program (ITP) at NYU. Her work has inspired a lot of people. I really like this quote, because it tells us not to get too caught up in shiny technology. As Daniel Shiffman said, “we have to remember that without human beings on the earth, what’s the point?” So whenever we look at algorithms or rules, we should also think about how we can make use of them and how we can teach the technology.

After exploring the ml5.js website a bit, I am really fascinated by all the possibilities it opens up. As someone who doesn’t have much knowledge about machine learning, I really like that ml5.js provides lots of pre-trained models for people to play around with, and that I can also retrain a model myself.

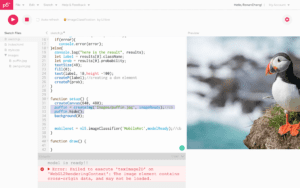

For example, when I was playing around with the image classifier example, I realized that it returns both a label for the picture and a confidence score. When I was watching Daniel Shiffman’s introduction to ml5.js, he used a puffin as the source image and the model didn’t get the right label. However, that was in 2018, and I wanted to try it out for myself to see whether the model had been updated since then. So I started to look at the example code provided on the ml5 website.
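To get a feel for what that output looks like, here is a minimal sketch. It assumes the classifier hands back an array of guesses, each an object with a `label` and a `confidence` between 0 and 1. The sample values and the helper names `topResult` and `formatResult` are made up for illustration; they are not part of ml5.js and not real model output.

```javascript
// Hypothetical sample shaped like the classifier output described above:
// an array of guesses, each with a label and a confidence between 0 and 1.
// These values are invented for illustration, not real model output.
const sampleResults = [
  { label: 'albatross', confidence: 0.62 },
  { label: 'puffin', confidence: 0.21 },
  { label: 'goose', confidence: 0.05 },
];

// Pick the guess with the highest confidence, without assuming the array is sorted.
function topResult(results) {
  if (!Array.isArray(results) || results.length === 0) {
    return null;
  }
  return results.reduce((best, r) => (r.confidence > best.confidence ? r : best));
}

// Turn one guess into a display string, showing the confidence as a percentage.
function formatResult(result) {
  if (result === null) {
    return 'No result';
  }
  const percent = (result.confidence * 100).toFixed(2);
  return `Label: ${result.label}, Confidence: ${percent}%`;
}

console.log(formatResult(topResult(sampleResults)));
```

In a real sketch, the array would come from the classifier instead of `sampleResults`, and the formatted string could be drawn on the page next to the image.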

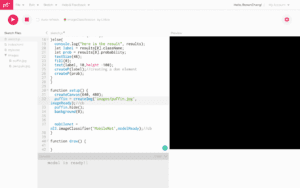

At first, the example code didn’t work.

After examining the code, I realized there was a bug on the website. On line 32, it should be “Images” instead of “images”.

With that fixed, I can now see the picture. However, I still can’t see the label and confidence score, and there is another error on the website. I’m not sure how to fix it right now, but maybe I will look into it later, once I know more about this framework.

I also learned that the image dataset used in the imageClassifier() example is ImageNet.