With the development of artificial intelligence, more and more attention has been drawn to the relationship between neural networks and the human brain. What are the similarities? Why do we call them neural networks? Can computers actually “think” like human beings?

As we all know, there are many things that a computer can do better than a human being, such as calculating the square root of a number or retrieving information from websites. At the same time, there are things that human brains are better at, such as imagination and inspiration. To combine the strengths of both, scientists invented neural networks, which simulate the human brain and help machines behave more like us. In my opinion, then, a neural network is a structure similar to the neurons of the human brain, used for processing information and making decisions.

One reason we want machines to reason more like human beings is that we develop technology to improve quality of life. To do that, technology needs to take the human point of view and understand human behavior better. A second reason is that human beings can learn from previous experience, and we want computers to have this ability too, so that they can learn on their own at a higher speed than we can.

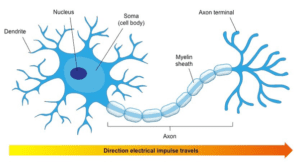

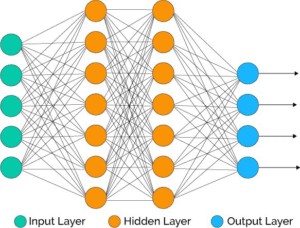

A biological neuron has three key parts: the dendrites (the input mechanism), the soma (the calculation mechanism), and the axon (the output mechanism). An artificial neuron has an equivalent structure: incoming connections, a linear calculation followed by an activation function, and output connections (see the pictures above).
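To make the parallel concrete, here is a minimal sketch of a single artificial neuron in Python. The function names, the sample numbers, and the choice of a sigmoid as the activation function are my own assumptions for illustration; real networks use many different activation functions.

```python
import math

def sigmoid(z: float) -> float:
    """Activation function (assumed here): squashes any number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias):
    """One artificial neuron, mirroring dendrites -> soma -> axon."""
    # Incoming connections: each input arrives with its own weight.
    # Linear calculation: weighted sum of the inputs plus a bias.
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    # Activation function: decides how strongly the neuron "fires".
    return sigmoid(z)

# Hypothetical example values, chosen only for illustration.
output = neuron(inputs=[0.5, -1.0, 2.0], weights=[0.4, 0.3, -0.2], bias=0.1)
print(round(output, 4))  # the value passed on through the output connections
```

The returned number plays the role of the axon's signal: it becomes one of the inputs to the neurons in the next layer.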

According to Yariv Adan, “Plasticity — one of the unique characteristics of the brain, and the key feature that enables learning and memory is its plasticity — ability to morph and change. New synaptic connections are made, old ones go away, and existing connections become stronger or weaker, based on experience. Plasticity even plays a role in the single neuron — impacting its electromagnetic behavior, and its tendency to trigger a spike in reaction to certain inputs.” Strengthening and weakening connections is also the key idea behind training artificial neural networks, where learning means adjusting the weights on the connections.
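A rough sketch of what "connections become stronger or weaker, based on experience" can look like in code is shown below. It is not taken from the quoted article: it assumes a single linear neuron with one weight, trained with a simple error-correction (gradient descent) rule, and the data, learning rate, and function name are made up for illustration.

```python
def train(samples, lr=0.1, epochs=50):
    """Learn one connection weight from (input, target) pairs."""
    w = 0.0  # the connection starts with no strength at all
    for _ in range(epochs):
        for x, target in samples:
            prediction = w * x
            error = prediction - target
            # Strengthen or weaken the connection in proportion to the error.
            w -= lr * error * x
    return w

# Hypothetical "experience": the target is always twice the input.
samples = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]
learned = train(samples)
print(learned)  # ends up very close to 2.0
```

Each pass over the examples nudges the weight a little, so the neuron's behavior changes with experience rather than being programmed in advance.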

However, although neural networks are inspired by human brains, the ML implementation of these concepts has diverged significantly from how the brain works.

First, neural networks are far less complex than the human brain. This is not just a matter of the number of neurons, but also of the internal complexity of each single neuron.

Second, power consumption. The brain is an extremely efficient computing machine, consuming on the order of 10 watts, which is about one third of the power consumption of a single CPU (Adan).

In short, although neural networks are inspired by human brains and there are indeed many similarities between the two, key differences remain: human brains are far more complex and far more energy-efficient than neural networks.

Sources:

Do neural networks really work like neurons?

https://medium.com/swlh/do-neural-networks-really-work-like-neurons-667859dbfb4f

What are Artificial Neural Networks?