Based on our understanding of “interactive experience”, my partner and I came up with three general ideas for our final project. Each idea conveys a specific theme, and we expect each to give the user an interactive experience.

A. Tree

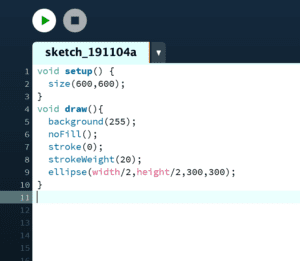

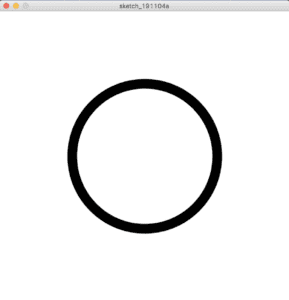

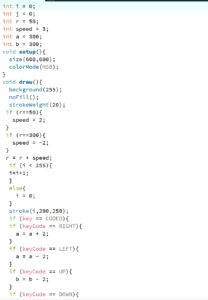

This project will be an art installation inspired by an exhibit at the WildLife Interactive Exhibition 2017. We will fill a real pot with soil and place it on a table. In Processing, we will draw a simulated shadow of the pot and project it onto the wall so that it coincides with the pot’s real shadow. To represent the water and light a tree needs to grow, we will provide a watering can fitted with a button wired to the Arduino, and a flashlight that works together with a photoresistor. When users press the button on the watering can to “water” the empty pot, an animation of the shadow of a growing sapling will appear in the Processing projection. When users shine the flashlight on the empty pot to simulate sunlight, the number of leaves will increase. At intervals, the “moisture content” and “light amount” values in the Processing sketch will decrease, and the leaves of the tree will wither or fall off. Users need to observe carefully and adjust the water and light to make the tree thrive.
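The growth and decay rules described above can be sketched as a small state model. This is only an illustration written in Python rather than Processing; the class name `TreeState`, the 0 to 100 scale, and every number (water and light increments, decay step, threshold, leaf cap) are assumptions of ours, not decisions we have made for the installation.

```python
from dataclasses import dataclass


@dataclass
class TreeState:
    """Hypothetical model of the tree; all scales and values are assumed."""
    moisture: int = 0   # 0 (dry) to 100 (saturated)
    light: int = 0      # 0 (dark) to 100 (full sun)
    leaves: int = 0

    def water(self, amount: int = 20) -> None:
        # Called when the watering-can button is pressed.
        self.moisture = min(100, self.moisture + amount)

    def shine(self, amount: int = 20) -> None:
        # Called when the photoresistor detects the flashlight.
        self.light = min(100, self.light + amount)

    def tick(self, decay: int = 5, threshold: int = 30,
             max_leaves: int = 50) -> None:
        # Both values drop a little on every time step.
        self.moisture = max(0, self.moisture - decay)
        self.light = max(0, self.light - decay)
        if self.moisture >= threshold and self.light >= threshold:
            # Enough water and light: the tree gains a leaf.
            self.leaves = min(max_leaves, self.leaves + 1)
        elif self.leaves > 0:
            # Neglected tree: a leaf withers and falls off.
            self.leaves -= 1
```

In the real sketch, `tick` would run on a timer and the drawing code would read `leaves` to decide how much foliage to show in the projected shadow.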

The potential challenges include how to draw a vivid image of the tree with Processing, how to connect the Arduino components to the physical objects, and how to transfer data between Arduino and Processing in real time. The theme of this project is “life”, and the target audience is people who seldom pay close attention to the subtle changes in the growth of the natural life around us. We expect that, through this simulated and accelerated way of experiencing life, users can gain a sense of awe towards fragile lives and feel an emotional impact when observing the growth of this simulated life.

B. Puppet

In this project, we will set up a miniature stage with stage curtains and lighting. On the stage, we will place a marionette connected by strings to Arduino-controlled servos mounted on the roof of the stage. The Arduino will control the gestures and movements of the marionette. On the wall behind the miniature stage, we will project the Processing image. We will draw the shadow of the puppet in Processing, and users can move the puppet’s movable joints with the mouse to put it into different poses. The data for these poses will be passed from Processing to Arduino. The real marionette on the stage will first cycle quickly through a series of random postures and finally settle into the pose that the user set in Processing. We will try to enhance the artistic appeal of the installation with music, lighting and so on.
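The puppet’s behaviour (a burst of random postures followed by the user’s pose) and the data that would travel from Processing to Arduino can be sketched roughly as follows. This is an illustration in Python, not our actual Processing code. The function names `pose_sequence` and `encode_pose`, the servo angle range of 0 to 180 degrees, and the comma-separated, newline-terminated message format are all assumptions we made for the example.

```python
import random

MIN_ANGLE, MAX_ANGLE = 0, 180   # assumed range of each servo, in degrees


def pose_sequence(target, n_random=5, rng=None):
    """Return n_random random poses followed by the user's target pose.

    A pose is a tuple with one servo angle per joint.
    """
    rng = rng or random.Random()
    joints = len(target)
    poses = [
        tuple(rng.randint(MIN_ANGLE, MAX_ANGLE) for _ in range(joints))
        for _ in range(n_random)
    ]
    poses.append(tuple(target))  # the "struggle" ends on the user's pose
    return poses


def encode_pose(pose):
    """Format one pose as a text line, e.g. '90,45,120' plus a newline.

    The format is hypothetical; the Arduino side would have to parse
    exactly the same layout.
    """
    return ",".join(str(angle) for angle in pose) + "\n"
```

Each encoded line would then be written to the serial port, one pose at a time, with a short pause between poses so the servos have time to move.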

Some of the problems we might encounter include how to accurately pass the data for the puppet’s poses from Processing to Arduino, how to build a mechanism that connects the servos to the puppet and moves it smoothly, and how to help users understand what they need to do. The specific audience for this project is people who, intentionally or compulsively, cater to the social roles imposed on them by forces in society. In this project, the user represents the forces that decide our social images, while the puppet on the stage represents ourselves, constrained by our assumed roles. The “struggle” that the puppet goes through before it stops in its final pose represents our mental struggle when pondering whether to meet social expectations. In the end, however, whether passively or actively, we make the choice that satisfies those forces, just as the puppet does. We hope that this artistic expression of the phenomenon will prompt users to reflect and respond emotionally during the interactive experience.

C. One Minute

In this project, the user will sit in a black box with a front opening, surrounded by a ring of light bulbs. We will use the projector to project the Processing screen onto the wall in front of the user. The image in Processing will be an animated starry night. The bulbs around the user will be connected to the Arduino, which controls whether they are on or off. The user will wear noise-cancelling headphones that play soft, soothing music. A series of questions will appear on the Processing screen. These questions will relate to the choices people make in a busy life, and they are designed to make users reflect on themselves. The user will answer the Yes/No questions with Arduino buttons, and each answer directly switches a bulb on or off and increases or decreases the number of stars in the Processing image. The logic goes like this: the more bulbs that are lit, the fewer stars there are. The experience starts with all the bulbs on (which means there are no stars on the Processing screen), and the whole experience will last one minute. After a minute, no matter how many lights are on, all the lights will go out at the same time, and the stars on the Processing screen will increase rapidly.
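The inverse relationship between bulbs and stars, together with the one-minute limit, can be sketched like this. It is an illustrative Python sketch rather than our Processing code. The number of bulbs, the number of stars per bulb, and the rule for which answer switches a bulb off are all assumptions; we have not yet decided how each question maps to a bulb.

```python
TOTAL_BULBS = 8      # assumed number of bulbs in the ring
STARS_PER_BULB = 25  # assumed stars gained for each bulb switched off
DURATION_S = 60      # the experience lasts one minute


def star_count(bulbs_on, total=TOTAL_BULBS, per_bulb=STARS_PER_BULB):
    """More lit bulbs means fewer stars; all bulbs on means no stars."""
    return (total - bulbs_on) * per_bulb


def apply_answer(bulbs_on, turns_bulb_off, total=TOTAL_BULBS):
    """Switch one bulb off or on, clamped to the range 0..total.

    Whether a Yes or a No turns a bulb off depends on the question,
    so the caller passes that decision in as a boolean.
    """
    change = -1 if turns_bulb_off else 1
    return max(0, min(total, bulbs_on + change))


def bulbs_at(elapsed_s, bulbs_on, duration=DURATION_S):
    """After the time limit every bulb goes out, whatever the answers were."""
    return 0 if elapsed_s >= duration else bulbs_on
```

With these assumed values, the session starts at `star_count(8) == 0`, and once the minute is up `bulbs_at` returns 0, so the star count jumps to its maximum of 200.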

Our potential challenges include how to make fuller use of Processing functions to achieve better artistic effects, how to ensure a clear logical relationship between the user’s answers and whether each bulb is on or off, and how to connect the Arduino to the bulbs. The idea for this project originates from a tagline for Earth Hour: “The fewer city lights, the brighter the stars will be”. In this project, the light bulbs stand for artificial lights, which represent people’s busy and impetuous lives; the stars stand for natural lights, which signify meditation and tranquility and represent the time for thinking that people set aside for themselves. In the project, the opposition between the two is expressed quantitatively. We want to use the black box and the noise-cancelling headphones to isolate users physically from their surroundings and give them a minute of quiet meditation. We hope that by asking users questions, we can prompt deep reflection and active thinking. The message we want to convey is: take some time alone to meditate, to think actively, and to explore the true meaning of life. Our intended audience is people who are unaware that they lack time for being alone, thinking, and making truly powerful choices.

Conclusion

As I wrote in the preparatory research and analysis, a successful interactive experience should have the following four qualities, each of which can be seen in our plans for the final project.

- The process of interaction should be clear to the users, so that they can get a basic sense of what they should do to interact with the device. (plans A, B, C)

- The design of the interactive experience should provide the user with various means of interacting with the device. The “contact area” between the user and the device should be large. For example, the users can change gestures or move to other locations, instead of only pressing a small button. (plan A)

- Various means of expression are needed, such as visuals and audio. The user can be truly engaged when receiving information through different senses. (plans B, C)

- The user should gain a sense of accomplishment, pleasure, surprise or entertainment from the experience. In addition, the experience can be thought-provoking and reflect facts of real life. (plans A, B, C)