title:

opus 1

Link to GitHub:

https://gist.github.com/gabrielchi/b91dac2eef3e0b33d1feb1e34c871305

Project Description

For my project, unlike some of the other students, I wanted to take an abstract and contemporary approach to audiovisual performance. I wanted to create an immersive audiovisual experience reminiscent of my favourite artists in the field, such as Ryoji Ikeda, but with my own twist. By drawing on my prior experience in music production and my moderate understanding of Max8, I challenged myself to create something original and artistically distinctive, pushing myself out of my comfort zone.

When developing a concept for the project, I approached it less as an art piece with a fully fleshed-out plot and story, and more as a creative exercise in a discipline I had never worked in before, hence the name opus 1 (work 1). The title plainly identifies this as my first work, but it also suggests that this is merely the first piece and that more “opuses” will follow.

Perspective and Context

Within the wider history of audiovisual art, opus 1 is best understood in relation to contemporary styles of the form. Since our previous projects mainly looked back to earlier artists in the field, such as Thomas Wilfred’s Lumia, I thought it would be an interesting creative exercise to focus exclusively on contemporary influences.

The visual style of the project is therefore heavily influenced by abstract visual artists such as Ryoji Ikeda, who uses a strictly black-and-white palette in some of his performances. Additionally, pieces such as loudthings by TelcoSystems were an important influence on the audio in the opening and closing sections of the project.

Development & Technical Implementation

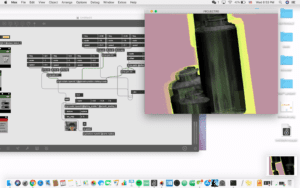

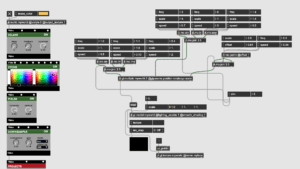

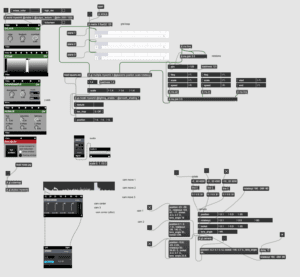

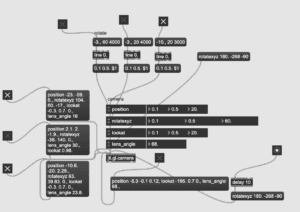

I already had an idea of how I wanted to execute the visuals. After discussing with the professor how TelcoSystems made loudthings, I decided to build a large cubic grid of 3D objects, place several cameras within the space, and switch between them live during the performance. With the professor's help, I realized that JavaScript (.js) could be used directly inside Max8, which let me generate a large number of 3D objects in a grid arrangement, mainly using for loops and nested for loops.
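To illustrate the nested-loop idea, here is a minimal Python sketch rather than the Max .js script itself, which is not reproduced here. It assumes an n x n x n grid with uniform spacing, centred on the origin; the function name `grid_positions` and its parameters are hypothetical. Each resulting (x, y, z) triple stands for the position of one 3D object in the cube.

```python
def grid_positions(n, spacing):
    """Return (x, y, z) positions for a hypothetical n x n x n cube of objects.

    The grid is centred on the origin so that a camera at (0, 0, 0)
    sits in the middle of the cube.
    """
    offset = (n - 1) * spacing / 2.0  # shift every axis so the cube's centre is (0, 0, 0)
    positions = []
    for i in range(n):          # x index
        for j in range(n):      # y index
            for k in range(n):  # z index
                positions.append((i * spacing - offset,
                                  j * spacing - offset,
                                  k * spacing - offset))
    return positions
```

For example, `grid_positions(4, 0.5)` yields 64 positions running from (-0.75, -0.75, -0.75) to (0.75, 0.75, 0.75). Centring the grid on the origin is a design choice that makes it easy to place one camera inside the cube and others around it.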

By studying the jit.gl.camera object, I was able to position cameras within and around the cube, which let me switch between different perspectives of the cube in 3D space. I also used jit.gl.skybox with a cubemap to surround the scene with a larger textured cube, mainly to give the 3D space more depth and visual texture; I used a texture of visual static to create an almost deep-space, starry-universe effect.
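The camera setup can be sketched in a similar way. This is a hypothetical Python illustration, not the patch itself: it assumes one camera inside the cube and four evenly spaced on a circle around it, and that each preset would be sent to jit.gl.camera as `position` and `lookat` attribute messages. The function names, the radius of 5 and the height of 1 are illustrative assumptions.

```python
import math


def orbit_positions(count, radius, height=0.0):
    """Return `count` camera positions evenly spaced on a circle around the origin."""
    positions = []
    for i in range(count):
        angle = 2 * math.pi * i / count  # equal angular steps around the y axis
        positions.append((radius * math.cos(angle), height, radius * math.sin(angle)))
    return positions


def _fmt(value):
    # Round away floating-point noise (cos(pi/2) is about 6e-17) and turn -0.0 into 0.
    return f"{round(value, 4) + 0.0:g}"


def camera_messages(position, target=(0.0, 0.0, 0.0)):
    """Format one preset as 'position' and 'lookat' messages (assumed attribute names)."""
    return [
        "position " + " ".join(_fmt(v) for v in position),
        "lookat " + " ".join(_fmt(v) for v in target),
    ]


# Hypothetical presets: camera 1 sits inside the cube looking down the -z axis,
# cameras 2-5 orbit the cube and look at its centre.
PRESETS = [((0.0, 0.0, 0.0), (0.0, 0.0, -1.0))] + [
    (pos, (0.0, 0.0, 0.0)) for pos in orbit_positions(4, radius=5.0, height=1.0)
]


def select_camera(number):
    """Return the messages for camera `number` (1-based, like 'camera 1', 'camera 2')."""
    position, target = PRESETS[number - 1]
    return camera_messages(position, target)
```

For instance, `select_camera(2)` returns `["position 5 1 0", "lookat 0 0 0"]`. Keeping the presets in one numbered list mirrors the idea of naming cameras 1, 2, 3 and switching between them with a single trigger each.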

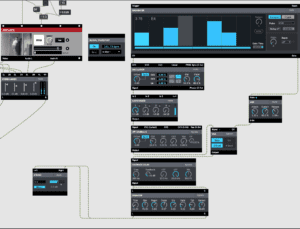

Finally, the method for creating and controlling the 3D objects was based on the 3D object exercise we did in class. This allowed me to load my own sourced 3D model and control its rotation on both the x and y axes. The rest of the patch mainly consisted of Vizzie effects used to alter the visuals during the performance.
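The x/y rotation control can be thought of as an angle that advances by a speed value every frame. The following Python sketch is a hypothetical illustration: it assumes speeds in degrees per second and that the resulting (x, y, z) triple would feed something like the `rotatexyz` attribute of the 3D object; `Spinner` and its fields are made-up names.

```python
from dataclasses import dataclass


@dataclass
class Spinner:
    """Hypothetical model of one object's x/y rotation, driven by two speed controls."""
    speed_x: float = 0.0  # degrees per second around the x axis
    speed_y: float = 0.0  # degrees per second around the y axis
    angle_x: float = 0.0
    angle_y: float = 0.0

    def step(self, dt):
        """Advance both angles by speed * elapsed seconds, wrapped into [0, 360)."""
        self.angle_x = (self.angle_x + self.speed_x * dt) % 360.0
        self.angle_y = (self.angle_y + self.speed_y * dt) % 360.0
        return (self.angle_x, self.angle_y, 0.0)  # z rotation is left at 0
```

For example, with `speed_x=90.0` and `speed_y=-45.0`, one call to `step(1.0)` gives (90.0, 315.0, 0.0). Wrapping with `%` keeps the angles bounded over a 10-minute performance, and changing the speeds live only affects how fast the angles advance, not where they currently are.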

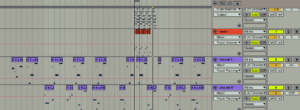

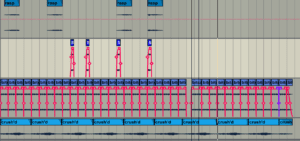

The audio was a large part of the project, and I produced the entire 10-minute score in Ableton. I found it extremely hard not only to fill 10 minutes, but also to keep the score from sounding boring and uniform. To address this, I divided it into contrasting sections. The opening and closing sections sound very similar to pieces such as loudthings: an abstract, surreal soundscape built from unconventional samples such as locust noises. In the middle, however, I added my own personal twist: a chord progression, later joined by a drop and percussion, to set that section apart from the rest. I created this mainly with a MIDI keyboard and the beat packs I use in my other music production.

Besides the issues above, I think the hardest aspect of the audio was mastering a 10-minute soundscape. Not only did the chord progression and drum sequence need to be mixed and mastered, but there were also many small elements throughout the soundscape, which took a lot of time and effort, and I still think I can improve on it. Making the soundtrack for this project was definitely a challenge and forced me to step outside my comfort zone.

For the performance patch, I wanted controlling the patch in real time to be as easy and intuitive as possible. When considering which elements I would be controlling during the performance, I realized that I needed far fewer controls than I had initially thought. I added a bang (trigger) for each camera angle, making sure to note the different camera names (e.g. camera 1, 2, 3, etc.). I also added controls for the speed of the 3D objects' movement on the x and y axes. Finally, I included the project's audio, the stereo mixer, and visual effects such as DELAYR and 2TONR for creating visual shifts during the performance.

Performance

Overall, I really enjoyed performing the project off campus. Not only did it feel as if we were genuinely presenting our pieces to the public, but it also brought a certain pressure that pushed me to make sure everything was perfect during the performance. I felt that holding the show outside school grounds challenged all of us to step outside our comfort zones.

My own performance went smoothly, with no technical difficulties. However, looking back at the recording, I can see room for improvement. Mainly, I noticed some latency between the buttons I was triggering and the projection. I did not notice this during the performance because my small display showed no such delay, so I did not account for the slight lag on the larger projected screen, which affected the section of my project with percussion.

Conclusion

This project was an extremely eye-opening experience for me, both creatively and technically. Through it, I realized that audiovisual performance is something I want to pursue further, as it has applications across many artistic mediums and disciplines. Making and performing this final project also showed me that I still have much to learn, and that there are many technical skills I must master to refine my work.

In the future, I would like to explore other audiovisual software that could offer a more in-depth and intuitive approach to the art form, as I sometimes found Max8 unnecessarily complicated. By taking into account both the mistakes and the accomplishments of this final project, I hope to keep developing my practice and continue making more audiovisual works.