For our soundscape project, we tried to recreate a crime scene. The idea simply popped into my head at first: I thought a crime scene could be a unique space in which to present a collection of sounds. Moreover, the different pieces of audio can reflect the emotions of the different people at the scene. The podcast Homecoming inspired me in some ways. I noticed how characters expressing themselves through audio alone can convey rich emotions, which helps build the overall atmosphere for listeners. A crime scene could also be an attractive theme because it is not something people commonly see in real life, so I believe it will bring more shock and surprise to listeners.

At first, we wanted to build our project around one main crime scene image with buttons that trigger different audio clips. However, we found this a bit inappropriate, because the sounds at any specific crime scene emerge in a certain order. For instance, the cause (in our project, the sound of the explosion) should come first, before the sound of the police and everything else. Using the template offered by Professor Moon, we decided to build the project around scrolling, so that we could set one fixed order for the clips. My partner, Christy, suggested that we set the scene within one specific story, so we chose the movie Léon: The Professional, in which the famous hitman Léon is killed in an explosion in order to keep the young girl Mathilda safe.

Once we had the basic structure of the project, we started collecting material. We gathered audio both by recording it ourselves and by searching online. For the dialogue, I asked a friend from the theatre club who is good at acting to record Mathilda's part, so that listeners could feel the message we were trying to convey. I used Audacity to add sound effects to the opening clip and to layer crowd noise in the background while the policeman is talking, among other edits. To make the project more dramatic, I also edited both the opening and closing audio in Audacity, hoping listeners would have a "whoa" feeling when listening. We didn't spend much time on the visual part: we found images online and edited them in Photoshop.

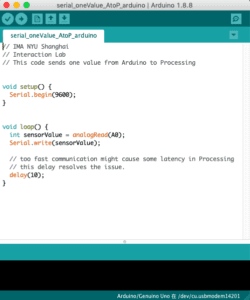

For this project, I did all the coding and didn't encounter any major problems, largely because we were given a coding template, which helped us a lot. The only thing that troubled me during the process was that the audio started playing again every time the code detected that `scrollPercentage` was larger than the threshold value, while I only wanted it to play once, the first time the percentage crossed the threshold. I talked to Professor Moon about it, and he simply added a boolean flag to the JS code, which fixed it. I remember using this technique last semester in Interaction Lab but didn't think of applying it here. By switching the flag between true and false, the audio could play only once. Another small issue was that if listeners scroll too fast, different clips can overlap, so I added an alert asking them to keep scrolling only after each piece has finished. The recording part took some time, but I was quite satisfied with the results; the Tascam recorder and Audacity are not hard to use.
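The play-once fix described above can be sketched as follows. This is a minimal, hypothetical reconstruction, not the code Professor Moon actually wrote: the names `makeOneShotCue`, `threshold`, and `play` are assumptions, and a counter stands in for `audio.play()` so the sketch runs outside a browser.

```javascript
// Hypothetical sketch: a boolean flag remembers whether a cue has already fired.
// makeOneShotCue, threshold and play are illustrative names, not from the template.
function makeOneShotCue(threshold, play) {
  let hasPlayed = false; // stays false until the threshold is first crossed

  return function onScroll(scrollPercentage) {
    // Without the flag, play() would run on every scroll event past the threshold.
    if (!hasPlayed && scrollPercentage > threshold) {
      hasPlayed = true; // flip the flag so later scroll events are ignored
      play();
    }
  };
}

// Usage: a counter stands in for audio.play() in this sketch.
let playCount = 0;
const explosionCue = makeOneShotCue(20, () => { playCount += 1; });

// Simulated scroll positions, including several above the threshold.
[10, 25, 30, 50, 22].forEach((p) => explosionCue(p));
console.log(playCount); // 1
```

On the real page, each clip would presumably get its own cue, with thresholds increasing in the order the sounds should appear, and all cues would be called from the scroll handler that computes `scrollPercentage`; how the template wires that up is an assumption here.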

I think our project turned out similar to what we expected, but the content is not as rich as I had hoped, because we didn't have much time and it was difficult to gather relevant audio. One of my ideas was only partially realized. I wanted to break the scene down so that every piece of audio had a corresponding visual element, and then pile those elements up to build the whole image of the crime scene, just like assembling a puzzle. We did use separate elements that together form the whole image, but we didn't design it the way I had envisioned, because the visual elements and the code would have taken more time. So we focused on the audio and kept everything else simple. If I had more time, I would add more audio to the page and probably work more on the visuals.