What is this?

RePictionary (read: Reverse Pictionary) is a fun two-player game in which users type in descriptions of images that are then generated by an AttnGAN, and guess each other’s image descriptions. The scoring is done using sentence similarity with spaCy’s word vectors.

What it looks like

Here are some images of the interface of the game. I used some retro fonts and the age-old marquee tags to give it a very nostalgic feel. Follow along as two great minds try to play the game.

Choose basic game setting to begin

First player gets to type in a caption to generate an image

Second player must guess the image that was GANarated

Scores are assigned based on how similar the guess was to the original caption

After repeating, the winner is announced. Hooray!

How does it work?

Image generation

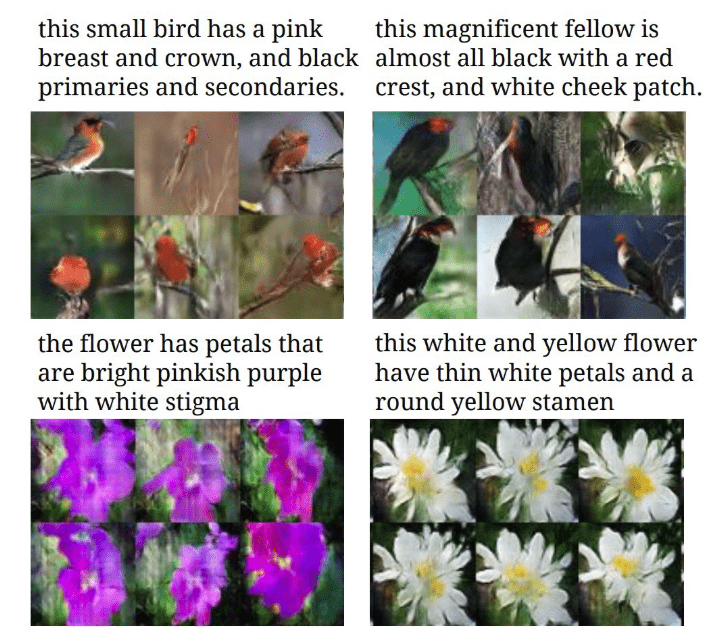

The image generation is done using an AttnGAN, as described in AttnGAN: Fine-Grained Text to Image Generation with Attentional Generative Adversarial Networks by Tao Xu, Pengchuan Zhang, Qiuyuan Huang, Han Zhang, Zhe Gan, Xiaolei Huang, Xiaodong He. It uses a PyTorch implementation of the model as done in this repo with additional modifications from this repo. A high-level architecture of the GAN is shown in the accompanying image. (source)

The model uses the MS COCO dataset, a popular image dataset used for object and image recognition along with a range of other computer vision tasks.

The scoring

For the scoring part, I used the age-old sentence similarity provided by spaCy. It averages the word vectors of each sentence and returns the cosine similarity of the two averaged vectors. To avoid giving high scores for semantic similarity alone and to place more importance on the actual content, I modified the input to the similarity function as described in this StackOverflow answer. The results looked pretty promising to me.

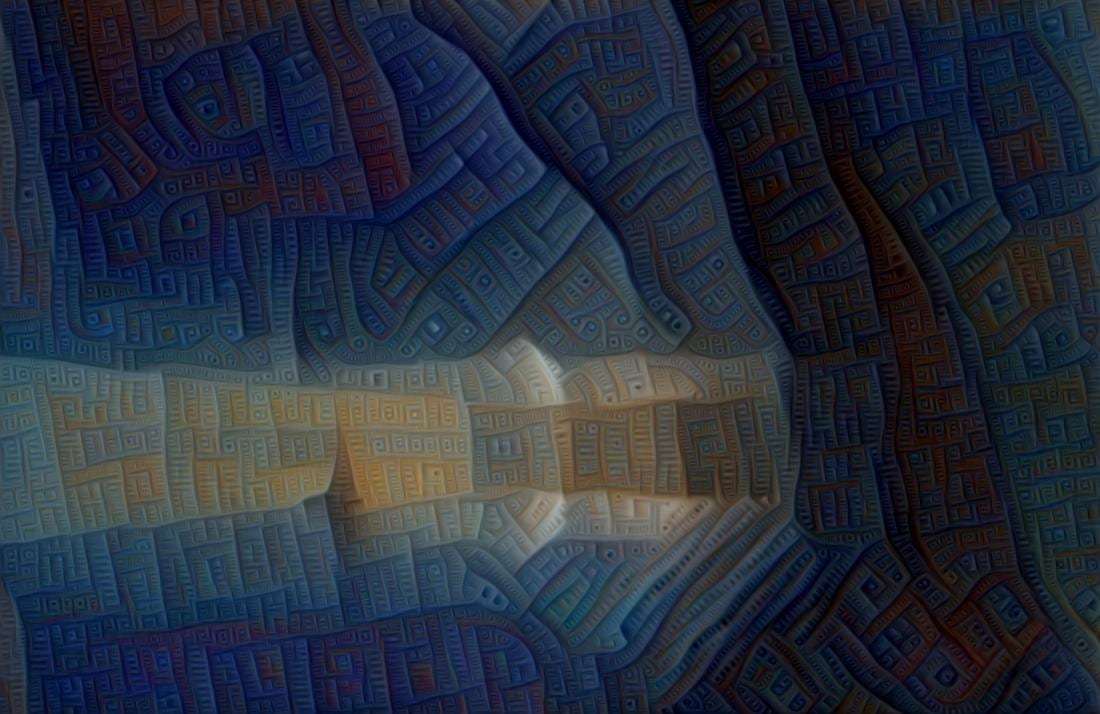
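To make the scoring idea concrete, here is a minimal sketch of averaged-vector cosine similarity with the input filtered down to content words. It is an illustration only: it uses toy 3-dimensional vectors and a hypothetical stop-word set in place of spaCy's pretrained vectors, and dropping stop words is my assumption about the kind of input modification involved, not a copy of the project's code or of the linked answer.

```python
import math

# Toy 3-dimensional word vectors (hypothetical values for illustration).
# spaCy's pretrained vectors have hundreds of dimensions.
TOY_VECTORS = {
    "a": [0.5, 0.5, 0.5],
    "on": [0.5, 0.5, 0.5],
    "the": [0.5, 0.5, 0.5],
    "dog": [1.0, 0.0, 0.0],
    "pizza": [0.0, 1.0, 0.0],
    "grass": [0.0, 0.0, 1.0],
    "table": [0.0, 0.2, 0.9],
}

# Hypothetical stop-word set; spaCy ships its own, much larger list.
STOP_WORDS = {"a", "on", "the"}


def sentence_vector(sentence, drop_stop_words=True):
    """Average the vectors of the known words in a sentence."""
    words = [w for w in sentence.lower().split() if w in TOY_VECTORS]
    if drop_stop_words:
        words = [w for w in words if w not in STOP_WORDS]
    if not words:
        return [0.0, 0.0, 0.0]
    return [sum(TOY_VECTORS[w][i] for w in words) / len(words) for i in range(3)]


def cosine(u, v):
    """Cosine similarity of two vectors; 0.0 if either has zero length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def similarity(caption, guess, drop_stop_words=True):
    """Score a guess against the original caption."""
    return cosine(
        sentence_vector(caption, drop_stop_words),
        sentence_vector(guess, drop_stop_words),
    )


if __name__ == "__main__":
    caption = "a dog on the grass"
    guess = "a pizza on the table"
    print("with stop words:   ", similarity(caption, guess, drop_stop_words=False))
    print("content words only:", similarity(caption, guess, drop_stop_words=True))
```

In this toy setup, the shared filler words pull the two unrelated sentences toward each other, so the unfiltered score comes out higher than the content-only score. That is the effect the filtering is meant to remove.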

The web interface

The entire web interface was created using Flask. The picture below is a rough sketch of the architecture and the game logic specific to the web application.

Details that are lacking here can be found by reading through the code; most of the relevant stuff is written in app.py. There are various routes, and the majority of the game data is passed to the server using POST requests. There is no database: game data such as scores are stored as global variables, mostly because this was a short-term project that did not really need one.
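As a rough illustration of that setup, the sketch below shows a Flask app that keeps game state in a module-level dictionary and receives game data through POST routes. The route names, form fields, and the word-overlap scoring are all hypothetical placeholders chosen for this example; they are not taken from the project's app.py.

```python
from flask import Flask, jsonify, request

app = Flask(__name__)

# Module-level game state used instead of a database.
# All names below are hypothetical, not the ones used in the project.
game = {"caption": None, "scores": {"player1": 0.0, "player2": 0.0}}


@app.route("/caption", methods=["POST"])
def submit_caption():
    # The describing player posts the caption that would be fed to the GAN.
    game["caption"] = request.form["caption"]
    return jsonify(status="ok")


@app.route("/guess", methods=["POST"])
def submit_guess():
    player = request.form["player"]
    if game["caption"] is None or player not in game["scores"]:
        return jsonify(error="no caption set or unknown player"), 400

    # Placeholder scoring: fraction of caption words that appear in the guess.
    # The actual game scores with spaCy sentence similarity instead.
    caption_words = set(game["caption"].lower().split())
    guess_words = set(request.form["guess"].lower().split())
    score = len(caption_words & guess_words) / max(len(caption_words), 1)

    game["scores"][player] += score
    return jsonify(score=score, scores=game["scores"])


if __name__ == "__main__":
    app.run(debug=True)
```

Keeping state in a global like this is fine for a single game on a single server process, but the state is lost on restart and is shared by everyone who connects, which is the trade-off accepted here in place of a database.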

Why I did this

I knew I wanted to explore the domain of text-to-image synthesis. Instead of making a rudimentary interface to just wow users with the fact that computers nowadays are making strides in text-to-image generation with GANs, I decided to gamify this. It’s a twist on a classic game we’ve all played as kids. Although the images generated are sometimes (highly) inaccurate, I’m happy that I’ve created a framework to potentially take this game further and make the image generation more accurate using a specific domain of images. Since the images were a bit off, I obviously followed the age-old philosophy “it is not a bug, it is a feature” and came up with the whole shtick about the AI being trippy.

Also, if you remember, I had the idea of generating Pokemon with this kind of model. After much reading and figuring out, I came to realise it might actually be possible. I intend to pursue this project on my own during the summer. I couldn’t really do much in this direction for the final project because the model was too complex to train and I did not have a rich dataset. However, I’ve found some nice websites from which I could scrape some data and potentially use that to train the Pokemon generator. I’m quite excited to see how it would turn out.

Potential Improvements

- Obviously, the image generation is very sloppy. I would like to train the model using a specific domain of images. For example, using only food images would be a fun way to proceed, provided that I am able to find a decent dataset or compile one on my own.

- The generation of the image takes roughly 2 seconds or so. I don’t think there’s a way to speed that part up but maybe it’d be nice to have a GIF or animation play while the image is being generated.

- Add a live score-tracking snippet on the side of the webpage to let users keep track of their scores on all pages.

- Try out other GAN models and see how they perform in text-to-image generation. Of particular interest would be StackGAN++, a predecessor of AttnGAN.

All the code is available on GitHub. The documentation is the same as the one on GitHub, with additional details and modifications.