Exercise 1: Make a Processing Etch A Sketch

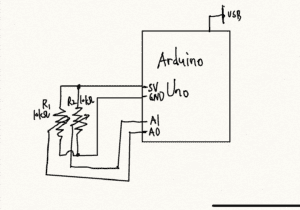

Components:

1 * Arduino Uno

1 * USB-B to USB-A cable

2 * 10kΩ Potentiometer

Several jumper cables

Video:

Process:

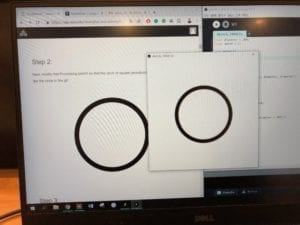

In this week’s lecture, we learned how to transfer values between Arduino and Processing, so I had little trouble understanding and modifying the code in the template. On my first trial, however, Processing failed to receive the correct values from Arduino: the numbers looked fine on the Arduino side, but every reading in Processing was zero. I consulted Leon, who told me to pay special attention to the format of the values sent from Arduino. The fix was to use println instead of print, so that each pair of values ends with a line break and Processing can read the two numbers together. After this correction, the project worked as intended.
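To illustrate why the line break matters, here is a minimal plain-Java sketch of a receiver that only accepts complete lines. It is not the course template: the class name `SerialLineDemo`, the method `parseStream`, and the comma-separated format are assumptions made for illustration, and the serial port is replaced by an ordinary string.

```java
import java.util.ArrayList;
import java.util.List;

class SerialLineDemo {
    // Hypothetical helper: splits a raw character stream into complete lines,
    // the way a reader that waits for '\n' would. Text after the last newline
    // is treated as incomplete and ignored.
    static List<int[]> parseStream(String stream, int expectedCount) {
        List<int[]> readings = new ArrayList<>();
        int start = 0;
        int newline;
        while ((newline = stream.indexOf('\n', start)) != -1) {
            String line = stream.substring(start, newline).trim(); // drops '\r'
            start = newline + 1;
            String[] parts = line.split(",");
            if (parts.length != expectedCount) {
                continue; // malformed line: skip it
            }
            int[] values = new int[expectedCount];
            try {
                for (int i = 0; i < expectedCount; i++) {
                    values[i] = Integer.parseInt(parts[i].trim());
                }
                readings.add(values);
            } catch (NumberFormatException e) {
                // non-numeric text: skip this line
            }
        }
        return readings;
    }

    public static void main(String[] args) {
        // Assumed sender output when the pair ends with println (adds "\r\n").
        String withPrintln = "512,300\r\n498,310\r\n";
        // Assumed sender output when only print is used: no line ever ends.
        String withPrintOnly = "512,300498,310";

        System.out.println(parseStream(withPrintln, 2).size());   // prints 2
        System.out.println(parseStream(withPrintOnly, 2).size()); // prints 0
    }
}
```

With print alone, the receiver never sees a complete line, so no readings are produced. That may be why the values in Processing stayed at their initial zero.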

Exercise 2: Make a musical instrument with Arduino

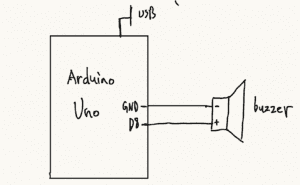

Components:

1 * Arduino Uno

1 * USB-B to USB-A cable

1 * Buzzer

Several jumper cables

Video:

Process:

Although the circuit for this project is simpler, I found the exercise as a whole a little harder than the previous one because the code was less clear. The first thing to note is that the template reserves space for 10 values, while this exercise needs only two, so the array size has to be changed to match the needs of each program. Next, the Processing template had a for loop in void draw(). I could not work out why this exercise would need a for loop, so I deleted it and simply set values[0] = mouseX and values[1] = mouseY. Thankfully, this change did not cause any errors. Finally, I got the buzzer to produce sound, but the tone was so low that we could not make any pleasant rhythm with that setting. I therefore doubled the frequency, which partially solved the problem. In the future, I hope to replicate the effect of showing the path of the mouse’s movement, as in the original version of the video. I should probably also refine the frequency settings to produce truly pleasant sounds and make the instrument more practical.
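The steps described above can be sketched in plain Java roughly as follows. This is an illustration under assumptions, not the actual template code: the names `NUM_OF_VALUES`, `packMouse`, `toMessage`, and `doubledFrequency` are hypothetical, the comma-separated message ending in a newline is an assumed format, and using the first value directly as a frequency in Hz is a guess about how the buzzer was driven.

```java
class MouseToToneDemo {
    // Assumed array size: two values (x and y) instead of the template's 10.
    static final int NUM_OF_VALUES = 2;

    // Stores the mouse position in the values array, mirroring
    // values[0] = mouseX and values[1] = mouseY.
    static int[] packMouse(int mouseX, int mouseY) {
        int[] values = new int[NUM_OF_VALUES];
        values[0] = mouseX;
        values[1] = mouseY;
        return values;
    }

    // Formats the values as one line: commas between values, and a newline
    // after the last one so the receiver knows the message is complete.
    static String toMessage(int[] values) {
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < values.length; i++) {
            sb.append(values[i]);
            sb.append(i < values.length - 1 ? ',' : '\n');
        }
        return sb.toString();
    }

    // Doubles the raw value before it is used as a frequency (assumed to be
    // in Hz), which raises the pitch by one octave.
    static int doubledFrequency(int rawValue) {
        return rawValue * 2;
    }

    public static void main(String[] args) {
        int[] values = packMouse(220, 140);
        System.out.print(toMessage(values));             // prints 220,140 and a newline
        System.out.println(doubledFrequency(values[0])); // prints 440
    }
}
```

Doubling shifts every pitch up by an octave but keeps the mapping linear in the mouse position, so a finer mapping may still be needed for pleasant-sounding notes.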