Playing with an ml5.js example

This was my first time using the ml5 library, and I started with the imageClassifier() example. In my daily life, I've seen and used many applications of image classification and recognition technology, such as Taobao, Google Translate and Google's image recognition, and I've been curious about how it works for some time. Luckily, this ml5 example has shed some light on the question.

Technical part

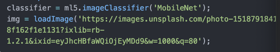

ml5.imageClassifier() provides a pre-trained model that can classify many different kinds of objects, and the ml5 library loads this model from the cloud. In the example's sketch.js, the first thing the program does is declare a variable to hold the image classifier (which will be initialized with MobileNet), and then declare a variable to hold the image to be classified.


After that, it initializes the image classifier with MobileNet and loads the image.

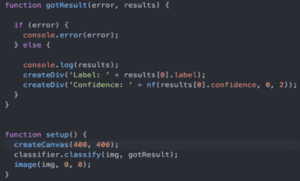

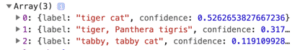

It then defines a function that receives the result of the classification, or logs any errors to the console. (I wasn't sure at first whether this function counts as a promise; it is actually an error-first callback, which ml5 calls once the classification has finished.) Its output includes not only the predicted labels but also a confidence score showing how confident the model is in each label. Finally, the sketch uses p5.js to display the output on the page.
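To make the callback-versus-promise distinction concrete, here is a minimal sketch in plain JavaScript that runs without a browser. It does not use ml5 at all: `fakeClassify` is a hypothetical stand-in for the classifier's classify call, the labels and scores are made up, and the result shape (an array of `{ label, confidence }` objects, most confident first) is an assumption modeled on the kind of output the example prints. `gotResult` follows the same error-first callback pattern as the example, and the hypothetical `classifyAsync` shows how the same call could be wrapped in a promise.

```javascript
// Hypothetical stand-in for the classifier's classify(img, callback) call.
// It does NOT call ml5; it just returns fixed, made-up scores.
function fakeClassify(imageName, callback) {
  // Simulate asynchronous work, like a model running in the browser.
  setTimeout(() => {
    if (!imageName) {
      // Error-first convention: report the problem in the first argument.
      callback(new Error("No image to classify"), null);
      return;
    }
    const results = [
      { label: "robin", confidence: 0.12 },
      { label: "goldfinch", confidence: 0.81 },
      { label: "house finch", confidence: 0.07 },
    ];
    // Sort from most to least confident before handing results back.
    results.sort((a, b) => b.confidence - a.confidence);
    callback(null, results);
  }, 10);
}

// An error-first callback, the same shape as the example's result handler.
function gotResult(error, results) {
  if (error) {
    console.error(error.message);
    return;
  }
  const top = results[0];
  console.log(`Label: ${top.label}`);
  console.log(`Confidence: ${top.confidence.toFixed(2)}`);
}

// Wrapping the callback-based call in a Promise so it can be used with .then or await.
function classifyAsync(imageName) {
  return new Promise((resolve, reject) => {
    fakeClassify(imageName, (error, results) => {
      if (error) reject(error);
      else resolve(results);
    });
  });
}

// Callback style: the function is handed over and called later.
fakeClassify("bird.png", gotResult);

// Promise style: the call returns an object that settles later.
classifyAsync("bird.png").then((results) => {
  console.log(results.map((r) => r.label).join(", "));
});
```

The key difference is who holds the function: with a callback, you pass gotResult into the classify call and it is invoked when the work is done; with a promise, the call returns a value you attach handlers to afterwards.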

I assume this example builds on many machine learning techniques, such as deep learning, image processing and object detection. The pre-trained model behind it, MobileNet, is a convolutional neural network trained on the ImageNet dataset, so it must draw on a great deal of machine learning research. I will try to look into it in the future.

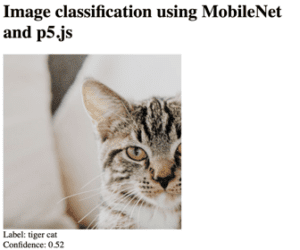

My output:

Questions:

One problem I encountered with this example, which I still don't know how to solve, is that the program always seems to crash when I use an image from a local file: it fails to load the image and often gives the wrong classification. I still don't understand why this happens. When I use an image from my computer instead of one online, the output of the program often looks like this:

My code:

Everything seems fine, though, when I load an image from an online URL.

Thoughts on potential future applications:

Even though there are already many applications of image classification algorithms similar to imageClassifier(), I still believe the potential of this technology hasn't been fully exploited. Most applications focus on shopping or searching, but I believe it could also be used in both medicine and the arts. For example, it might be used to classify symptoms in patients' medical images, such as CT scans or ultrasound images in hospitals, contributing to the development of future AI doctors. It could also help fight plagiarism in the art field, or power creative art projects like "which Picasso painting do you look like the most?" The imageClassifier() example, along with other outstanding ml5.js examples, has shown me the great potential of ml5 in all walks of life.

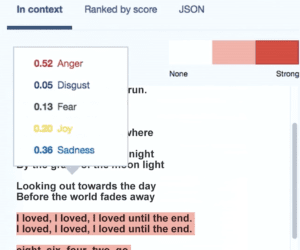

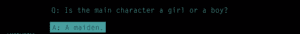

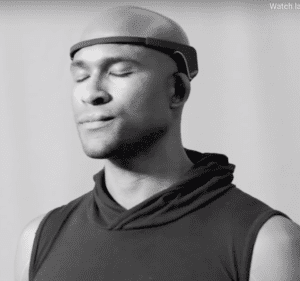

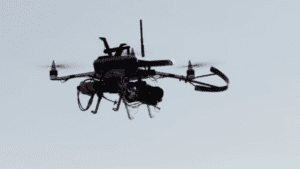

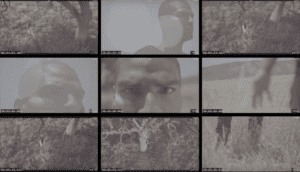

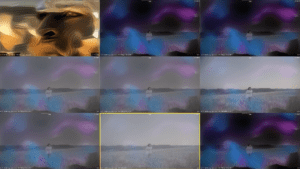

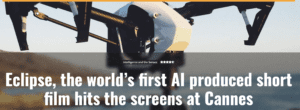

The film's makers put together a different kind of "film crew" made up of A.I. programs, including IBM's Watson, Microsoft's Ms_Rinna and Affectiva's facial recognition software, along with custom neural art technology and EEG data. Together, they produced "Eclipse," a striking, ethereal music video that looks like a combination of special effects, photography and live action. The film was conceived, directed and edited entirely by machines. In the behind-the-scenes video, the team members explain how they taught the machines to tell a story.
