I’ve always felt that the relationship between artificial neural networks and the human brain is just like the relationship between planes and birds, motorcycles and horses, or radar and bats. The inventions are all in some way inspired by the organisms, but they do not reproduce all of those organisms’ features or abilities. In my opinion, even though the invention of the neural network was inspired by the structure of neurons in the human brain, there are still fundamental differences between how artificial neurons and biological neurons work. Thus, despite the amazing development of AI technology and its great potential to aid humans in various fields, artificial intelligence will never be the same as the intelligence of a human brain, nor will it replace or beat humans in the future, because the two are fundamentally different things.

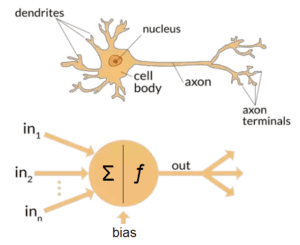

The artificial neural network behind AI is a simplified mathematical model of how the network of neurons in the human brain works. While a biological neuron receives signals through its dendrites and sends signals down its axon to stimulate other neurons and trigger them to react accordingly, an artificial neuron mimics this process with a function that receives a list of weighted input signals and outputs a signal if the sum of these weighted inputs, together with a bias, reaches a certain threshold (Richárd). However incomplete the model is, it still gives a computer the chance to mimic learning from experience, and it still offers a great many innovative applications that help improve our lives in different ways.
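To make the artificial side of that comparison concrete, here is a minimal sketch of a single artificial neuron in Python. The function name, the step-style output, and the example numbers are my own assumptions for illustration; real networks usually use smoother activation functions and many neurons at once.

```python
def artificial_neuron(inputs, weights, bias):
    """Return 1 (fire) if the weighted sum of the inputs plus the bias is positive, else 0."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    # Each input signal is scaled by the weight of its connection, then summed.
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    # The bias shifts the point at which the neuron starts to fire.
    return 1 if weighted_sum + bias > 0 else 0


# Hypothetical values: two input signals, equal weights, and a bias of -1.0.
fires = artificial_neuron([1.0, 1.0], [0.6, 0.6], -1.0)        # both inputs active
stays_quiet = artificial_neuron([1.0, 0.0], [0.6, 0.6], -1.0)  # only one input active
```

With these made-up numbers the neuron only fires when both inputs are active, which is roughly the "weighted signals in, one signal out" behavior described above.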

However, just because the model is inspired by the network of neurons in the human brain doesn’t mean the two work the same way. As far as I’m concerned, the learning of an artificial neural network and the learning of a human brain are fundamentally two different matters. In a human brain, the network of neurons is not set once and for all; instead, it changes all the time. When we learn, our brain is able to add or remove connections between neurons, and the strength of our synapses can be altered based on the task at hand. Artificial neural networks, on the other hand, have a predefined model, where no further neurons or connections can be added or removed. Even if the network is learning, or thinking, it’s doing so based on one predefined model. It needs to go through each and every condition set in the model every time, without gaining new connections or getting rid of old ones. Even if the output it gives may seem “creative” or “intelligent”, it’s clear that the artificial intelligence is only finding an optimal solution to a set of problems based on one set model.
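As a rough illustration of what "learning inside one predefined model" can look like, the sketch below trains a single artificial neuron on the logical AND function using the classic perceptron learning rule. This is a simplification I chose for brevity, not how modern networks are trained (they typically use gradient descent), and every name in it is hypothetical. The point is only that training adjusts the numbers on the existing connections while the number of connections never changes.

```python
def predict(weights, bias, inputs):
    """Fire (1) if the weighted sum plus the bias is positive, else stay quiet (0)."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def train(samples, epochs=20, learning_rate=1):
    """Perceptron learning rule: nudge the existing weights after each mistake."""
    # The structure is fixed up front: one weight per input, plus one bias.
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)
            # Only the values change; no connection is ever added or removed.
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


# Toy training data (an assumption for this example): the logical AND of two inputs.
AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train(AND_SAMPLES)
predictions = [predict(weights, bias, inputs) for inputs, _ in AND_SAMPLES]
```

After training, `predictions` matches the AND targets, yet `weights` still has exactly two entries, the same two connections the neuron started with.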

To sum up, in my opinion, since the ways artificial neural networks and human brains “learn” and act upon their “intelligence” are fundamentally different, the artificial intelligence of today is still a completely different matter from human intelligence. However diverse or creative its works may appear to be, it’s still just finding an optimal solution to a set of problems based on one set model. It’s not truly intelligent. Nonetheless, there is no denying that such technology can still help humans improve our lives to a great extent in various fields.

Source: https://towardsdatascience.com/the-differences-between-artificial-and-biological-neural-networks-a8b46db828b7

(Japenese Shuriken)
