Overview

For the midterm project, I developed an interactive two-player combat game with a ninja theme. Players use their body movements to control their characters and strike specific poses to trigger the characters' moves and skills as they battle each other. The game is called “Battle Like a Ninjia”.

Demo

Code:

https://drive.google.com/open?id=1WW0pHSV2e-z1dI86c9RLPnCte3GflbJE

Methodology

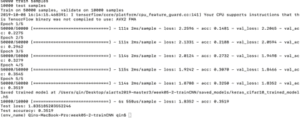

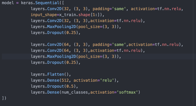

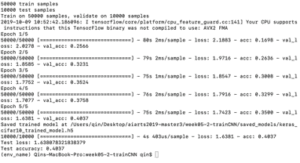

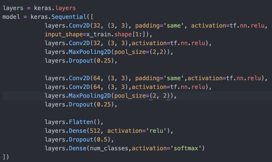

Originally, I wanted to use a hand-tracking model to train a hand-gesture recognition model that could recognize hand gestures and change the character's moves in the game accordingly. I spent days searching for an existing model I could use in my project, but I found that the available hand-tracking models were either too inaccurate or did not run on a CPU. Since I only had one week to finish the project, I didn't want to spend too much time searching for models and end up with nothing that worked. So I switched to the PoseNet model instead. I still hope to apply a hand-tracking model to the project in the future.

(hand-gesture experiments ⬆️, which didn’t work in my project)

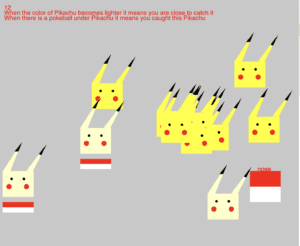

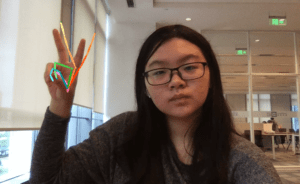

To track the players’ body movements and use them as input to the game, I used the PoseNet model to get the coordinates of each part of a player’s body. I first defined the conditions that each body part’s coordinates need to meet to trigger a character’s actions. I started by recording the coordinates of the relevant body parts while a specific pose was held. I then set a range around those coordinates and coded the game so that when all of these body parts’ coordinates fall within their ranges, the corresponding move of the on-screen character is triggered. In this way, I “trained” the program to recognize the player’s pose by comparing the coordinates of the player’s body parts with the pre-coded coordinates needed to trigger each move.

For instance, in the first picture below, when the player puts her hands together and makes a specific hand sign, like the one Naruto makes in the animation before he releases a skill, the Naruto figure in the game does the same thing and releases the skill. However, what the program recognizes is not actually the player’s hand gesture but the positions of the player’s wrists and elbows: when the Y coordinates of the player’s left and right wrists and elbows are the same (within the set range), the program recognizes the pose and produces an output.
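
The range check and the wrist/elbow condition described above can be sketched roughly as follows. This is a minimal illustration, not the original game code: it assumes the keypoints have already been converted into a dict mapping PoseNet part names (such as `leftWrist`) to `(x, y)` pixel coordinates, and the box format, the 20-pixel `Y_TOLERANCE`, and the helper names `all_in_ranges`, `ys_aligned`, and `is_hand_sign` are all hypothetical.

```python
from typing import Dict, List, Tuple

# Assumed input format (hypothetical): PoseNet part name -> (x, y) pixel coordinates.
Keypoints = Dict[str, Tuple[float, float]]
# Hypothetical box format for one part: (x_min, x_max, y_min, y_max) in pixels.
Box = Tuple[float, float, float, float]

# Hypothetical tolerance in pixels; noisy pose estimates are never exactly equal.
Y_TOLERANCE = 20.0


def all_in_ranges(kp: Keypoints, ranges: Dict[str, Box]) -> bool:
    """Return True only if every listed part lies inside its recorded range."""
    for part, (x_min, x_max, y_min, y_max) in ranges.items():
        if part not in kp:
            return False  # a missing part can never satisfy the condition
        x, y = kp[part]
        if not (x_min <= x <= x_max and y_min <= y <= y_max):
            return False
    return True


def ys_aligned(kp: Keypoints, parts: List[str], tol: float = Y_TOLERANCE) -> bool:
    """Return True if the Y coordinates of all listed parts are within `tol` of each other."""
    if any(p not in kp for p in parts):
        return False
    ys = [kp[p][1] for p in parts]
    return max(ys) - min(ys) <= tol


def is_hand_sign(kp: Keypoints) -> bool:
    """Hand-sign trigger: both wrists and both elbows at roughly the same height."""
    return ys_aligned(kp, ["leftWrist", "rightWrist", "leftElbow", "rightElbow"])


pose = {
    "leftWrist": (300.0, 250.0),
    "rightWrist": (340.0, 255.0),
    "leftElbow": (250.0, 260.0),
    "rightElbow": (390.0, 248.0),
}
print(is_hand_sign(pose))  # True
```

A tolerance is used instead of strict equality because the estimated coordinates jitter from frame to frame, so "the same Y" in practice means "within the set range".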

(using the relationship between the wrist coordinates as a condition to “train” the model to recognize a posture)

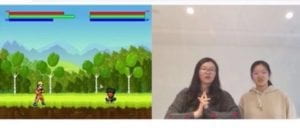

As the pictures above show, when a player performs a specific body gesture correctly and the program successfully recognizes it, the character in the game releases a certain skill, and the character’s defense and attack (the green and red charts) change accordingly. The screen is divided in half: Player A, standing in front of the left half of the screen, controls Naruto’s actions with his body movement, and Player B, standing in front of the right half, controls Obito. When the two characters battle each other, their HP changes according to their current attack and defense values.
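
One way to implement the split screen is to assign each detected pose to a player by which half of the frame it falls in. The sketch below is an assumption-laden illustration, not the game's actual logic: it assumes multi-pose detection returns a list of dicts mapping PoseNet part names to `(x, y)` coordinates in the same (possibly mirrored) frame that is displayed, and it uses the `nose` keypoint as a hypothetical horizontal anchor. The function name `assign_players` is also hypothetical.

```python
from typing import Dict, List, Optional, Tuple

# Assumed input format (hypothetical): PoseNet part name -> (x, y) pixel coordinates.
Keypoints = Dict[str, Tuple[float, float]]


def assign_players(poses: List[Keypoints], frame_width: float) -> Dict[str, Optional[Keypoints]]:
    """Map each detected pose to a player by which half of the frame it is in.

    Left half -> Player A (Naruto), right half -> Player B (Obito).
    """
    players: Dict[str, Optional[Keypoints]] = {"A": None, "B": None}
    midline = frame_width / 2
    for pose in poses:
        if "nose" not in pose:
            continue  # hypothetical anchor part is missing; skip this pose
        side = "A" if pose["nose"][0] < midline else "B"
        if players[side] is None:  # keep only the first pose found on each side
            players[side] = pose
    return players


detected = [{"nose": (500.0, 120.0)}, {"nose": (130.0, 140.0)}]
players = assign_players(detected, frame_width=640)
print(players["A"]["nose"], players["B"]["nose"])  # (130.0, 140.0) (500.0, 120.0)
```

Each player's pose can then be passed separately to the gesture checks, so a gesture made on the left side only ever moves Naruto.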

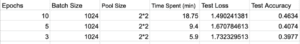

Experiments

During the process of building this game, I encountered many difficulties. I tried using the coordinates of the ankles to make the game react to the players’ foot movements. However, because of the webcam’s position, it was very difficult for it to capture the players’ ankles: a player would need to stand very far from the screen, which prevented them from seeing the game. Even when the model did detect the ankles, the coordinates were very inaccurate. The PoseNet model also proved not very good at telling the left wrist from the right wrist. At first I wanted the model to recognize when the player’s right hand was held high and then move the character to the right. However, I found that when only one hand was visible on the screen, the model could not tell right from left, so I had to program it to move the character right when the player’s right wrist is higher than the left wrist. Many compromises like these were made during the process.

If given more time, I would make more moves respond to the players’ gestures to make the game more fun. I would also build an instruction page that tells users how to interact with the game, such as exactly which posture triggers which move.
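
The wrist comparison described above can be sketched as follows. This is a minimal illustration, not the author's code: it assumes keypoints arrive as a dict mapping PoseNet part names to `(x, y, score)`, and that y grows downward as in image coordinates, so the "higher" wrist has the smaller y. The confidence cut-off `MIN_SCORE`, the dead zone `MIN_GAP`, the helper names, and the mirrored "left" rule are all hypothetical. Filtering on the per-keypoint confidence score is one way to ignore unreliable estimates, such as the distant ankles, rather than acting on them.

```python
from typing import Dict, Optional, Tuple

# Assumed input format (hypothetical): PoseNet part name -> (x, y, score).
# y grows downward in image coordinates, so a higher wrist has a smaller y.
Keypoints = Dict[str, Tuple[float, float, float]]

MIN_SCORE = 0.5  # hypothetical confidence cut-off for trusting a keypoint
MIN_GAP = 40.0   # hypothetical dead zone in pixels so small jitter does not move the character


def reliable(kp: Keypoints, part: str, min_score: float = MIN_SCORE) -> bool:
    """Return True if the part was detected with at least `min_score` confidence."""
    return part in kp and kp[part][2] >= min_score


def move_direction(kp: Keypoints) -> Optional[str]:
    """Return "right" if the right wrist is clearly higher, "left" for the mirrored case, else None."""
    if not (reliable(kp, "leftWrist") and reliable(kp, "rightWrist")):
        return None  # ignore frames where either wrist estimate is untrustworthy
    left_y = kp["leftWrist"][1]
    right_y = kp["rightWrist"][1]
    if left_y - right_y > MIN_GAP:  # right wrist is higher on screen
        return "right"
    if right_y - left_y > MIN_GAP:  # hypothetical mirrored rule: left wrist is higher
        return "left"
    return None


print(move_direction({"leftWrist": (100.0, 300.0, 0.9), "rightWrist": (400.0, 200.0, 0.9)}))  # right
```

Comparing the two wrists against each other, rather than checking one wrist's absolute position, means the rule still works when the player stands a little closer to or farther from the camera.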
