Conception and Design

Our original understanding of interaction in this project was that, to create an immersive experience, users should control the movement of the whale in the game with their bodies. Our final design decision was to have the user wear a helmet with a red front and to use the OpenCV library in Processing to track that specific shade of red within the webcam's view. The center of the red area is then mapped to the position of the user's head. We chose red over other colors because the camera could recognize it most easily, which made the tracking the most stable and accurate. The other option we considered was to put infrared LEDs on the user's head and around the wrists, then use light tracking to locate the user. The major problem with that approach is that infrared noise in the environment disturbs the recognition, which makes the mapping less accurate.
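The mapping from the tracked red area to a head position can be sketched as follows. This is a minimal illustration in plain Java, not the project's exact code: the class and method names (HeadMapping, toScreenX, toScreenY) are hypothetical. The scale factor of 2 reflects the appendix sketch, where the camera frame is 640 x 360 and the game window is 1280 x 720, and the x coordinate is mirrored so that the whale moves in the same direction as the player.

```java
// Hypothetical helper; only the arithmetic follows the sketch in the appendix.
class HeadMapping {
    // Map the horizontal centre of a bounding box found in the camera frame
    // to a mirrored x coordinate on the game screen.
    static float toScreenX(int boxX, int boxWidth, float scale, int screenWidth) {
        float centreX = boxX + boxWidth / 2f;
        return screenWidth - centreX * scale;
    }

    // Map the vertical centre of the bounding box to a screen y coordinate.
    static float toScreenY(int boxY, int boxHeight, float scale) {
        float centreY = boxY + boxHeight / 2f;
        return centreY * scale;
    }

    public static void main(String[] args) {
        // A 40 x 20 box whose top-left corner is at (300, 100) in the camera frame
        System.out.println(toScreenX(300, 40, 2f, 1280));
        System.out.println(toScreenY(100, 20, 2f));
    }
}
```

With these inputs the box centre is (320, 110) in camera coordinates, so the program prints 640.0 and 220.0.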

To make the experience even more immersive, we decided to make a whale suit for the user to put on. The suit was made from several IKEA shopping bags. We chose them first because their color resembles that of a whale, and second because they are recycled materials, which fits the theme we set for the game. In addition, the suit helps in case some players are dressed in red, which could greatly affect the tracking accuracy. There were other options, one of which was to buy a ready-made whale costume online. We turned this down because buying new materials would not fit the environmental protection theme of the game.

Fabrication and Production

The development process of our project involved two stages: game development, and implementation of the interaction and the costume.

In the first stage, I was mainly in charge of coding the game, which was developed from scratch. Our first success was a prototype. In this version, all the objects, from the whale and the garbage to the jellyfish, were represented by shapes in different colors. The garbage and jellyfish flowed from the right of the screen to the left, while the player used the arrow keys to move a circle and avoid colliding with other objects. The game ended when the circle crashed into a rectangle or other garbage. Everything worked well up to this point. In the next step, I tried to make the amount of garbage and jellyfish increase over time. My first option was to use arrays. Though I found a way to append new objects to an array through "obs = (Obstacles[]) append(obs, new Obstacles(width + random(40), random(100, height), random(4, 6)));", the game slowed down as more objects were appended to the array. I then reluctantly switched to an ArrayList. After images of the objects and the background were added, the game became extremely slow. After some searching, I learned that the image() function is much slower when it is called with five parameters, since the image then has to be scaled every time it is drawn. Instead, I should call resize() once at the beginning of the program so that each image already has the right size.
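The idea of growing the number of obstacles over time with an ArrayList can be sketched in plain Java as below. This is only an illustration under assumptions: ObstaclePool, Obstacle, and grow are hypothetical names, and the ten-second interval and two obstacles per step are taken from the moreBlocks() function in the appendix. In Processing, the current time would come from millis().

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch of time-based difficulty using an ArrayList.
class ObstaclePool {
    // Minimal stand-in for the game's obstacle class
    static class Obstacle {
        final float x;
        final float speed;

        Obstacle(float x, float speed) {
            this.x = x;
            this.speed = speed;
        }
    }

    final List<Obstacle> obstacles = new ArrayList<>();
    private final long intervalMs;
    private long lastGrowthMs;

    ObstaclePool(long intervalMs, long startMs) {
        this.intervalMs = intervalMs;
        this.lastGrowthMs = startMs;
    }

    // Adds perStep obstacles once more than intervalMs has passed since the
    // last growth step; returns how many obstacles were added.
    int grow(long nowMs, int perStep, float screenWidth) {
        if (nowMs - lastGrowthMs <= intervalMs) {
            return 0;
        }
        for (int i = 0; i < perStep; i++) {
            // new obstacles start at the right edge of the screen
            obstacles.add(new Obstacle(screenWidth, 5f));
        }
        lastGrowthMs = nowMs;
        return perStep;
    }

    public static void main(String[] args) {
        ObstaclePool pool = new ObstaclePool(10000, 0);
        pool.grow(5000, 2, 1280f);   // too early, nothing is added
        pool.grow(10001, 2, 1280f);  // interval has passed, two are added
        System.out.println(pool.obstacles.size());
    }
}
```

Because an ArrayList grows in place, adding an obstacle does not require creating a new array each time, which is what the append() approach does.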

In the implementation stage, it took us a while to find an appropriate object to track. As mentioned above, we considered using infrared LEDs, but they could easily be affected by other infrared light in the environment. I then discovered by accident that the OpenCV library in Processing also supports tracking a specific color, and afterwards found a helmet with a red front. After measuring the hue value of the red on the helmet, I was able to track this specific color using OpenCV. However, the position of the recognized spot wasn't stable, and the whale on the screen would jump from place to place. Eric helped me out by implementing an easing function that dampens the jumps caused by this instability.
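The easing idea can be sketched in plain Java as follows. This is a minimal illustration rather than the exact project code: the class and method names (Easing, ease) are hypothetical, while the easing factor of 0.05 matches the value used in the appendix. On every frame, the displayed position moves only a fraction of the way toward the newly detected position, so a single noisy reading cannot make the whale jump.

```java
// Hypothetical sketch of per-frame easing toward a noisy target position.
class Easing {
    // Move current a fraction (easing, between 0 and 1) of the way to target.
    static float ease(float current, float target, float easing) {
        return current + (target - current) * easing;
    }

    public static void main(String[] args) {
        float x = 0f;
        // The tracker suddenly reports x = 100; approach it over 60 frames.
        for (int frame = 0; frame < 60; frame++) {
            x = ease(x, 100f, 0.05f);
        }
        System.out.println(x); // close to, but still below, 100
    }
}
```

Note that the sketch in the appendix stores x and y as int, so fractional steps are truncated there; keeping the position as a float, as in this example, preserves small movements.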

During user testing, many people expressed great interest in our project and thought highly of the concept. However, most of them also complained that they didn't know the objectives of the game, especially what to do and what not to do. They also felt that the game lacked feedback, whether visual or audio. To address the first problem, we put a tutorial page at the beginning of the game that labels which objects to eat and which to avoid. However, the tutorial didn't seem to be very effective, especially during the IMA Show, where I still had to explain the rules verbally. Most users also didn't know how to control the whale correctly with their heads. As for the second piece of feedback, we added two vibration motors to the helmet. When the whale eats garbage, the motors vibrate and give the user physical feedback. This went well during the final presentation, but at the IMA Show some of the wires came loose and the vibration did not work.

Conclusions

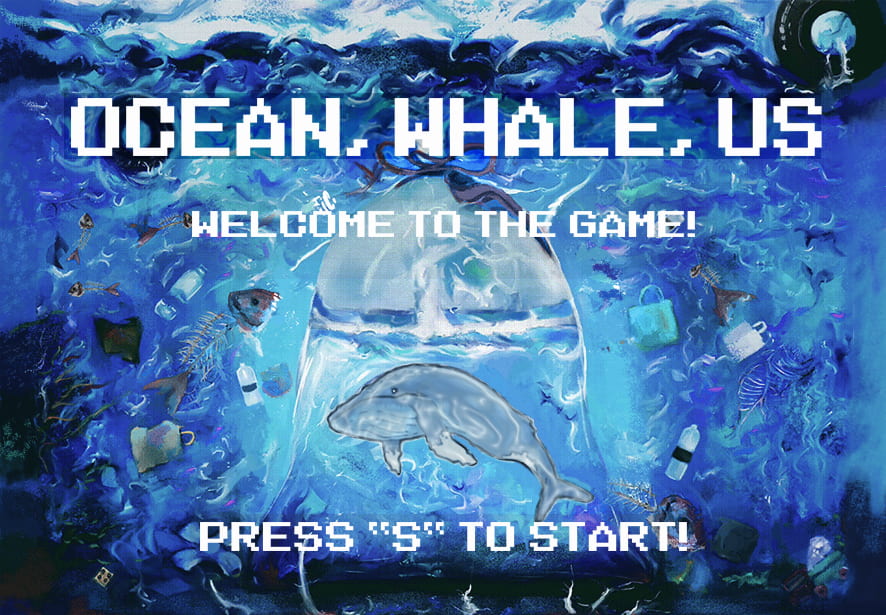

As I mentioned previously, the goal of my project was to create a motion-sensing game through which the user would feel like a whale struggling to avoid eating garbage in the ocean. It aligns with my definition of interaction in that it allows multiple forms of input and output: users move their bodies to control the whale, and they receive output from the game both visually and physically (through vibration). It does not align with my definition in that users were sometimes confused about how to interact with the device. Ultimately, my audience interacted with the device almost as I had anticipated. However, some of them didn't really know how to move their heads to control the whale, while others were confused about what to do and what not to do. If I had more time, I would first add a tutorial session before the game officially starts that teaches users how to move their heads properly, as well as what to eat and what not to eat. I would also try to alter the game mechanism by adding more objectives. What I learned from the setbacks is never to lose hope: there must be other approaches if one doesn't work. As for the takeaway from the accomplishments, the game mechanism is the foundation of a game and needs to be thought through carefully before coding; otherwise, it is very hard to change later.

So why should anybody care? In terms of concept and background, the game is set against the issue of plastic pollution in the ocean. The user is no longer a human bystander but a whale struggling to avoid eating plastic. Being in the shoes of a whale is truly the spirit of the game. The motion-sensing interaction also deepens the user's immersion in being a whale. Most importantly, the cute whale suit is very appealing to users, especially kids. In other words, the significance of our game is already written in the title of our project: Whale, Ocean, Us.

Appendices

GitHub Repo: https://github.com/nh8157/Whale-Ocean-Us

################PROCESSING CODE######################

PImage bag;

PImage introBG;

PImage firstBG;

PImage endBG1;

PImage endBG2;

PImage [] whales = new PImage [65];

PImage [] garbage = new PImage [4];

PImage [] food = new PImage [2];

import gab.opencv.*;

import processing.video.*;

import java.awt.Rectangle;

import processing.serial.*;

import processing.sound.*;

SoundFile file;

Capture video;

OpenCV opencv;

PImage src, colorFilteredImage;

ArrayList<Contour> contours;

Serial myPort;

int valueFromArduino;

int gameState = 0;

//initiating ball

Ball ball1;

// initializing two arrays\list

ArrayList<Obstacles> obs = new ArrayList<Obstacles>();

ArrayList<Subsidy> subs = new ArrayList<Subsidy>();

ArrayList<Background> bg = new ArrayList <Background>();

ArrayList<PImage> seaFood = new ArrayList<PImage>();

ArrayList<PImage> bgReserve = new ArrayList<PImage>();

float present;

float previous0 = 0;

float previous1 = 0;

float previous2 = 0;

int counter0;

int counter1 = 0;

int counter2 = 0;

int count0;

int count1;

int subCount = 0;

int seaLevel;

float[] brePara = new float[2];

float[] hunPara = new float[2];

int x;

int y;

int vibration;

void setup() {

size(1280, 720);

// initializing two arrays

//video = new Capture(this, 1280, 720);

video = new Capture(this, 640, 360);

video.start();

opencv = new OpenCV(this, video.width, video.height);

contours = new ArrayList<Contour>();

seaLevel = 230;

background(0);

count0 = 0;

myPort = new Serial(this, Serial.list()[ 1 ], 9600);

// Each of the seven frames (0.png to 6.png) is held for five draw() calls,

// first counting up to 6.png and then back down to 0.png.

for (int i = 0; i < whales.length; i ++) {

int frame = i / 5;

if (frame > 6) {

frame = 12 - frame;

}

whales[i] = loadImage(frame + ".png");

}

firstBG = loadImage("firstBG.JPG");

firstBG.resize(width, height);

introBG = loadImage("Instruction.png");

introBG.resize(width, height);

endBG1 = loadImage("endBG1.JPG");

endBG1.resize(width, height);

endBG2 = loadImage("endBG2.JPG");

endBG2.resize(width, height);

for (int i = 0; i < 2; i ++) {

food[i] = loadImage("C" + str(i + 1) + ".png");

food[i].resize(50, 60);

}

for (int i = 0; i < 4; i ++) {

garbage[i] = loadImage("G" + str(i + 1) + ".png");

if (i == 0) {

garbage[i].resize(54, 58);

} else if (i == 1) {

garbage[i].resize(55, 35);

} else if (i == 2) {

garbage[i].resize(50, 52);

} else {

garbage[i].resize(64, 37);

}

}

for (int i = 0; i < whales.length; i ++) {

whales[i].resize(200, 115);

}

count0 ++;

myPort.write('L');

//file = new SoundFile(this, "bgm.mp3");

}

void draw() {

// the state of the game stays at the first

// when the user clicks

// the state will change again

//image(img2, width / 2, height / 2);

present = millis();

if (gameState == 0) {

counter0 = 0;

imageMode(CORNER);

image(firstBG, 0, 0);

fill(255);

textSize(26);

if (keyPressed && key == 's') {

gameState ++;

delay(100);

}

} else if (gameState == 1) {

imageMode(CORNER);

image(introBG, 0, 0);

fill(255);

text("Press B to continue", width - 300, height - 100);

if (keyPressed && key == 'b') {

previous0 = present;

previous1 = present;

previous2 = present;

brePara[0] = previous1;

brePara[1] = counter1;

hunPara[0] = previous2;

hunPara[1] = counter2;

gameState ++;

ball1 = new Ball(0, 450, 7);

imageMode(CENTER);

if (count0 != 0) {

obs.clear();

subs.clear();

bg.clear();

bgReserve.clear();

seaFood.clear();

}

seaFood.add(loadImage("C1.png"));

seaFood.add(loadImage("C2.png"));

// background images are queued in the order in which they scroll past

int[] bgOrder = {1, 2, 1, 2, 1, 2, 4, 3, 4, 3, 4, 3, 4, 3, 5, 6, 5, 6, 5, 6, 5, 6, 5, 6};

for (int n : bgOrder) {

bgReserve.add(loadImage("BG" + n + ".jpg"));

}

for (PImage bgR : bgReserve) {

bgR.resize(width, height);

}

for (int i = 0; i < 2; i ++) {

PImage img = bgReserve.get(0);

if (i == 0) {

bg.add(new Background(img, width / 2));

} else {

bg.add(new Background(img, width * 3 / 2));

}

}

// adding class objects into arrays

for (int i = 0; i < 2; i ++) {

int[] classifier = classifier();

int obIn = classifier[0];

int subIn = classifier[1];

obs.add(new Obstacles(random(40) + width, random(seaLevel, height), random(5, 10), obIn));

subs.add(new Subsidy(random(40) + width, random(seaLevel, height), random(5, 10), subIn));

}

}

} else if (gameState == 2) {

imageMode(CENTER);

if (video.available()) {

video.read();

}

// <2> Load the new frame of our movie in to OpenCV

opencv.loadImage(video);

//opencv.flip(OpenCV.HORIZONTAL);

// Tell OpenCV to use color information

opencv.useColor();

//src = opencv.getSnapshot();

// <3> Tell OpenCV to work in HSV color space.

opencv.useColor(HSB);

// <4> Copy the Hue channel of our image into

// the gray channel, which we process.

opencv.setGray(opencv.getH().clone());

// <5> Filter the image based on the range of

// hue values that match the object we want to track.

opencv.inRange(176, 180);

// <6> Get the processed image for reference.

//colorFilteredImage = opencv.getSnapshot();

// <7> Find contours in our range image.

// Passing ‘true’ sorts them by descending area.

contours = opencv.findContours(true, true);

if (contours.size() > 0) {

// <9> Get the first contour, which will be the largest one

Contour biggestContour = contours.get(0);

// <10> Find the bounding box of the largest contour,

// and hence our object.

Rectangle r = biggestContour.getBoundingBox();

float easing = 0.05;

float targetX = width - ((r.x + r.width/2)*2);

float dx = targetX - x;

x += dx * easing;

float targetY = (r.y + r.height/2)*2;

float dy = targetY - y;

y += dy * easing;

//x = r.x + r.width/2;

//y = r.y + r.height/2;

}

// background management

for (int i = 0; i < 2; i ++) {

Background back = bg.get(i);

back.display();

back.move();

if (back.xpos == -width / 2) {

bg.remove(i);

if (bgReserve.size() > 0) {

PImage new_img = bgReserve.get(0);

bgReserve.remove(0); // remove the image that was just taken from the front of the queue

bg.add(new Background(new_img, width * 3 / 2));

} else {

PImage new_img = back.reimg();

bg.add(new Background(new_img, width * 3 / 2));

}

}

}

// need to use graphics to show the health (hp) left

ball1.move(x, y, seaLevel);

// attempting to introduce a rising difficulty

// use the millis()

// when the program executes to a point

// the objects in the obs array will increase

// the objects in the subs array will decrease

previous1 = moreBlocks(present, previous1, seaLevel);

// display ball, obstacles, subsidy

subCount = displayMove(counter0, subCount);

noStroke();

fill(0, 200, 20, 220);

rect(width / 4, height * 15 / 16, 6.4 * ball1.hp, 100, 7);

// generate new obstacles and subsidy

// once out of the screen

generate(seaLevel);

// user control the ball through keyboard

// collision determination

vibration = collision(previous2, seaLevel);

fill(0);

textSize(26);

if (counter0 < 64) {

counter0 ++;

} else {

counter0 = 0;

}

} else if (gameState == 3){

imageMode(CORNER);

image(endBG1, 0, 0);

fill(255);

textSize(26);

text("press S to continue", width/2, height - 20);

myPort.write('L');

if (keyPressed) {

if (key == 's' || key == 'S') {

gameState ++;

}

}

} else {

imageMode(CORNER);

image(endBG2, 0, 0);

if (keyPressed){

if (key == 'b' || key == 'B'){

gameState = 0;

}

}

}

}

////////////////////////////BACKGROUND////////////////////////////

class Background {

PImage img;

int xpos;

int speed;

Background(PImage image, int x) {

img = image;

xpos = x;

speed = 5;

}

PImage reimg() {

return img;

}

void move() {

xpos -= speed;

}

void display() {

image(img, xpos, height / 2);

}

}

////////////////////////////BALL////////////////////////////

class Ball {

// initializing the ball

// if the player hit the block for once

// its hp will decrease by 25%

int xpos;

int ypos;

int speed;

int r;

float hp;

int G1;

int G2;

int G3;

int G4;

Ball(int x, int y, int spe) {

xpos = x;

ypos = y;

speed = spe;

r = 100;

hp = 100;

G1 = 0;

G2 = 0;

G3 = 0;

G4 = 0;

}

void move(int x, int y, int seaLevel) {

xpos = x;

//ypos = y;

if (y >= seaLevel){

ypos = y;

}

}

// if the player hit the block for once

// its hp will decrease by 25%

// the number of each type of garbage eaten

void garbageUp(int i){

if (i == 0){

G1 ++;

} else if (i == 1){

G2 ++;

} else if (i == 2){

G3 ++;

} else if (i == 3){

G4 ++;

}

}

void hpDownC() {

hp -= 5;

}

void hpUpC() {

if (hp <= 95) {

hp += 5;

} else if (hp < 100) {

hp = 100;

}

}

int liveOrDie(int gameState) {

if (hp <= 0) {

gameState ++;

}

return gameState;

}

void display(PImage i) {

image(i, xpos - 55, ypos +10);

}

}

////////////////////////////OBSTACLES////////////////////////////

class Obstacles {

float xpos;

float ypos;

float speed;

int r;

int obClass;

int displayCount;

Obstacles(float x, float y, float spe, int obIn) {

xpos = x;

ypos = y;

speed = spe;

obClass = obIn;

r = 35;

displayCount = 0;

}

void move() {

xpos -= speed;

}

int reClass(){

return obClass;

}

void display() {

if (displayCount == 0) {

if (obClass == 2) {

ypos = random(seaLevel - 40, seaLevel);

speed = random(3, 5);

displayCount ++;

} else if (obClass == 0) {

speed = random(8, 10);

}

}

image (garbage[obClass], xpos, ypos);

}

}

////////////////////////////SUBSIDY////////////////////////////

class Subsidy {

float xpos;

float ypos;

float a;

float speed;

Subsidy(float x, float y, float spd, int sub) {

xpos = x;

ypos = y;

a = 20;

speed = spd / 2;

}

void move() {

xpos -= speed;

}

void display(PImage i) {

image(i, xpos, ypos);

}

}

////////////////////////////FUNCTIONS////////////////////////////

int displayMove(int counter, int subCount) {

for (Obstacles ob : obs) {

ob.display();

ob.move();

}

for (Subsidy sub : subs) {

sub.display(food[subCount]);

sub.move();

}

if (subCount == 0) {

subCount = 1;

} else {

subCount = 0;

}

ball1.display(whales[counter]);

return subCount;

}

void generate(int seaLevel) {

for (int i = 0; i < obs.size(); i ++) {

Obstacles ob = obs.get(i);

int[] classifier = classifier();

int obIn = classifier[0];

if (ob.xpos < 0) {

obs.remove(i);

obs.add(new Obstacles(width + random(40), random(seaLevel, height), random(5, 10), obIn));

}

}

for (int i = 0; i < subs.size(); i ++) {

Subsidy sub = subs.get(i);

int[] classifier = classifier();

int subIn = classifier[1];

if (sub.xpos < 0) {

subs.remove(i);

subs.add(new Subsidy(width + random(40), random(seaLevel, height), random(5, 10), subIn));

}

}

}

// different garbage might be generated

// at this moment

// it will generate different kinds of garbage

// Checks the whale against every obstacle and every food item.

// Returns 1 if garbage was hit in this frame, otherwise 0.

int collision(float previous2, int seaLevel) {

vibration = 0;

// obstacles and food are checked in separate loops

// because the two lists grow at different rates

for (int i = 0; i < obs.size(); i++) {

Obstacles ob = obs.get(i);

float ob1 = dist(ob.xpos + 26, ob.ypos, ball1.xpos, ball1.ypos);

float ob2 = dist(ob.xpos - 26, ob.ypos, ball1.xpos, ball1.ypos);

if (ob1 + ob2 <= 60 + ob.r) {

vibration = 1;

ball1.garbageUp(ob.reClass());

for (int m = 0; m < 2 * (4 - ob.obClass); m ++) {

ball1.hpDownC();

}

obs.remove(i);

obs.add(new Obstacles(width + random(40), random(seaLevel, height), random(4, 6), classifier()[0]));

}

}

for (int i = 0; i < subs.size(); i++) {

Subsidy sub = subs.get(i);

if (dist(ball1.xpos, ball1.ypos, sub.xpos, sub.ypos) <= 30) {

ball1.hpUpC();

subs.remove(i);

subs.add(new Subsidy(width + random(40), random(seaLevel, height), random(4, 6), classifier()[1]));

}

}

// send one byte per frame: 'H' turns the motors on, 'L' turns them off

myPort.write(vibration == 1 ? 'H' : 'L');

// check once per frame so that gameState advances by at most one step

gameState = ball1.liveOrDie(gameState);

return vibration;

}

// garbage is according to the random number generated

// the obstacles will be generated more

// the subsidy will be fewer and fewer

float moreBlocks(float present, float previous, int seaLevel) {

if (present - previous > 10000) {

for (int i = 0; i < 2; i ++) {

int[] classifier = classifier();

int obIn = classifier[0];

obs.add(new Obstacles(width + random(40), random(seaLevel, height), random(4, 6), obIn));

}

int[] classifier = classifier();

int subIn = classifier[1];

subs.add(new Subsidy(width + random(40), random(seaLevel, height), random(4, 6), subIn));

println(subs.size());

previous = present;

}

return previous;

}

float[] hunger(float present, float[] Para) {

float count = Para[1];

float previous = Para[0];

int[] intervals = {5000, 4000, 2000, 1000};

if (present - previous >= intervals[int(count)]) {

ball1.hpDownC();

}

Para[0] = present;

Para[1] = count;

return Para;

}

void breathe(int seaLevel) {

if (ball1.ypos <= seaLevel + 5) {

ball1.hp += 0.05;

}

}

// random generator for the determination of garbage type

int[] classifier() {

float obRandom = random(10);

float subRandom = random(10);

int obIn;

int subIn;

if (obRandom <= 10 && obRandom > 8) {

obIn = 0;

} else if (obRandom <= 8 && obRandom > 6) {

obIn = 1;

} else if (obRandom <= 6 && obRandom > 4) {

obIn = 2;

} else {

obIn = 3;

}

if (subRandom <= 10 && subRandom > 9) {

subIn = 0;

} else if (subRandom <= 9 && subRandom > 7) {

subIn = 1;

} else if (subRandom <= 7 && subRandom > 4) {

subIn = 2;

} else {

subIn = 3;

}

int[] classifier = {obIn, subIn};

return classifier;

}

################ARDUINO CODE######################

// IMA NYU Shanghai

// Interaction Lab

// This code receives one value from Processing to Arduino

char valueFromProcessing;

int ledPin = 3;

void setup() {

Serial.begin(9600);

pinMode(ledPin, OUTPUT);

}

void loop() {

// to receive a value from Processing

while (Serial.available()) {

valueFromProcessing = Serial.read();

}

if (valueFromProcessing == 'H') {

digitalWrite(ledPin, HIGH);

delay(1000);

} else {

digitalWrite(ledPin, LOW);

}

// too fast communication might cause some latency in Processing

// this delay resolves the issue.

delay(10);

}