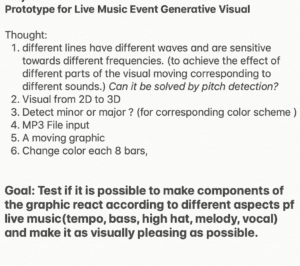

My final project is a continuation of my midterm project. My goal was to improve on the midterm based on the feedback I received from the judges.

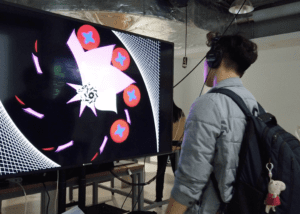

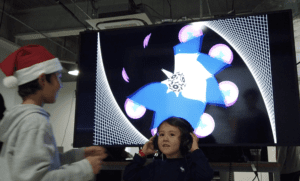

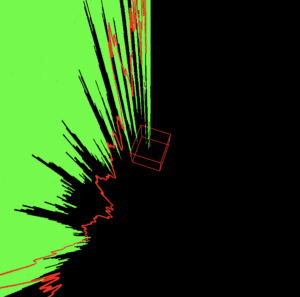

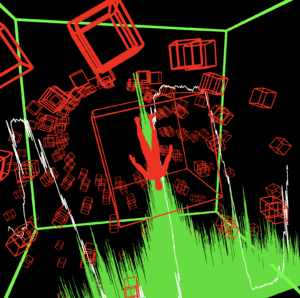

First, stylistically, I needed to decide between 3D and 2D visuals. I found it hard to create much visual variation in 3D because of the limited 3D capabilities of p5.js, so I chose a 2D graphic as the main visual. Around the same time, I was creating designs for my Programming Design Systems class, and I really enjoyed the idea of a design system that can output different products sharing the same distinct style. So I decided to bring some of those designs directly into the audio visualization project.

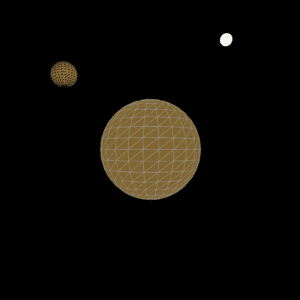

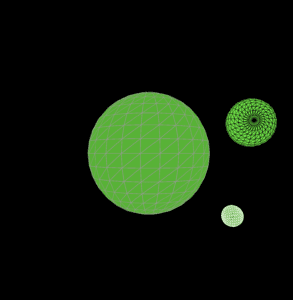

Second, I was advised to look to other audio visualizers for more inspiration. I noticed that most of the graphics I really liked have a moving 3D background that makes them look more impressive. So I added a 3D background that complements the main 2D visual.

Third, I received feedback that the style of the graphic only fit EDM and hip-hop, not love songs and ballads. So I made a much softer floral design to suit softer songs.

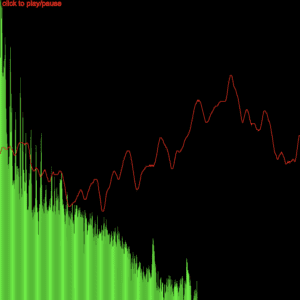

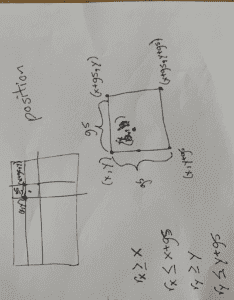

The last and most important piece of feedback was that my midterm graphics were too centered on the raw waveform and spectrum of the audio and did not make complex use of the audio data. To address this, I was advised to take a closer look at how the spectrum responds to the music: which part of the spectrum is triggered by the bass, which by the snares, and which by the vocals? After observing this, it took some time to calibrate and find the right range of data to select. After that, we added further data manipulation to map the values into a range that worked best for the visualization.
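To make the band-selection and mapping steps more concrete, here is a minimal JavaScript sketch; it is an illustration, not the project's actual code. It assumes a spectrum array like the one p5.sound's `FFT.analyze()` returns (by default 1024 amplitude values from 0 to 255, spanning 0 Hz up to half the sample rate). The band boundaries in `BANDS`, the helpers `bandEnergy` and `mapRange`, and the `petalSize` output are all hypothetical; real boundaries would need the same calibration by ear described above. p5.sound also offers `getEnergy()` and p5 offers `map()`, which do similar jobs inside a sketch.

```javascript
// Illustrative frequency bands in Hz. These boundaries are assumptions;
// real values would be tuned by listening and watching the spectrum.
const BANDS = {
  bass: [20, 140],
  vocals: [300, 3000],
  snare: [2000, 5000],
};

// Average amplitude of the FFT bins that fall between lowHz and highHz.
// `spectrum` is an array of amplitudes covering 0 Hz to sampleRate / 2.
function bandEnergy(spectrum, sampleRate, lowHz, highHz) {
  const nyquist = sampleRate / 2;
  const last = spectrum.length - 1;
  // Convert frequencies to bin indices, clamped to the array bounds.
  const lo = Math.max(0, Math.min(last, Math.round((lowHz / nyquist) * spectrum.length)));
  const hi = Math.max(lo, Math.min(last, Math.round((highHz / nyquist) * spectrum.length)));
  let sum = 0;
  for (let i = lo; i <= hi; i++) {
    sum += spectrum[i];
  }
  return sum / (hi - lo + 1);
}

// Linearly rescale a value from [inMin, inMax] to [outMin, outMax],
// optionally clamping to the output range (similar to p5's map()).
function mapRange(value, inMin, inMax, outMin, outMax, clamp = false) {
  const mapped = outMin + ((value - inMin) / (inMax - inMin)) * (outMax - outMin);
  if (!clamp) return mapped;
  const lower = Math.min(outMin, outMax);
  const upper = Math.max(outMin, outMax);
  return Math.min(upper, Math.max(lower, mapped));
}

// Usage with a synthetic spectrum: strong energy only in the lowest bins.
const sampleRate = 44100;
const spectrum = new Array(1024).fill(0);
for (let i = 1; i <= 7; i++) spectrum[i] = 255;

const bass = bandEnergy(spectrum, sampleRate, ...BANDS.bass);
const vocals = bandEnergy(spectrum, sampleRate, ...BANDS.vocals);

// Map the 0-255 bass energy to a hypothetical petal size in pixels.
const petalSize = mapRange(bass, 0, 255, 10, 120, true);
console.log(bass, vocals, petalSize); // prints 255 0 120
```

Averaging over a band instead of reading single bins keeps the value from jumping around, and clamping the mapped output keeps a loud passage from pushing a shape past the size the design can handle.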

I also gave the project a name: META, because I want it to always be changing.

To sum up, I am so glad I was able to take MLNI with Moon. Looking back, I am surprised that I could take part in creating something like this, both visually pleasing and technically complex. I loved that we pitched our ideas at the beginning of the semester after learning a little about what ML technology can do. It was just enough information for us to recognize the power of the technology and to be creative in imagining its capabilities. After that, I was excited to see what kind of prototype I could make to test my idea. Because I was building something I enjoyed, I was more motivated to work than in any of my previous IMA classes. Big, big thanks to Moon for being so patient with me and helping me so much, both creatively and technically.