The objective of the lab was to investigate potential differences in circadian rhythm patterns among genotypically different specimens of male Drosophila flies. The circadian rhythm is a biological feature of animal species, including humans, in which daily activity is regulated by an internal biochemical mechanism that is synchronized with the daily cycle (for example, the changing intensity of sunlight).

In this lab, we collected 10 specimens of male fruit flies without any genetic mutations into separate test tubes, and then collected 10 specimens of male fruit flies with a genetic mutation. We incapacitated the flies using CO2, used a metal rod to insert them into tubes partially filled with sugar paste, and then plugged the tubes with cotton so that the flies could breathe.

Over the course of one week, every hour, on the hour, the number of active versus sleeping flies was recorded.
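The hourly counts described above can be summarized by averaging the number of active flies for each hour of the day across the whole week. The following is a minimal sketch only: the function name `mean_active_by_hour`, the `(hour_index, active_count)` input format, and the assumption that hour 0 corresponds to midnight on the first day are all hypothetical and are not part of the lab procedure. The numbers in the usage example are placeholders, not our measurements.

```python
from collections import defaultdict


def mean_active_by_hour(observations):
    """Average the active-fly counts for each hour of the day.

    observations: iterable of (hour_index, active_count) pairs, where
    hour_index counts hours since the start of the experiment
    (assumed here to start at midnight, so hour_index % 24 is the clock hour).
    """
    totals = defaultdict(int)  # sum of active counts per clock hour
    counts = defaultdict(int)  # number of observations per clock hour
    for hour_index, active_count in observations:
        hour_of_day = hour_index % 24
        totals[hour_of_day] += active_count
        counts[hour_of_day] += 1
    # Return the mean per clock hour, ordered from the earliest hour.
    return {hour: totals[hour] / counts[hour] for hour in sorted(totals)}


# Placeholder values for illustration only (not real lab data):
# two days of readings at 06:00 and 18:00.
example = [(6, 8), (18, 2), (30, 6), (42, 4)]
print(mean_active_by_hour(example))
```

A consistently higher average at particular hours would point to a daily rhythm rather than a one-off fluctuation. Running the same calculation separately for the mutant and non-mutant tubes would allow the two groups to be compared.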

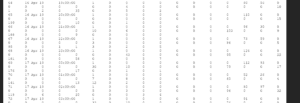

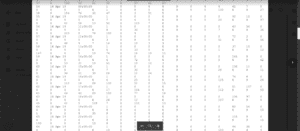

The sample results are available here:

The aforementioned results show that the number of active flies differs between times of the day. Looking further into the results, this does not seem to be an anomaly, as many more flies tended to be visibly active early in the morning. However, this did not apply to all test tubes: in some, no activity was detected for a long time (some fruit flies may have died), and in others the patterns were not as clearly visible as in columns 1 and 4.

This lab provided us with useful experience in following clear laboratory procedures, as well as in collecting and processing results. Even though further research could show the differences more clearly, it seems that our preparations enabled us to show, at least partially, how various organisms follow circadian rhythms.