From my perspective, “interaction” is a complicated and creative “input-process-output” procedure between two actors. Two components of this definition deserve emphasis. The first is that this “input-process-output” procedure is carried out by two actors. As Chris Crawford writes in “The Art of Interactive Design”, interaction is “a cyclic process in which two actors alternately listen, think, and speak” (Crawford 5). Here “listen” stands for input, “think” represents process, and “speak” corresponds to output. Beyond this procedure within a single entity, the phrase “two actors” is also essential, since interaction requires two actors to communicate with each other, each serving as the other’s source of input and receiver of output. The second component is that the procedure must be “complicated and creative”: the process of interaction should involve some degree of complexity and creativity. According to Crawford, an observation such as “People jump out as they hear the sound of branches crashing to earth” should not be considered interaction but merely reaction (Crawford 5). In this scenario, although the “input-process-output” procedure technically exists, it cannot be seen as interaction, since the reaction is too instinctive and lacks complexity and creativity.

After forming my initial definition of “interaction” from the assigned readings, I looked for projects that could help me better understand it. The most interesting one I found on the “Creative Applications Network” website is a project called “Weather Thingy”. Basically, it is a “sounds controller that uses real time climate-related events to control and modify the settings of musical instruments”. More specifically, it automatically changes the music you are playing according to the current weather it detects. If it is windy, your music pans back and forth between the left and right channels of your speakers or headphones. If it is rainy, your music sounds as if you were underwater. I think this project aligns well with my definition of interaction, because an “input-process-output” procedure runs between the player and the project. On the project’s side, it receives the weather information and the music being played, identifies what kind of weather it is and decides how to combine that information with the music creatively, and finally outputs the resulting piece. On the player’s side, he/she listens to the piece produced by the project, considers whether it matches his/her expectations, and can then change certain parameters to make it sound better. As we can see, the interaction here involves a degree of complexity and is, overall, unpredictable: the project uses its own logic to produce pieces with distinctive characteristics.

The second project I examined, which is less aligned with my definition, is called “Scribb”, a “Pen-Paper-Mouse interface”. In this project, the mouse functions as a scanner that transforms drawings on paper into equivalent digital images displayed on the screen. Here the interaction process is, overall, complete and explicit. On the mouse’s side, it scans the drawing as input, creates an equivalent digital 2D model as processing, and presents the drawing on the screen as output. On the drawer’s side, he/she sees the drawing on the screen, decides whether it meets his/her goals, and then makes corresponding changes. However, the underlying problem is that this kind of interaction is too basic and predictable, lacking creativity and complexity. When we think of scanning, we naturally expect the mouse to capture the drawing we created and show it on the screen. During this process, the mouse does not improve the drawing with any intelligence or autonomy of its own; instead, it simply functions as a copy machine.

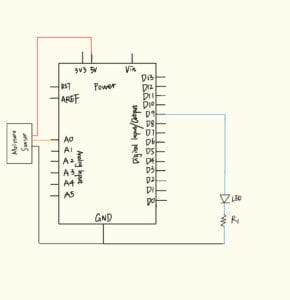

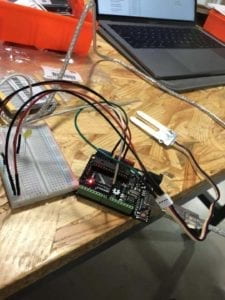

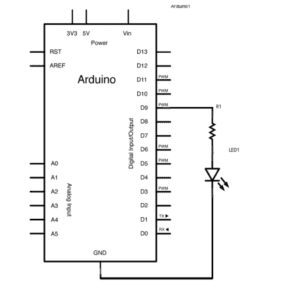

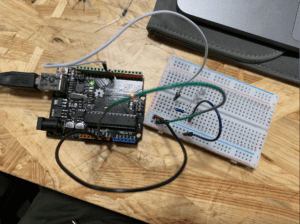

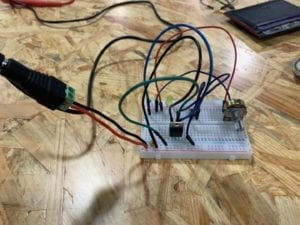

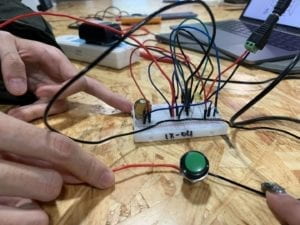

The product our group created is an intelligent glove that translates sign language into speech for people with speaking difficulties. The glove tracks the movement of the user’s hands with a large number of sensors. It then matches those movements to specific signs stored in its memory and, using its own intelligence and machine learning capabilities, translates them into standard written sentences, reorganizing the word order where necessary. Finally, it outputs the result as smooth speech to help users communicate with other people. Users can hear the output voice and adjust its tone or word choice according to their personal preferences. We think the interaction of our product is complete, creative, and complicated, since it helps a minority group in society overcome language barriers and can only be achieved with many sensors and advanced techniques such as machine learning.

Poster:

Works Cited

Crawford, Chris. “What Exactly Is Interactivity?” *The Art of Interactive Design*, pp. 1-6.