Spacetime Symphony

Concept and Design

Once my partner Robin and I had settled on our main concept, an interactive audiovisual experience, we first wanted to find the design that would best support the interaction. We knew that we wanted to read sensor values on the Arduino and send them to the Processing sketch, but we did not know exactly which sensor to use. We debated using buttons, tilt sensors, light sensors, and more to control the movement of the animation. We did not want the interaction to be too obvious, which would have been the case with a very basic sensor such as a button, so we decided to use a distance sensor.

This was intuitive enough to let our audience think more about the interaction, but not so complicated that it would shut them out of the possibilities of interaction.

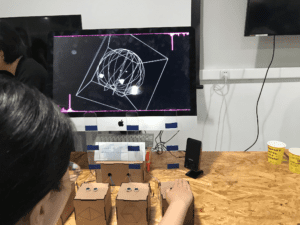

In making both our physical and digital elements, we decided to have three main components in our project: the physical setup, which included the Arduino and the sensors; the projected animation; and the soundscape. While the soundscape and animation were implemented in Processing, we used laser cutting to construct the boxes.

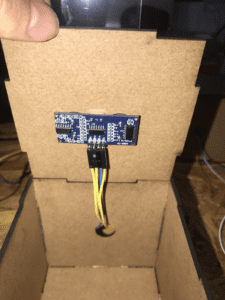

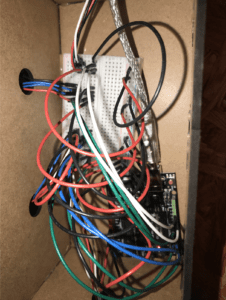

These boxes contained the Arduino, circuit board, and wiring in order to create a clean, inviting setup that would draw in users who wished to interact. The simplicity of the laser cut boxes meant that nothing distracted users from the sensors that we wanted them to interact with.

We both believed that laser cut boxes were the most effective way to contain the Arduino and circuit board. We had other options for constructing our physical elements, such as 3D printing. However, laser cutting was a more efficient method of fabrication for our purposes.

Fabrication and Production

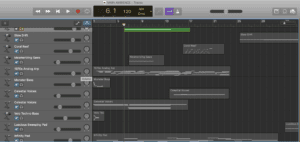

Our production process consisted of a few main steps. The first step was the sampling and cutting of the soundscape audio files. I worked in GarageBand, sampled every sound the system offered, and grouped the sounds into categories based on their style. These categories included bass, twinkly sounds, ambient, and drum beats. Then, I combined a number of these audio clips into the main song file and tested how they fit together. Once I had made sure all the intended sounds fit together well, I isolated them so that they could be added to and subtracted from the song in the final product. After isolating all the extra sounds, I was left with the main ambient background track.

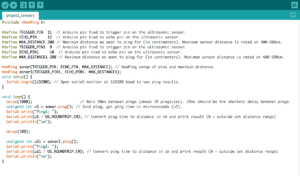

The next step was testing different sensors in order to find out which one could most accurately communicate our desired values. We tested both the infrared sensor and the ultrasonic ranger, and the ultrasonic ranger provided the most consistent values. We had wanted to work with the infrared sensor because we were afraid the ultrasonic sensors would interfere with each other, but we were proved wrong when the ultrasonic sensors provided greater consistency. Another step of the process was the coding of the Processing animation. Robin did the coding for this step. We cycled through a number of designs for the layout of the animation but settled on the 3D cube and sphere. Another step was the coding of the serial communication that takes the values from Arduino to Processing.

Both Robin and I worked on this aspect of the coding, and it didn’t take long to figure out how to transfer the values. However, one part of this step that took a while was figuring out which range of values from the distance sensor we wanted to apply to each sound. The final step was the creation of the physical model. First, I laser cut four boxes. Each one had its own ultrasonic ranger, whose readings were divided into three different ranges in order to play three different sounds.
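The serial transfer described above can be illustrated with a minimal sketch. It is written in Python for brevity and is not the Processing code we used. It assumes the Arduino prints the four distance readings as one comma-separated, newline-terminated line; that format and the function name `parse_sensor_line` are assumptions made for illustration.

```python
from typing import List, Optional

NUM_SENSORS = 4  # one ultrasonic ranger per box


def parse_sensor_line(line: str, expected: int = NUM_SENSORS) -> Optional[List[int]]:
    """Parse one serial line such as '23,105,8,240' into integer readings.

    The comma-separated format is an assumption, not the project's
    confirmed protocol. Incomplete or garbled lines return None so the
    caller can skip them instead of crashing.
    """
    parts = line.strip().split(",")
    if len(parts) != expected:
        return None  # a partial line, e.g. read while the port was still opening
    try:
        return [int(p) for p in parts]
    except ValueError:
        return None  # a non-numeric fragment


if __name__ == "__main__":
    print(parse_sensor_line("23,105,8,240\n"))
    print(parse_sensor_line("23,105"))
```

Rejecting incomplete lines matters in practice because the first line read after opening a serial port is often cut off partway through.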

We also laser etched different shapes into each box in order to indicate which aspect of the animation would be altered once each specific sound was added. At first, we forgot to laser cut a box to house the Arduino and main circuit board. However, we were able to laser cut it at the last minute and finish the main construction.

One more design element we added at the very end was a set of four long acrylic sticks, one attached vertically to each box. We marked each acrylic stick with three different lengths of tape. Each length of tape indicated where the user should place their hand in order to play a different sound.
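The idea of three hand positions per sensor, each playing a different sound, can be sketched as follows. This is a Python illustration rather than our Processing code, and the distance boundaries in `ZONES`, along with the names `zone_for_distance` and `sound_id`, are hypothetical. The real ranges were tuned by hand during testing.

```python
from typing import Optional, Sequence, Tuple

# Hypothetical zone boundaries in centimetres, one (low, high) pair per tape mark.
ZONES: Sequence[Tuple[float, float]] = ((5, 20), (20, 35), (35, 50))


def zone_for_distance(distance_cm: float,
                      zones: Sequence[Tuple[float, float]] = ZONES) -> Optional[int]:
    """Return the index of the zone containing the reading, or None if no hand is in range."""
    for index, (low, high) in enumerate(zones):
        if low <= distance_cm < high:
            return index
    return None


def sound_id(sensor_index: int, distance_cm: float,
             zones: Sequence[Tuple[float, float]] = ZONES) -> Optional[int]:
    """Map a sensor number and a reading to one sound slot (4 sensors x 3 zones = 12 slots)."""
    zone = zone_for_distance(distance_cm, zones)
    if zone is None:
        return None
    return sensor_index * len(zones) + zone


if __name__ == "__main__":
    print(sound_id(0, 10))   # first box, lowest zone
    print(sound_id(3, 40))   # fourth box, highest zone
    print(sound_id(1, 100))  # out of range, no sound
```

Half-open ranges keep a reading that sits exactly on a boundary from triggering two sounds at once.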

Our user testing session went very well. Communication between everyone was clear, so we were able to take a lot of the feedback and implement it directly in our design. We had not yet fabricated any physical components because we wanted feedback before deciding on a final design. During user testing, we were able to answer many specific design questions. One thing we were unsure of in the physical setup was whether to position the ultrasonic rangers horizontally or vertically. Based on the majority of the feedback, placing the ultrasonic rangers so that they face vertically provided the most comfortable experience for users. This also answered another main design question. Robin and I could not decide whether to encourage users to interact with the distance sensors using their whole body or using their hands. When the sensors are oriented vertically, users can only use their hands. We also noticed during user testing that many users did not realize that each ultrasonic ranger could trigger multiple sounds. We made this more obvious by laser cutting the acrylic sticks and marking them with tape at the locations where users should hold their hand in order to trigger each specific sound. User testing also showed that we should make the feedback more obvious when users have triggered certain sounds. To accomplish this, Robin adjusted the code for the animation to make it clearer when certain sounds had been triggered. I also added etchings of certain parts of the animation to the design of the laser cut boxes in order to signify which part of the animation would be altered.

All of these adaptations were very effective in the presentation of our project and in its appearance at the IMA show. Users not only enjoyed the vertically placed sensors, but they were also able to see how each sound was linked to certain parts of the animation. In addition, the acrylic sticks and tape were very helpful in showing users where they should place their hands to play certain sounds. I believe all of these production choices were wise, and I’m grateful for the amount of honest feedback we received because it allowed our project to thrive.

Conclusion

In the end, our goal for this project was to create an interactive soundscape that itself interacts with a Processing animation. This artwork would allow user interaction and also find a crossroads between music and art. This project aligns with my definition of interaction: a two-way street of communication between two or more machines or beings. I believe it aligns with this definition because the user is able to use sensors that send values to the animation to create both sound and art. The user then sees their interaction flourish in the sound and art, and once they respond to the artistic output, they are inclined to interact with the sensors even more. While I believe our project was very much in line with my definition of interaction, I believe that we could improve it further by adding more elements that signal when a user plays with the sensors. This would strengthen the two-way street of communication. Compared with my expectations, a real-world audience interacted very well with the project. They would first look at the animation, and then look at the physical setup. They would then wave their hands around and notice a change in the audio or a change in the animation. Then they would possibly connect the audio and the visuals.

The most invested audience members would also notice that the etchings on the boxes match the parts of the animation that would change. While not everyone became that invested, in general I was delighted with the way people reacted. One of the main goals of our project was to create an environment in which many people would be encouraged to interact with the sensors at once. This would allow the artwork to also become a medium for bonding between multiple people. After our final presentation, and based on Marcela’s feedback, I decided to lengthen the wires that connect the boxes so that the sensors could be placed further apart. Placing them further apart encouraged different people to feel comfortable interacting with the project simultaneously, because they had more space to do so.

If we had more time to work on the project, I would like to invest it in producing more immersive soundscapes. A lot of the sounds and musical combinations I produced were the result of simple experimentation and luck. I think that by using a more professional musical process of working with keys and time signatures, and maybe even more professional audio editing software, I could create a better soundscape. Apart from what I could improve given more time, there was one main setback during the actual project. Right before the final presentation, as I was taking the project off the shelf, one of the boxes became disconnected from the circuit board, fell on the ground, and broke into separate parts. With no time to spare, I had to run to the IMA lab and glue the parts back together, reconnect the wires, and retest the code. This setback meant that we had not fully set up before our presentation started. We had to spend a small amount of time at the beginning of our presentation uploading the code and connecting to the TV. As a result, we received constructive feedback that we should have been fully prepared for the presentation. At first, the feedback was difficult to accept because it was a very unlucky situation, but after this learning experience, I feel encouraged to be extremely prepared for all of my future projects. Being prepared for situations like this will keep bad luck from affecting my performance as a student. Despite this setback, I believe Robin and I accomplished something amazing, which was the ability to make people feel. At the IMA show, seeing people have fun with one another while interacting with the project, and seeing their faces light up when they were able to connect with the project’s meaning, is enough accomplishment for me. All of the hard work spent in the IMA lab felt entirely worth it.

This connection felt between people and also between the art and ourselves is exactly why one should care about my project. Most of us go to the art museum and learn certain things. However, when the audience is given the task of making themselves a part of the art, their empathetic capability is enhanced as they can see their own personality uniquely impact the audio and visual expressions.