My definition of interaction:

To me, interaction requires a back-and-forth between the user and the machine. More importantly, I feel the machine has to respond to input with its own unique reaction, separate from simply carrying out the commands fed into it. In “The Art of Interactive Design,” Chris Crawford defines interactivity as “a cyclic process in which two actors alternately listen, think, and speak.” The key word is cyclic: for the process to loop back, the machine has to be capable of thinking for itself, not simply respond to the user’s requests.

Interactivity and Projects:

Following my definition of interaction, I looked at two projects: Open Highway and Alias, the “teachable” parasite.

Open Highway illustrates my problem with defining “interactivity” simply as command and response. I find the definition in “Physical Computing” by Dan O’Sullivan and Tom Igoe, “input, processing, and output,” too vague, because it allows simulations such as Open Highway to count as interaction even when there is no cyclical exchange. While Open Highway is technically an active simulation that responds and changes when highway factors change, it is still a simulation, because the user cannot respond back to it once the machine reacts. It is a demonstration that simply follows what the user tells it to do, not a cyclical process.

On the other hand, Alias, the “teachable” parasite, aligns more closely with my definition of interactivity because of how much room it allows for customization. The article states: “the user can train Alias to react on a custom wake-word/sound, and once trained, Alias can take control over your home assistant by activating it for you.” This opens up a dialogue between the user and the machine, and beyond that, it allows for a cyclical process of endless interaction. Alias itself is even designed to respond and act like a living thing, since it is based on how a fungus is able to control and manipulate insects. It acts as a middleman between the user and an Alexa-like smart device, responding to sound and other stimuli. If the response is not to the user’s liking, Alias immediately reacts and is able to control the device without the user needing to feed the command into it directly.

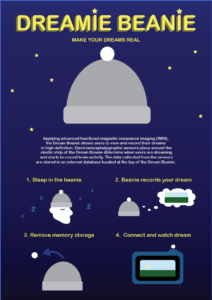

Dreamie Beanie: Our Group Project

Our group project is called the “Dreamie Beanie,” in which we use a beanie, something comfortable and non-threatening, to record your dreams. The idea came up because we thought it would be interesting to be able to watch some of our dreams back and make sense of them. The Dreamie Beanie aligns with my definition of interaction because it is a cyclical process of sensation, interaction, and response: by sensing which stage of the sleep cycle you are in, the Dreamie Beanie is able to respond and record, bridging the interactive gap between you and your subconscious. By allowing a continuous conversation among you, the device, and your brain, the Dreamie Beanie furthers communication between the user and the machine.

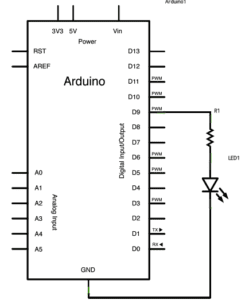

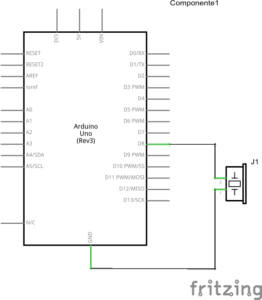

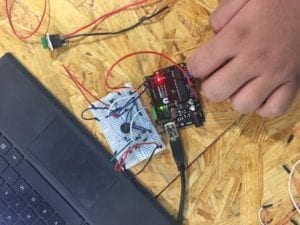

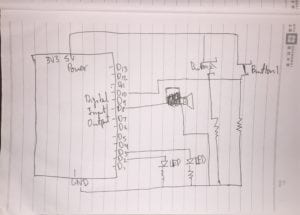

Here is a photo of the product and the poster below: