Project Reflection

Link: http://imanas.shanghai.nyu.edu/~js9686/net-art/index.html

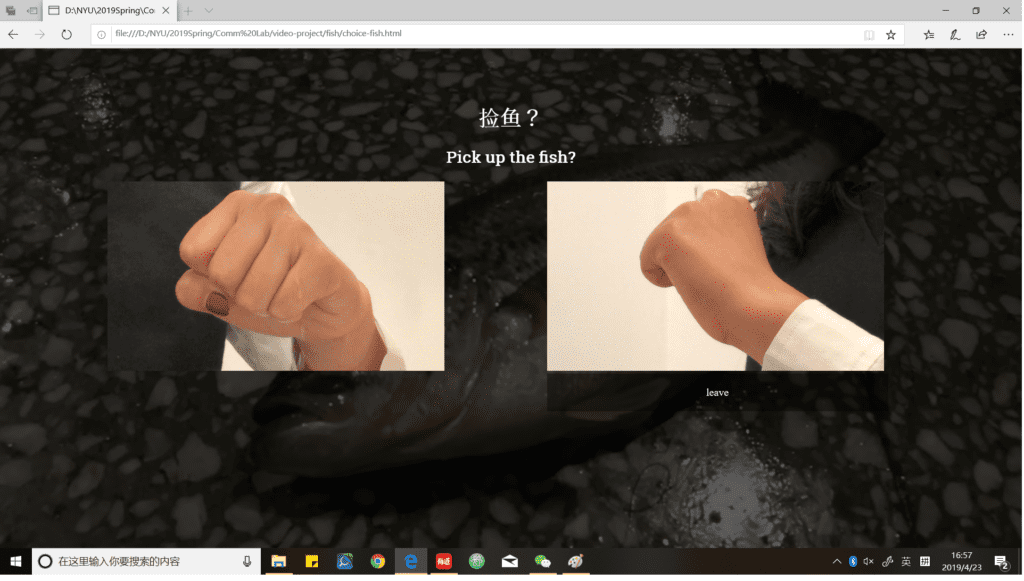

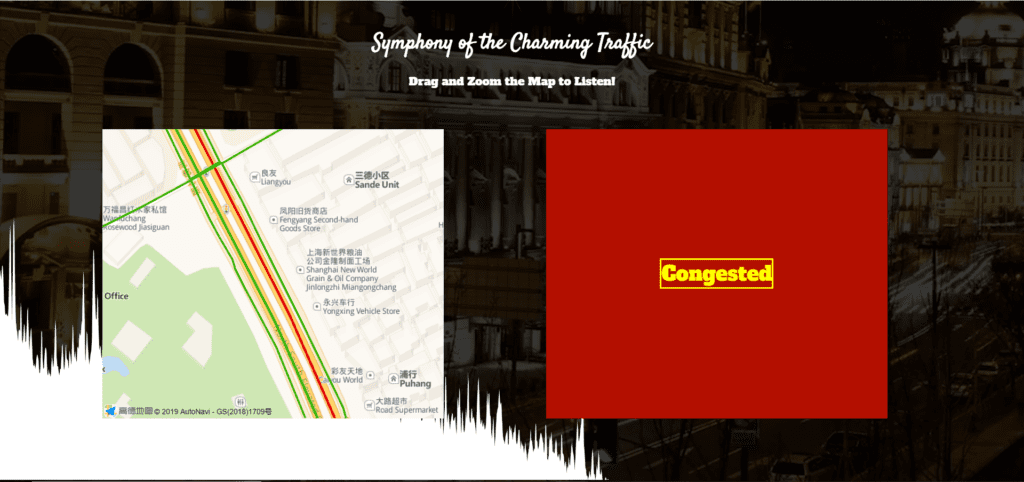

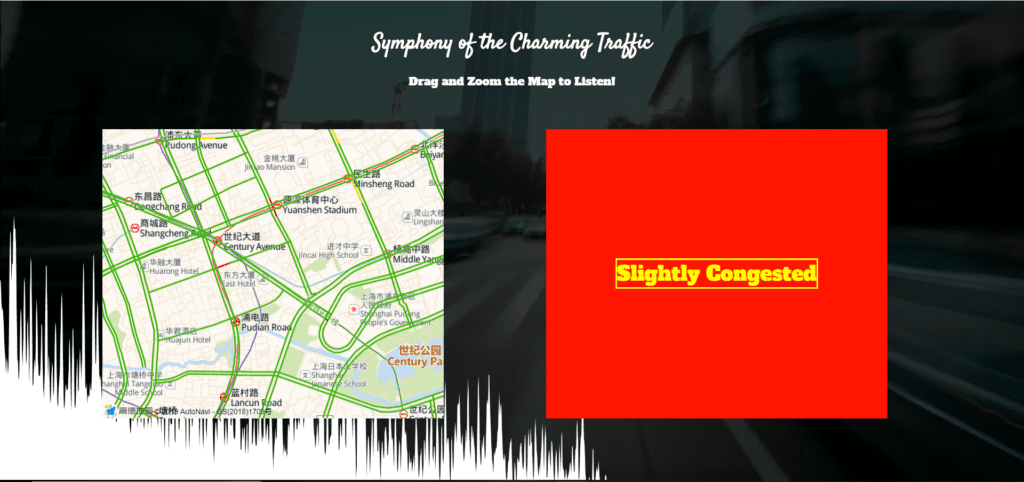

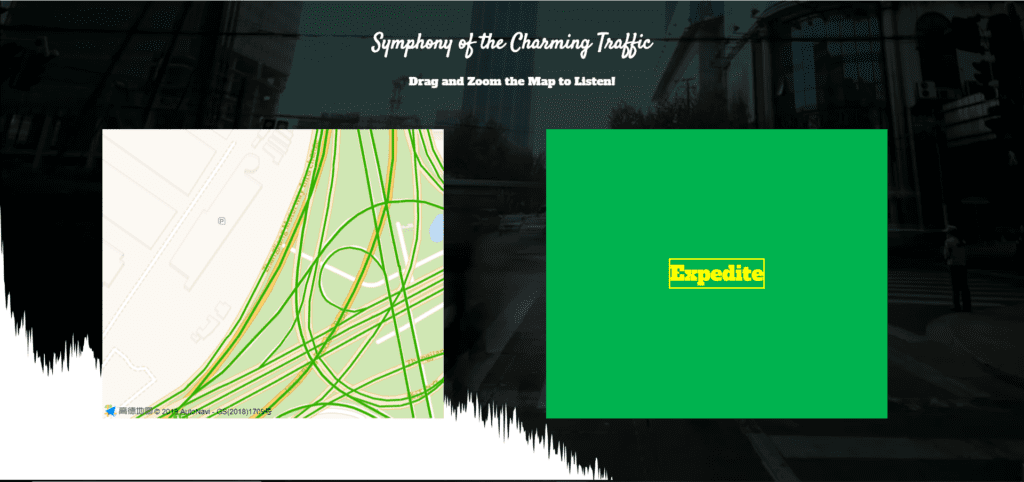

My net art project, Traffic Symphony, presents the traffic status of 40 cities in China in audio-visual form, using the API from Gaode Map. The interface has two squares side by side. The one on the left is a map that the user can zoom or drag to locate the area that he or she wants to see and hear. The one on the right visualizes the traffic status with colors and phrases (green: expedite; red: slightly congested; dark red: congested). When traffic is flowing freely, the background sound is full of cars passing by. When there is congestion, there are horns and police whistles, and the frequency of the horns reflects how congested the road is. Meanwhile, a layer behind the two squares visualizes the traffic sound, and the main background is made of video clips of traffic.

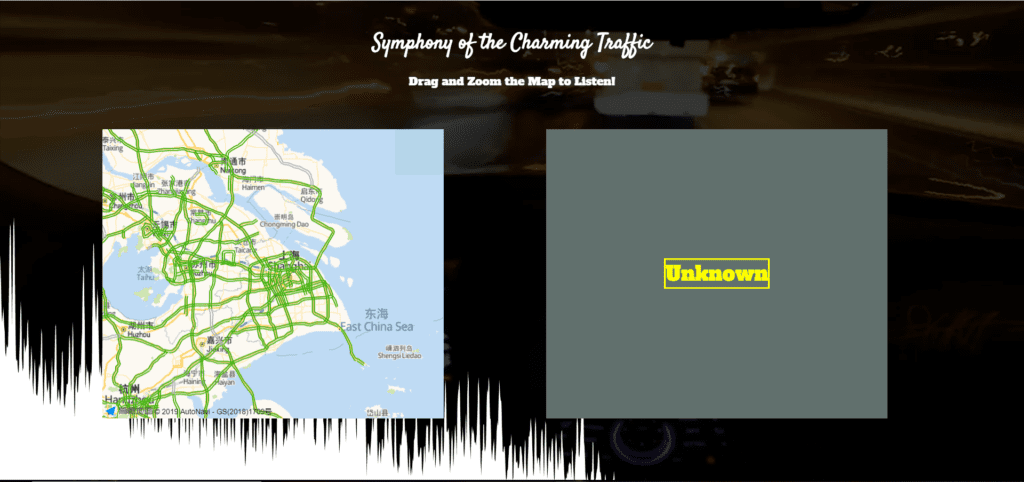

Also, if one moves the map to a place or scale for which I can’t get information from the API, the square on the right says “unknown.”

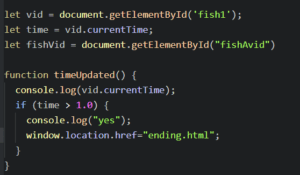

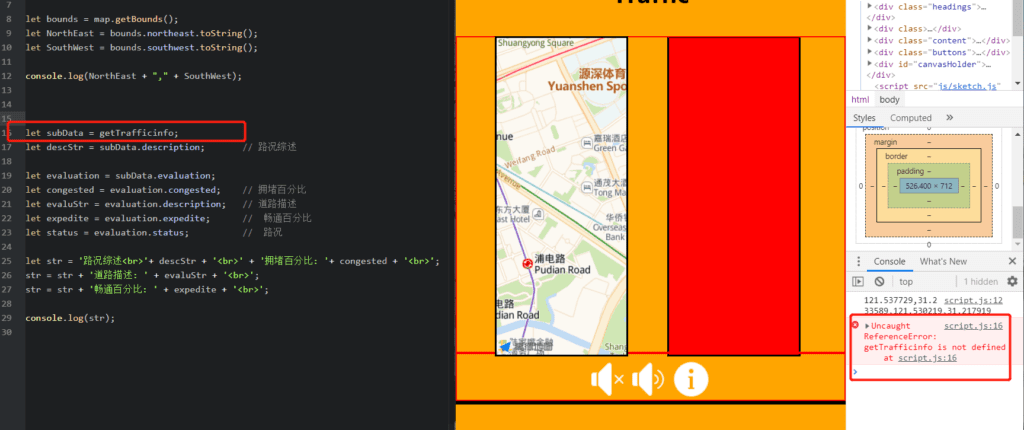

Since I wanted to use an API, I looked through the material from Gaode to see if there were any tutorials that I could follow. However, I only found instructions on how to call the functions using the names that they provide. I then tried on my own to work out how to retrieve data from Gaode. This was my first attempt:

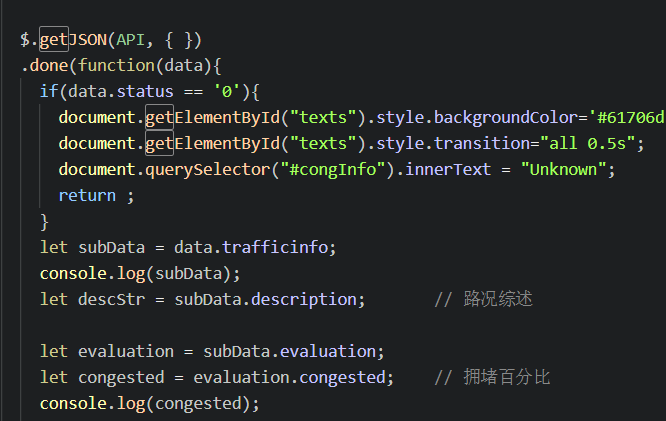

After I put the map on the website, I found that I could not get any data. After consulting these references (https://blog.csdn.net/theArcticOcean/article/details/68950692; getBounds: https://lbs.amap.com/api/javascript-api/example/map/map-bounds), I learned that I needed to use JSON here, and I revised my code accordingly:

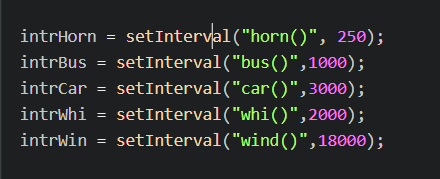
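The JSON step can be sketched roughly as follows. This is not the code in the screenshot, only a minimal illustration: the response shape (`trafficinfo.evaluation.status`), the numeric codes, and the names `parseTrafficStatus` and `fetchTrafficStatus` are assumptions made for the example and should be checked against Gaode's documentation. The request URL is passed in by the caller rather than guessed here.

```javascript
// Labels follow the wording shown on the page; the numeric codes are assumed.
const STATUS_LABELS = new Map([
  ["1", "expedite"],
  ["2", "slightly congested"],
  ["3", "congested"],
]);

// Turn a raw JSON response body into one of the labels above.
// Anything unexpected (bad JSON, missing fields, unlisted code) becomes "unknown".
function parseTrafficStatus(jsonText) {
  try {
    const data = JSON.parse(jsonText);
    // Hypothetical response shape: { trafficinfo: { evaluation: { status } } }
    const code = data?.trafficinfo?.evaluation?.status;
    return STATUS_LABELS.get(String(code)) ?? "unknown";
  } catch (err) {
    return "unknown";
  }
}

// requestUrl is supplied by the caller (for example, built from the map bounds).
async function fetchTrafficStatus(requestUrl) {
  const response = await fetch(requestUrl);
  return parseTrafficStatus(await response.text());
}
```

Keeping the parsing separate from the network call makes the “unknown” case easy to handle in one place, whether it comes from a failed request or from an area the API has no data for.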

Since my intention was to change the beat according to the traffic status, I tried to use setInterval(“playSomething(), frequency”) to achieve it, and I set the frequency in a format like this for every traffic status:

But then I found that, done this way, the functions wouldn’t stop no matter how the traffic status changed. In other words, changing the frequency did not stop the timers that were already running. Then I tried a “return” statement, but with “return” the webpage made no sound. Finally, for the sake of convenience, I only declared the frequencies inside each traffic status branch, created a new function outside the if statements that used the resulting frequency, and tried to use clearInterval(). However, the functions still would not stop when a new frequency was declared. This confused me for a long time until I met Leon. I realized that I needed to declare the variables at the top of the page, outside every function, so that they could be updated according to the frequency. A variable declared inside a function is only visible within that function and cannot be used by other functions, so I needed global variables to let my functions work together.
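A minimal sketch of the global-variable pattern described above, assuming a single horn sound. The names `hornTimer`, `playHorn`, `setHornInterval`, and `stopHorn` are made up for illustration and are not taken from the project. The key point is that the timer ID returned by setInterval() is stored outside every function, so whichever function runs next can pass it to clearInterval().

```javascript
// Declared at the top of the script, outside every function,
// so all functions below share the same timer ID.
let hornTimer = null;

// Placeholder for the real sound-playing code (hypothetical).
function playHorn() {
  console.log("honk");
}

// Start (or restart) the horn at a new interval in milliseconds.
// The old timer is cleared first; otherwise it would keep firing
// alongside the new one.
function setHornInterval(intervalMs, callback = playHorn) {
  if (hornTimer !== null) {
    clearInterval(hornTimer);
  }
  hornTimer = setInterval(callback, intervalMs);
}

// Stop the horn entirely, e.g. when the traffic status is "expedite".
function stopHorn() {
  if (hornTimer !== null) {
    clearInterval(hornTimer);
    hornTimer = null;
  }
}
```

With this in place, each traffic status only needs to call `setHornInterval()` with its own interval, or `stopHorn()` when no horns should play. Note also that setInterval() takes the function and the delay as two separate arguments, as in `setInterval(playHorn, 1000)`.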

Then came the p5.js part, which I wanted to use to visualize the sound (reference: https://p5js.org/examples/sound-frequency-spectrum.html). By referring to the sound examples in the p5.js reference, I found that the audio files had to be loaded within p5; otherwise nothing would be recognized. Therefore, I came up with the idea of reading all the sound coming from the computer as the sound source. However, I found that this is impossible. I later used the microphone input instead. But if the user plays with my project wearing earphones, the sound visualization shows nothing. After consulting Leon, I learned that I could wrap everything in script.js (map, squares, audio) in one function and then run this function within p5. By doing so, I was able to load sound files within p5 without needing to use the microphone. In order to make the p5 canvas fill the full screen instead of creating one with fixed pixel dimensions, I also referred to this example: https://p5js.org/reference/#/p5/windowResized.
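The p5 side can be sketched like this, loosely following the two p5.js examples referenced above. It is an illustration rather than the project's actual code: the file path `assets/traffic.mp3` is hypothetical, and the sketch assumes that both p5.js and the p5.sound library are loaded on the page.

```javascript
let sound; // the sound file, loaded by p5 so that p5.sound can analyze it
let fft;   // frequency analyzer attached to that sound

function preload() {
  // Hypothetical path; loading the file through p5 is what makes it
  // available to p5.FFT without going through the microphone.
  sound = loadSound("assets/traffic.mp3");
}

function setup() {
  // Fill the whole browser window instead of using fixed pixel sizes.
  createCanvas(windowWidth, windowHeight);
  fft = new p5.FFT();
  fft.setInput(sound);
}

function draw() {
  background(0);
  const spectrum = fft.analyze(); // amplitude values from 0 to 255
  noStroke();
  fill(0, 255, 0);
  const barWidth = width / spectrum.length;
  for (let i = 0; i < spectrum.length; i++) {
    const barHeight = map(spectrum[i], 0, 255, 0, height);
    rect(i * barWidth, height - barHeight, barWidth, barHeight);
  }
}

// Browsers usually require a user gesture before audio can start.
function mousePressed() {
  if (!sound.isPlaying()) {
    sound.loop();
  }
}

// Called by p5 whenever the browser window changes size.
function windowResized() {
  resizeCanvas(windowWidth, windowHeight);
}
```

The map and squares would then be set up by calling the single wrapper function from script.js inside `setup()`, so that everything starts from within p5.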

Due to my limited sense of aesthetics, I didn’t realize how distracting the colored square is, given that my main point is to present the traffic symphony. If I could redo the project, I would definitely redesign the layout of the page. In the future, I would experiment with the API more to see what it can offer, and extend the project beyond traffic. If I could read the land-use arrangement (which I think I could), I would find other ways to make sounds.