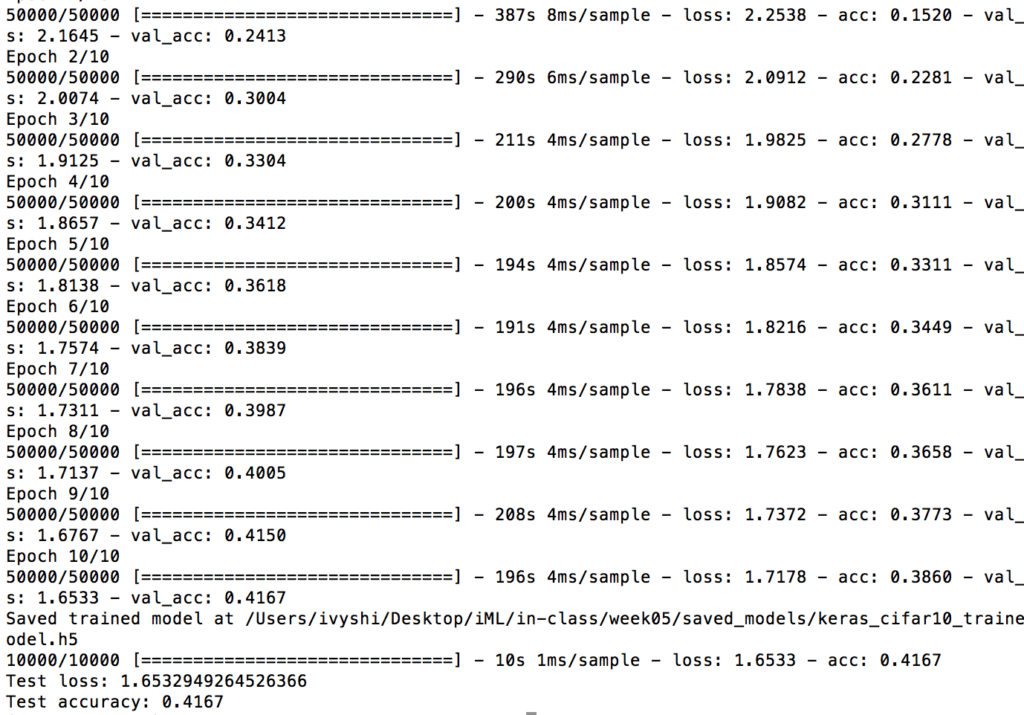

To train a CIFAR-10 CNN, I started with the default batch size of 2048 and ran it for 10 epochs on my 2015 MacBook Pro. During training, the loss went down and the accuracy increased as more epochs were trained. In the end, I got a test loss of 1.65 and a test accuracy of 0.4167. Each epoch took around 200 s to train.
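To put the timing in perspective, the snippet below works out the per-step cost from the numbers above. It assumes two things the post does not state. First, the run used the full 50,000-image CIFAR-10 training split with nothing held out for validation. Second, the last, partial batch counts as a step, as it does in most training loops, including Keras's `fit`.

```python
import math

# Figures from the run described above. The training-set size is the standard
# CIFAR-10 split and assumes no images were held out for validation.
TRAIN_IMAGES = 50_000
BATCH_SIZE = 2048
EPOCHS = 10
SECONDS_PER_EPOCH = 200  # approximate, as observed

steps_per_epoch = math.ceil(TRAIN_IMAGES / BATCH_SIZE)  # the partial last batch counts
seconds_per_step = SECONDS_PER_EPOCH / steps_per_epoch
total_minutes = EPOCHS * SECONDS_PER_EPOCH / 60

print(f"steps per epoch:     {steps_per_epoch}")        # → 25
print(f"seconds per step:    {seconds_per_step:.1f}")   # → 8.0
print(f"total training time: {total_minutes:.0f} min")  # → 33
```

With a batch size of 2048 there are only about 25 weight updates per epoch, so 10 epochs amounts to roughly 250 updates in total. A smaller batch size would give more updates per epoch for the same pass over the data.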

Project 1: How Far Are You? – Depth Perception Glasses By Ivy Shi

Project Description

This project, “How Far Are You?”, plays with depth and distorts people’s perception of distance with a pair of half-inverted telescope glasses. Two sets of concave-convex lenses are attached to an ordinary pair of glasses, installed in opposite directions. As a result, you see a magnified world with one eye and a minified world with the other. This creates a sense of confusion, as people can no longer accurately judge the distance of an object by sight.
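One way to reason about the optics is to treat each concave-convex lens pair as a small Galilean telescope. Its angular magnification is the convex lens's focal length divided by the magnitude of the concave lens's focal length, and looking through the same pair backwards gives the reciprocal. The focal lengths below are hypothetical, not measurements of the actual glasses. The last step also relies on a rough size-distance heuristic: an object that looks M times larger tends to look about M times closer. That heuristic is an assumption, not a measured result.

```python
def galilean_magnification(f_convex_mm: float, f_concave_mm: float) -> float:
    """Angular magnification of a Galilean telescope (convex objective, concave eyepiece).

    f_convex_mm must be positive and f_concave_mm negative (a diverging lens).
    """
    if f_convex_mm <= 0 or f_concave_mm >= 0:
        raise ValueError("expects a convex (f > 0) and a concave (f < 0) lens")
    return f_convex_mm / -f_concave_mm


# Hypothetical focal lengths, not the lenses actually used.
F_CONVEX_MM = 100.0
F_CONCAVE_MM = -50.0

forward = galilean_magnification(F_CONVEX_MM, F_CONCAVE_MM)  # convex lens faces the world
reversed_ = 1.0 / forward                                      # same pair, installed backwards

# Assumed size-distance heuristic: angular size scaled by M reads as distance d / M.
true_distance_m = 3.0
print(f"magnifying eye: x{forward:.2f}, object seems ~{true_distance_m / forward:.1f} m away")    # x2.00, ~1.5 m
print(f"minifying eye:  x{reversed_:.2f}, object seems ~{true_distance_m / reversed_:.1f} m away")  # x0.50, ~6.0 m
```

Under these assumptions, the two eyes report the same object at very different distances. This is one way to see why the glasses make distance hard to judge.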

I was first intrigued by the looking-backward glasses we experienced in class. While wearing those glasses, I noticed that I was able to see backwards but could not tell how far behind me things were. This experience inspired me to explore the interplay between vision and depth perception. My other inspiration came from cameras. As we know, many camera lenses can zoom in and out. What if we gave that capability to human eyes? I am interested in exploring these two concepts and seeing how they may interfere with or support each other.

Perspective and Context

iML Week 03: ml5.js WebCam Image Text Generation – Ivy Shi

For this week’s project, I experimented with different ml5.js models and made several attempts to implement them in interesting and/or useful ways.

My first idea was related to the KNN classifier. I was playing with two examples on the ml5.js website: KNN Classifier with Feature Extractor and KNN Classifier with PoseNet. The fact that they are able to distinguish between different poses really interested me, and I thought about the possibility of using this to classify gestures from sign languages. I then trained the classifier on my own data with some simple hand signs like “Hello” and “Thank you”. However, as I discovered, it was difficult for the algorithm to identify subtle differences in my hand movements. Only when there were major body movements did the classifier become really accurate.
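A k-nearest-neighbors classifier labels a new example by a majority vote among the k stored examples closest to it in feature space. The plain-Python sketch below uses Euclidean distance and made-up 2-D feature vectors. It is a generic illustration, not ml5.js's implementation, which works on much larger feature vectors from MobileNet or PoseNet and may use a different distance measure. It hints at why subtle hand signs are hard: when two classes sit very close together, a small change in the query can flip the vote.

```python
import math
from collections import Counter


def euclidean(a, b):
    """Straight-line distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def knn_predict(examples, query, k=3):
    """Return the majority label among the k stored examples nearest to `query`.

    examples: list of (feature_vector, label) pairs collected during training.
    """
    nearest = sorted(examples, key=lambda ex: euclidean(ex[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical 2-D features: the two hand signs overlap, the big movement does not.
examples = [
    ((0.10, 0.90), "hello"), ((0.12, 0.88), "hello"), ((0.11, 0.91), "hello"),
    ((0.14, 0.86), "thank you"), ((0.13, 0.87), "thank you"), ((0.15, 0.85), "thank you"),
    ((0.90, 0.10), "arms up"), ((0.88, 0.12), "arms up"), ((0.91, 0.09), "arms up"),
]

print(knn_predict(examples, (0.89, 0.11)))    # → arms up (all three neighbors agree)
print(knn_predict(examples, (0.125, 0.875)))  # → thank you (only a 2-1 vote)
```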

After some more brainstorming, I decided on the idea of turning webcam images into random text. This combines two ml5.js models, an image classifier and charRNN. It works like this:


Kinetic Light: Color Studies – Ivy Shi

Assignment 1

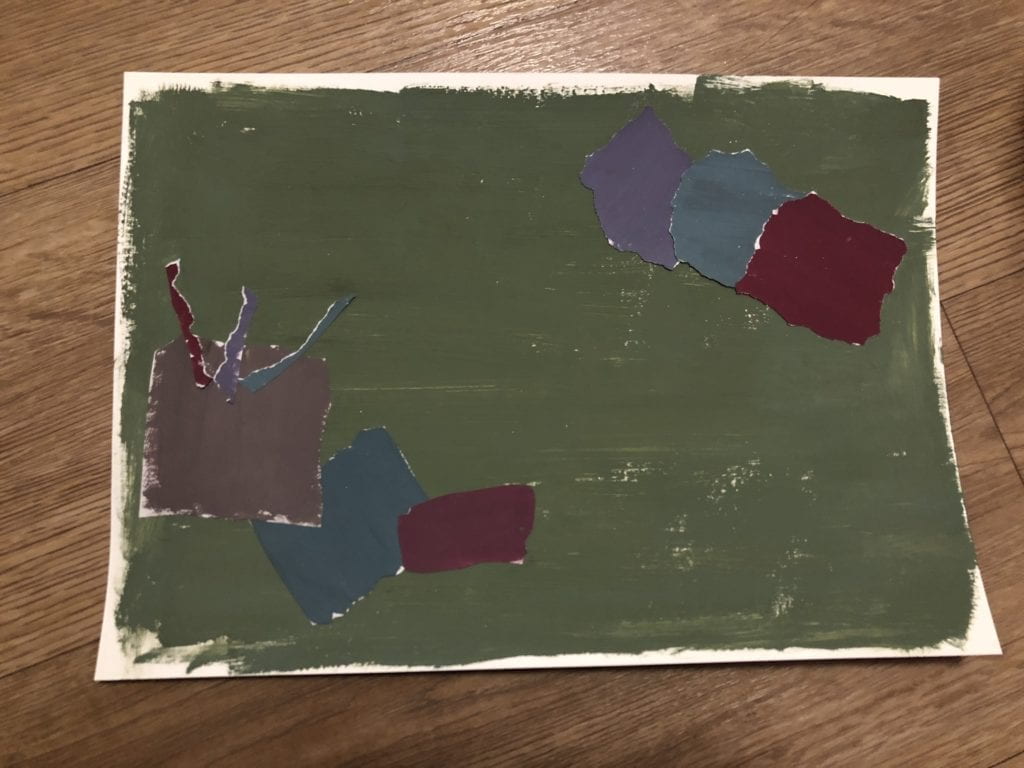

To create colors in the chromatic gray saturation range, I mixed different bright (prismatic) colors with gray. There is no specific design to the collage; I mostly just cut random shapes and assembled them. Because of the color scheme, the collage looks quite dark and depressing.
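Mixing a prismatic color with gray keeps its hue but pulls its saturation down, which is what produces chromatic grays. The sketch below approximates this digitally. It blends RGB values linearly and reads off hue, saturation and value with Python's standard `colorsys` module. This is only a screen-color analogy. Physical paint mixes subtractively, so real paint mixtures will not match these numbers exactly.

```python
import colorsys


def mix(c1, c2, t):
    """Linearly blend two RGB colors (components 0-1); t is the share of c2."""
    return tuple((1 - t) * a + t * b for a, b in zip(c1, c2))


PRISMATIC_RED = (1.0, 0.0, 0.0)
MID_GRAY = (0.5, 0.5, 0.5)

for t in (0.0, 0.25, 0.5, 0.75):
    h, s, v = colorsys.rgb_to_hsv(*mix(PRISMATIC_RED, MID_GRAY, t))
    print(f"gray share {t:.2f}: hue {h * 360:3.0f} deg, saturation {s:.2f}, value {v:.2f}")
```

In this approximation, the hue stays at 0° for every mix while saturation falls from 1.00 to 0.40. The value drops too, because the gray is darker than the pure red.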

Assignment 2

For this assignment, I mostly used the original prismatic colors straight from the paint bottles. The colors are purer and the collage looks brighter. However, all the colors still appear more muted than I envisioned, because the paint turned darker as it dried on the paper. This shows one difficulty of working with colors physically: they do not always turn out exactly the way we want.

Assignment 3

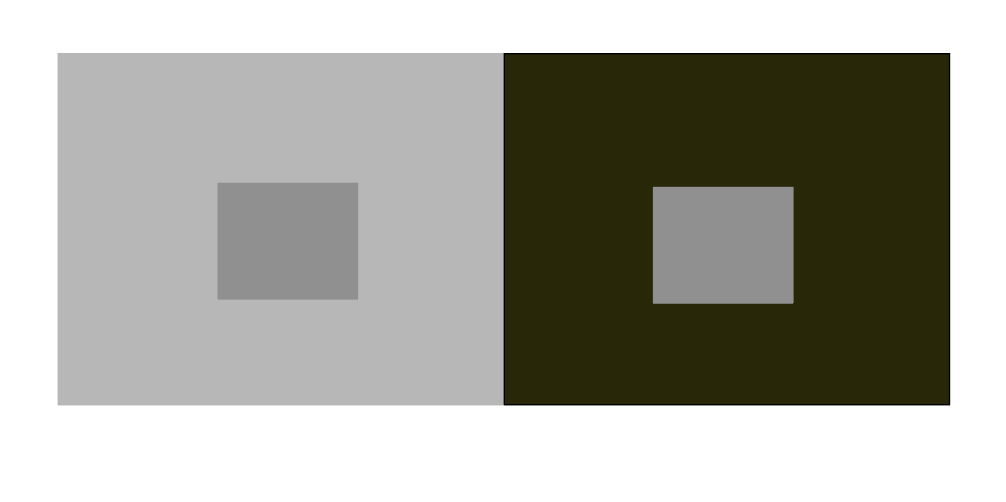

1) Using only grays, try to alter the value (brightness) of the single center color.

This is quite easy to achieve. To alter the apparent brightness of the center color, I just changed the brightness of the background color. A light gray background makes the central gray appear darker, and vice versa.
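To try the gray-on-gray exercise on screen, a small script can draw the same center square on two different surrounds. The sketch below builds an SVG string. The function name and hex values are hypothetical stand-ins, not the grays used in the collage. The script only places the swatches; judging the perceived shift is left to the viewer's eye.

```python
def contrast_study_svg(center, left_bg, right_bg, size=200, inner=60):
    """Build an SVG with two panels: the same center color on two different surrounds."""
    offset = (size - inner) // 2  # centers the inner square within each panel

    def panel(x, bg):
        return (
            f'<rect x="{x}" y="0" width="{size}" height="{size}" fill="{bg}"/>'
            f'<rect x="{x + offset}" y="{offset}" width="{inner}" height="{inner}" fill="{center}"/>'
        )

    return (
        f'<svg xmlns="http://www.w3.org/2000/svg" width="{2 * size}" height="{size}">'
        + panel(0, left_bg)
        + panel(size, right_bg)
        + "</svg>"
    )


# Hypothetical values: one mid gray on a light surround and on a dark surround.
svg = contrast_study_svg(center="#808080", left_bg="#d9d9d9", right_bg="#262626")
print(svg)  # save this text as a .svg file and open it in a browser to view
```

Swapping in colored hex values turns the same function into a quick test bed for the hue and saturation variations as well.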

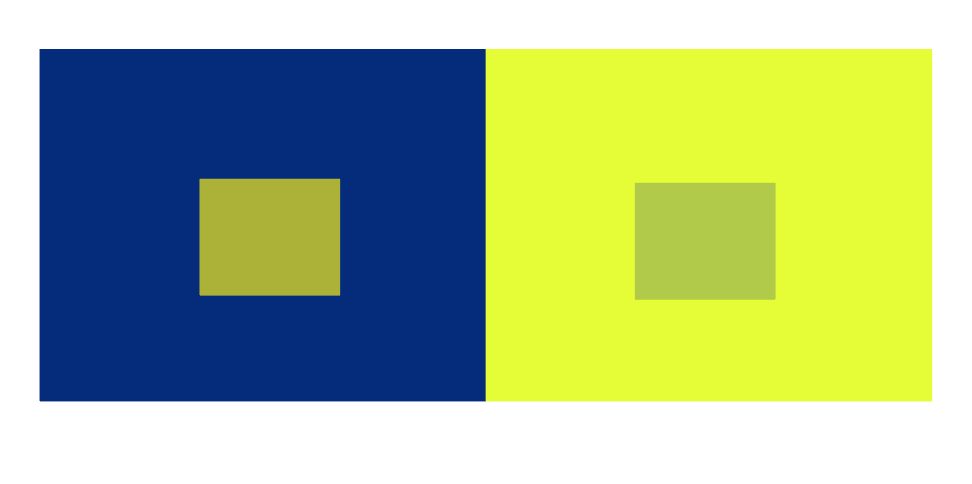

2) In color, alter the hue of the single center color.

It was a bit difficult to figure out how to change only the hue in the morning. As I looked at the hue progression more closely, I came up with the idea of choosing a center color that already sits between two hues (a mix of two colors). In this case, I chose a center color somewhere between yellow and green so it could go in either direction. On the left side, I used blue as the background. Because yellow and blue are contrasting colors, the center color appears more yellow. On the right side, I used a purer yellow to contrast with the center color, so it appears more green.
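The reasoning about an in-between center color can be checked with hue angles on the RGB-based color wheel, where yellow sits at 60°, green at 120° and blue at 240°. The sketch below uses Python's `colorsys` with idealized screen colors. The center value is a hypothetical yellow-green chosen to sit exactly halfway, not the actual swatch.

```python
import colorsys


def hue_deg(rgb):
    """Hue angle in degrees of an RGB color with components in 0-1."""
    return colorsys.rgb_to_hsv(*rgb)[0] * 360


def hue_gap(a, b):
    """Shortest angular distance between two hues, in degrees."""
    d = abs(a - b) % 360
    return min(d, 360 - d)


YELLOW = (1.0, 1.0, 0.0)
GREEN = (0.0, 1.0, 0.0)
BLUE = (0.0, 0.0, 1.0)
CENTER = (0.5, 1.0, 0.0)  # hypothetical yellow-green

center = hue_deg(CENTER)
print(f"center hue: {center:.0f} deg")                                             # 90
print(f"distance to yellow: {hue_gap(center, hue_deg(YELLOW)):.0f} deg")           # 30
print(f"distance to green: {hue_gap(center, hue_deg(GREEN)):.0f} deg")             # 30
print(f"yellow vs blue: {hue_gap(hue_deg(YELLOW), hue_deg(BLUE)):.0f} deg apart")  # 180
```

A center hue equidistant from yellow and green has no built-in lean, so the surround can tip it either way. On this wheel, blue sits directly opposite yellow.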

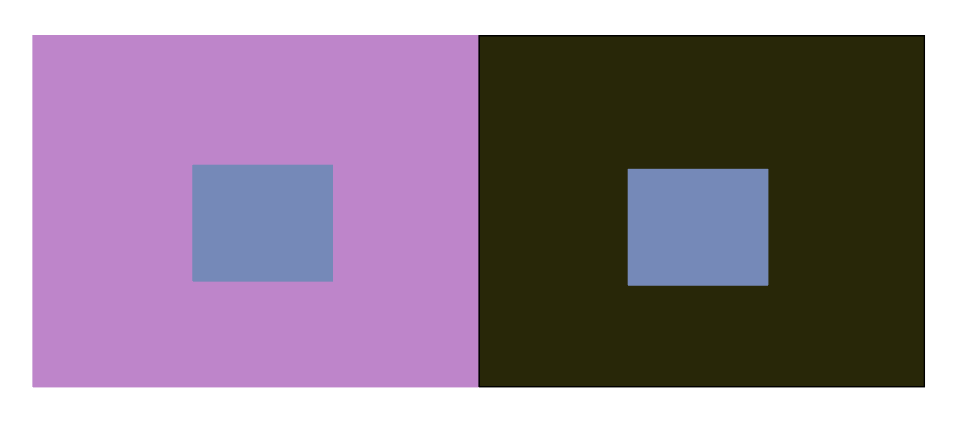

3) Try to alter only the saturation of the single center.

To alter the saturation of the center color, I thought about changing the saturation of the background colors. A more saturated background makes the center color appear less saturated. After experimenting with different shades of purple and gray, I settled on this color combination.

4) Try to alter the hue, saturation, and brightness.

I basically combined what I learned in the previous three parts to alter the hue, saturation and brightness together. The background colors differ in hue as well as in saturation and brightness. I fine-tuned the parameters until the effects appeared most prominent.

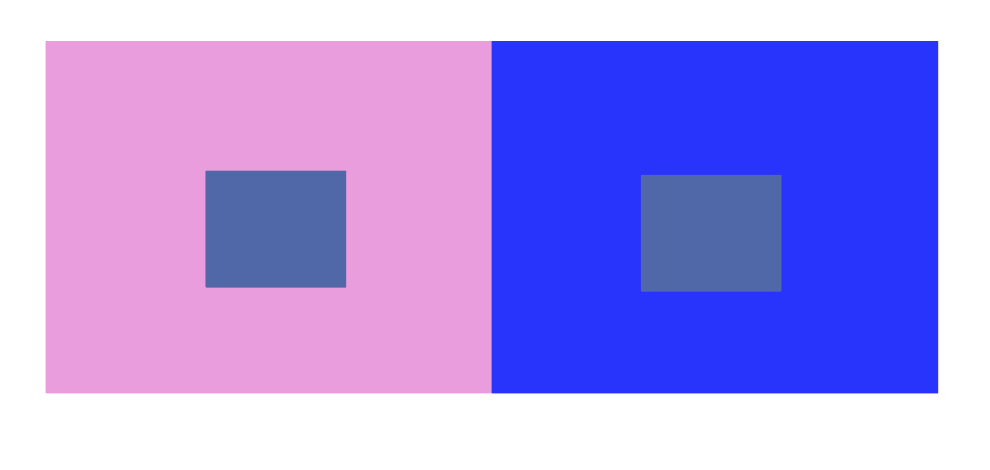

5) Make two different colored center squares look like the same color by modifying the surround squares.

After some failed attempts, I realized the key to making this work is to make the center colors very similar. I used the same hue but slightly altered the saturation. To make the colors appear the same, I made both backgrounds blue in hue, with one less saturated and the other more saturated.

Assignment 4

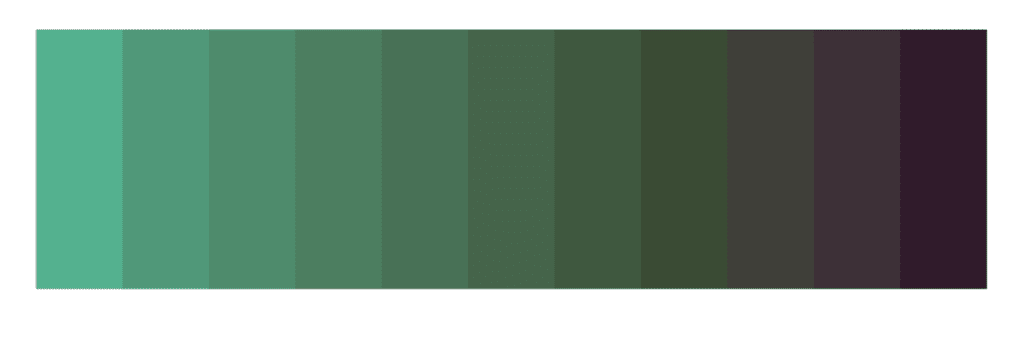

Create two separate sequences of color:

Change in Brightness and Saturation

Brightness: light green to very dark green (almost black)

Saturation: more saturated to less saturated

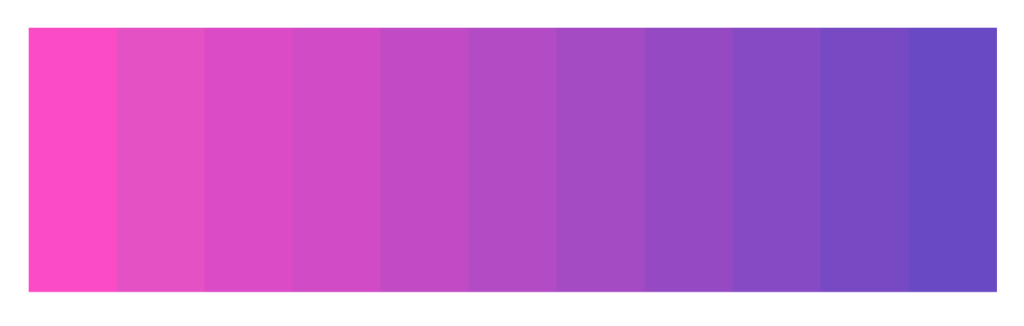

Hue and Saturation

Hue: pink to yellow

Saturation: more saturated to less saturated
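Each sequence can be thought of as evenly stepping one or two HSV components between a start color and an end color. The sketch below interpolates hue, saturation and value with Python's `colorsys` and returns hex codes. The function name and endpoint values are hypothetical. One design point: hue is an angle, so going from pink (around 330°) to yellow (60°) should take the short way through red rather than sweeping backwards through blue and green. The wrapped `dh` handles this.

```python
import colorsys


def hsv_sequence(start, end, steps):
    """Evenly step from `start` to `end` (HSV tuples, components 0-1) and
    return the colors as hex strings. Hue moves along the shorter arc."""
    if steps < 2:
        raise ValueError("need at least two steps")
    h0, s0, v0 = start
    h1, s1, v1 = end
    dh = ((h1 - h0 + 0.5) % 1.0) - 0.5  # signed hue change, wrapped to [-0.5, 0.5)
    colors = []
    for i in range(steps):
        t = i / (steps - 1)
        h = (h0 + dh * t) % 1.0
        s = s0 + (s1 - s0) * t
        v = v0 + (v1 - v0) * t
        r, g, b = colorsys.hsv_to_rgb(h, s, v)
        colors.append("#{:02x}{:02x}{:02x}".format(*(round(c * 255) for c in (r, g, b))))
    return colors


# Hypothetical endpoints for the two sequences described above.
green_to_dark = hsv_sequence((120 / 360, 0.8, 0.9), (120 / 360, 0.4, 0.1), steps=6)
pink_to_yellow = hsv_sequence((330 / 360, 0.9, 1.0), (60 / 360, 0.3, 1.0), steps=6)
print(green_to_dark)
print(pink_to_yellow)
```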

Two sequences interspersed:

Instead of just combining the two sequences, I tried to add more dynamics to the final product by decreasing the size of one of the sequences. As a result, the piece not only changes in color properties but also suggests some movement.
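Interspersing two sequences while shrinking one of them amounts to alternating their stripes and scaling down the second sequence's stripe width at each step. The sketch below uses a hypothetical function name, hypothetical widths (in pixels) and a hypothetical shrink factor, plus placeholder labels instead of real colors. In practice, each list would hold the colors of one sequence.

```python
def intersperse(seq_a, seq_b, width=40, shrink=0.8):
    """Alternate stripes from two color sequences.

    Stripes from seq_a keep a constant width; stripes from seq_b get
    narrower by the factor `shrink` at every step.
    Returns a list of (color, width) pairs in drawing order.
    """
    stripes = []
    width_b = float(width)
    for color_a, color_b in zip(seq_a, seq_b):
        stripes.append((color_a, width))
        stripes.append((color_b, round(width_b)))
        width_b *= shrink
    return stripes


stripes = intersperse(["A1", "A2", "A3"], ["B1", "B2", "B3"])
print(stripes)
# → [('A1', 40), ('B1', 40), ('A2', 40), ('B2', 32), ('A3', 40), ('B3', 26)]
```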

Assignment 5

Before putting together new shapes and color combinations for this assignment, I first considered the possibility of recreating and combining ones I had made in the earlier parts. I used the color blocks from assignment 3.4 and duplicated them to fill the entire frame. There is a very strong afterimage effect. The positive afterimage of the purple color is especially prominent on the blue strips. I also felt dizzy when staring at it for a while.

I got really interested in creating kinetic artworks, so I decided to make more. The next one is inspired by Walter LeBlanc’s work Mobile-Static M 0 2.

It reminds me of the color sequences I had just made. I built upon the interspersed sequence and created this:

I made three copies of the sequence and placed them from top to bottom. Then I inverted the middle strip to create some contrast in motion. The resulting image is quite interesting. The middle strip seems to sit above the rest of the plane, creating a sense of depth even though all three strips lie in the same plane. The contrast in motion is very obvious in the changing size of the pink-purple rectangles.

After these assignments, I noticed significant differences between physical and digital color studies. Physical studies are more hands-on but are restricted to the colors available to us. Colors also change depending on the medium, as I discussed in Assignment 2. Digital colors offer more flexibility: it is easy to see subtle color changes and their effects immediately. However, digital color suffers from a similar problem, since colors may appear different on different screens.

iML Week 02: Case Study – Ivy Shi

Style Transfer – Real-Time Food Category Changer

One interesting project involving machine learning and artificial intelligence that I came across online is called “Real-time Food Category Change”. It is a food style transfer project led by Ryosuke Tanno and presented at the European Conference on Computer Vision in 2018. The idea is nothing grandiose: it simply allows users to change the “appearance of a given food photo according to the given category [among ten typical Japanese Food].” For example, you can transform a bowl of ramen noodles into curry rice by exchanging the texture while preserving the shape.

Here are some sample image results:

More at: https://negi111111.github.io/FoodTransferProjectHP/

The results are achieved by training a Conditional CycleGAN on a large food image database: 230,000 food images collected from the Twitter stream and grouped into 10 categories for image transformation. Conditional CycleGAN extends CycleGAN with additional conditional inputs. This modification is necessary to overcome CycleGAN’s limitation of learning image transformation only between one fixed pair of domains. More details on the algorithm and technical considerations can be found in this paper: Magical Rice Bowl: A Real-time Food Changer.
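For reference, the first line below is CycleGAN's cycle-consistency term, for two generators G: X → Y and F: Y → X. The second line is a generic sketch of how a single conditional generator G(x, c) can express the same idea across many categories. It translates an image of source category c_s to target category c_t (say, ramen to curry rice) and back, then penalizes any difference from the original. This conditional form is an illustrative assumption, not necessarily the exact objective used in the Magical Rice Bowl paper. In practice, both terms are combined with adversarial losses.

```latex
\begin{aligned}
\mathcal{L}_{\text{cyc}}(G, F) &=
  \mathbb{E}_{x \sim p_X}\!\left[\lVert F(G(x)) - x \rVert_1\right]
  + \mathbb{E}_{y \sim p_Y}\!\left[\lVert G(F(y)) - y \rVert_1\right] \\
\mathcal{L}^{\text{cond}}_{\text{cyc}}(G) &=
  \mathbb{E}_{x,\, c_s,\, c_t}\!\left[\lVert G\big(G(x, c_t),\, c_s\big) - x \rVert_1\right]
\end{aligned}
```

The cycle term encourages the output to keep the input's layout, such as the shape of the bowl. The adversarial term pushes the texture toward the target category.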

As someone who enjoys taking photos and eating food, I personally found this implementation a lot of fun. Additionally, the fact that such food image transformation can run in real time on both smartphones and PCs is quite impressive. There are even practical future applications, such as combining it with virtual reality to unlock new eating experiences. For example, people on a diet who are trying to limit their intake of high-calorie foods could eat low-calorie foods in reality while still enjoying high-calorie foods in their VR glasses.