Midterm Project: You’ll NEVER Catch Me, Mom!

Instructor: Marcela

Partners: Roger, Justin Wu

CONTEXT AND SIGNIFICANCE

My midterm project proposal was inspired by my personal definition of interaction. In my Group Project documentation, I define interaction as “a continuous relationship between two actors that is composed of communication through verbal, physical, and/or mental feedback. I chose to include mental feedback because if something or someone makes you feel something inside, then there is a relationship there, even if it isn’t a visible relationship.” I strongly believe that interaction should involve, and reside in, the experience of emotion. Only if an interaction yields a “feeling” can it be considered purposeful interaction. I also believe that interaction exists on a spectrum. For example, in class we discussed the simple interaction of opening the refrigerator and the light turning on. While this is a very simple interaction, it still falls under my definition because it makes you feel something inside. You may be curious to see whether there is any food you enjoy inside because you are hungry. If you find food, you will be happy to have something stocked in your fridge that you can enjoy! If you don’t, you’ll be sad and disappointed, left hungry and forced to resort to other options. I liked this discussion in class because it shows that even this simple interaction can affect your emotions, even if you don’t realize it.

During my research on interactive projects, I encountered a few that captured my attention and helped me form my definition of interaction. Kyle McDonald’s Sharing Faces was one of the first projects that made a mark on me. It formed an instant connection that made me excited and eager to learn more about why McDonald decided to create it. I used this feeling to help drive the thought process behind our midterm project: we wanted to create something that we all cared deeply about and that made us feel something.

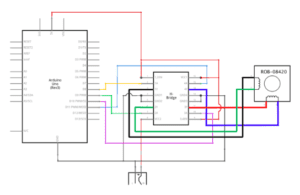

Essentially, our project is a light sensor and a “night light” mounted on opposite walls of the hallway between a child’s room and their parents’ room. When the sensor detects that the light is blocked (i.e., when a parent walks past), it automatically turns the main light and TV (sound and screen) in the child’s room off and on. Originally, we designed our project to be life-size; however, we decided it would be easier to first build a scale prototype, the kind of model architects typically design and build before constructing the real thing.

Our project embodies a special feeling: nostalgia. Technology that turns lights on and off when it senses your presence already exists, but our project is more specific, designed for the unique situation of simply wanting to stay up late to play video games. We wanted to take automatic light sensors to the next level and create a project that we personally would have wanted back in our childhood! Our project is intended for children who want to play video games throughout the night without the added pressure of their parents catching them and being disappointed in them. We want to offer these kids something we wish we had known about when we were younger. When we think about the concept of our project, we think back to those flashes of fear or relief we felt as children, compare them to now, and are reminded of the simpler times of our childhood. Back then, in the moment, we were probably annoyed, but looking back now, we can’t help but smile a little to ourselves.

CONCEPTION AND DESIGN:

We struggled a lot with project development. Since we switched from a life-size model to a mini prototype, we had to change our original plan of using an infrared sensor to a light sensor, because we weren’t sure an infrared sensor would detect a small figurine. From there, our idea went through many stages of development, and we constantly tried to link it back to the life-size model we had originally envisioned.

We started out with a very raw model built from whatever materials we had on hand: half a cardboard box, paper, two LEDs, a light sensor, a motor, a straw, and our Arduino and breadboard. Everything was held together with hot glue and tape. It was not pretty, but it was what we had at the moment, so we went with it. We needed the LEDs for the “night light” and the “lamp” in the kid’s room, and we used the motor as a way to show the child’s thoughts to the user. We didn’t have particular criteria for materials; we just wanted a very simple model for user testing. Due to a time crunch, we weren’t able to truly consider all of our options. We anticipated that the user would want to move the mom from her room to the child’s room to see what would happen, so we attached a straw as a “handle” to move the mom back and forth across the hall. Roger coded the light sensor’s trigger range so that the LED turned off, along with the rotation of the motor, which carried a bit of story context to set up the scene. After each run, we had to manually reset the project.

We originally had the light in the room attached to the wall as a “lamp,” but after discussing it with Marcela, we realized the light turning on and off would be more noticeable if it hung from the top of the room as a main ceiling light.

FABRICATION AND PRODUCTION:

After the User Testing session, we all had a number of ideas for improving ALL aspects of our project. We really wanted to raise the bar and make the project the best it could be in the time we had. We tackled the following issues:

Manual reset upgraded to self-reset using “stages”:

We found it extremely frustrating when users began moving the mom before we had reset the Arduino. We also noticed that many people liked to move the mom back and forth to see what would happen on the way back, but nothing did. This led us to improve the code so that the kid’s room returns to its previous state, with everything on, when the mom leaves. After much research and help from our wonderful fellows, we did this by dividing the program into “stages”. Roger coded the mom’s resting position in front of her room as “stage 1”, the moment the mom blocks the LED night light as “stage 2” (which turns everything off), the mom reaching the child’s door as “stage 3”, and the mom walking back to her room and blocking the light again as “stage 4”. When stage 4 returns to stage 1, the whole model effectively resets. By creating these states, we built a holistically working model in which the user can move the mom back and forth along the hall and see the changes.
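The stage logic described above behaves like a small state machine driven by a single light reading. As a sketch of the transitions in our loop() (rewritten as plain desktop C++ so it can be checked without a board; the helper names nextStage and roomLightsOn are hypothetical), it looks roughly like this:

```cpp
#include <cassert>

// Desktop C++ restatement of the stage transitions in our loop().
// nextStage() is a hypothetical helper, not part of the Arduino sketch.
// A reading below bal1 means the mom is blocking the night light;
// a reading above bal2 means the light reaches the sensor again.
int nextStage(int stage, int reading, int bal1, int bal2) {
  assert(stage >= 1 && stage <= 4);
  switch (stage) {
    case 1:  // mom rests at her door; lamp, TV, and music are on
      return reading < bal1 ? 2 : 1;  // she blocks the light: turn everything off
    case 2:  // mom is crossing the sensor
      return reading > bal2 ? 3 : 2;  // light visible again: she is at the kid's door
    case 3:  // mom is at the kid's door
      return reading < bal1 ? 4 : 3;  // she blocks the light on her way back
    default:  // stage 4: mom is crossing back
      return reading > bal2 ? 1 : 4;  // light visible again: reset, everything back on
  }
}

// The lamp and TV are on only in stage 1.
bool roomLightsOn(int stage) { return stage == 1; }
```

Feeding it the readings from one round trip (blocked, clear, blocked, clear) walks it through stages 2, 3, 4 and back to 1. Because every crossing simply advances the stage, a single sensor effectively counts crossings and cannot tell which way the mom is walking.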

However, we found one loophole. Because the light sensor takes time to register changes and update the stage, there was one scenario in which the mom was able to “catch” the kid (i.e., everything was still on). If the user moved the mom to “stage 2”, turning everything off in the kid’s room, then back to “stage 1”, and then very quickly to “stage 3”, the sensor could not keep up with the movement, so everything in the kid’s room was on when the mom arrived, letting her “catch the kid”. Given our time constraints, we decided to leave the loophole in for the final showing as an almost realistic trick your parents could pull on you: they give you a false sense of security, you think you got away with playing games all night, and then they still outsmart you, because your parents know everything. We found that we could solve the loophole by adding a second light sensor across from the kid’s room, so that the mom’s arrival there is registered more quickly instead of relying only on the single light sensor in the middle of the hall.
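One likely contributor to the loophole, judging from the code rather than from measurements, is that the sketch does not watch the sensor continuously: it reads it once per pass through a while loop (after delay(100)), and in stage 1 only between notes of the song. The hypothetical helper below models that as sampling at a fixed interval and reports whether a blockage of a given length is ever seen; the timings are illustrative assumptions.

```cpp
#include <cassert>

// Models polling: the sensor is read at t = 0, periodMs, 2 * periodMs, ...
// The mom blocks the light from blockStartMs for blockLenMs milliseconds.
// Returns true if at least one reading falls inside the blockage.
// blockageSeen() is a hypothetical helper for illustration only.
bool blockageSeen(int blockStartMs, int blockLenMs, int periodMs) {
  assert(periodMs > 0 && blockStartMs >= 0 && blockLenMs >= 0);
  // First sample time at or after the moment the blockage starts.
  int firstSample = ((blockStartMs + periodMs - 1) / periodMs) * periodMs;
  return firstSample < blockStartMs + blockLenMs;
}
```

With a 100 ms interval, a 50 ms blockage starting at 30 ms falls between readings and is missed, while a 150 ms one is caught; longer intervals, such as the time spent playing a note, widen the gap.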

Addition of music, TV light, and child movement via servo to emphasize the change:

User testing showed us that we needed a more dramatic change to make our point. Many people didn’t even notice the light in the kid’s room turn off because they were so focused on reading the rotating comments attached to the motor. This led us to make a number of additions and changes to the child’s room. After Dave tested our project, he suggested adding video game sounds to emphasize the change when the parent triggered the light sensor. So, we added a buzzer and looked online for help coding the Super Mario Bros. theme song (THANKS to Dipto Pratyaksa for writing the sketch and sharing it with us!). We adapted the code to our model by removing its constant music loop so that the song only plays when the correct stage is triggered. It took us a while to figure out how to stop the music when the stage changed and restart it from the beginning when it was triggered again. We also added an extra light for a TV to further emphasize the change. We decided our model should have a roof as well, so we could hang the light from the “ceiling” and show a clear change when it turned off. With the light dangling, users could more easily see the moment it shut off, and the light brightened the whole room. We also added a servo to move the child from sitting to lying down, reflecting what we would have done in that situation: if the light sensor was triggered and everything turned off, we would have lain down immediately and pretended to be asleep. While this wasn’t an aspect that was truly triggered by the light sensor, we included it to further emphasize the change.
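The stop-and-restart behavior comes from how sing() checks the sensor before every note. The desktop C++ sketch below (hypothetical helper names; each entry of readings stands in for one analogRead(A0) taken before a note) isolates that logic along with the timing arithmetic the sketch uses for each note:

```cpp
#include <cassert>
#include <vector>

// Mirrors the idea in sing(): before each note, re-read the sensor and stop
// as soon as the light is blocked (reading <= bal2). Because the note index
// starts at 0 on every call, the song restarts from the beginning next time.
// notesPlayed() is a hypothetical helper, not part of the Arduino sketch.
int notesPlayed(const std::vector<int>& readings, int bal2) {
  int played = 0;
  for (int reading : readings) {
    if (reading > bal2) {
      ++played;  // sing() would call buzz() for this note
    } else {
      break;     // mom is blocking the light: stop the music
    }
  }
  return played;
}

// Same arithmetic as sing(): a tempo value of 12 means 1000 / 12 ms per note,
// and the wait before the next note is that duration times 1.30 (truncated).
int noteDurationMs(int tempoValue) { return 1000 / tempoValue; }
int pauseMs(int noteDuration) { return static_cast<int>(noteDuration * 1.30); }
```

A tempo value of 12 gives 1000 / 12 = 83 ms per note plus a 107 ms wait, so the song only notices the mom once per note, roughly every 190 ms.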

Front-facing handle and 3-D figurines to make usage clearer to the user:

Another aspect we decided to improve was visibility and ease of use. After Tristan tested our project, he mentioned that, being very tall, he couldn’t see the paper picture of the mom and was confused about what to move. With this feedback, we added more 3-D components (i.e., Legos) to our model and moved the handle from the side to the front. These changes improved both the usability of our project and users’ understanding of it.

Upgrade cardboard box model to laser-cutter box:

In addition to the changes to the handle and figurines, we decided to make our model resemble one of the dollhouses little kids play with. We wanted a two-story house where the first floor hid the “back-end” mess of the Arduino, the breadboard, and all the jumper cables. We used the makerspace to design a box with finger joints and the laser cutter to cut the pieces before assembling the whole thing. We had a slight hiccup while laser cutting because we thought we had broken the laser cutter for a second, but Leon came and saved the day! He just moved the table down (LOL). This improved our project enormously because it gave the design a much sleeker look, even though it was just a prototype. We realized that architects still build their miniature models as well as they can to demonstrate their credibility to build the real thing, even if the models go to waste afterward. We laser cut two open boxes, with the top box a little smaller than the bottom one, and a roof. We also made cutouts in the wood where we wanted to thread cables through, plug in the Arduino, and let the handle pass.

Usage of Serial Monitor to convey story text instead of physical comments:

We received a lot of feedback asking for a storyboard or more context. We removed the motor because we thought it would be too distracting for the user to read comments and register the change in the model at the same time, so we decided to print the comments on the Serial Monitor instead. This way, the user wouldn’t be distracted by so many things at once in such a condensed space. In addition, many people asked what perspective the user was playing from, because the user controlled the mom while the comments came from the kid’s perspective. With this feedback, we decided to have the Serial Monitor text indicate who is speaking, mainly from the mom’s perspective, while including a few of the child’s thoughts to add a little humor.
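If the dialogue grows, one option is to keep each stage’s Serial Monitor lines in a single table, with the speaker named at the start of every line, rather than repeating Serial.println() calls in every branch. This is a hypothetical refactor shown in desktop C++ (kStoryLines and storyFor are illustrative names); the strings are the ones from our sketch:

```cpp
#include <cassert>
#include <string>

// Hypothetical table of story text: row = stage 1-4, unused slots = nullptr.
// Every line starts with the speaker so the user knows who is talking.
const char* const kStoryLines[4][3] = {
    {"Roger: I want to stay up late for playing games",
     "Roger: But I'm afraid about my Mom coming to my room",
     "Mom: I am going to see whether Roger is asleep right now..."},
    {"Roger: OH MY GOD, she is coming!!!", nullptr, nullptr},
    {"Mom: Oh, he is sleeping! What a good little boy!", nullptr, nullptr},
    {"Roger: See, with this added technology, I will always be safe and be "
     "able to play games for as long as I want",
     nullptr, nullptr},
};

// Returns the lines for one stage (1-4), each followed by a newline.
std::string storyFor(int stage) {
  assert(stage >= 1 && stage <= 4);
  std::string text;
  for (const char* line : kStoryLines[stage - 1]) {
    if (line == nullptr) break;  // no more lines for this stage
    text += line;
    text += '\n';
  }
  return text;
}
```

On the Arduino, each non-empty line would instead be passed to Serial.println() when the stage begins.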

A number of people suggested a storybook or something similar to tell a story, but we decided to keep it simple because that idea would have taken our project away from our original life-size model. We didn’t want to hold people’s interest by telling a separate story, but through a connection: a common understanding or experience from their childhood. We felt that if we told a story, the project wouldn’t evoke the personal thoughts and memories that would otherwise arise from the users themselves; instead, they would adopt the mindset of the story’s characters.

Testing:

Since we hadn’t been able to cut the box yet, we couldn’t test and code with the real model. Instead, we used a potentiometer in place of the mom to mimic her moving through the stages. After we cut the box, we realized that the potentiometer was much more accurate than the model of the mom. Because the environment and the amount of external light kept changing, we had to adjust the light-sensor range for each stage (the thresholds bal1 and bal2 in our code) before every testing session to suit the situation. This is something we would like to improve, so the project can adapt to children in different locations with different amounts of external light.
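One possible way to avoid retuning the thresholds by hand, sketched here as an assumption rather than something we built: average a few readings taken while nothing blocks the night light, then set bal1 and bal2 as percentages of that baseline. The function name calibrate and the 60% / 75% split are hypothetical values to be tuned; keeping bal1 below bal2 adds hysteresis so a reading hovering near one threshold does not flip the stage back and forth.

```cpp
#include <cassert>
#include <vector>

// Hypothetical auto-calibration result, using the sketch's threshold names.
struct Thresholds {
  int bal1;  // below this reading: light blocked (mom is in the way)
  int bal2;  // above this reading: light visible again
};

// Averages readings taken with the light unblocked, then places both
// thresholds as integer percentages of that baseline (assumed 60% and 75%).
Thresholds calibrate(const std::vector<int>& unblockedReadings,
                     int blockPercent = 60, int clearPercent = 75) {
  assert(!unblockedReadings.empty());
  long sum = 0;
  for (int r : unblockedReadings) sum += r;
  const long baseline = sum / static_cast<long>(unblockedReadings.size());
  return {static_cast<int>(baseline * blockPercent / 100),
          static_cast<int>(baseline * clearPercent / 100)};
}
```

On the board, the readings would come from analogRead(A0) in setup(), before anyone moves the mom.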

CONCLUSION:

The goal of our project was to create something joyful and meaningful that the three of us would connect with. Considering my definition of interaction, I feel that our project aligns closely with it, especially the part about making you feel something inside. However, the “continuous relationship” part is weaker, because the project receives the same set of inputs and displays the same feedback every time, which makes users less likely to come back or be further intrigued. But that isn’t to say it isn’t interactive. Like my refrigerator example above, even a simple interaction can generate a subconscious feeling within you when you engage with it, and our project works in a similar way. It also depends on the user and whether they connect with the project. Our project brings feelings of nostalgia and entertainment. We are reminded of what our lives and our problems used to be, and from that, we can laugh at ourselves and reminisce about a time when things were much simpler.

Looking at audience interaction, it definitely helped that we were able to explain where our passion for the project came from alongside our demonstration. The backstory is what gives the project depth and meaning. Without it, we just have a functional and humorous project: the user moves the handle, sees the different stages being triggered and things turning off, and maybe laughs at our pictures or at how funny the concept is. But when we attach the aspect of nostalgia, the user is able to connect more deeply. If we had more time, I would try to incorporate more backstory to connect with more users, maybe through the Serial Monitor. I would also try to figure out how to make the light-sensor ranges adapt to any environment, so we wouldn’t have to recalibrate for the external light each time we set up.

I think we also struggled a bit with moving from our original life-size model to a miniature one. We kept thinking as if it were still the life-size model and had to change our mindset to see things as a project rather than a product. I’ve realized that adapting your mindset is really important when creating a project. It’s easy to think only from your perspective as the maker, but much harder to put yourself in your user’s shoes. I think this is why user testing was so helpful to us. All three of us were so caught up in the functionality of the project that we forgot to step back and think things through from the user’s perspective. And as you can see, our project improved so much simply by considering more aspects from the user’s side.

Overall, I am so happy with how far we came from where we started. Initially, I wasn’t a big fan of the idea because I wasn’t sure about its feasibility as a project. But now, I take a lot of pride in it, even if it’s not perfect yet. I’m very proud of my team. We hit a few bumps in the road over ideas and how to approach certain aspects, but in the end, we were all able to converge and create something that we all connect with and that has meaning to us. We are all strongly invested in our project because each of us connects with the ideas behind it, and we can’t help but feel proud of how far we’ve come since our first meeting, when we were completely in the dark about what to do.

This midterm project was more than just a simple group project. The experience was the most rewarding part, and something I think everyone should have the chance to experience; it was just as important as the final product, if not more so. Traditional exams or working alone don’t bring the unique benefits of working in a group from scratch: brainstorming ideas, getting to know your teammates better, and creating something you are all proud of, despite the many frustrations along the way. Each person brings something amazing to the table, and seeing them excel or get frustrated lets you reflect on yourself, your position in the group, and how you can help the group flourish. You learn more about your strengths and weaknesses and use them to help other members, or let other members help you. Along with this exchange of knowledge, we all had moments where we just wanted to stop and give up for a bit. There was a lot of failure and code that didn’t work at every stage of development. But we learned that to reach the next stage, you must persist, and the encouragement of your teammates helps you do that. I’ve learned that your teammates contribute hugely to a successful project, not through how good someone is at drawing or coding, but through bouncing ideas off each other positively and encouraging each other to improve and learn more, creating something you all take profound pride in.

CODE:

#include <Servo.h>

// Note frequencies in Hz
#define NOTE_C1 33
#define NOTE_CS1 35
#define NOTE_D1 37
#define NOTE_DS1 39
#define NOTE_E1 41
#define NOTE_F1 44
#define NOTE_FS1 46
#define NOTE_G1 49
#define NOTE_GS1 52
#define NOTE_A1 55
#define NOTE_AS1 58
#define NOTE_B1 62
#define NOTE_C2 65
#define NOTE_CS2 69
#define NOTE_D2 73
#define NOTE_DS2 78
#define NOTE_E2 82
#define NOTE_F2 87
#define NOTE_FS2 93
#define NOTE_G2 98
#define NOTE_GS2 104
#define NOTE_A2 110
#define NOTE_AS2 117
#define NOTE_B2 123
#define NOTE_C3 131
#define NOTE_CS3 139
#define NOTE_D3 147
#define NOTE_DS3 156
#define NOTE_E3 165
#define NOTE_F3 175
#define NOTE_FS3 185
#define NOTE_G3 196
#define NOTE_GS3 208
#define NOTE_A3 220
#define NOTE_AS3 233
#define NOTE_B3 247
#define NOTE_C4 262
#define NOTE_CS4 277
#define NOTE_D4 294
#define NOTE_DS4 311
#define NOTE_E4 330
#define NOTE_F4 349
#define NOTE_FS4 370
#define NOTE_G4 392
#define NOTE_GS4 415
#define NOTE_A4 440
#define NOTE_AS4 466
#define NOTE_B4 494
#define NOTE_C5 523
#define NOTE_CS5 554
#define NOTE_D5 587
#define NOTE_DS5 622
#define NOTE_E5 659
#define NOTE_F5 698
#define NOTE_FS5 740
#define NOTE_G5 784
#define NOTE_GS5 831
#define NOTE_A5 880
#define NOTE_AS5 932
#define NOTE_B5 988
#define NOTE_C6 1047
#define NOTE_CS6 1109
#define NOTE_D6 1175
#define NOTE_DS6 1245
#define NOTE_E6 1319
#define NOTE_F6 1397
#define NOTE_FS6 1480
#define NOTE_G6 1568
#define NOTE_GS6 1661
#define NOTE_A6 1760
#define NOTE_AS6 1865
#define NOTE_B6 1976
#define NOTE_C7 2093
#define NOTE_CS7 2217
#define NOTE_D7 2349
#define NOTE_DS7 2489
#define NOTE_E7 2637
#define NOTE_F7 2794
#define NOTE_FS7 2960
#define NOTE_G7 3136
#define NOTE_GS7 3322
#define NOTE_A7 3520
#define NOTE_AS7 3729
#define NOTE_B7 3951
#define NOTE_C8 4186
#define NOTE_CS8 4435
#define NOTE_D8 4699
#define NOTE_DS8 4978

#define melodyPin 3  // buzzer pin

// Super Mario Bros. main theme: one entry per note, 0 = rest
int melody[] = {
  NOTE_E7, NOTE_E7, 0, NOTE_E7,
  0, NOTE_C7, NOTE_E7, 0,
  NOTE_G7, 0, 0, 0,
  NOTE_G6, 0, 0, 0,
  NOTE_C7, 0, 0, NOTE_G6,
  0, 0, NOTE_E6, 0,
  0, NOTE_A6, 0, NOTE_B6,
  0, NOTE_AS6, NOTE_A6, 0,
  NOTE_G6, NOTE_E7, NOTE_G7,
  NOTE_A7, 0, NOTE_F7, NOTE_G7,
  0, NOTE_E7, 0, NOTE_C7,
  NOTE_D7, NOTE_B6, 0, 0,
  NOTE_C7, 0, 0, NOTE_G6,
  0, 0, NOTE_E6, 0,
  0, NOTE_A6, 0, NOTE_B6,
  0, NOTE_AS6, NOTE_A6, 0,
  NOTE_G6, NOTE_E7, NOTE_G7,
  NOTE_A7, 0, NOTE_F7, NOTE_G7,
  0, NOTE_E7, 0, NOTE_C7,
  NOTE_D7, NOTE_B6, 0, 0
};

// Mario main theme tempo: each value is a note type, played for 1000 / value ms
int tempo[] = {
  12, 12, 12, 12,
  12, 12, 12, 12,
  12, 12, 12, 12,
  12, 12, 12, 12,
  12, 12, 12, 12,
  12, 12, 12, 12,
  12, 12, 12, 12,
  12, 12, 12, 12,
  9, 9, 9,
  12, 12, 12, 12,
  12, 12, 12, 12,
  12, 12, 12, 12,
  12, 12, 12, 12,
  12, 12, 12, 12,
  12, 12, 12, 12,
  12, 12, 12, 12,
  9, 9, 9,
  12, 12, 12, 12,
  12, 12, 12, 12,
  12, 12, 12, 12
};

////////////////////////////////////////////////////////////////////////////////////////////////////

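Servo servo1;     // servo for the kid figure
Servo servo2;     // servo for comments
int sensor = A0;  // analog pin for reading sensor data
float light;
int light_value;
int state = 1;    // current stage (1-4)
float a = 1;      // a, x, y, z: keep the while loops of stages 1-4 running
float b = 1;
float x = 1;
float y = 1;
float z = 1;
int bal1 = 160;   // readings below this: the light is blocked
int bal2 = 160;   // readings above this: the light is visible again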

////////////////////////////////////////////////////////////////////

void setup() {
  Serial.begin(9600);
  pinMode(3, OUTPUT);      // buzzer
  servo1.attach(8);
  servo2.attach(9);
  pinMode(sensor, INPUT);  // data pin for ambient light sensor
  pinMode(13, OUTPUT);     // sensor's LED
  pinMode(12, OUTPUT);     // house LAMP
  pinMode(7, OUTPUT);      // TV LED
}

////////////////////////////////////////////////////////////////////

void loop() {
  digitalWrite(13, HIGH);  // keep the night light (the sensor's LED on pin 13) on

  if (state == 1) {
    Serial.println("Roger: I want to stay up late for playing games");
    Serial.println("Roger: But I'm afraid about my Mom coming to my room");
    Serial.println("Mom: I am going to see whether Roger is asleep right now...");
    for (int i = 0; i < 6; i++) Serial.println();  // blank lines between messages

    servo1.write(0);  // kid sitting up (still need to adjust)
    servo2.write(0);
    digitalWrite(12, HIGH);  // LED of the house on
    digitalWrite(7, HIGH);   // TV on

    while (a == 1) {
      sing(1);  // play the theme while the light is unblocked
      int light_value = analogRead(A0);
      delay(100);
      if (light_value < bal1) {  // mom blocks the light: everything turns off
        a = 0;
        x = 1;
        sing(0);
        servo1.write(85);  // kid lies down (still need to adjust)
        servo2.write(85);
        digitalWrite(12, LOW);
        digitalWrite(7, LOW);
        state = 2;
      }
    }
  }

  else if (state == 2) {
    Serial.println("Roger: OH MY GOD, she is coming!!!");
    for (int i = 0; i < 6; i++) Serial.println();
    digitalWrite(12, LOW);
    digitalWrite(7, LOW);

    while (x == 1) {
      int light_value = analogRead(sensor);
      delay(100);
      if (light_value > bal2) {  // light visible again: mom has reached the kid's door
        state = 3;
        x = 0;
        y = 1;
      }
    }
  }

  else if (state == 3) {
    Serial.println("Mom: Oh, he is sleeping! What a good little boy!");
    for (int i = 0; i < 6; i++) Serial.println();
    digitalWrite(12, LOW);
    digitalWrite(7, LOW);

    while (y == 1) {
      int light_value = analogRead(sensor);
      delay(100);
      if (light_value < bal1) {  // mom blocks the light again on her way back
        y = 0;
        z = 1;
        state = 4;
      }
    }
  }

  else if (state == 4) {
    Serial.println("Roger: See, with this added technology, I will always be safe and be able to play games for as long as I want");
    for (int i = 0; i < 6; i++) Serial.println();
    digitalWrite(12, LOW);
    digitalWrite(7, LOW);

    while (z == 1) {
      int light_value = analogRead(sensor);
      if (light_value > bal2) {  // mom is back in her room: reset to stage 1
        state = 1;
        z = 0;
        servo1.write(0);  // kid sits up again (still need to adjust)
        servo2.write(0);
        digitalWrite(12, HIGH);
        digitalWrite(7, HIGH);
        sing(1);
        a = 1;
      }
    }
  }
}

/////////////////////////////////////////////////////////////////////////////////////////////////////

void sing(int b) {
  if (b == 1) {
    int size = sizeof(melody) / sizeof(int);
    for (int thisNote = 0; thisNote < size; thisNote++) {
      int sensorL = analogRead(A0);
      if (sensorL > bal2) {
        // to calculate the note duration, take one second
        // divided by the note type.
        // e.g. quarter note = 1000 / 4, eighth note = 1000 / 8, etc.
        int noteDuration = 1000 / tempo[thisNote];
        buzz(melodyPin, melody[thisNote], noteDuration);
        // to distinguish the notes, set a minimum time between them.
        // the note's duration + 30% seems to work well:
        int pauseBetweenNotes = noteDuration * 1.30;
        delay(pauseBetweenNotes);
        // stop the tone playing:
        buzz(melodyPin, 0, noteDuration);
      } else {
        break;  // light blocked: stop; the song restarts from note 0 on the next call
      }
    }
  }
  // sing(0) plays nothing
}

void buzz(int targetPin, long frequency, long length) {
  if (frequency <= 0) return;  // rest (0): no tone, and avoids dividing by zero below
  long delayValue = 1000000 / frequency / 2;  // calculate the delay value between transitions
  //// 1 second's worth of microseconds, divided by the frequency, then split in half since
  //// there are two phases to each cycle
  long numCycles = frequency * length / 1000;  // calculate the number of cycles for proper timing
  //// multiply frequency, which is really cycles per second, by the number of seconds to
  //// get the total number of cycles to produce
  for (long i = 0; i < numCycles; i++) {  // for the calculated length of time...
    digitalWrite(targetPin, HIGH);  // write the buzzer pin high to push out the diaphragm
    delayMicroseconds(delayValue);  // wait for the calculated delay value
    digitalWrite(targetPin, LOW);   // write the buzzer pin low to pull back the diaphragm
    delayMicroseconds(delayValue);  // wait again for the calculated delay value
  }
}
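For readers tracing buzz(): it builds the square wave by hand, so pitch and length come entirely from two integer calculations. The desktop C++ snippet below repeats them (the function names are hypothetical) for the first note of the song, NOTE_E7 = 2637 Hz, at a tempo value of 12 (1000 / 12 = 83 ms).

```cpp
#include <cassert>

// Half of one period in microseconds: the pin stays HIGH this long, then LOW.
long halfPeriodMicros(long frequencyHz) {
  assert(frequencyHz > 0);  // a rest (0) would divide by zero
  return 1000000 / frequencyHz / 2;
}

// Number of HIGH/LOW cycles needed to fill lengthMs milliseconds.
long cyclesFor(long frequencyHz, long lengthMs) {
  return frequencyHz * lengthMs / 1000;
}
```

For NOTE_E7 that gives a 189 µs half-period and 218 cycles, about 218 × 2 × 189 µs ≈ 82 ms of sound, slightly under the requested 83 ms because of integer truncation (on the board, the digitalWrite() calls add a little extra time).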