Forest Box: Build Your Own Forest – Katie – Inmi

CONCEPTION AND DESIGN:

In terms of interaction experience, our idea was to create something similar to VR: users perform physical interactions in the real world, and those interactions cause changes on the screen. We explored several options during the design process. Inspired by Zheng Bo's work *72 Relations with the Golden Rod*, we first wanted to attach multiple sensors to an actual plant so that users could explore the different relations they can have with plants. For example, we wanted to attach an air pressure sensor to the plant so that whenever someone blew on it, the video shown on the computer screen would change, and to add a pressure sensor that someone could step on.

But we ended up not choosing these options because the equipment room did not have most of the sensors we needed. We then selected a color sensor, a touch sensor, a distance sensor and a motion sensor. However, we did not think carefully about the physical interactions before hooking them up. The first problem was that the motion sensor did not work the way we wanted: it only senses motion and cannot identify specific gestures. As a result, it sometimes conflicted with the distance sensor, so we gave it up. This left us at an awkward stage: we had three sensors hooked up and different videos playing according to the sensor values, but we had difficulty linking them together.

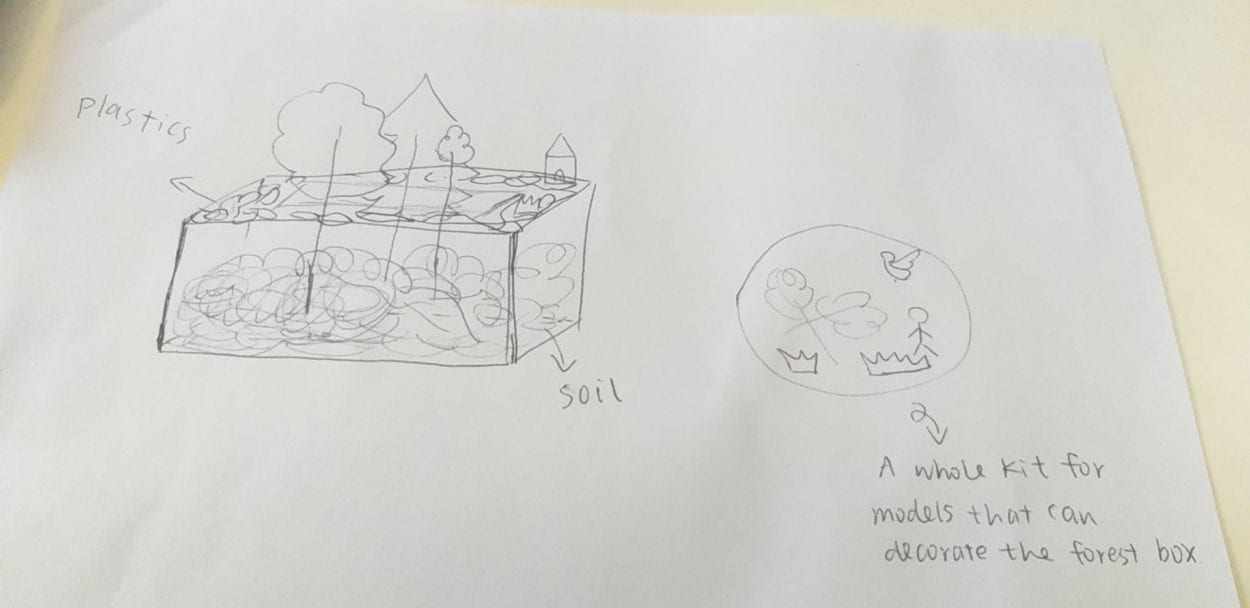

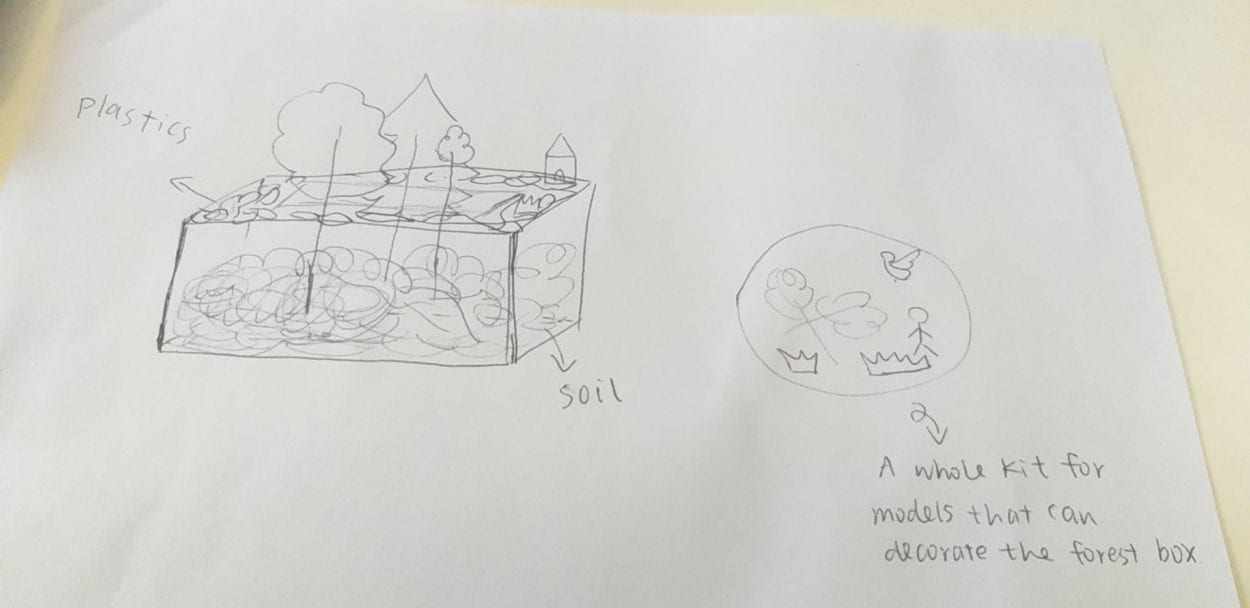

After asking Inmi for advice, our final solution was to make a forest box that users can interact with, where the visuals on the screen change according to the kind of interaction. If you place fire into the box, a video of a forest fire is shown on the screen. If you throw plastics into the box, a video of plastic waste is shown. If you pull out the trees, a video of a forest being cut down is shown. Through these interactions, we want to convey the message that every individual's small harm to the earth can add up to huge damage. For the first scene, we use a camera feed with effects to attract the user's attention.
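The mapping from interactions to videos can be expressed as a small decision function. This is a minimal sketch in plain Java (Processing sketches are Java underneath). The `Scene` names, the class `SceneSelector`, the priority order when several things happen at once, and falling back to the camera scene when nothing is detected are all assumptions for illustration; the three flags stand in for whatever sensor logic actually detects each action.

```java
// Hypothetical scene names; only the three issue videos and the camera intro come from the project.
enum Scene { CAMERA_INTRO, FOREST_FIRE, PLASTIC_WASTE, DEFORESTATION }

final class SceneSelector {
    private SceneSelector() {}

    /**
     * Picks the video for the current frame.
     * Assumption: if several actions are detected at once, fire wins,
     * then plastics, then pulled trees.
     */
    static Scene choose(boolean fireInBox, boolean plasticInBox, boolean treesPulled) {
        if (fireInBox) return Scene.FOREST_FIRE;
        if (plasticInBox) return Scene.PLASTIC_WASTE;
        if (treesPulled) return Scene.DEFORESTATION;
        // Assumption: with nothing detected, stay on the attention-grabbing camera scene.
        return Scene.CAMERA_INTRO;
    }
}
```

Returning one value per frame keeps the video choice in a single place, so adding another interaction means adding one flag and one branch.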

FABRICATION AND PRODUCTION:

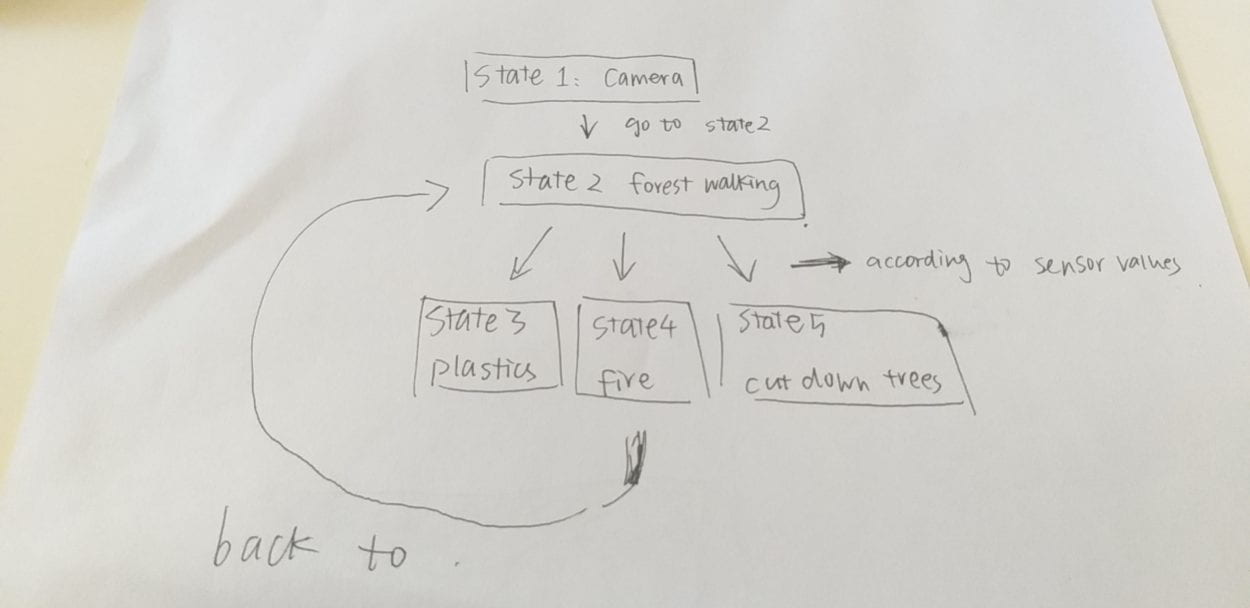

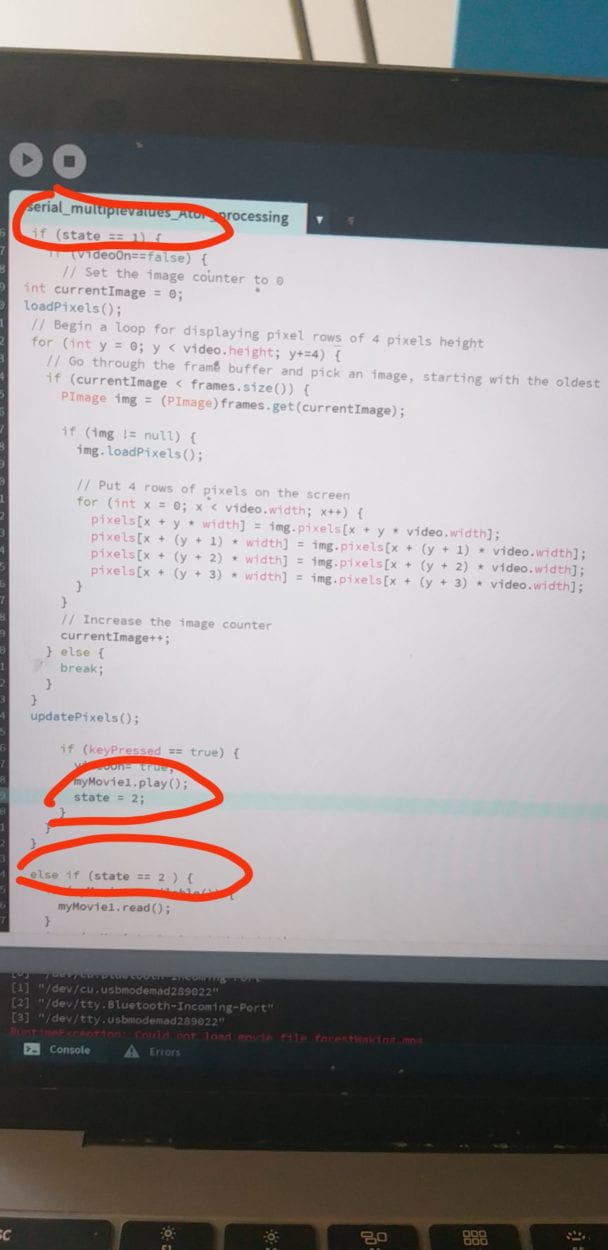

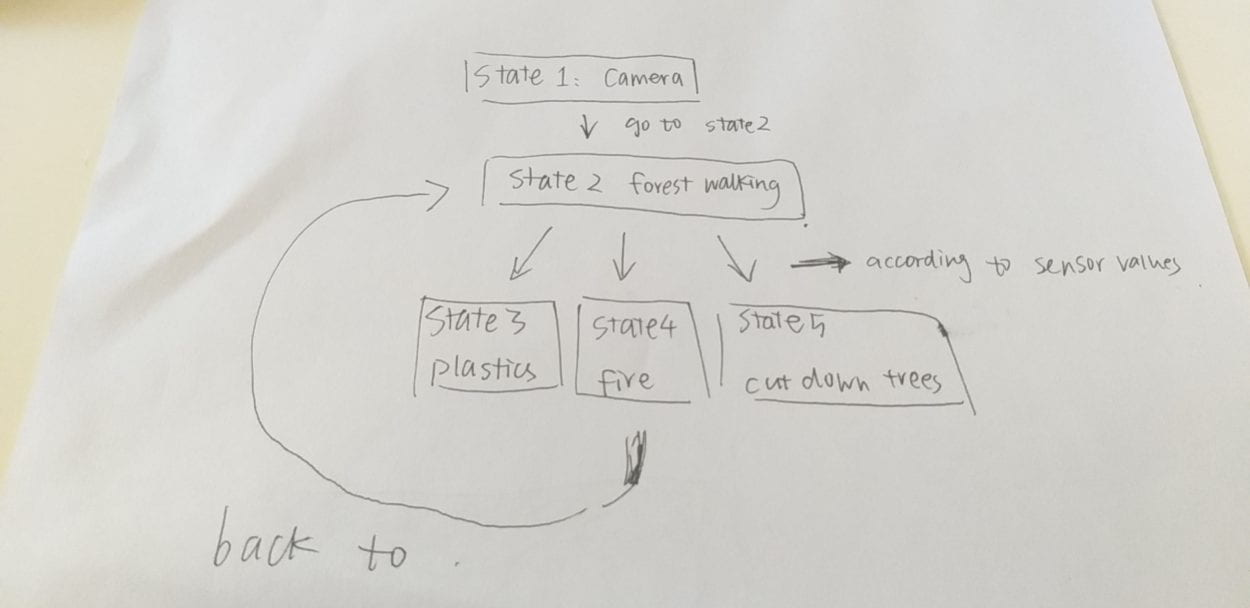

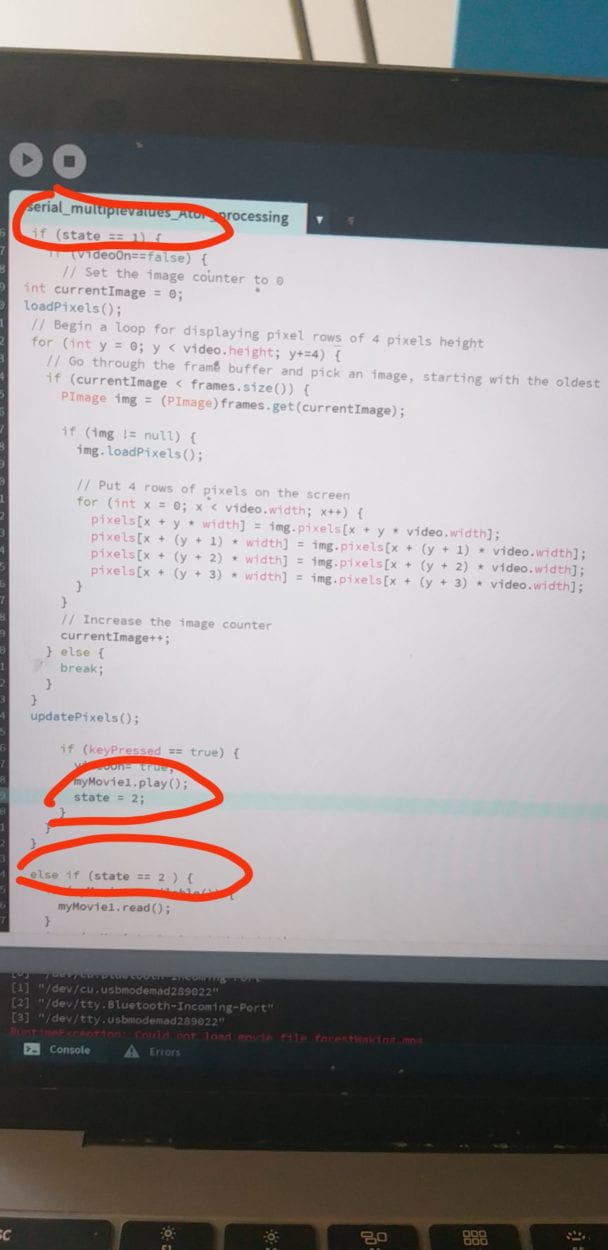

The most significant step was first to hook up the sensors and get the Arduino and Processing code communicating. The most challenging part for me was figuring out the logic of my Processing code. I did not know how to start at first because there were so many conditions and outcomes, and I did not know how to arrange the if statements to get the output I wanted. What helped most was drawing a flow diagram of how each video would be played.
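The Arduino-to-Processing link can be sketched as follows, assuming the Arduino prints one line of comma-separated readings per loop (for example color, touch and distance values, in that hypothetical order) ending with `Serial.println`. In Processing, each line would typically be read with the serial library's `readStringUntil('\n')`; the plain-Java parser below, with the hypothetical class name `SensorLineParser`, only shows how such a line might be split and validated before the values drive any if statements.

```java
final class SensorLineParser {
    private SensorLineParser() {}

    /**
     * Parses one line such as "512,1,230" into integers.
     * Returns null for missing, incomplete or garbled lines (common right after the
     * port opens), so the caller can keep the previous values for that frame.
     */
    static int[] parse(String line, int expectedCount) {
        if (line == null) return null;
        // trim() also removes the "\r" that Serial.println sends before "\n"
        String[] parts = line.trim().split(",");
        if (parts.length != expectedCount) return null;
        int[] values = new int[expectedCount];
        try {
            for (int i = 0; i < expectedCount; i++) {
                values[i] = Integer.parseInt(parts[i].trim());
            }
        } catch (NumberFormatException e) {
            return null; // a non-numeric field means the line arrived corrupted
        }
        return values;
    }
}
```

Rejecting a bad line instead of guessing keeps one corrupted reading from briefly triggering the wrong video.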

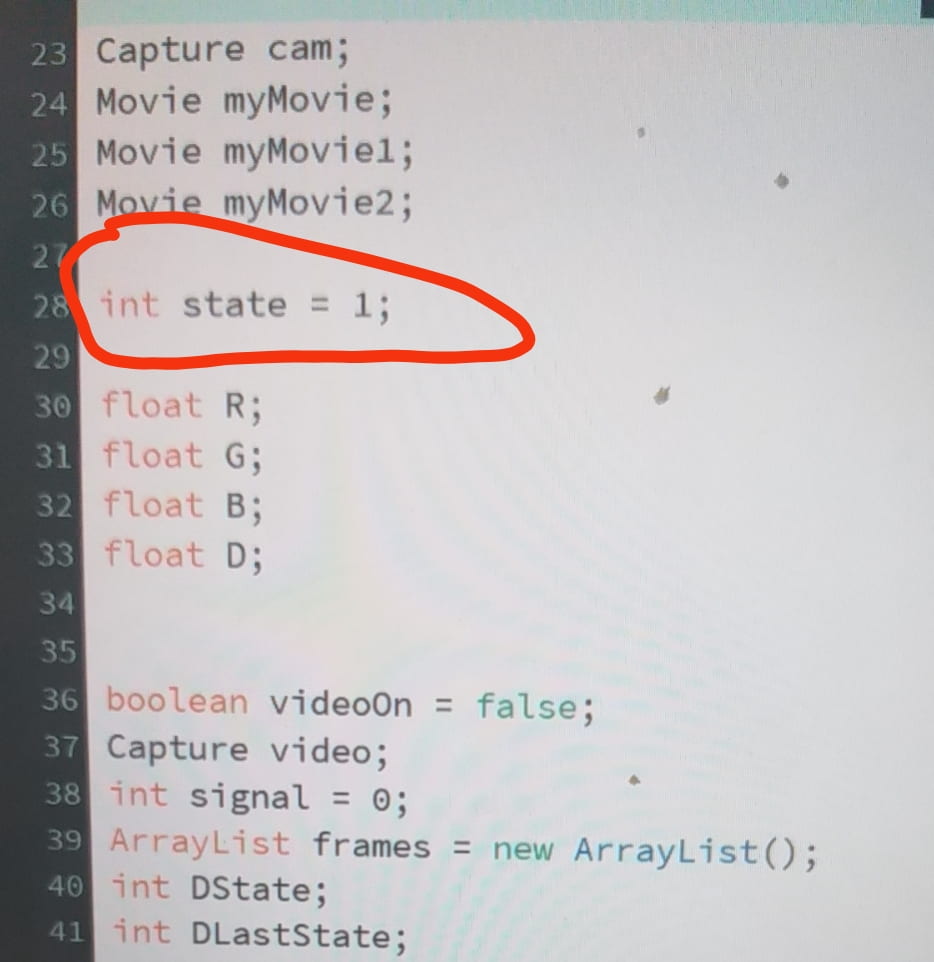

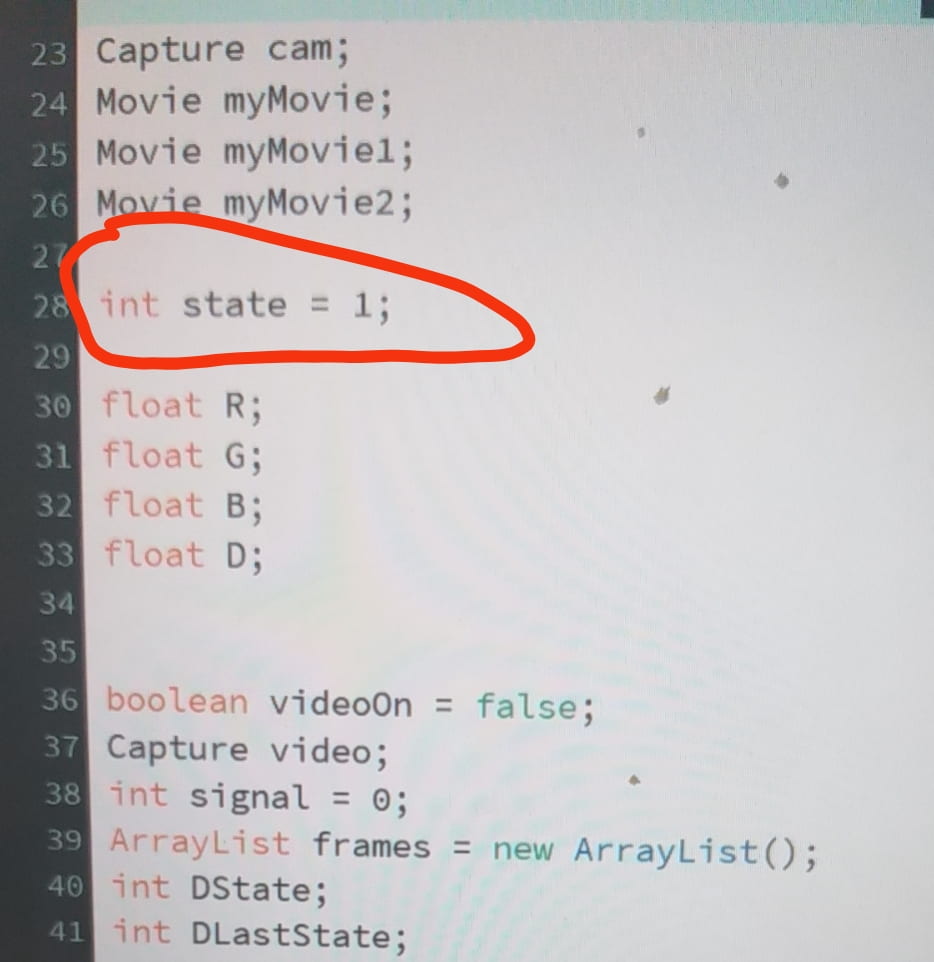

Then we defined a new variable called `state`, with an initial value of 1.

With that, the logic became clear and the work became simpler. I just needed to write down what each state does separately and then connect the states together. Although the code within one state can be lengthy and difficult, the overall structure was simple and clear to me.
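A state machine along these lines might look like the following minimal sketch. Only the `state` variable starting at 1 comes from the project; the other state numbers, the class `ForestBoxStateMachine`, its inputs, and the rule that an issue video returns to the box when it finishes are hypothetical. Each `case` handles one state and only decides where that state goes next, which is what keeps the overall structure readable even when the code inside a state grows long.

```java
final class ForestBoxStateMachine {
    // Hypothetical numbering; only "state starts at 1" is from the project.
    static final int CAMERA_INTRO = 1;   // first scene: camera feed with effects
    static final int FOREST_BOX = 2;     // waiting for the user to act on the box
    static final int FOREST_FIRE = 3;
    static final int PLASTIC_WASTE = 4;
    static final int DEFORESTATION = 5;

    private int state = CAMERA_INTRO;    // initial value is 1

    int current() {
        return state;
    }

    /** Called once per frame; each case only decides where its own state goes next. */
    void update(boolean startRequested, boolean fire, boolean plastic,
                boolean treesPulled, boolean videoFinished) {
        switch (state) {
            case CAMERA_INTRO:
                if (startRequested) state = FOREST_BOX;
                break;
            case FOREST_BOX:
                if (fire) state = FOREST_FIRE;
                else if (plastic) state = PLASTIC_WASTE;
                else if (treesPulled) state = DEFORESTATION;
                break;
            case FOREST_FIRE:
            case PLASTIC_WASTE:
            case DEFORESTATION:
                // Assumed behaviour: when an issue video ends, go back to the box.
                if (videoFinished) state = FOREST_BOX;
                break;
            default:
                state = CAMERA_INTRO; // unexpected value: recover to the first scene
        }
    }
}
```

Because box interactions are only checked inside the `FOREST_BOX` case, a sensor reading during the intro cannot accidentally start an issue video.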

For example, we wanted to switch from state 1 to state 2 with a key press. However, the state 2 video only played while the key was held down; as soon as the key was released, the state turned back to 1. To solve this problem, I created a boolean that toggles the video on and off.
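The key-press problem can be reproduced and fixed in a few lines. A likely cause, assuming the sketch checked Processing's `keyPressed` variable inside `draw()`, is that this variable is true only while a key is held down; the `keyPressed()` callback, by contrast, runs once per press, which makes it a natural place to flip a boolean. The plain-Java model below uses hypothetical names (`VideoToggle`, `onKeyPressed`) rather than the project's own code.

```java
final class VideoToggle {
    private boolean videoOn = false; // the latch: keeps its value after the key is released

    /** Naive version: the state follows the key, so releasing it drops back to state 1. */
    static int stateWhileHeld(boolean keyHeld) {
        return keyHeld ? 2 : 1;
    }

    /** Call once per key press (e.g. from Processing's keyPressed() callback). */
    void onKeyPressed() {
        videoOn = !videoOn;
    }

    /** The state now depends on the latch, not on whether a key is currently held. */
    int state() {
        return videoOn ? 2 : 1;
    }
}
```

With the latch, pressing the key once starts the state 2 video and pressing it again returns to state 1, regardless of how long the key is held.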

At first, we wanted different users to run toward the screen from far away, wearing different costumes representing plastics and plants; whichever costume reached the screen first would determine which video to play. However, during user testing, our users said, first, that the costumes were of poor quality, and second, that the act of running was not interactive enough. Our professor also said that there was no link between what the users were physically doing (running) and what was happening on the screen (playing educational videos).

So after thinking through this problem, we created a forest box to represent a forest, with different elements that users can interact with.

In this way, what the user does physically is connected to what is shown on the screen.

CONCLUSIONS:

The goal of our project is to raise people’s awareness of climate issues and to encourage them to reflect on their daily actions. The project aligns with my definition of interaction in that the output on the screen is determined by the input (the users’ physical interaction). It does not align with my definition in that there is no “thinking” process between the first and the second input: we have already given users the options they can choose, so there is not much room for exploration. I think our audience interacted with our project the way we designed it.

But there are many things we can improve. First, we could better design the physical interaction with the forest box and let users design the box the way they want. For example, we could fill the box with soil and provide different kinds of plants and other decorations, so that different users can experience planting a forest together. By placing multiple color sensors at different spots in the box, the visuals could change according to which trees are planted. Second, we could start with plastics covering the surface of the box to represent today's plastic waste; if a user removes the plastics, the visuals on the screen would change as well. Third, we could draw the videos shown on the screen ourselves.

The most important thing I’ve learned is experience design. Reflecting on my design process, I realize that, for me, it is better to think of an experience first rather than the theme of the project. Starting with a very big, broad theme makes it difficult for me to design the experience. But if you first think of an experience, for example a game, it is easier to adapt that experience to your theme.

The second thing I’ve learned is coding skills. With more and more coding experience, I think my coding logic has improved. For this project, we had many conditions determining which video to play, so there were a lot of if statements. At first it felt like a mess and I did not know how to start; then Tristan asked me to step away from the code for a moment and think through the logic by drawing a flow diagram. After doing this, I was much clearer about what I was going to do.

I think climate change is certainly a very serious issue that everybody should care about, because it affects our daily lives. “Nature does not need humans. Humans need nature.”