Title: D.R.E.A.M.

Subtitle: Data Rules Everything Around Me

Description: D.R.E.A.M. brings you into the middle of Shanghai, where neck-craning skyscrapers and bustling passers-by surround you. It seems like just another ordinary day in Shanghai, and you might wonder what is so special about this cityscape. As time goes by, you begin to notice something strange about your surroundings. Look closely and you can spot a number of buildings (including the Oriental Pearl Tower) that are gradually disintegrating. The background noise sounds a bit different now, as footsteps and voices become slightly distorted. The sky peels off, revealing the black screen beneath, and all the people around you begin to disintegrate too. Everything you see now is, quite literally, data.

Location: Lujiazui Skywalk, Shanghai

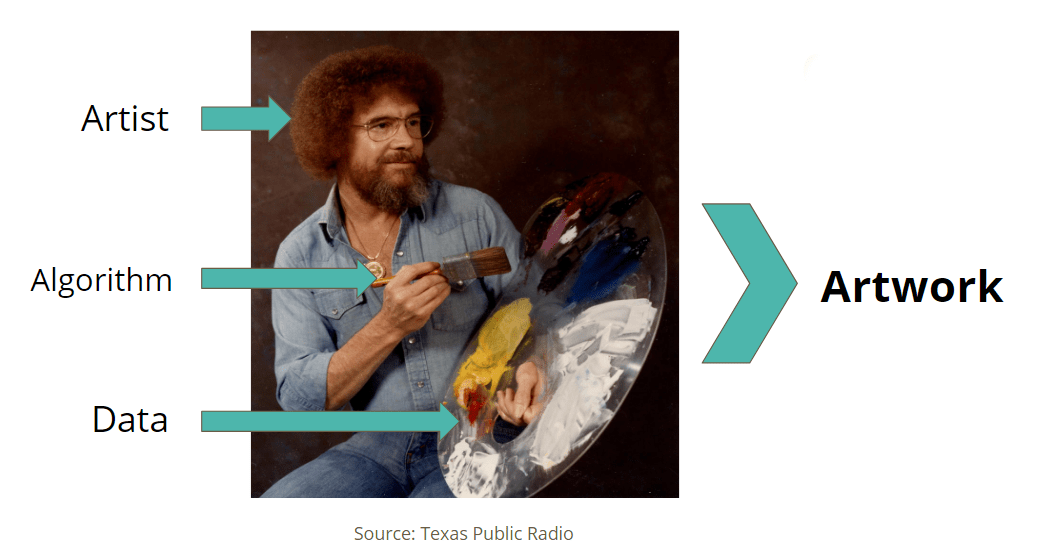

Goal: Our team tried to make maximum use of the glitch effect in a VR experience, in the audio as well as the visuals. As the title indicates, our video touches on the idea that we are surrounded by data, or by anything that can be reduced to data. We were partly influenced by the Matrix trilogy and by a thought experiment known as “Brain in a Vat,” in which I might actually be a brain hooked up to a complex computer system that can flawlessly simulate experiences of the outside world. We hope our audience experiences an unreality so realistic that they feel as though they are in a simulation within a simulation. The title is also a subtle, intended pun: what rules everything around me, at least while I am wearing a VR headset, is nothing but data.

Filming: Amy, Ryan, and I went to the skywalk twice to shoot 360-degree video. On the first shoot, the weather was fine but a little too sunny, so there was a slight lens flare in the footage. We filmed at two spots: one in a less crowded, park-like area and the other in the middle of the skywalk. Since we chose a late Tuesday morning, there were fewer people than usual. The whole process went quite smoothly, as the Insta360 Pro 2 camera has a straightforward user interface. For some reason, the footage we took in the middle of the skywalk later turned out to be corrupted, which was a bit of a downer. The other footage looked good, but the tip of an antenna peeked into the very top of the frame. On the second shoot, we made no mistakes and got full footage of both spots. The weather was quite cloudy, which suited us better, and luckily nobody disturbed our shooting. A few people showed interest, but most passers-by simply went on their way.

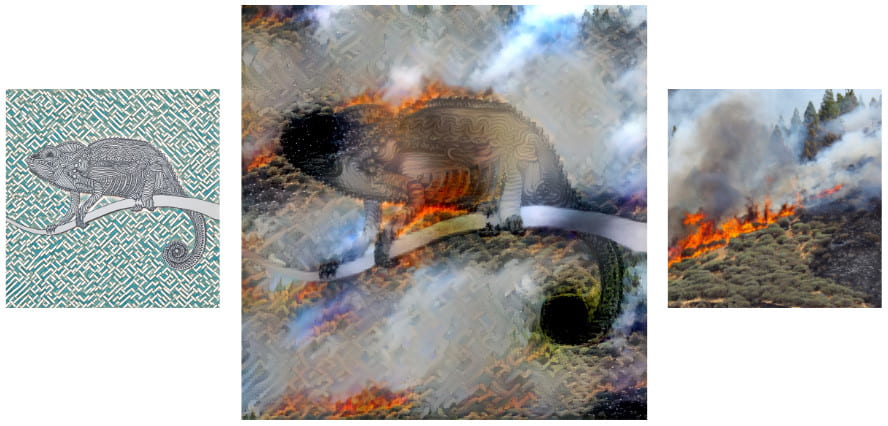

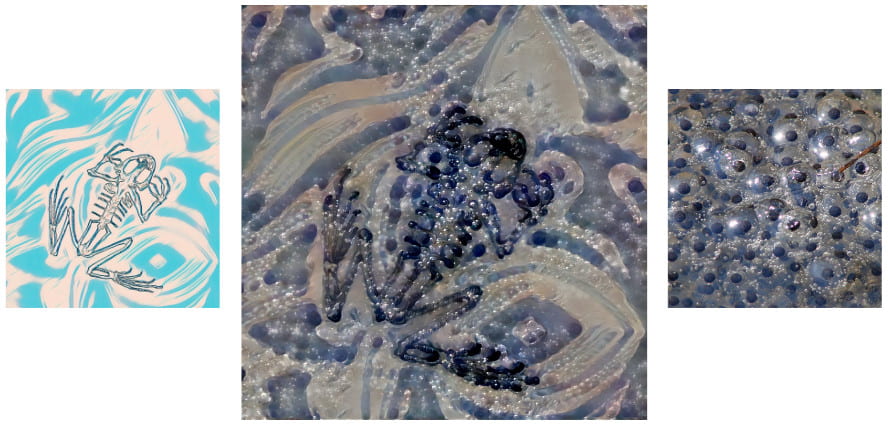

Post-Production: The first thing we did after the shoots was stitch the video files together. We watched the videos on the Oculus Go to check how they looked in 3-D. They were highly immersive without any additional editing; they were already quite powerful on their own. We decided to use the second video we took on the skywalk, as it had a greater variety of elements that could be manipulated. Since we used only Adobe Premiere Pro for the visuals, the effects and tools available to us were somewhat limited. Most effects not labelled “VR” could not be applied to our files, because those effects only work on flat footage. At first, we applied a glitch effect to the entire video just to see how it looked. With the effect covering the whole frame, the stereoscopic 3-D image was barely noticeable. So we traced a mask around each building to restrict where the effect kicked in: a bit of manual labor, but totally worth it. We adjusted the parameters separately for each building so that their glitch effects look distinct from one another rather than identical. Later in the video, the glitch becomes contagious, so the sky and the people are distorted as well and gradually disintegrate into particles. One area for improvement, I think, is to make more use of a man who looks the audience straight in the face. In the later part of the video, a man stands in front of the camera and films it. We tried to add more effects to him, but realized we would need After Effects to achieve what we had in mind (for example, making him backflip continuously). Many people who watched our demo told us the video would be more interesting with spatial audio.
I tried to add spatial audio several times but could not get a satisfactory result. We positioned sci-fi sound effects on each building as audio cues hinting where to look; however, there was no significant difference between the spatial audio version and the stereo version. Given more time, we would definitely work more on the spatial audio so that we could direct our audience to look where we want them to. Overall, we successfully visualized what we envisioned, but could not make the most of the audio.

Reflection: Throughout the semester, I really enjoyed learning both the theory and the practice of immersive media. The new concepts and terms were a little confusing at first, but they became clearer as we began working on our own project. During the show, it felt great to have our efforts recognized when we saw so many “wow” faces among our testers. The most important thing I learned in this course is to think in 3-D; all my filming and video-editing skills had been limited to the flat 2-D screen, and so had my visual imagination. This course helped me add a new dimension to the canvas of my mind. I now understand better how VR/AR actually works, and I can appreciate the careful thought and hard work behind the scenes of virtual reality.

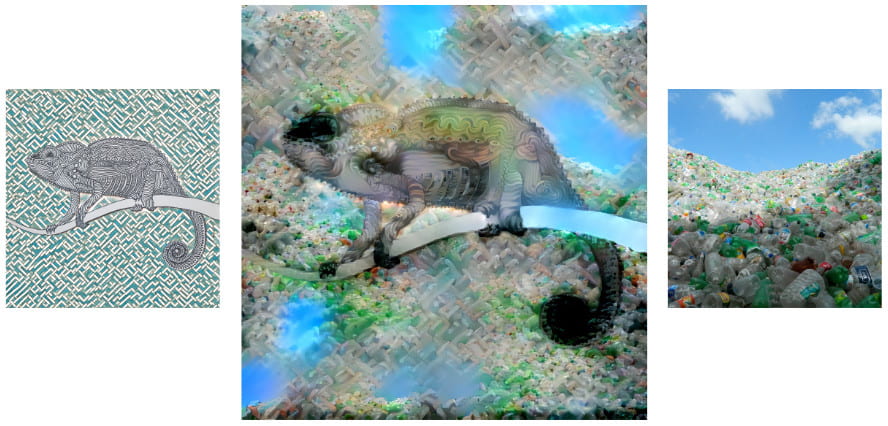

The resolution of this

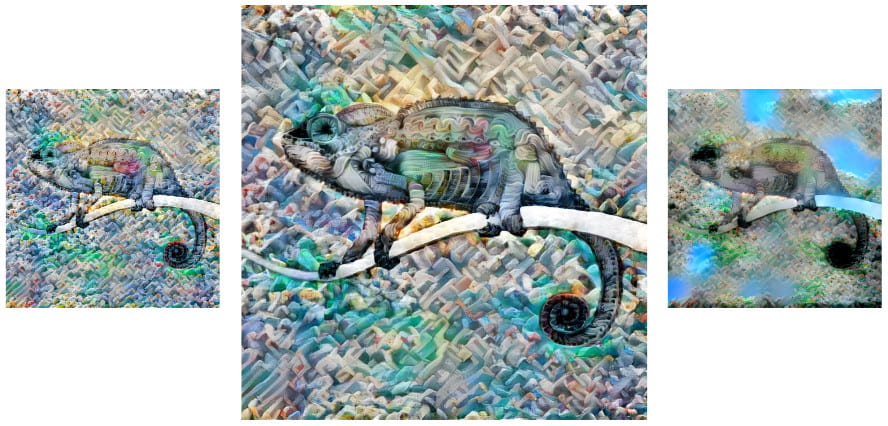

The resolution of this