The idea

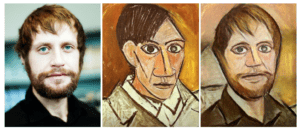

I really hope to combine what I have learned in class with my interests in daily life. As I said in the first class of this semester, I am interested in using photography or video as art forms to express myself. At the same time, I want to make the interactive process fun and easy for people to use. It would be even better if my project had some real-life use.

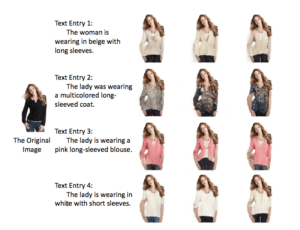

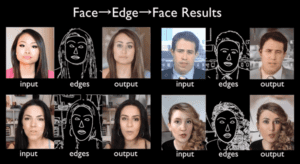

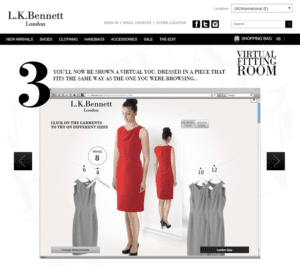

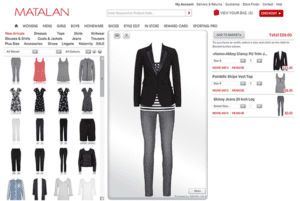

Then I landed on the idea of creating an outfit generator. I would like to build a platform, either real-time or non-real-time, where people can select the style they like. It could take two forms. In the first, the user simply types in the kinds of tops and bottoms they want, and the system generates a predicted outfit image. In the second, the user inputs an image, and the system generates the predicted outfit on the user's body. I will work out a more detailed plan later on.

I think everyone needs some kind of visual fitting system in daily life to help them select outfits. In fact, some online shopping websites have already implemented this kind of function. In the future, I hope everyone can have this kind of tool, powered by AI technology.

Action Plan

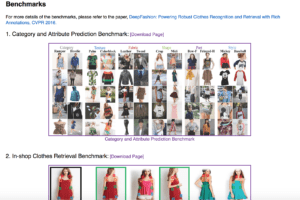

First of all, I plan to search online for similar work, including the models, the datasets, and any special techniques used, for reference. Then, based on this initial research, I will dive deeper into the areas I am interested in.

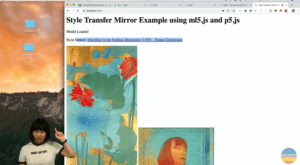

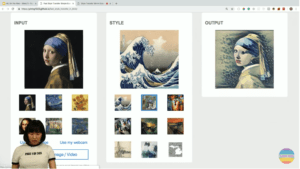

I scanned the major fashion-related interactive machine learning projects and found one research paper highly related to my initial idea. I requested the dataset and found out that the model they used is called fashionGAN. There may be other models that could generate similar results, and that is the part I am going to research further.

Initial Findings

After taking a closer look at the model I found, I learned that it is based on Torch and PyTorch, neither of which I have any experience with. That could make this project hard for me to implement, so I would like to find more related sources or models and also rethink the whole workflow for this project.

Reference

https://github.com/zhusz/ICCV17-fashionGAN#complete-demo

http://mmlab.ie.cuhk.edu.hk/projects/DeepFashion/FashionSynthesis.html