Project Name: Namaste

Description

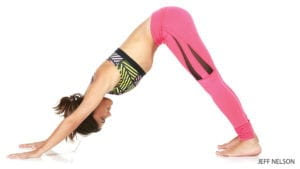

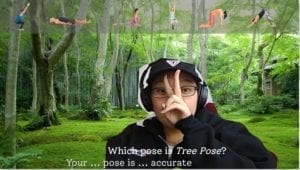

For my final project, I wanted to create an interactive yoga teaching application for people unfamiliar with yoga. Users chose from six images of yoga poses and guessed which one matched the background on the screen and the pose name shown at the bottom. The six poses were cobra, tree, chair, corpse, downward dog, and cat.

My goal was not only to get users to draw the connection between each pose's name and the animal or object it is named after, but also to make them feel immersed in the natural settings of the animals and objects that inspired these poses.

Inspiration

I was thinking about games and body movements and positions when I remembered one of my favorite childhood games, WarioWare: Smooth Moves. The moments that stuck with me most were the pose imitation levels. The point of the game was to complete simple tasks within 3 seconds, which quickly became stressful and fast-paced, so the random "Let's Pose" breaks, where players simply had to copy the pose on the screen, felt peaceful by comparison. That gave me the idea of having people do yoga poses as well.

While researching which poses to incorporate into my project, I found it interesting that all of them were named after animals, objects, and similar things. As I looked into it more, I learned that one of yoga's main principles is to embrace nature and the environment you are in. This, along with how much I enjoyed building the immersive environment for my midterm project, made me want users to feel immersed in each pose's environment and truly understand the context behind its name.