I really like the “StyleTransfer_Video” example, and I think it could have very interesting artistic uses. The video aspect is fun and interactive, and I like the stylistic possibilities.

https://ml5js.github.io/ml5-examples/p5js/StyleTransfer/StyleTransfer_Video/

How it works

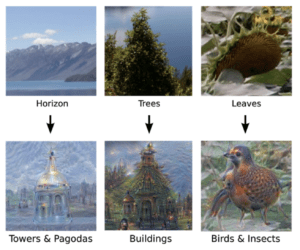

The program uses a neural network that was trained ahead of time on a “style” image to restyle each frame from the webcam. It applies the color scheme and patterns of the style image to whatever the webcam captures, so the output looks surprisingly close to the style while the webcam subject stays identifiable.
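
To make that concrete, here is a rough sketch of what a p5.js sketch along these lines might look like. I'm assuming the older ml5.js 0.x API (`ml5.styleTransfer` and `transfer`), that p5.js and ml5.js are already loaded in the page, and that a pre-trained style model sits in a folder I've called `models/udnie`; that path and the canvas size are placeholders, not something taken from the example's source.

```javascript
// Assumes p5.js (global mode) and ml5.js 0.x are loaded via <script> tags.
let style;      // ml5 style-transfer model
let video;      // webcam capture
let resultImg;  // hidden <img> element holding the latest stylized frame

function setup() {
  createCanvas(320, 240);
  video = createCapture(VIDEO);
  video.hide(); // only the stylized output is drawn
  resultImg = createImg('', 'stylized frame');
  resultImg.hide();
  // 'models/udnie' is a placeholder path to a pre-trained style model.
  style = ml5.styleTransfer('models/udnie', video, modelReady);
}

function draw() {
  // Paint the most recent stylized frame onto the canvas.
  image(resultImg, 0, 0, 320, 240);
}

function modelReady() {
  // Kick off the first transfer once the model has loaded.
  style.transfer(gotResult);
}

function gotResult(err, img) {
  if (err) {
    console.error(err);
    return;
  }
  // Show the new frame, then immediately request the next one.
  resultImg.attribute('src', img.src);
  style.transfer(gotResult);
}
```

The callback loop in `gotResult` is what makes it feel live: each finished frame immediately triggers the next transfer, so the picture updates as fast as the model can run.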

Here was the “style” image:

Here are some webcam screencaps of me using this style:

Potential uses

I think it could be a cool public art piece, especially in an area like M50 with a lot of graffiti or outdoor art. The webcam feed could be displayed on a large screen that places passersby into the art of the location, taking its stylistic inspiration from the art pieces around it. I also think it could be a cool way to make “real-time animations,” using cartoon or anime styles to stylize webcam footage. If simple editing features such as slo-mo, jump cuts and zoom were added to the code, the program could become an interactive game that “directs” people and helps them create their own “animated film.”

I’m also curious how the program would work if the “style” images were screencaps of the webcam itself. Would repeated screencaps of the webcam fed through it as the “style” create trippy, psychedelic video? I would love to find out!