Overview:

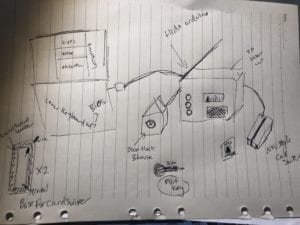

Project display, including the Processing images, the house and key, the radio, and the card swiper with the NYU card (not pictured: the paper English bios).

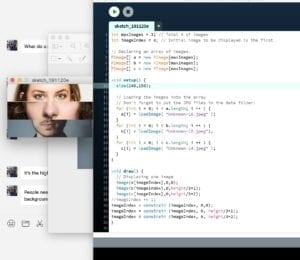

Over the phases of design, fabrication, and production, our project transformed completely. Originally, it was a game in which people placed their hands against different gloves. The gloves were linked to a Processing sketch that rapidly changed a face split into three sections: the eyes, the nose, and the mouth. Each time someone pressed a glove, the sketch cycled randomly through the arrays of face sections. At first, the eye section used the webcam to show the user's own face mixed in with the faces of different students. During user testing, however, we realized that the interaction was fairly boring and lacked a deeper meaning and experience. We received a lot of feedback about its problems: the live webcam was not accessible to users of different heights, the connection between the users' actions and the meaning was not explicit enough, and the interaction itself was simplistic and not immersive. The responses to our message and theme, trying to understand and get to know groups of people at our school whom most students don't really know, were positive overall. In particular, professors and students suggested incorporating sound and interviews so that people could tell their own stories.

The project we presented on Thursday is an interactive piece intended to share the stories of the workers at NYUSH with the students and faculty, who often overlook them as people and see them solely as school staff. It uses a Processing sketch to control sound and visuals: interview clips that we recorded ourselves, and workers' faces that we cut up and assembled into three sections, as in our original project. Christina conducted most of the interviews, took the pictures, and did fabrication, and we both contributed to the design.
I wrote the original code and, with help from various professors, fellows, and LAs, adapted it to this project by adding sound arrays. I also fabricated the buttons and helped Christina with the general fabrication of each element. In addition, I wired the circuit and cut the audio clips and photos to load into the different arrays. Our original inspiration came from face-swapping technology such as Snapchat filters and other face-swapping programs, but we adapted the idea to fit our goal of sharing the stories of workers who are often overlooked. I also came across code similar to mine (in how it used picture arrays), which inspired my code and specifically reminded me to place constraints on the images. Its purpose was even more interesting: its author planned to use his interaction project to raise awareness about sexual assault survivors, which started me thinking about how to tell a story through Processing.
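Mashing up faces depends on each of the three sections getting its own random index. The Java sketch below illustrates that idea under stated assumptions; it is not the project's code. `FaceMixer`, `safeIndex`, and `randomFace` are hypothetical names, and `IMAGE_COUNT = 7` mirrors `maxImages` in the Processing sketch. With seven images per section, independent picks give 7 × 7 × 7 = 343 possible faces.

```java
import java.util.Random;

// Hypothetical helper (not from the project): picks an independent image
// index for each face section so one face can mix several workers.
class FaceMixer {
    // Assumed to match maxImages in the Processing sketch.
    static final int IMAGE_COUNT = 7;
    static final int SECTIONS = 3; // eyes, nose, mouth

    // Same clamping behavior as Processing's constrain() for ints.
    static int constrain(int value, int low, int high) {
        return Math.max(low, Math.min(high, value));
    }

    // Keeps any index, e.g. one produced by stepping forward, inside the array.
    static int safeIndex(int index) {
        return constrain(index, 0, IMAGE_COUNT - 1);
    }

    // Returns {eyeIndex, noseIndex, mouthIndex}, each drawn independently.
    static int[] randomFace(Random rng) {
        int[] indices = new int[SECTIONS];
        for (int s = 0; s < SECTIONS; s++) {
            indices[s] = rng.nextInt(IMAGE_COUNT); // 0 .. IMAGE_COUNT - 1
        }
        return indices;
    }

    // Number of distinct faces when every section is chosen independently.
    static int combinations() {
        int total = 1;
        for (int s = 0; s < SECTIONS; s++) {
            total *= IMAGE_COUNT;
        }
        return total;
    }
}
```

In Processing itself, `int(random(n))` plays the role of `rng.nextInt(n)`, and the built-in `constrain()` does the clamping.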

CONCEPTION AND DESIGN:

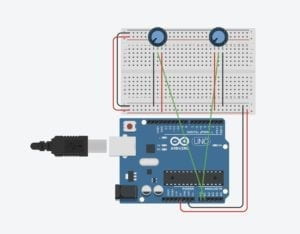

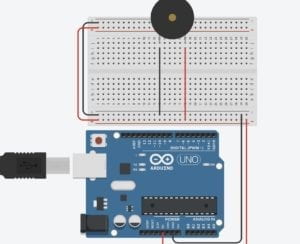

After user testing, we changed our plan. Informed by the responses, we decided to create three objects, each representing one element of the story we wanted to tell about the aiyi and other workers at NYUSH. This decision was largely shaped by suggestions about what people wanted to hear and see about the workers, including interviews, mostly in Chinese, about their work, their lives, where they are from, and so on. People also liked the idea of seeing different faces mashed up together: the mix conveys the individuality of the stories, each belonging to one worker, but also a group identity that represents the workers as a whole and reflects how NYUSH students often overlook and generalize the workers and aiyi at our school. We chose a radio to represent the workers' stories, with a button wired in to play from an array of sound files taken from interviews in which we asked workers a variety of questions about their everyday lives, both at work and outside of school. The one issue we did not anticipate with the radio was that once our class saw and heard what it did, they used only the radio and ignored the card swiper and the house and key for a few minutes. The second element was the card swiper, which came with a 2D laser-cut NYUSH keycard designed to look like a worker's card. Each time a new "worker swiped in," the card and swiper changed the images in the Processing sketch. This element was meant to bring a real part of the workers' jobs into the interaction and help people associate it with our school and the NYUSH staff. The last physical element was a house and key. When the user inserted the key, audio clips about the workers' families, homes, and hometowns played, providing a personal connection and deeper background for each worker.
This third element came directly from the feedback we got during user testing: people wanted deeper background information on each person, to understand the person and their identity and not just their face. For prototyping, we had used cardboard and real gloves to build the original project, but after we changed ideas we had little time to build a prototype, so we went straight to 2D laser cutting a box for the radio and a keycard for the swiper. We built our own card swiper from cardboard, clear tape, and black paint, with a button at the bottom of the swiper that sent a 1 to the Arduino whenever the keycard pressed on it. For the house and key, we used my room key and built the house from a 2D laser-cut box. We believed that 2D laser cutting would give us a fast, clean, professional-looking finished product while still letting us modify the final look by painting and adding details, which is how we turned the radio from a box into an old-time radio. We rejected laser cutting the card swiper because it would have too many pieces and adding a pressure plate would be too complicated. Instead, we used cardboard and tape, which still gave a fairly finished look and made building and assembly much quicker. Also, because of the button in the bottom of the swiper, we needed access to the base, which was easier with flexible cardboard. For the house and the radio, the laser-cut box was cleaner, and we could glue all sides but one, leaving easy access to the Arduino and the switch inside the house.

FABRICATION AND PRODUCTION:

Full Sketch:

While fabricating and producing the final project, we went through a lot of trial and error to get our two pressure plates (a technique we learned from Building a DIY Dance Dance Revolution) to work as planned. Both the house and the card swiper contain switches made from two wires, tinfoil, and cardboard. Each wire is attached to a piece of tinfoil, and a cardboard buffer between the two sides keeps the foil from touching. When the keycard or key applies pressure, the two pieces of foil touch, closing the circuit and sending a 1 to the Arduino. Making a buffer that was not too thick, so that light pressure would still trigger the button, took quite a bit of trial and error. By contrast, building the radio box and the house was the easiest part, because the laser cutting went well and all the pieces lined up. User testing completely changed the fabrication and physical output of our final product. Although the code for the first and second projects is quite similar, apart from the added sound arrays, the physical side of the project changed completely. Our design and production were driven mostly by two goals: making the project accessible, and creating an interaction that connects the physical objects to the meaning of the piece more directly. We created the house and the radio to represent the workers' backgrounds and stories, and we centered the meaning of the project on getting to know and understand the workers at our school who are often overlooked. The card swiper, house, and radio were also accessible to everyone regardless of height, which is why we removed the live webcam. I believe these major changes helped connect the meaning of the project to the physical interaction and gave it an interface that matched the output better, especially the card swiper with the faces and the radio with the stories.
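Foil contacts that barely touch under light pressure can flicker between 0 and 1. One common remedy, which our code does not include, is to debounce the reading: accept a new state only after it has held for several consecutive samples. The Java sketch below assumes one sample per 100 ms message and a hypothetical threshold of three samples; the `Debouncer` class is illustrative, and the same logic could run on either the Arduino or the Processing side.

```java
// Hypothetical debouncer for a foil-and-cardboard pressure switch.
// A raw reading is only accepted once it has been identical for
// `stableSamples` consecutive samples, so brief flickers are ignored.
class Debouncer {
    private final int stableSamples;
    private int stableState = 0; // last accepted state (0 = open, 1 = pressed)
    private int candidate = 0;   // raw value currently being counted
    private int count = 0;       // consecutive samples equal to `candidate`

    Debouncer(int stableSamples) {
        this.stableSamples = stableSamples;
    }

    // Feed one raw digitalRead()-style sample; returns the debounced state.
    int update(int raw) {
        if (raw == candidate) {
            count++;
        } else {
            candidate = raw; // a change starts a new count
            count = 1;
        }
        if (count >= stableSamples) {
            stableState = candidate;
        }
        return stableState;
    }
}
```

A larger threshold rejects more flicker but makes the switch feel slower; at 100 ms per sample, three samples add roughly 300 ms of delay.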
One area where our project could continue to improve is language accessibility. Compared with most other projects, ours was geared toward Chinese speakers and learners, and it would benefit from subtitles on the pictures, as Tristan suggested during our presentation. The biographies provided some useful information, but their paper format did not match the digital Processing interface.

Working Card Swiper: the photos change each time the card is swiped.

Working House Key: when the house key is fully inserted into the door lock, a clip from the sound array of background information about different workers plays.

The Radio: when the radio button is pushed, a random interview clip plays; in this one, a worker explains her working conditions and how long she has lived in Shanghai.
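Both the house key and the radio pick a random clip from their arrays, so the same clip can come up twice in a row. A small variation, not part of our project, is to re-draw whenever the pick repeats the last clip. The Java sketch below assumes the Processing sketch's file-name pattern (`family0.wav` to `family7.wav`, `fun0.wav` to `fun9.wav`); `ClipPicker` is a hypothetical name, and it returns file names rather than playing audio.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Random;

// Hypothetical helper: holds one input's clip names and picks a random
// clip, avoiding an immediate repeat when more than one clip exists.
class ClipPicker {
    private final List<String> clips = new ArrayList<>();
    private final Random rng;
    private int lastIndex = -1; // nothing played yet

    ClipPicker(String prefix, int count, Random rng) {
        for (int i = 0; i < count; i++) {
            clips.add(prefix + i + ".wav"); // e.g. "family0.wav"
        }
        this.rng = rng;
    }

    String next() {
        int index = rng.nextInt(clips.size());
        if (clips.size() > 1) {
            // Re-draw until the pick differs from the previous clip.
            while (index == lastIndex) {
                index = rng.nextInt(clips.size());
            }
        }
        lastIndex = index;
        return clips.get(index);
    }

    List<String> all() {
        return Collections.unmodifiableList(clips);
    }
}
```

For example, `new ClipPicker("family", 8, new Random()).next()` returns one of the eight family clip names.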

CONCLUSIONS:

Although our project and its meaning changed a lot throughout this process, our final goal was to share the stories of the workers and aiyi at our school, who are often misunderstood, overlooked, and even ignored. Many students, both Chinese and international, don't have the chance or don't make the effort to get to know the workers. We wanted to create an easily accessible interface for NYUSH students and faculty to hear the staff's stories told by the staff themselves, and to share the familiar faces of workers whom people often recognize but don't really know. Through interviews with many different workers at the school, including aiyi, Lanzhou workers, and Sproutworks workers, we hoped to share their stories and faces with our audience. Under our original definition of interaction, informed by "What Exactly Is Interactivity?", our project used input, processing, and output, with two actors in each interaction. The input was pressing the button or using the card or key. The processing happened across Arduino and Processing: the Arduino code and circuit read the switches, and the Processing code handled the sound and visuals. The output was the sound clips and the changing faces. Beyond that definition, however, our project also created a larger interaction that made people experience and think about what the workers were saying and what their stories are, hopefully learning their names and a bit about them. We hope the interaction included not only pushing the buttons and using the key and card, but also understanding the workers' stories and the broader message through an immersive sound experience.
Because our final project changed completely after user testing, it had no formal user testing beyond a few people we found, but our audience interacted with it mostly as expected: people used the different elements, heard many audio interviews with different workers, and seemed eager to keep listening and using the face changer. Once audience members used the card swiper and the key, they became more intrigued and kept using each element, although it took a while for them to move beyond the radio. Overall, I would adopt many of the suggestions we heard, including adding an English element and making the buttons more distinct so the audience understands there are three different options. I would also like to make the piece more experiential and interactive beyond buttons, for example by letting people click on faces or swap them on a touch screen to hear the different stories, but this idea is not fully realized. Because of the untimely setback of our first project, which we discovered in user testing, I learned to sketch, design, prototype, and fabricate (that is, realize a project idea) much faster and more efficiently, which is an important skill. I also learned the value of enjoying a project and its message: the first project's failure was probably partly due to my lack of understanding of its purpose and meaning, while the second project was much more successful because I enjoyed working on it and understood its meaning. I believe the "so what" of our project is that NYUSH students should not overlook the staff who work tirelessly to keep the building running. Beyond not being overlooked, the staff should be recognized for their work and their stories, since our students often see them as just workers and not as full people.
One of the most interesting things I learned while conducting the interviews was that all but one interviewee came from outside Shanghai, which means many of these workers are not only separated from their families but also face the harsh realities of China's hukou (household registration) system.

Arduino Code:

// IMA NYU Shanghai
// Interaction Lab
// For sending multiple values from Arduino to Processing

void setup() {
  Serial.begin(9600);
  // Pins default to INPUT; set it explicitly. Each foil switch is assumed
  // to be wired so that it reads HIGH (1) when pressed.
  pinMode(9, INPUT);
  pinMode(7, INPUT);
  pinMode(8, INPUT);
}

void loop() {
  int sensor1 = digitalRead(9);
  int sensor2 = digitalRead(7);
  int sensor3 = digitalRead(8);

  // keep this format: "sensor1,sensor2,sensor3" followed by a linefeed
  Serial.print(sensor1);
  Serial.print(",");  // put a comma between sensor values (straight quotes)
  Serial.print(sensor2);
  Serial.print(",");
  Serial.print(sensor3);
  Serial.println();   // add a linefeed after sending the last sensor value

  // Communicating too fast can cause latency in Processing;
  // this delay resolves the issue.
  delay(100);
}
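Each message from the Arduino is one line of text, such as `1,0,0`, ending with a carriage return and linefeed from `Serial.println()`. The plain-Java sketch below mirrors what `updateSerial()` does on the Processing side: trim the line, split it on commas, and accept it only if it has exactly three values. `SensorLine.parse` is a hypothetical name, and unlike the Processing code it rejects non-numeric fields instead of converting them.

```java
// Hypothetical parser for the "s1,s2,s3\r\n" lines the Arduino sends.
class SensorLine {
    static final int NUM_OF_VALUES = 3; // matches NUM_OF_VALUES in the sketch

    // Returns the parsed values, or null for an incomplete or malformed line
    // (such as a partial line read right after the port is opened).
    static int[] parse(String line) {
        if (line == null) {
            return null;
        }
        String[] parts = line.trim().split(",");
        if (parts.length != NUM_OF_VALUES) {
            return null;
        }
        int[] values = new int[NUM_OF_VALUES];
        try {
            for (int i = 0; i < NUM_OF_VALUES; i++) {
                values[i] = Integer.parseInt(parts[i].trim());
            }
        } catch (NumberFormatException e) {
            return null; // a field was not a number
        }
        return values;
    }
}
```

For example, `SensorLine.parse("1,0,1\r\n")` yields `{1, 0, 1}`, while `SensorLine.parse("0,1\n")` yields `null` because a value is missing.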

Processing Code:

// IMA NYU Shanghai
// Interaction Lab
// For receiving multiple values from Arduino to Processing

/*
 * Based on the readStringUntil() example by Tom Igoe
 * https://processing.org/reference/libraries/serial/Serial_readStringUntil_.html
 */

import processing.serial.*;
import processing.sound.*;

Serial myPort;
String myString = null;

int NUM_OF_VALUES = 3;   /** YOU MUST CHANGE THIS ACCORDING TO YOUR PROJECT **/
int PORT_INDEX = 1;      // index of the Arduino's port in Serial.list()
int[] sensorValues;      /** this array stores values from Arduino **/
int[] prevSensorValues;  // values from the previous frame

// Position of each switch in the Arduino's message
final int RADIO = 0;  // pin 9: plays "fun" clips
final int HOUSE = 1;  // pin 7: plays "family" clips
final int CARD = 2;   // pin 8: changes the face

int maxImages = 7;   // number of images per face section
int maxFamily = 8;   // family0.wav ... family7.wav
int maxFun = 10;     // fun0.wav ... fun9.wav

// One index per section, so a face can mix different workers
int eyeIndex = 0;
int noseIndex = 0;
int mouthIndex = 0;

// Three arrays of images: eyes, noses, mouths
PImage[] eyes = new PImage[maxImages];
PImage[] noses = new PImage[maxImages];
PImage[] mouths = new PImage[maxImages];

// Interview clips for the house key and the radio
ArrayList<SoundFile> familyClips = new ArrayList<SoundFile>();
ArrayList<SoundFile> funClips = new ArrayList<SoundFile>();

void setup() {
  size(768, 1024);  // size() must be the first line in setup()
  setupSerial();
  prevSensorValues = new int[NUM_OF_VALUES];

  // Load every image and sound; all files must be in the data folder
  for (int i = 0; i < maxFamily; i++) {
    familyClips.add(new SoundFile(this, "family" + i + ".wav"));
  }
  for (int i = 0; i < maxFun; i++) {
    funClips.add(new SoundFile(this, "fun" + i + ".wav"));
  }
  for (int i = 0; i < maxImages; i++) {
    eyes[i] = loadImage("eye" + i + ".jpg");
    noses[i] = loadImage("noses" + i + ".jpg");
    mouths[i] = loadImage("mouths" + i + ".jpg");
  }
}

void draw() {
  updateSerial();
  // printArray(sensorValues);  // uncomment to debug the incoming values

  // Draw the three sections from top to bottom
  image(eyes[eyeIndex], 0, 0);
  image(noses[noseIndex], 0, height / 2);
  image(mouths[mouthIndex], 0, height * 656 / 1024);

  // Card swiped: give each section its own random image,
  // constrained to the valid index range
  if (justPressed(CARD)) {
    eyeIndex = constrain(int(random(eyes.length)), 0, maxImages - 1);
    noseIndex = constrain(int(random(noses.length)), 0, maxImages - 1);
    mouthIndex = constrain(int(random(mouths.length)), 0, maxImages - 1);
  }

  // Key inserted into the house: play a random family/home clip
  if (justPressed(HOUSE)) {
    int soundIndex = int(random(familyClips.size()));
    familyClips.get(soundIndex).play();
  }

  // Radio button pushed: play a random interview clip
  if (justPressed(RADIO)) {
    int soundIndex = int(random(funClips.size()));
    funClips.get(soundIndex).play();
  }

  // Remember this frame's values so the next frame can detect new presses
  for (int i = 0; i < NUM_OF_VALUES; i++) {
    prevSensorValues[i] = sensorValues[i];
  }
}

// True only on the frame a switch changes from 0 (open) to 1 (pressed).
// A plain != comparison would also fire when the switch is released.
boolean justPressed(int index) {
  return sensorValues[index] == 1 && prevSensorValues[index] == 0;
}

void setupSerial() {
  printArray(Serial.list());
  myPort = new Serial(this, Serial.list()[PORT_INDEX], 9600);
  // WARNING!
  // If PORT_INDEX is wrong, you will get an error here.
  // Set PORT_INDEX to 0 and run the sketch again,
  // then check the printed list of ports,
  // find the port "/dev/cu.usbmodem----" or "/dev/tty.usbmodem----",
  // and set PORT_INDEX to that port's index.

  myPort.clear();
  // Throw out the first reading,
  // in case we started reading in the middle of a string from the sender.
  myString = myPort.readStringUntil(10);  // 10 = '\n' Linefeed in ASCII
  myString = null;

  sensorValues = new int[NUM_OF_VALUES];
}

void updateSerial() {
  while (myPort.available() > 0) {
    myString = myPort.readStringUntil(10);  // 10 = '\n' Linefeed in ASCII
    if (myString != null) {
      String[] serialInArray = split(trim(myString), ",");
      // Only accept complete lines with one value per sensor
      if (serialInArray.length == NUM_OF_VALUES) {
        for (int i = 0; i < serialInArray.length; i++) {
          sensorValues[i] = int(serialInArray[i]);
        }
      }
    }
  }
}
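Comparing each sensor value with its previous value using `!=` fires on both the press and the release, so one swipe or one button push counts as two events. Checking for a 0-to-1 transition fires exactly once per press. The plain-Java sketch below shows that rising-edge check for several switches at once; `EdgeDetector` is a hypothetical name, and it assumes every update passes the same number of values.

```java
// Hypothetical rising-edge detector for several on/off switches.
class EdgeDetector {
    private final int[] previous; // last values seen, one per switch

    EdgeDetector(int channels) {
        previous = new int[channels]; // all switches start open (0)
    }

    // Returns true for each switch that was just pressed (0 to 1),
    // then stores the current values for the next call.
    boolean[] update(int[] current) {
        if (current.length != previous.length) {
            throw new IllegalArgumentException("expected " + previous.length + " values");
        }
        boolean[] pressed = new boolean[current.length];
        for (int i = 0; i < current.length; i++) {
            pressed[i] = previous[i] == 0 && current[i] == 1;
            previous[i] = current[i];
        }
        return pressed;
    }
}
```

Feeding it `{0,0,0}`, `{1,0,0}`, `{1,0,0}`, `{0,0,0}` reports a press on switch 0 only in the second update: holding the switch and releasing it produce no further events.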